What Is This ‘Broadcast Quality’ Thing Anyway? Part II

In Part I, we looked at how the definition of broadcast quality has evolved over the decades and the FCC’s attempts to enforce standards. In Part II, we look at several more past examples and examine how broadcast quality is determined in the digital age.

ALEXANDRIA, VA.—What really defines “broadcast quality” and has such a thing ever really existed?

Even though television gear has become a lot more reliable over the years, on-air slippages in performance do occur. If this happens during peak viewing hours and there's no backup equipment, the only choices are to shut down and make immediate repairs, or limp along with a somewhat less than “broadcast quality” signal until signoff (or, in the case of today's 24-hour operations, until a time when viewership is low) and then fix the problem. Such a decision has never been difficult, as the “show must go on” philosophy almost always trumps concerns about degraded signal quality.

Let me give you an example involving a 1950s low-budget small-market TV station in a southwestern state. Its former owner (the person who related the story to me) described it as a real “shoestring” operation. One evening the station got a call from a viewer who was very excited about a particular program being transmitted. It seems that the individual had won a color TV (a big-ticket item then) in a raffle, and the network show the station was carrying was the first time the set had displayed color (1950s network offerings were mostly monochrome). The station’s then-owner and chief engineer was also excited, admitting to me that he didn’t know that his rather antique DuMont transmitter could even pass color. When I asked if he couldn’t see off-air burst and chroma information on a waveform monitor, he replied, “At that time we didn’t even own one.”

Strike another blow for maintaining “broadcast quality.”

There’s also the story from a couple of decades earlier involving a network-affiliated radio station in a medium-sized market. The station was getting complaints about a “squeal” in the audio on network programs. However, as the audio sounded fine in the control room, station engineering chalked it up to problems with listeners’ sets or maybe some sort of interference in their neighborhoods. Finally, the number of complaints increased to the point that it was apparent something just might be wrong with the station’s signal. The problem was eventually traced to an oscillating amplifier used by the local phone company to deliver the network audio. It seems that the speaker used for control room monitoring had such poor high-frequency response that operators couldn’t hear the “squeal.” “Broadcast quality”? I have to wonder.

‘RELAXED’ QC MONITORING

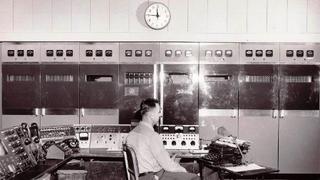

Fast forward to the 21st century. I visit quite a few broadcast facilities, and during the past few years I have observed that more and more master control rooms are now monitoring everything on consumer-grade large-screen displays driven by multiviewers. In other areas, such as graphics production, I also no longer see the expensive broadcast-grade “critical” CRT monitors, but rather garden-variety computer displays.

The same sort of “relaxed” QC monitoring is also happening in camera control rooms. Yes, there are plenty of “critical” monitors available (Dolby Labs, Sony, Panasonic, JVC, Plura, and a number of others all come to mind), but I don’t see nearly as many “broadcast quality” monitors deployed where color and overall image quality assessment is taking place as I did when the CRT was king. And speaking of monitoring, how about the multichannel “surround” sound that we can transmit now that we’ve gone to digital—5.1 and maybe even 7.1-channel audio? Come on—show of hands—how many of you have created a 5.1 or 7.1 listening environment in your master control room so that operators can ascertain that the correct audio is where it ought to be? (I thought so.)

What I see in my visits is most often a control room display indicating presence and relative amplitudes of various channels. Also, the monitor speakers, however many or few, are usually potted down; and in some cases there’s no one there to listen to or look at a surround display. I even visited one new major market facility that was constructed without a master control at all. Everything was running on automatic pilot and MC operations (such as they were—transmitter remote control point/logging and news microwave monitors) were contained in an alcove at the end of the newsroom.

CONTENT TRUMPS QUALITY

So, back to my original rhetorical question: Was there ever really such a thing as “broadcast quality”?

Yes, when I was moving through the professional TV ranks in the ’60s, ’70s, ’80s, ’90s, and even a few years into the new millennium, we certainly had plenty of paper with ink on it describing how things ought to be done. And we tried really hard to keep reasonably good-looking (and good-sounding) signals on the air. However, if you worked during this period you might recall that there were also three stock “excuses” when things didn’t go exactly by the book: (1) It looks good leaving here, (2) We can live with it, and (3) The trouble must be “west of Denver” or “east of Denver,” depending on where you were and whether New York or L.A. was feeding the network. (For those of you not old enough to remember, Denver was the location of a major AT&T Long Lines switching center.)

We tried to keep a “broadcast quality” signal on the air; honest to Pete we did, but sometimes things slipped a little bit (or even a lot). However, content always trumps quality and as long as we had the ball game or soap opera or whatever up at its scheduled time, the phone didn’t ring much, even if the quality slipped a little.

I recently asked the chief engineer of a modern-day TV station about consumer complaints and he responded without hesitation that the few received dealt almost entirely with aspect ratio and loudness issues, and in working with the complainants, in virtually every case the problems were traced to consumers having monkeyed around with their remote controls. (“It looks good leaving here!”) He added that complaints about color, resolution (even when SD was intercut with HD), and other video attributes were almost non-existent.

So, back to my questions: (1) what is “broadcast quality” and (2) did it ever exist?

Based on my nearly 50 years of involvement in broadcasting, I would have to answer that (1) no one is really 100 percent sure and (2) no, I don’t think it ever really did. However, we always tried to put out a quality signal and I hope that broadcasters always will!

James O'Neal is a retired broadcast engineer who worked in that field for some 37 years before joining Radio World’s sister publication, TV Technology, where he served as technology editor for nearly a decade. He is a regular contributor to both publications, as well as a number of others. He can be reached via TV Technology.