Closed Captioning Advances Slated for NAB 2016

OTTAWA, ONTARIO—As of Jan. 1, 2016, the FCC now requires broadcasters to close caption “straight lift clips”—video clips pulled from TV shows originally broadcast with captions—that they post online. This change comes under the Twenty-First Century Communications and Video Accessibility Act of 2010.

The good news: This requirement, plus other directives by the FCC to beef up broadcast closed captioning, is being addressed by many closed captioning software/equipment vendors today. The latest iterations of their solutions, which are also being enhanced to deal with the newest video streaming formats, will be unveiled at the 2016 NAB Show in April. Here’s an advance look at what they’re bringing to Vegas.

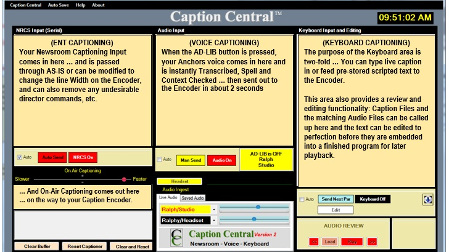

COMPROMPTER EXPANDS ‘CAPTION CENTRAL’ TO SUPPORT MULTIPLE VOICES LIVE

Known for its pioneering work in developing computer-driven teleprompting, Comprompter has since moved into the closed captioning arena with its Caption Central software. Version 2.0 of the company’s Caption Central software, which was introduced at the 2015 NAB Show, uses Nuance’s voice recognition software engine to reduce the need for live human captioning. CC2 achieves this by having each on-air announcer do an off-air seven-minute “voice training session” with the CC2 software engine, allowing the system to recognize and correlate the announcer’s distinct speech patterns.

“When announcers do their training sessions conscientiously, we can achieve 98 percent accuracy translating voice into text,” said Ralph King, Comprompter’s CEO. “This includes having the station staff add their local vocabulary of ‘People, Places and Things’ into the Nuance dictionary, so that we know those names and how to spell them.”

Comprompter is introducing a multi-person version of CC2 at this year’s show. “Our new system will use a powerful voice server to convert voice-to-text and will serve up to eight people at a time,” King said. “The ‘Caption Central Multi’ was designed to handle segments and shows with multiple talents that are basically unscripted with people just speaking off the cuff about cooking, politics or swapping jokes, or whatever.”

EEG BRINGS iCAP TO LIVE PRODUCTION IP VIDEO

iCap, from closed captioning provider EEG, marries real-time transcribers (both human and machine-based) to broadcast audio streams, allowing broadcasters to get the kind of expert real-time live captioning they require without the use of phone lines. The company provides broadcasters with the necessary onsite hardware (e.g., the EEG HD492 iCap encoder) or a virtual implementation, and gives transcribers iCap cloud access to the broadcast stream and a return path for caption data.

At the NAB Show, EEG will spotlight the first iCap-encoder for encoding closed captions to live production IP video, according to Bill McLaughlin, vice president of product development for the Farmingdale, N.Y.-based company. The new iteration will support “both compressed MPEG transport streams and uncompressed standards such as ASPEN and VSF TR-03, which both use the SMPTE 2038 standard for ancillary tracks,” he said.

EEG launched iCap in 2007, and the product has since evolved to keep up with the latest workflow requirements of broadcasters. “The captioning piece must be flexible enough to move seamlessly across platforms as the industry changes,” said McLaughlin. “As a cloud service, iCap is always adapting to new standards so it can be delivered how it is needed. Having this kind of flexibility in any service today is key.” (EEG’s iCap technology was recently integrated in Imagine Communications’ Versio integrated playout platform.)

ENCO SYSTEMS’ VOICE RECOGNITION ENGINE REQUIRES NO TRAINING

Most voice recognition systems for closed captioning require some form of training before being deployed. Everyone whose voice is to be automatically converted into text must first plug in a computer microphone and read a range of words into the software, so that it can recognize their speech patterns later on air.

ENCO Systems’ enCaption3 voice recognition system, which will be showcased at the NAB Show, does not require any form of pre-training. “Just set up the enCaption3 system hardware and software, and the system is ready to convert any voice it hears into closed captioned text,” said Julia Maerz, ENCO Systems’ head of international sales. “This is because enCaption3 is a fully automated, independent speech recognition system that has the power to convert any voice to text.”

Maerz added that enCaption3 can connect to newsroom systems to get proper spellings of locale information for street names. “We output directly to live closed captioning encoders or output to captioning software like Adobe Premiere,” she said.

NEXIDIA ENHANCES CLOSED CAPTION QUALITY

In a world where broadcasters have to produce closed captioning for both OTA and OTT playout, and do more to keep up with the FCC’s tightening closed caption requirements, Nexidia QC is a headache reliever. The reason? The company’s Illuminate software, which comprises QC, Align, and Comply modules, analyzes video files to check the accuracy of existing closed captioning by comparing it to the file’s audio tracks.

“Nexidia Illuminate can verify which language is being spoken on the audio track independent of the file’s metadata, and check the presence of video description as well,” said Tim Murphy, Nexidia’s senior director of product management. “An included REST API and integrations with the leading workflow automation and broadcast compliance solutions enables easy integration into a client’s environment.”

Nexidia will show various Nexidia Illuminate demonstration cases at the NAB Show to illustrate to delegates how well the system works in practice. In concert with Volicon (see below), Nexidia will also unveil an integration of Volicon Share and Nexidia Align to align captions for reuse in broadcast, IP and/or OTT distribution.

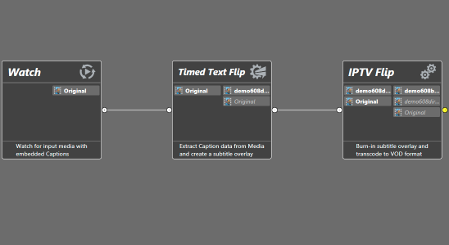

TELESTREAM ADDS CC/SUBTITLING CAPABILITY TO VANTAGE

For the NAB Show, Telestream is adding the closed captioning capabilities of its MacCaption (Mac)/CaptionMaker (Windows) standalone platforms to the company’s Vantage Media Processing Platform. “Branded as ‘Timed Text Flip,’ this new module will bring integrated closed captioning technology into the Vantage workflow," said Giovanni Galvez, Telestream’s captioning product manager, adding that the feature will also be added to Vantage Cloud.

As well as offering MacCaption/CaptionMaker’s existing suite of closed captioning tools, Timed Text Flip will provide some enhanced capabilities. For instance, "one of the things we’ve added is a way to easily convert timed text data to burn-in subtitles; those that are always seen with the video," said Galvez. This is aimed at broadcasters and program producers delivering content to non-English language markets, who need to "burn in subtitles" for the market’s local language users.

"Broadcasters concerned about meeting the FCC’s requirement to close caption ‘straight lift clips’ can relax," Galvez added. "We have products that make the edits and keep the captions in an automated way," he said; namely, MacCaption/CaptionMaker, Timed Text Flip, and Post Producer.
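To make the "straight lift clip" problem concrete, here is a minimal Python sketch of what keeping captions through an edit involves: cues that overlap the clip are kept, trimmed to the clip boundaries, and retimed so the clip starts at zero. It does not represent how any Telestream product works. The `Cue` class, the `captions_for_clip` function and the sample cues are all hypothetical names and data invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One caption cue; times are seconds from the start of the full program."""
    start: float
    end: float
    text: str


def captions_for_clip(cues, clip_in, clip_out):
    """Return the cues overlapping [clip_in, clip_out), retimed to the clip."""
    clipped = []
    for cue in cues:
        if cue.end <= clip_in or cue.start >= clip_out:
            continue  # cue lies entirely outside the clip
        clipped.append(Cue(
            start=max(cue.start, clip_in) - clip_in,  # trim, then shift to clip time
            end=min(cue.end, clip_out) - clip_in,
            text=cue.text,
        ))
    return clipped


# Hypothetical captions from a broadcast program.
program = [
    Cue(0.0, 4.0, "Good evening."),
    Cue(58.0, 62.0, "Our top story tonight."),
    Cue(62.0, 66.0, "Captioning rules are changing."),
    Cue(120.0, 124.0, "Now to the weather."),
]

# A ten-second clip lifted from the one-minute mark of the program.
clip = captions_for_clip(program, clip_in=60.0, clip_out=70.0)
for cue in clip:
    print(f"{cue.start:5.1f} -> {cue.end:5.1f}  {cue.text}")
```

A production tool would also have to handle caption formats, frame-accurate timecode and roll-up display modes, all of which this sketch ignores.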

VOLICON TEAMS WITH NEXIDIA TO MONITOR CAPTIONING QUALITY

The FCC’s ongoing changes to its closed captioning policy indicate the commission’s intention not just to extend closed captioning to the Web, but also to enhance and improve the quality of broadcaster-driven closed captioning on all media, and to demand such performance from broadcasters on a 24/7 basis.

With this in mind, Volicon has teamed with Nexidia to develop an automated closed captioning monitoring solution that constantly compares a video’s closed captioning text with its audio track. The product, which will be unveiled at the NAB Show, achieves this degree of tech magic by combining “phoneme-level” speech technology with content monitoring and logging. (A phoneme is “the smallest unit of speech that can be used to make one word different from another word,” according to Merriam-Webster.) This analysis is performed when the content is ingested, with the broadcaster/playback provider being alerted to specific discrepancies and where in the media/closed captioning file they are located.

“If the captioning and the audio track don’t match, you get an alarm and the discrepancy is logged,” said Andrew Sachs, vice president of product management for the Burlington, Mass.-based company. “You get this data before the content goes live, so that you can correct it and be compliant with the FCC’s rules.”
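The idea of flagging where captions and audio disagree can be illustrated with a much simpler, text-only Python sketch. It is not the Volicon/Nexidia method, which the article describes as working at the phoneme level on the audio itself. This version assumes a reference transcript of the audio is already available as text and compares it with the caption text word by word, using the standard library's `difflib`. The function names and sample sentences are hypothetical.

```python
import difflib
import re


def words(text):
    """Lower-case the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def caption_discrepancies(caption_text, transcript_text):
    """List the places where the caption words differ from the transcript words."""
    cap, ref = words(caption_text), words(transcript_text)
    matcher = difflib.SequenceMatcher(a=cap, b=ref, autojunk=False)
    issues = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue  # captions and transcript agree on this run of words
        issues.append({
            "kind": tag,                      # 'replace', 'delete' or 'insert'
            "caption_word_index": i1,         # where in the captions it occurs
            "caption": " ".join(cap[i1:i2]),  # what the captions say
            "audio": " ".join(ref[j1:j2]),    # what the transcript says
        })
    return issues


# Hypothetical caption text and reference transcript for the same segment.
captions = "The mayor of Otawa spoke today."
transcript = "The mayor of Ottawa spoke to reporters today."

issues = caption_discrepancies(captions, transcript)
for issue in issues:
    print(issue)
```

Each reported item carries the position of the mismatch in the caption text, loosely mirroring the article's point that the broadcaster is told what the discrepancy is and where it sits in the file.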

James Careless is an award-winning journalist who has written for TV Technology since the 1990s. He has covered HDTV from the days of the six competing HDTV formats that led to the 1993 Grand Alliance, and onwards through ATSC 3.0 and OTT. He also writes for Radio World, along with other publications in aerospace, defense, public safety, streaming media, plus the amusement park industry for something different.