‘Book ’Em, Danno’: A/V Fingerprinting

Audio and video are about to get fingerprinted. Despite much success and many valiant efforts to keep them aligned, often enough these miscreants head off at their own speed, drift apart and cause lip-sync problems.

Now it’s time to “book ’em, Danno,” as a character on a classic TV cop show once said. It’s time for audio and video to get fingerprinted, so that no matter where they roam or what path they take, “someone” can always be watching and, if needed, rein them back into alignment.

Over the years, a number of different solutions have arisen to deal with A/V sync issues. Some are out-of-service processes that check signal paths for A/V sync before any content is sent down the line. As the name implies, these can’t be used with live content.

Then there are in-service processes that measure lip sync using actual content, but the various techniques and manufacturers’ implementations have not been compatible with one another.

That’s why SMPTE has stepped in. The SMPTE Drafting Group (24TB-01 AHG Lip-sync) is close to completing its work on some of the standards needed for A/V fingerprinting, and is continuing its work on others.

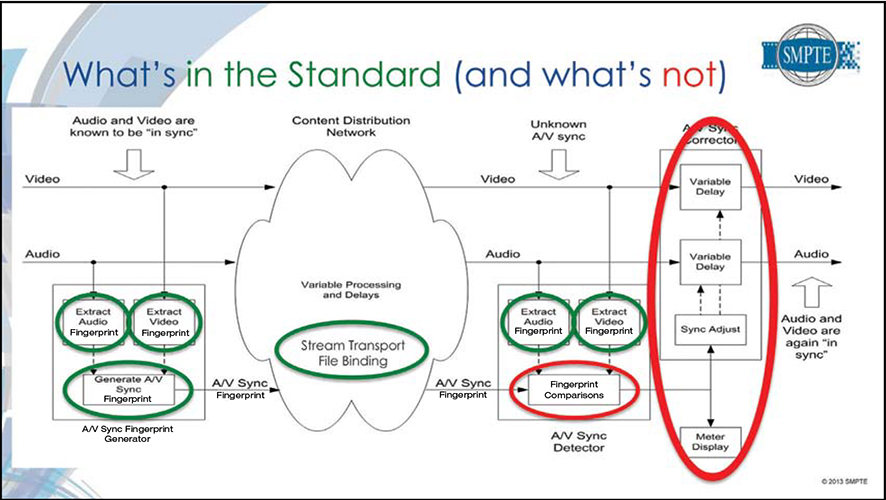

Fig. 1: Simplified block diagram for audio and video fingerprinting and lip sync correction, showing the extent of the SMPTE standardization work. Courtesy of SMPTE.

MEASUREMENT OBJECTIVES

According to Paul Briscoe, who chairs this SMPTE Drafting Group and has also presented a webinar on the subject, the committee’s goal was to establish a standardized in-service measurement (without precluding out-of-service use) that would be interoperable among different manufacturers; would not modify any content; could be used on-air, within live content, at any time; and would be medium-agnostic, indifferent to how the media is moved around, in plant or out, and through just about any kind of processing. It also needed a low data rate, so that no DSPs would be required, and low enough complexity to keep implementation costs reasonable.

The SMPTE group chose fingerprinting technology to accomplish these goals.

“The fingerprint is a summary description of a frame of video and the audio associated therewith,” Briscoe said. “It is a very compact bit of metadata that is calculated directly from the audio and video.”

To derive the fingerprint, certain simple characteristics are measured as they change over time: from frame to frame (or field to field) of video, and from sample to sample of digital audio.

“For each frame, we generate one set of fingerprints, one each from audio and video,” Briscoe said. “These are bundled together for each frame and transmitted along with, but not within, the video and audio. In actual fact, the fingerprints will come after the frame for which they have been calculated, but this isn’t a problem as we are not interested in the relationship between a given frame and its fingerprint, but the relationship between video and audio that it represents. The correlation function at the receiving end will figure it out.”

The correlation function that Briscoe referred to is part of an application (not part of the SMPTE standard) that, at any point along a signal path, can take a look at the incoming audio and video, create local fingerprints and correlate them with the original reference fingerprints to determine if there is any lip sync error. We’ll go into this more later, but for now, let’s return to the metadata.
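Because the correlation application sits outside the SMPTE standard and its algorithm isn’t described here, the Python sketch below only illustrates the general idea under stated assumptions. It slides each locally generated fingerprint sequence against its reference, picks the shift with the smallest mean absolute difference, and subtracts the video delay from the audio delay. The names `best_lag` and `lip_sync_error_ms`, the cost measure and the `min_overlap` cutoff are all hypothetical.

```python
import random


def best_lag(reference, local, max_lag, min_overlap=8):
    """Return the shift (in fingerprint units) that best aligns `local` with `reference`.

    A positive result means the local stream is delayed relative to the reference.
    The cost is the mean absolute difference over the overlapping samples, a simple
    stand-in for whatever correlation a real analyzer uses.
    """
    best, best_cost = None, float("inf")
    for lag in range(-max_lag, max_lag + 1):
        # Pair reference[i] with local[i + lag] wherever both exist.
        pairs = [(reference[i], local[i + lag])
                 for i in range(len(reference))
                 if 0 <= i + lag < len(local)]
        if len(pairs) < min_overlap:
            continue  # too little overlap to trust this shift
        cost = sum(abs(r - l) for r, l in pairs) / len(pairs)
        if cost < best_cost:
            best, best_cost = lag, cost
    return best


def lip_sync_error_ms(audio_lag, audio_unit_ms, video_lag, video_unit_ms):
    """Audio delay minus video delay, in ms; positive means audio lags the video."""
    return audio_lag * audio_unit_ms - video_lag * video_unit_ms


if __name__ == "__main__":
    rng = random.Random(0)
    ref_video = [rng.randrange(241) for _ in range(120)]  # made-up video fingerprint bytes
    ref_audio = [rng.randrange(2) for _ in range(1800)]   # made-up audio fingerprint bits
    local_video = [0] * 3 + ref_video                     # video now arrives 3 frames late
    local_audio = ref_audio                               # audio path unchanged
    v = best_lag(ref_video, local_video, max_lag=10)
    a = best_lag(ref_audio, local_audio, max_lag=60)
    # Negative result: audio is ahead of the video.
    print(lip_sync_error_ms(a, 1000 / 900, v, 1000 / 29.97))
```

If the reference fingerprints arrive a fixed number of frames late, both measured shifts change by the same amount of time and their difference is unaffected, consistent with Briscoe’s point that only the audio/video relationship matters.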

“For every video frame, we produce one video fingerprint byte, and a variable number (format dependent) of audio fingerprint bytes,” Briscoe said. “These are bundled together into a fingerprint container, and that’s the metadata that is sent along with the audio and video over SDI, MPEG or IP. The same containers will be used in the file-based system.”
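The article doesn’t give the container’s layout, so this Python sketch uses an invented byte layout purely to show the bundling idea: one video byte per frame plus a variable number of audio fingerprint bytes for each fingerprinted audio signal (up to 32). The class `FingerprintContainer` and its serialization are hypothetical and are not the SMPTE transport format.

```python
from dataclasses import dataclass, field


@dataclass
class FingerprintContainer:
    """Hypothetical per-frame bundle of fingerprints (illustrative layout only)."""
    video_fp: int                                          # one byte per video frame
    audio_fps: list[bytes] = field(default_factory=list)  # one entry per fingerprinted audio signal

    def pack(self) -> bytes:
        """Serialize as: video byte, audio count, then (length, bytes) per audio fingerprint."""
        if not 0 <= self.video_fp <= 255:
            raise ValueError("video fingerprint must fit in one byte")
        if len(self.audio_fps) > 32:
            raise ValueError("at most 32 audio fingerprints per frame")
        out = bytearray([self.video_fp, len(self.audio_fps)])
        for fp in self.audio_fps:
            if len(fp) > 255:
                raise ValueError("audio fingerprint too long for a one-byte length field")
            out.append(len(fp))
            out += fp
        return bytes(out)

    @classmethod
    def unpack(cls, data: bytes) -> "FingerprintContainer":
        """Inverse of pack()."""
        video_fp, count = data[0], data[1]
        pos, audio = 2, []
        for _ in range(count):
            length = data[pos]
            audio.append(bytes(data[pos + 1:pos + 1 + length]))
            pos += 1 + length
        return cls(video_fp, audio)
```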

DERIVING FINGERPRINTS

Let’s see how each of the fingerprints is derived.

Multichannel audio is first downmixed to mono, although individual channels, including those carrying different languages, can be fingerprinted as well. Up to 32 audio fingerprints can be associated with the corresponding video. According to Briscoe, operating practice will determine which audio gets fingerprinted.
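A minimal Python sketch of the downmix step, assuming integer PCM samples grouped one tuple per sample period. The article doesn’t give the downmix coefficients, so the equal-weight average is an assumption; passing explicit `weights` shows how a single channel (such as one language) could be selected instead. `downmix_to_mono` is a hypothetical name.

```python
def downmix_to_mono(samples, weights=None):
    """Mix multichannel PCM to one channel.

    samples: sequence of per-sample tuples, one int per channel.
    weights: optional per-channel gains. The default equal-weight average is an
    assumption, not the SMPTE downmix; weights such as [1, 0] pick one channel.
    """
    mono = []
    for frame in samples:
        gains = weights if weights is not None else [1.0 / len(frame)] * len(frame)
        mono.append(round(sum(x * g for x, g in zip(frame, gains))))
    return mono
```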

The audio fingerprinting process works on the digital audio samples associated with a frame or field of video, depending on the format.

“We use 16-bit samples at 48 kHz sample rate,” Briscoe said. Audio at any other sample rate is converted to 48 kHz, and any digital audio word longer than 16 bits is truncated to 16 bits.
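Truncating a wider word to 16 bits amounts to discarding its least significant bits. The sketch below assumes signed integer PCM samples with a known word length; `truncate_to_16_bits` is a hypothetical helper, and the conversion of other sample rates to 48 kHz is not shown.

```python
def truncate_to_16_bits(sample: int, bit_depth: int) -> int:
    """Keep the 16 most significant bits of a signed PCM word.

    Low-order bits are simply dropped (no rounding or dither). Python's >> on a
    negative int is an arithmetic shift, so the sign is preserved.
    """
    if bit_depth < 16:
        raise ValueError("only words of 16 bits or more are handled here")
    return sample >> (bit_depth - 16)
```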

The samples are fed into two processing blocks—a mean detector and an envelope detector. “The mean detector is a long time-constant process, which outputs a value corresponding to the long-term average of the audio level,” Briscoe said. “The envelope detector is a short time-constant process, which outputs a value corresponding to the [near] instantaneous value of the audio level.”

For each audio sample, the mean and envelope values are compared and a bit is produced: a “1” if the mean is greater than the envelope, and a “0” otherwise. The ones and zeros are accumulated for the entire duration of the video frame.
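The article doesn’t specify how the two detectors are built. As an assumption, this Python sketch models both as one-pole smoothers of the rectified audio, with a long time constant for the mean and a short one for the envelope, and emits the comparison bit described above. The class name and the 0.5 s and 1 ms time constants are placeholders, not SMPTE values.

```python
import math


class LevelComparator:
    """Hypothetical mean/envelope detectors that emit one comparison bit per sample.

    Both detectors are one-pole smoothers of |x|; only their time constants differ.
    """

    def __init__(self, fs=48_000, mean_tau_s=0.5, env_tau_s=0.001):
        # Per-sample smoothing coefficients derived from the time constants.
        self.a_mean = 1.0 - math.exp(-1.0 / (mean_tau_s * fs))
        self.a_env = 1.0 - math.exp(-1.0 / (env_tau_s * fs))
        self.mean = 0.0  # long-term average level
        self.env = 0.0   # near-instantaneous level

    def process(self, samples):
        """Return one bit per sample: 1 if mean > envelope, else 0.

        Detector state carries over between calls, so frames can be fed in turn.
        """
        bits = []
        for x in samples:
            level = abs(x)
            self.mean += self.a_mean * (level - self.mean)
            self.env += self.a_env * (level - self.env)
            bits.append(1 if self.mean > self.env else 0)
        return bits
```

With these settings, 10 ms of a constant loud level followed by silence yields zeros during the loud part (the fast envelope stays above the slow mean) and, roughly 4 ms into the silence, ones (the envelope drops below the still-elevated mean).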

“We tally up all of the ones, and then decimate them to a smaller number using an algorithm that considers the video frame rate, with the goal being to establish approximately 1 millisecond of accuracy,” Briscoe said.

The decimator reduces the data rate from 48,000 bits per second (one comparison bit per audio sample) to around 900 bits per second. This is the audio fingerprint. The reduced data rate makes it easier to transport and quicker to correlate downstream.
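The decimation algorithm is described only by its inputs and its goal, so the Python sketch below substitutes a simple majority vote: each frame’s comparison bits are split into about 900 / fps equal groups, and each group yields one output bit. With 48,000 bits per second in and roughly 900 out, each output bit summarizes about 53 samples, or about 1.1 ms, in line with the 1 ms accuracy target. `decimate_bits` and the majority rule are assumptions.

```python
def decimate_bits(bits, fps, target_rate=900):
    """Reduce one frame's comparison bits to about target_rate bits per second.

    Hypothetical majority vote over near-equal groups; not the SMPTE algorithm.
    """
    n_out = max(1, round(target_rate / fps))  # e.g. 30 bits per frame at 29.97 fps
    out = []
    for k in range(n_out):
        # Integer boundaries spread any remainder samples across the groups.
        start = k * len(bits) // n_out
        end = (k + 1) * len(bits) // n_out
        group = bits[start:end]
        out.append(1 if 2 * sum(group) > len(group) else 0)
    return out
```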

On the video side, “we look at gross changes in the picture from frame to frame [if progressive, or field to field, for interlace],” Briscoe said. “The fingerprint is generated by [simple horizontal] downsampling the video to a common low-resolution format, which is used for all formats of picture.”

The picture is scaled to a common SD resolution; then the luminance values at 960 specific points in a central window of the image are sampled. These 960 points are compared with the same 960 points in the prior frame to identify any that have changed by more than 32.

“A count of those is kept, and the result [zero to 960] is divided by four to render it to a single byte for transport,” Briscoe said. “This is the video fingerprint.”
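The counting step is described closely enough to sketch directly: count the sampled points whose luma changed by more than 32 since the prior frame and divide by four, so the 0 to 960 count becomes a 0 to 240 byte. Where the points sit isn’t given, so `sample_points` assumes a hypothetical 40 × 24 grid (960 points) in the central half of a picture already scaled to SD, for example 720 × 480; both function names are illustrative.

```python
def sample_points(luma, cols=40, rows=24, window=0.5):
    """Sample cols*rows (960 by default) luma values on a grid in a central window.

    luma: 2-D list of luma values for a picture already scaled to SD.
    The grid shape and window size are hypothetical placeholders.
    """
    height, width = len(luma), len(luma[0])
    top, left = int(height * (1 - window) / 2), int(width * (1 - window) / 2)
    win_h, win_w = int(height * window), int(width * window)
    return [luma[top + (r * win_h) // rows][left + (c * win_w) // cols]
            for r in range(rows) for c in range(cols)]


def video_fingerprint(prev_points, curr_points, threshold=32):
    """Count points whose luma changed by more than `threshold`, then divide by 4."""
    if len(prev_points) != len(curr_points):
        raise ValueError("point lists must be the same length")
    changed = sum(1 for p, c in zip(prev_points, curr_points) if abs(c - p) > threshold)
    return changed // 4  # 960 points -> 0..240, fits in one byte
```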

Once we have the fingerprint metadata, how does it travel with the audio and video and not get lost along the way? And how is it used to correct lip sync errors? Tune in next time.

Mary C. Gruszka is a systems design engineer, project manager, consultant and writer based in the New York metro area. She can be reached via TV Technology.