Overcompensating AI

GenAI technologies can misfire in many ways, so human judgment still matters

Artificial intelligence is rapidly becoming a core part of professional decision-making and our personal lives.

Presently, one of the greatest concerns facing AI solutions is what is termed overcompensation. “Overcompensating AI” means that when inappropriate prompts are sent to the AI engine, the system may produce exaggerated responses or emphasize the wrong dimensions of the problem, which generates an inappropriate solution. Issues like overcompensation highlight “the challenges of building and deploying AI systems that are both effective and responsible in direction,” Google says.

This article is a follow-up to the topics discussed in my April column, “Uncorking AI.” Its purpose is to alert potential users that “false” outputs can be created from excessively biased or wrong data models that could have been generated before the current user started their prompts or sessions. AI learns by succession: It is trained on previous data sets composed of varying inputs and the solutions it generates.

In addition to the examples herein, we’ll also look at practical solutions commercially available now and used today by many businesses, including the media.

Users of any AI should expect uncertainty and challenges, and thoughtful user criticism of the output generated by any GenAI product is essential, regardless of the application.

Every provider of AI will strive to be “perfect” in its answers to prompts, inputs and inquiries of its systems. But this drive toward perfection might result in what is referred to as “overfitting,” that is, the solution reaching a point where the model it creates becomes excessively specialized.

Drive for Perfection and Automation Bias

Overspecialization of this kind may contribute to what the industry sometimes calls “hallucination.” In such cases, the details of the model may be camouflaged (i.e., hidden) from the user, and the model may later be found to contain training data that, in turn, causes it to perform poorly on new or unforeseen inputs (prompts).

When decisions rely too much on AI, the resulting “overreliance” becomes a safety factor, one that can stem from causes like “automation bias.” Studies have shown that when users favor AI-generated information or lack a thorough understanding of the system’s limitations, they may find the AI suggestions sufficient or satisfactory and tend to set aside their own judgment, even in high-stakes situations.

Human Evaluation vs. AI Observations

AI is great at objective assessments. It can easily filter and sort information based on metrics, which helps streamline specific tasks. However, when subjective judgment is required, human intuition is still superior.

In trying to reach “perfection,” the models and the outputs produced from those models can lead to “overfitting.” In other words, when a model becomes overly specialized and dependent solely on its training data, without a broad enough set of data points, it struggles to react appropriately to new or unexpected inputs from the user.
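The effect can be seen in a deliberately extreme toy example. The sketch below is an illustration only, not any vendor's method: the data, the `memorizer` model and the `fit_line` helper are all hypothetical. One model simply memorizes its training data, while the other fits a simple straight line. The memorizer looks perfect on the data it was trained on but does worse on inputs it has never seen.

```python
# Hypothetical toy data: the true relationship is y = 2x, and the
# training labels carry alternating +1 / -1 noise.
train_x = [0, 1, 2, 3, 4, 5, 6, 7]
train_y = [1, 1, 5, 5, 9, 9, 13, 13]

# New inputs neither model saw during training, with noise-free targets.
test_x = [0.4, 1.4, 2.4, 3.4, 4.4, 5.4, 6.4]
test_y = [2 * x for x in test_x]


def memorizer(x):
    """Overfit model: return the label of the nearest training point."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def fit_line(xs, ys):
    """Ordinary least-squares straight-line fit; returns a predictor."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def mse(model, xs, ys):
    """Mean squared error of a model over a set of points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


line = fit_line(train_x, train_y)

for name, model in [("memorizer", memorizer), ("line", line)]:
    print(
        name,
        "| training error:", round(mse(model, train_x, train_y), 3),
        "| error on new inputs:", round(mse(model, test_x, test_y), 3),
    )
```

The memorizer's training error is zero because it reproduces the noise in its training data exactly; that same noise is what hurts it on new inputs. The simpler line tolerates some training error and generalizes better.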

When the system overcompensates or leans too heavily on its data sets or models, implementations further downstream can continually misguide the outputs it provides. Thus, any AI system requires a balanced approach that combines its capabilities with human oversight and critical thinking.

Augmentation Tool

Research supports the idea that AI works best as an augmentation tool. Human reasoning should routinely be incorporated into decision-making rather than simply replaced by AI. This process further “trains” the AI model while protecting decisions appropriately. By handing objective assessments to AI, organizations can free up the human capacity that less-intensive decisions typically consume through excessive effort.

Subjective judgment may sometimes be required, in which case, human intuition is still superior—at least until such time that the computer solutions can better mimic the human brain in function and in performance, something that is still likely a decade or more away.

When properly navigated, AI helps people to make informed decisions without depending on subject-matter expertise. A collaborative approach involves experts who can critically evaluate AI “suggestions” instead of just accepting them without further analysis as “true without question.”
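One way to picture this collaborative approach is a simple review gate. The following is a minimal sketch under stated assumptions: the `Suggestion` fields, the `route` function and the 0.9 threshold are hypothetical, and it assumes the AI tool reports some confidence score with each suggestion. Anything high-stakes or low-confidence goes to a human expert rather than being accepted as “true without question.”

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    text: str
    confidence: float  # model's self-reported score in [0, 1] (assumed available)
    high_stakes: bool  # flagged by the business, not by the model


# Hypothetical cutoff; a real deployment would tune this against audit results.
CONFIDENCE_THRESHOLD = 0.9


def route(s: Suggestion) -> str:
    """Return 'human_review' or 'auto_accept' for one AI suggestion."""
    if s.high_stakes or s.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"
    return "auto_accept"


suggestions = [
    Suggestion("Sort clips by duration", confidence=0.97, high_stakes=False),
    Suggestion("Reject vendor contract", confidence=0.97, high_stakes=True),
    Suggestion("Tag speaker as 'anchor'", confidence=0.62, high_stakes=False),
]

for s in suggestions:
    print(f"{s.text!r} -> {route(s)}")
```

Note that the high-stakes item is reviewed even though the model is confident. That choice is deliberate: automation bias is most costly exactly where confidence looks highest.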

AI tools are constantly being created by leading software providers. Without elaborating too far, some include Canva, Microsoft Copilot, Grammarly and OpenAI’s ChatGPT (released in November 2022). Such tools range from large language models (LLMs) and natural-language tools to software generation and translation tools across multiple programming languages.

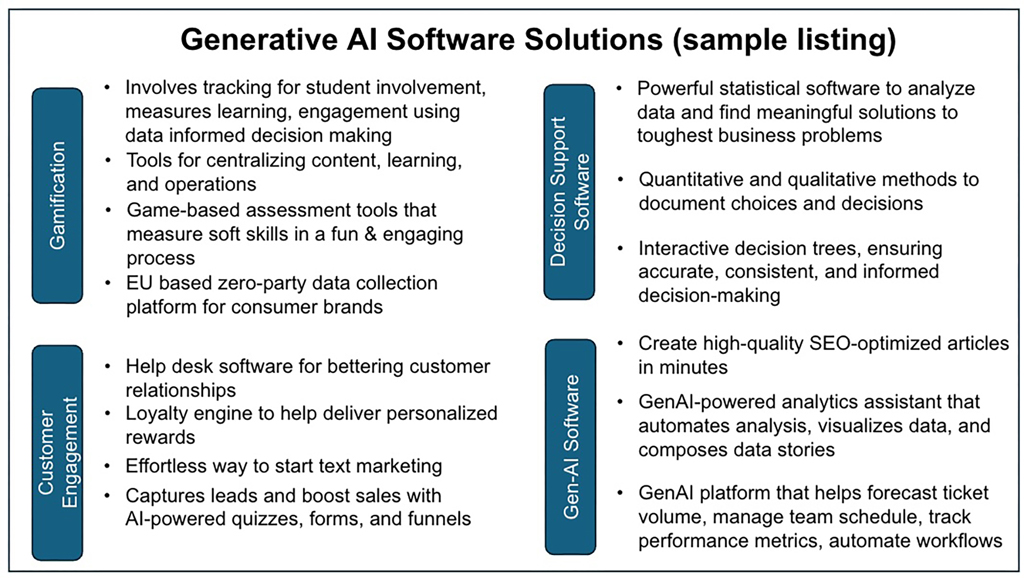

Software categories are quite broad; a small sampling of examples is in Fig. 1. When choosing AI tool sets, it’s critical to consider factors like the specific use case, the software’s accuracy and performance, its data privacy and security measures, and the available training and support.

According to leading engineering and consulting firms, as much as 80% of an IT department’s budget can be consumed addressing outdated systems. Modernization is no longer just about efficiency, according to a report by Cognizant. Large-scale AI adoption will amplify the ability to rapidly respond to change.

Improved reaction time to market change will allow the IT department to “innovate” instead of continuing to just “run” to stay up to date. To succeed in the AI age, we must, in turn, leverage technology and “overcome the tech debt that hampers innovation.”

Playbook for Growth and Innovation

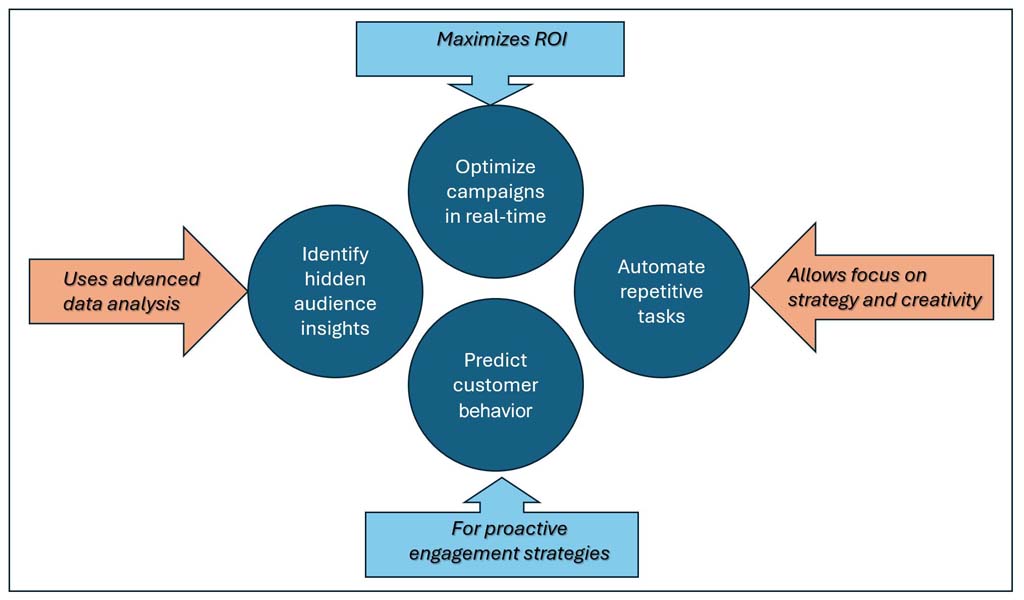

The world’s growth and marketing playbooks are currently being rewritten. Marketing is now using AI “to do” and not to just “think.” To reach such goals with AI, an understanding of AI Optimization (AIO) will become essential to ranking on search engines.

“Ranking” refers to the position of a webpage in the search engine results pages (SERPs) when a user enters a query. A higher ranking allows for increased visibility, more traffic to the web pages, and the potential for conversions, that is, more users taking desired actions such as making purchases or engaging a new or improved service. AI is extremely useful in helping a website developer improve results by supporting the best practices for search engine optimization.

In part, a “growth playbook” reflects a fundamental shift in how businesses will achieve and sustain growth in today’s dynamic environment. Businesses must now rethink and adapt their methodologies (Fig. 2). This goes well beyond what a few years ago was termed a “digital transformation”: tools created for growth, especially AI, are now routine and necessary to stay ahead of the curve.

Agentic AI

Businesses are now shifting to an agent-centric operations model that will, in turn, collapse their operational and data silos, while moving toward designing systems that think, act and scale with intelligence. AI has become essential to this rapidly changing business model.

Streamlining the operational model requires shifting from individual (siloed) functions and/or departments to a set of consolidated, semiautomated and autonomous operations that provide continuous operational improvements, reduce the time to a solution and cut costs, while simultaneously making customer satisfaction and acceptance a “norm” rather than an “occasional” priority.

Companies such as Amazon, FedEx and even Starbucks have all found customer satisfaction improvements by employing “AI agents” to perform tasks that previously required individual humans in siloed departments.

In the case of Starbucks, the company noted its “Deep Brew” program and the newly introduced “Green Dot Assist” are designed to optimize everything from inventory management and customer service to employee training and new product development. The company’s AI platform continually analyzes customer data, including purchase history, location, time of day and even weather patterns, to personalize recommendations, offers and rewards through the Starbucks app.

Media Literacy Couples AI Research

On the media side, mainstream organizations emphasize the importance of media literacy and verifying sources in this new era of informational warfare—especially where weaponized AI can now produce convincing fake content at low cost. Furthermore, Stanford University has developed research tools that utilize AI to analyze cable news coverage patterns, including detecting faces, identifying figures, estimating demographics and analyzing topic trends. These analysis methods help identify biases and trends in news reporting, including patterns of interruptions in on-air discussions.

Currently, AI is used to analyze thousands of dialogues on cable news programs to better understand the nature of interruptions in political discussions. An “interruption” occurs when a speaker is cut off by someone else who goes on to make their own point, often leaving the first speaker frustrated. The psychology of such interruptions is then cataloged, analyzed and used to train others (e.g., reporters or producers), with AI helping to inform, critique and prepare an interviewer to mitigate the negative impacts of such interruptions.
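To make the idea concrete, here is a rough sketch of how interruptions might be counted once a program has been transcribed with speaker labels and timestamps. It is not the method used by the Stanford tools, which is not described here; the `Turn` structure, the `count_interruptions` function, the sample data and the half-second overlap rule are all assumptions for illustration.

```python
from collections import Counter
from typing import NamedTuple


class Turn(NamedTuple):
    speaker: str
    start: float  # seconds from the start of the segment
    end: float


def count_interruptions(turns, min_overlap=0.5):
    """Count, per interrupting speaker, the turns that begin at least
    `min_overlap` seconds before the previous speaker has finished."""
    counts = Counter()
    ordered = sorted(turns, key=lambda t: t.start)
    for prev, cur in zip(ordered, ordered[1:]):
        overlap = prev.end - cur.start
        if cur.speaker != prev.speaker and overlap >= min_overlap:
            counts[cur.speaker] += 1
    return counts


# Hypothetical, hand-made segment of a panel discussion.
turns = [
    Turn("host", 0.0, 10.0),
    Turn("guest_a", 9.8, 20.0),   # 0.2 s overlap: treated as a normal handoff
    Turn("guest_b", 18.0, 25.0),  # 2.0 s overlap: counted as an interruption
    Turn("host", 25.5, 30.0),     # starts after guest_b has finished
]

print(count_interruptions(turns))
```

A timing rule like this only flags candidates. Deciding whether an overlap was hostile, supportive or simply crosstalk is the kind of subjective call that still benefits from a human reviewer.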

These are just some of the myriad value propositions of AI in the business world. Stay tuned to this column in future issues on how to better understand and use AI in your business or media application.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.