The Basics of Infrastructure as Code

Provisioning and managing datacenters through machine-readable definition files is the premise of what is known as infrastructure as code (IaC)

If you are a media creation entity, you want to take advantage of every opportunity to move efficiently through the stages of content creation. One such opportunity is developing a repeatable set of requirements, focused on specific workflow needs, so that operating in a “routine” mode is easier to achieve.

The ability to customize or replicate those operating modes is advantageous when running multiple sets of processes, whether simultaneously or independently. Cloud services or on-premises datacenters can provide effective conduits for such opportunities; however, reconfiguring the infrastructure whenever systemic changes occur can be time-consuming and complex, and can require specialized resources, even for routine processes and simple updates.

Provisioning and managing datacenters through machine-readable definition files is the premise of what is known as infrastructure as code (IaC). Rather than relying on direct physical hardware configuration or on interactive configuration tools, IaC uses compute-centric, machine-readable definition “files” to manage those compute processes.

In a cloud-based solution set, IaC deploys resources using templates, i.e., files that are both human-readable and machine-consumable and that instruct the systems to configure their functionality automatically. Cloud service providers offer such IaC solution sets as a “built-in choice,” one that a user may use or ignore.
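To make the idea of a human-readable, machine-consumable template concrete, here is a minimal Python sketch. The JSON layout (a `resources` list whose entries carry `name`, `type` and `properties`) and the resource names are hypothetical, chosen for illustration rather than taken from any provider's template format. The point is only that the same text a person can review is also something a program can parse, validate and turn into provisioning steps.

```python
import json

# Hypothetical template format (an assumption for illustration, not any
# cloud provider's real schema): readable by people, parseable by machines.
TEMPLATE = """
{
  "resources": [
    {"name": "ingest-server", "type": "vm",
     "properties": {"cpus": 8, "memory_gb": 32}},
    {"name": "media-store", "type": "bucket",
     "properties": {"versioning": true}}
  ]
}
"""

REQUIRED_KEYS = {"name", "type", "properties"}


def load_template(text: str) -> list:
    """Parse the template and check that every resource has the required keys."""
    resources = json.loads(text)["resources"]
    for res in resources:
        missing = REQUIRED_KEYS - set(res)
        if missing:
            raise ValueError(f"resource {res.get('name', '?')} is missing {sorted(missing)}")
    return resources


def plan(resources: list) -> list:
    """Translate each declaration into the action a provisioning engine would take."""
    return [f"create {r['type']} {r['name']} {r['properties']}" for r in resources]


if __name__ == "__main__":
    for step in plan(load_template(TEMPLATE)):
        print(step)
```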

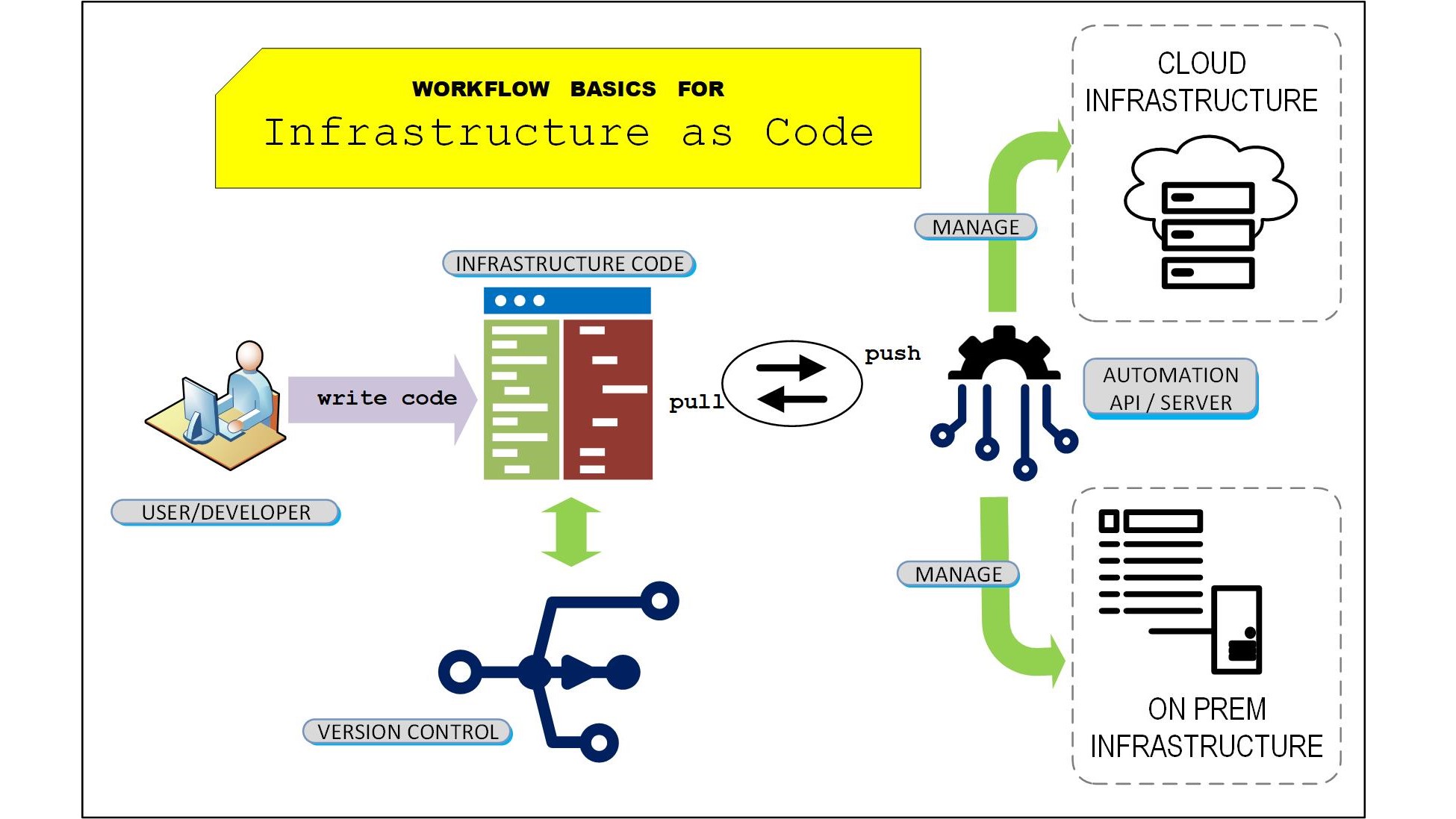

Fundamentally, once a code template is created, the cloud system takes those code instructions and applies them to the cloud’s resources without any further direct user intervention. Any updating of the called-out resources, or replacement of any of the processor chains needed to achieve the goals, is handled as a background function and essentially becomes a “hands off” operation. Fig. 1 depicts the workflow basics from the user through the services, whether in the cloud or in an on-prem datacenter.
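This “hands off” behavior is commonly implemented as declarative reconciliation: the engine compares the state the template declares with the state that actually exists and computes the actions needed to close the gap. The sketch below is a simplified, hypothetical model of that loop, using plain dictionaries in place of real cloud resources; actual IaC engines are far more involved.

```python
def diff(desired: dict, actual: dict) -> list:
    """List the (action, name) pairs needed to make `actual` match `desired`."""
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


def reconcile(desired: dict, actual: dict) -> list:
    """Apply the computed actions to `actual` in place, with no operator involved."""
    actions = diff(desired, actual)  # computed up front, before anything is mutated
    for verb, name in actions:
        if verb == "delete":
            del actual[name]
        else:  # create or update: copy the declared spec
            actual[name] = dict(desired[name])
    return actions


if __name__ == "__main__":
    desired = {"encoder": {"cpus": 4}, "store": {"size_tb": 10}}
    actual = {"encoder": {"cpus": 2}, "old-cache": {"size_tb": 1}}
    print(reconcile(desired, actual))  # first pass makes changes
    print(reconcile(desired, actual))  # second pass finds nothing to do
```

A second call to `reconcile` returns an empty list. That idempotence is what lets such a loop run repeatedly in the background without side effects.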

BENEFITS OF IAC

Benefits to the applications and uses of IaC include visibility, stability and scalability. Others include security, verification, repeatability and extensibility.

Repeatability, with security, is achieved when the same settings are used in every instance of the template. Verifying that a given provisioning is stable and ready to run ensures that, if a failure occurs, the infrastructure can be rolled back to a known state without a catastrophic collapse of the components. Operations can then continue or be temporarily suspended, depending upon the prescribed workflows.
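Both ideas in that paragraph can be sketched in a few lines of Python. The fingerprint illustrates repeatability: two template instances with the same settings produce the same hash, regardless of key order. The hypothetical `DeploymentHistory` class illustrates rollback: a deployment that fails verification is reverted to the last known-good state. The `verify` callback is an assumption standing in for whatever health or readiness checks a real pipeline would run.

```python
import hashlib
import json
from typing import Callable, Optional


def fingerprint(settings: dict) -> str:
    """Hash settings in canonical (key-sorted) form; identical settings give identical hashes."""
    canonical = json.dumps(settings, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class DeploymentHistory:
    """Hypothetical deployment record; only verified settings become rollback targets."""

    def __init__(self) -> None:
        self.verified = []  # settings that passed verification, oldest first
        self.current: Optional[dict] = None

    def deploy(self, settings: dict, verify: Callable[[dict], bool]) -> bool:
        """Deploy `settings`; keep them if `verify` passes, otherwise roll back."""
        self.current = settings
        if verify(settings):
            self.verified.append(settings)
            return True
        self.rollback()
        return False

    def rollback(self) -> None:
        """Return to the last known-good settings (or to nothing if none exist)."""
        self.current = self.verified[-1] if self.verified else None
```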

Visibility gives the user a clear reference point for which resources are being used on the account. Should something inadvertently change, such as a wrong setting or an accidentally deleted resource, the stability mechanism in an IaC deployment can help resolve that change by restoring either the current or a previous version from version control.
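Visibility and recovery can be illustrated with a small drift check. In this sketch the `versions` list is an assumed stand-in for a version-control history (a real deployment would read from a repository). `detect_drift` compares the declared settings with what is live and flags both changed settings and deleted resources, and `restore` returns either the current version or an earlier one.

```python
import copy


def detect_drift(declared: dict, live: dict) -> dict:
    """Report resources that were deleted or whose settings differ from the declaration."""
    report = {}
    for name, spec in declared.items():
        if name not in live:
            report[name] = "deleted"
            continue
        # Map each drifted key to (expected value, value actually found).
        changed = {
            key: (expected, live[name].get(key))
            for key, expected in spec.items()
            if live[name].get(key) != expected
        }
        if changed:
            report[name] = changed
    return report


def restore(versions: list, index: int = -1) -> dict:
    """Return a fresh copy of a stored version: the current one by default, or an earlier one."""
    return copy.deepcopy(versions[index])
```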

Scalability is equally important. Building a library of reusable code sets lends itself to the templated model, which can be easily and readily distributed to multiple services globally. Should a particular region need to ramp up for an unexpected deliverable, the nearest cloud point-of-presence could rapidly spin up the services and infrastructure from the same templates likely already in use at another, geographically distant site. Users would not necessarily need to transport data to an alternate site if the repository can be brought into service in another region.
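The reuse described above can be sketched as stamping regional copies out of one shared template. The template fields and region names below are hypothetical; the sketch only shows that a ramp-up site inherits the original configuration and changes just the parameters meant to differ.

```python
import copy

# Hypothetical shared template; region names used with it are illustrative only.
PLAYOUT_TEMPLATE = {"service": "playout", "instances": 2, "region": None}


def instantiate(template: dict, region: str, **overrides) -> dict:
    """Stamp out a regional copy of a shared template, leaving the template itself untouched."""
    stack = copy.deepcopy(template)
    stack["region"] = region
    stack.update(overrides)  # per-region adjustments, e.g. a temporary burst in capacity
    return stack


if __name__ == "__main__":
    for region in ("us-east", "eu-west"):
        print(instantiate(PLAYOUT_TEMPLATE, region))
    # An unexpected deliverable: the same template, more instances, a new region.
    print(instantiate(PLAYOUT_TEMPLATE, "ap-south", instances=6))
```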

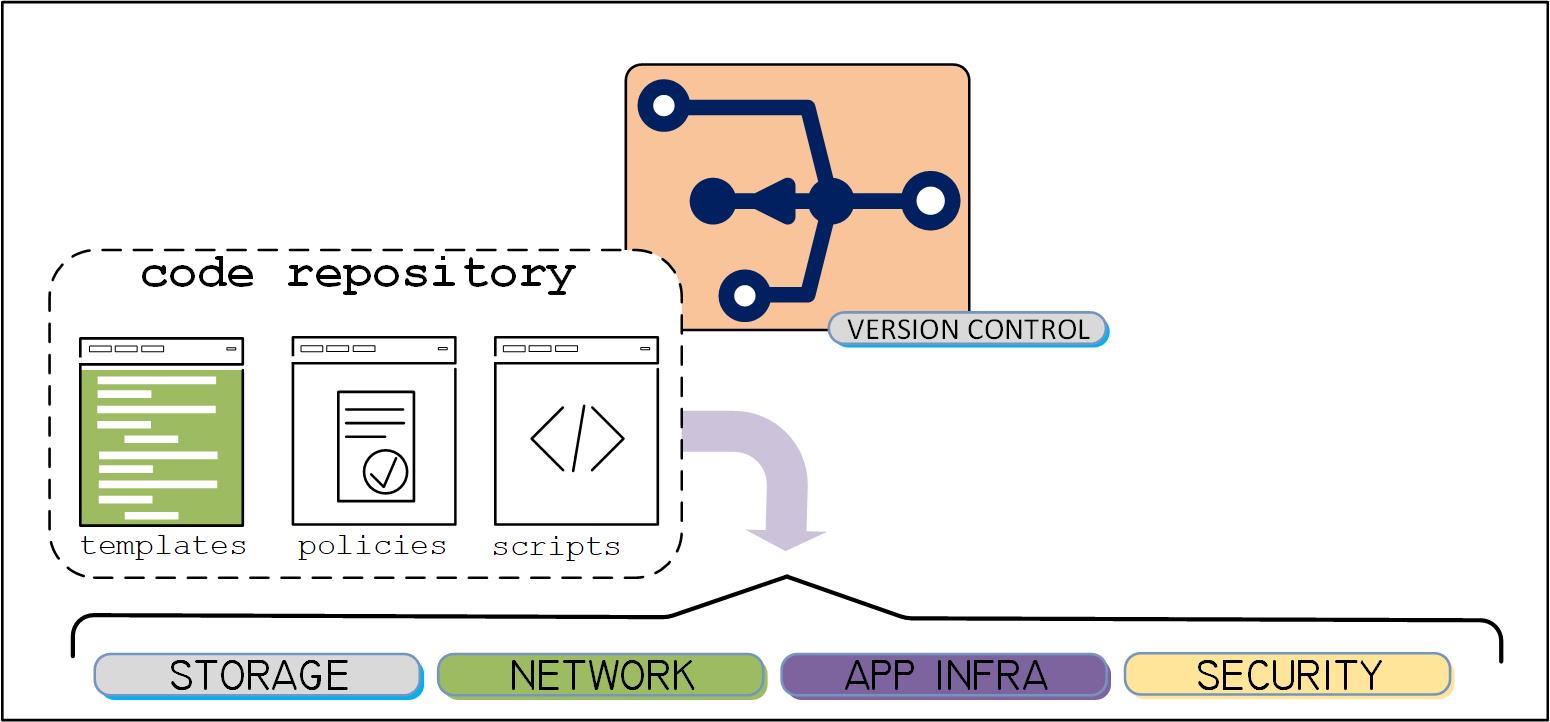

Fig. 2 diagrams how templates, scripts and policies are held in a common repository, from which they can be distributed to each global point-of-presence, i.e., a cloud zone or datacenter. These artifacts can then be pushed from the repository to (or pulled from it by) the associated locations and functions.
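The push and pull distribution shown in Fig. 2 can be modeled with a toy repository of numbered releases. Everything here (the `Repository` and `Site` classes, the release numbering, the `push` and `stale_sites` helpers) is hypothetical and kept in memory; it illustrates the pattern, not any particular repository or deployment tool.

```python
import copy
from typing import Optional


class Repository:
    """Hypothetical common repository holding numbered releases of deployment artifacts."""

    def __init__(self) -> None:
        self._releases = []

    def publish(self, templates: dict, scripts: dict, policies: dict) -> int:
        """Store a new release and return its number (numbering starts at 1)."""
        release = {"templates": templates, "scripts": scripts, "policies": policies}
        self._releases.append(copy.deepcopy(release))
        return len(self._releases)

    def latest(self) -> int:
        return len(self._releases)

    def get(self, release: int) -> dict:
        return copy.deepcopy(self._releases[release - 1])


class Site:
    """A point-of-presence (cloud zone or datacenter) holding a local copy of the artifacts."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.release = 0  # 0 means nothing has been pulled yet
        self.artifacts = {}

    def pull(self, repo: Repository, release: Optional[int] = None) -> None:
        """Pull model: the site fetches a specific release, or the latest if none is given."""
        if release is None:
            release = repo.latest()
        self.artifacts = repo.get(release)
        self.release = release


def push(repo: Repository, sites: list) -> None:
    """Push model: the repository side has every site take the latest release."""
    for site in sites:
        site.pull(repo)


def stale_sites(repo: Repository, sites: list) -> list:
    """Names of sites not yet running the latest release."""
    return [site.name for site in sites if site.release != repo.latest()]
```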

EVERYTHING AS CODE

A similar approach is the practice of treating all the components of the solution as code. By storing configurations alongside source code in a repository, and running them as a virtual environment, code sets can be cycled or recreated whenever needed. In this model, even system designs are stored as code.
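One practical consequence of storing system designs as code is that a design becomes data that can be checked automatically before anything is built. The sketch below assumes a hypothetical design format (named components plus a list of connections) and flags connections to undefined components as well as components that nothing connects to.

```python
# A hypothetical system design expressed as data: components and the signal
# paths between them. Names and roles are illustrative only.
DESIGN = {
    "components": {
        "camera-feed": "source",
        "encoder": "transcoder",
        "archive": "storage",
    },
    "connections": [("camera-feed", "encoder"), ("encoder", "archive")],
}


def validate_design(design: dict) -> list:
    """Return a list of problems; an empty list means the design is consistent."""
    known = set(design["components"])
    errors = []
    for src, dst in design["connections"]:
        for end in (src, dst):
            if end not in known:
                errors.append(f"{src} -> {dst}: unknown component {end!r}")
    connected = {end for pair in design["connections"] for end in pair}
    for name in sorted(known - connected):
        errors.append(f"{name}: not connected to anything")
    return errors
```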

The everything as code (EaC) model reduces the need to install physical hardware and connections for each functional activity or task. Doing so would be impractical, and in a cloud-centric environment impossible. Thus, the specialized physical skill sets and design practices that were previously required are transformed into a code-ready environment.

Native cloud applications, which take over functions that once required physical modifications, have changed the entire cost model, making it easy to spin up a “virtual” infrastructure foundation regardless of location.

FAMILIAR STATEMENTS

Like IaC, an EaC model offers similar benefits. Repeatability, including the ability to move from one cloud provider to another, allows precise recreation of the environment, which can then take advantage of new feature sets (such as faster performance or lower cost per cycle). Tested infrastructure code can be developed, validated at scale (through compute modeling), and then promoted directly into production with the confidence and assurance that it will function quickly and as designed.
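The develop, validate, promote sequence amounts to a gate: a candidate configuration reaches production only if every check passes. In the sketch below, the checks, thresholds and environment names are hypothetical placeholders for whatever testing and modeling a real pipeline performs.

```python
from typing import Callable, Dict

# Each check inspects a candidate configuration and returns True if it passes.
Check = Callable[[dict], bool]


def promote(candidate: dict, checks: Dict[str, Check], environments: dict) -> list:
    """Run every check; copy the candidate into production only if all of them pass.

    Returns the names of failed checks (an empty list means it was promoted).
    """
    failures = [name for name, check in checks.items() if not check(candidate)]
    if not failures:
        environments["production"] = dict(candidate)
    return failures


# Hypothetical checks standing in for validation at scale.
CHECKS: Dict[str, Check] = {
    "has_capacity": lambda c: c.get("instances", 0) > 0,
    "within_budget": lambda c: c.get("instances", 0) * c.get("cost_per_hour", 0) <= 50,
}
```

A failed candidate leaves the production entry untouched, so the running environment never sees untested code.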

The fear, uncertainty and doubt (FUD) factor with respect to server configuration drift is all but eliminated. These new models can self-heal to almost any level, including a complete redeployment should a server die or need patching to remain operable. Since the entire infrastructure is developed in code, a mirror image of the system with no crossover dependencies can be spun up the moment an anomaly is detected. Operations just keep running.
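Self-healing by redeployment follows directly from having the whole server definition in code: rather than repairing a failed machine, the system discards it and builds a replacement from the template. The sketch below is a hypothetical, in-memory illustration; `is_healthy` stands in for whatever monitoring detects the anomaly, and the template fields are made up.

```python
import itertools
from typing import Callable

_next_id = itertools.count(1)  # hypothetical instance IDs


def build_server(template: dict) -> dict:
    """Create a fresh server purely from the template, with no state carried over."""
    return {**template, "id": next(_next_id), "healthy": True}


def heal(fleet: list, template: dict, is_healthy: Callable[[dict], bool]) -> list:
    """Replace every unhealthy server with a new one built from the template.

    Returns the IDs of the servers that were replaced.
    """
    replaced = []
    for i, server in enumerate(fleet):
        if not is_healthy(server):
            fleet[i] = build_server(template)
            replaced.append(server["id"])
    return replaced
```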

INFRASTRUCTURE TOOLS

For cloud solutions to be practical, they need to be dynamic, and infrastructure resources fall into that category. It is akin to having infinite patching and shuffling capabilities without a human actively manipulating the functionality. Each cloud provider is likely to have its own “flavor” of either IaC or EaC, depending upon its feature sets.

Such tool sets allow cloud customers to specify the infrastructure resources they need without having to understand (logically or physically) how those resources interconnect. The tools further let users allocate which resources are needed, set the parameter limits (how much, for how long), and define how those resources should be configured to perform selected tasks and activities.
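The three things such tools let users specify (which resources, their limits in quantity and time, and their configuration) map naturally onto a small data structure. The `ResourceRequest` class, the quota values and the `allocate` function below are hypothetical, a sketch of the idea rather than any provider's API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class ResourceRequest:
    """What to allocate, how much, for how long, and how to configure it."""

    kind: str
    quantity: int
    duration: timedelta
    config: dict = field(default_factory=dict)


# Hypothetical account quotas per resource kind.
QUOTAS = {"gpu": 8, "vcpu": 256}


def allocate(requests: list, quotas: dict, start: datetime) -> list:
    """Grant requests in order; raise if the running total for a kind exceeds its quota.

    Each grant is (kind, quantity, expiry time, config).
    """
    used = {}
    grants = []
    for req in requests:
        total = used.get(req.kind, 0) + req.quantity
        limit = quotas.get(req.kind, 0)
        if total > limit:
            raise ValueError(f"{req.kind}: {total} requested exceeds quota {limit}")
        used[req.kind] = total
        grants.append((req.kind, req.quantity, start + req.duration, req.config))
    return grants
```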

In platform as a service (PaaS) architectures, users could use a particular platform’s user interface to assign or create resource sets and then manage those resources throughout their operation. Similarly, third-party solutions providers built graphical user interface (GUI) products to manage both cloud and virtual infrastructures and sold those products to consumers. The drawback, however, was that these were essentially “constrained” (specific) services that required substantial investment in initial specifications, design and testing before they could be rolled out into service.

While the PaaS practice is arguably practical once configured, and could likely be transported to various other cloud providers, the model was not as flexible. Apps required maintenance and upkeep whenever the cloud’s internal models underwent a systemic update. Sometimes the changes impacted the PaaS applications and sometimes the PaaS would “self-adapt.” The outcome depended on the type, use and applications deployed at that time for that particular service.

CODE EXPERTISE EVOLVES

With open access to the virtual “moving parts” of the cloud, infrastructure as a service (IaaS) and PaaS models are changing. Where code-based development was once limited to a set of code-level experts, the new era is integrating machine learning and human-readable practices, making such development more prevalent and more productive.

Early adopters of cloud services recognized the need for dynamic infrastructure platforms and are now changing their internal applications to implement their own self-provisioning and configuration capabilities. For systems housed in private (non-public-cloud) datacenters, once the user/operators learn the processes, patterns, practices and accessibility involved, they can eventually orchestrate their own server structures, build their own server templates and enable updates to running servers without disrupting operations.

Karl Paulsen is the chief technology officer at Diversified, a SMPTE Fellow, and a regular contributor to TV Technology. You may reach Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.