Is It Live, or Is It Automated Speech Recognition?

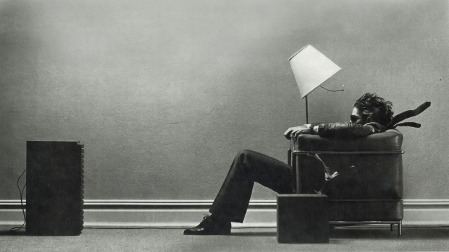

“Is it live, or is it Memorex?” was an iconic 1970s ad campaign for cassette tapes (remember those?) from Memorex. The point of the successful advertisements was that it was impossible to tell the difference between the real thing (in the ad’s case, the heavenly voice of Ella Fitzgerald) and her recorded voice on tape.

This question of real versus artificial can be applied to the captioning industry, too, as technology has enabled speech recognition software to compete with humans to create captions. When debating the difference between the two, however, there is little doubt as to which is the real McCoy and which is the inferior copy.

For some, closed captions are simply those words that flow across the bottom of the television that describe the on-screen dialogue and action. But for nearly 50 million Americans in the deaf and hard-of-hearing community, captions are so much more: they are an important connection to a world that many in the hearing community take for granted. Captions provide a link not only to entertainment but also to education, news, and emergency information. A broad range of organizations, from tech firms to financial service advisers, also use captions to help employees, customers, and partners access and navigate the world around them.

In short, captions play a crucial role in the daily lives of millions. And it’s for this reason that clear, concise, accurate captions are absolutely essential.

THE IRREPLACEABLE HUMAN FACTOR

The real question isn’t whether you should caption your content; it’s how you should do it. Technology is making great strides, but the best approach to captioning remains rooted in the human experience of the spoken word. Professionals trained to provide captions bring human sensitivities and contextual awareness to the captioning table that no Automated Speech Recognition (ASR) system can.

Indeed, when comparing the captions created by humans to those created exclusively by even the smartest of machines, there’s an obvious disparity. ASR systems routinely fail to present names and technical terms properly, stumble on accented or mumbled speech and background noise, and can have difficulty determining the difference between what a speaker “said” and what they actually “meant.”

THE COMPLEXITY BEHIND THE SCREEN

The whole point of captions is to enable accurate access to the audible content on the screen. But if captions present that content in ways that make no sense, the captions are useless. This is why human captioners, those who provide the “art” behind captioning technology, remain the linchpin of successful captioning.

That is not to say that technology doesn’t matter. In fact, an understanding of the technologies involved in the presentation of live, broadcast, and streamed content plays a critical role. A content owner provides a captioning service provider access to the content. The provider then creates the captions and feeds them to an encoding system that merges the captions into the video. Beneath the surface of this interaction are a host of network, latency, and, in many cases, security challenges.

As a content owner, particularly if you are demanding real-time captioning services (as are used for newscasts and live corporate presentations), you need confidence that the network connections linking your feed to the captioner are reliable. If security is a concern, whether because you don’t want a show leaked before it is supposed to air or because your corporate presentations contain privileged materials, then you need to consider the security standards, protocols, processes, and mechanisms your service provider uses to insulate your content and captions from misuse or theft. At the same time, you also need to make sure that those security mechanisms do not get in the way of the speedy captioning services that you expect.

ACCESSIBILITY KNOWS NO BOUNDS

The other technology angle to consider centers on the manner in which people are viewing the content you produce. When captions first appeared on the bottom of American television screens in the early 1970s, broadcast TV was the king of video content. Today, with the emergence of on-demand video, more and more people—in every community—are watching content whenever and wherever they want. We can watch yesterday’s “Tonight Show” tomorrow from smartphones on the bus, PCs in the office, and tablets on the beach. What we can’t always do is watch it with the volume turned up. So, we watch it with the captions turned on.

Captions are the key to ensuring that your content is accessible to all people whenever and wherever they want to watch. And the most accurate, highest-quality captions remain those captured by the trained ear of captioning professionals, which avoids the gaffes and error rates associated with speech recognition solutions.

Kevin Kilroy is the CEO of VITAC, a provider of live and offline captioning.