Why Captions and Subtitles Are a Key Part of Unlocking Global Streaming Success

International content presents a huge monetization opportunity for video service providers

Over the past several years, interest in and demand for non-English video content have surged. Viewership is booming, especially among younger audiences in primarily English-speaking markets like the U.S., U.K., Canada, and Australia.

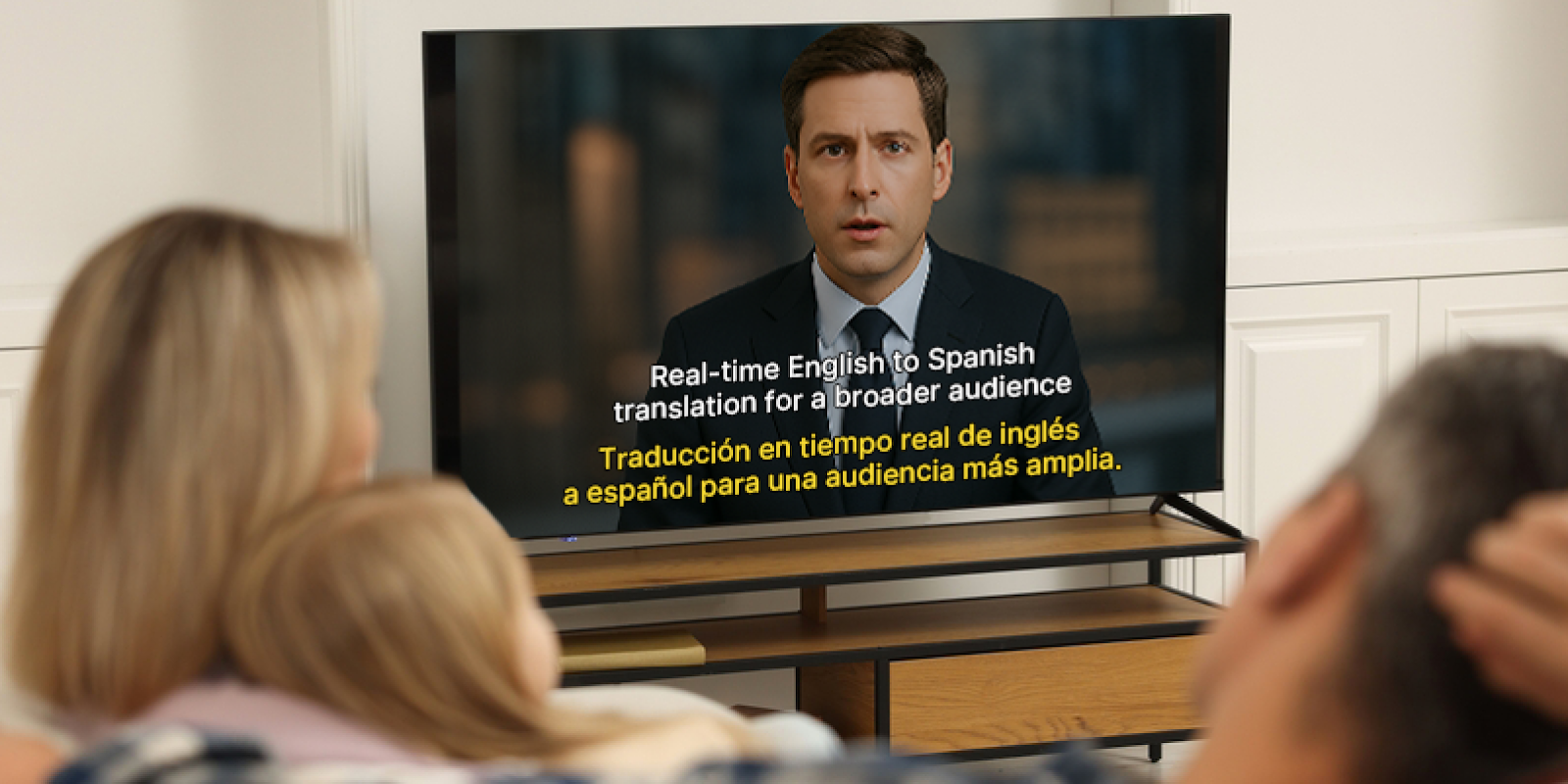

In a recent study from Ampere Analysis, 54% of internet users in those markets reported watching international (i.e., non-English) TV and movies “very often” or “sometimes” — up from 43% five years ago. Likewise, 66% of 18-to-34-year-olds in those markets reported watching international content regularly. And, according to the report, the most popular way to consume that content was through subtitles.

These findings underscore just how important subtitles and captions have become. Delivering properly localized subtitles is now essential for making content accessible to a broader audience. Without a solid subtitling and captioning solution, broadcasters and video service providers risk being left behind in the race to secure viewers and keep them happy.

The Complexity of Implementing Proper Captions and Subtitles

International content presents a huge monetization opportunity for video service providers, but many say that the complexity of localized captioning makes it hard to capitalize on that opportunity.

Ensuring synchronization between audio and text is critical, as captions must match dialogue precisely to maintain clarity and viewer engagement. Synchronization is especially tough for multilingual or fast-paced content, where timing discrepancies can happen easily. Manual captioning is labor-intensive and error-prone, requiring multiple reviews to meet guidelines for accuracy, completeness, and display metrics.

Additionally, captions must be carefully placed to avoid obscuring important on-screen visuals, often necessitating frame-by-frame inspection or advanced object detection. Translating humor, idioms, and cultural references further complicates the process. Literal translations can fail to capture the intended meaning, so the text must be adapted with care for audiences to relate to it.

Finally, complying with varying regional accessibility and legal standards adds another layer of complexity. It can take up to 10 hours to caption one hour of video content manually, and even then, there’s no guarantee the captions will be compliant.

Automated Captioning for the Win

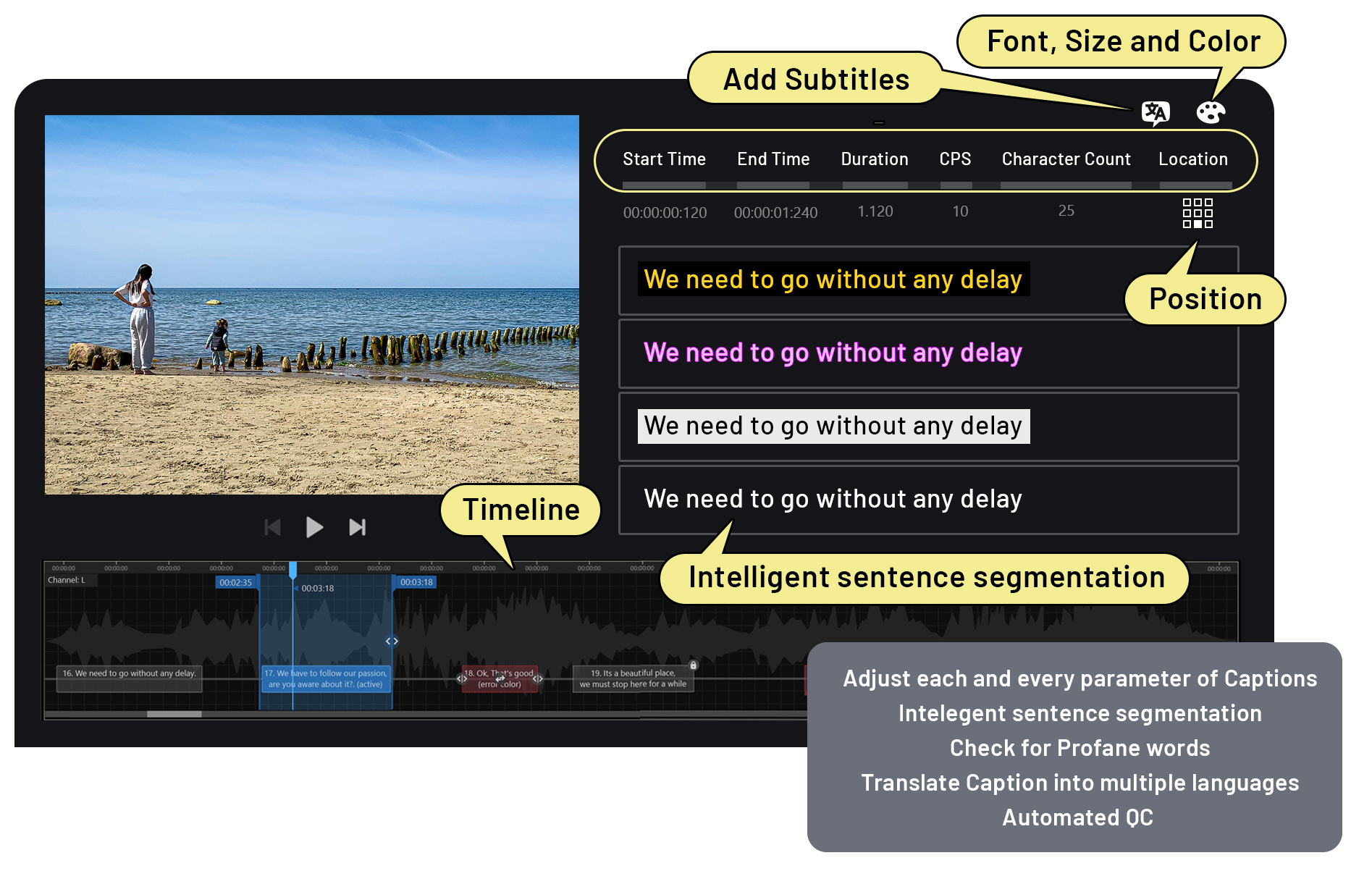

Automated, software-based captioning tools streamline the process by converting spoken language into text (speech to text), segmenting it appropriately, and ensuring captions are accurately timed and placed within the video frame. Modern systems are designed to maintain high standards of accuracy and synchronization, and they often include features for multilingual support, error correction, and compliance checks. Such characteristics make these systems essential for today’s global and fast-paced video streaming environment.

These tools also perform comprehensive quality control checks, such as verifying reading speed, display duration, and caption segmentation, and can automatically adjust placement to avoid blocking important elements like scoreboards or faces. This level of precision ensures captions meet legal requirements, such as those set by the FCC in the U.S. or Ofcom in the U.K., and adhere to best practices for accessibility.
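As a rough illustration of what such a quality control check might look like, the Python sketch below flags caption cues whose display duration, reading speed, or line length falls outside a set of limits. The `Cue` type, the `check_cue` function, and every threshold value are assumptions made for this example; they are not taken from any particular product, and real limits would come from the style guide or regulation that applies.

```python
from dataclasses import dataclass

# Illustrative thresholds only (assumptions for this sketch).
MAX_CHARS_PER_SECOND = 17.0
MIN_DURATION_S = 1.0
MAX_DURATION_S = 7.0
MAX_CHARS_PER_LINE = 42


@dataclass
class Cue:
    """One caption cue: start/end times in seconds, lines separated by newlines."""
    start: float
    end: float
    text: str


def check_cue(cue: Cue) -> list[str]:
    """Return a list of human-readable issues found in a single cue."""
    duration = cue.end - cue.start
    if duration <= 0:
        return ["non-positive duration"]

    issues = []
    if duration < MIN_DURATION_S:
        issues.append("too short")
    if duration > MAX_DURATION_S:
        issues.append("too long")

    # Reading speed is measured here as characters per second, ignoring line breaks.
    chars = len(cue.text.replace("\n", ""))
    if chars / duration > MAX_CHARS_PER_SECOND:
        issues.append("reading speed too high")

    if any(len(line) > MAX_CHARS_PER_LINE for line in cue.text.split("\n")):
        issues.append("line too long")
    return issues


if __name__ == "__main__":
    print(check_cue(Cue(0.0, 2.0, "Hello there.")))
    print(check_cue(Cue(0.0, 0.5, "Hello there, how are you doing today?")))
```

A production tool would typically run checks like these over every cue in a file and hand the flagged cues to a human reviewer rather than correcting them silently.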

By automating much of the workflow, these systems significantly reduce captioning time and effort compared with manual processes. That 10-hour captioning task can now be completed in real time or near-real time, cutting production time by 40% to 50% and labor costs by 30% to 40%.

Automated captioning systems also consolidate tasks such as transcription, review, and compliance checks within a single interface, minimizing errors and streamlining collaboration between automated processes and human reviewers. This efficiency is crucial for delivering high-quality, accessible content quickly across multiple languages and formats.

How AI Improves the Process and Elevates the Viewer Experience

The latest captioning tools use AI-driven technologies such as automatic speech recognition (ASR) and natural language processing (NLP) to deliver superior results. ASR rapidly transcribes spoken audio into text, even in challenging conditions with background noise or diverse accents.

NLP further processes this text, interpreting context, idioms, slang, and tone to produce captions that are not only accurate but also natural and easy to read. These technologies also enable features like automatic speaker identification, punctuation correction, and the grouping of words into meaningful segments for improved readability.

AI and machine learning advancements have transformed captioning by enabling adaptive learning and multimodal analysis. For example, computer vision can detect faces, on-screen text, or important visual cues, allowing the system to reposition captions dynamically to avoid covering critical information.
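The repositioning step can be pictured with a small Python sketch. It assumes that a vision model has already produced bounding boxes for regions that must stay visible (a face, a scoreboard), and it simply tries a bottom-center position first and a top-center position second. The `Box` type, the `place_caption` function, the margin value, and the two-candidate strategy are all hypothetical simplifications, not a description of how any specific captioning system works.

```python
from typing import NamedTuple, Optional, Sequence


class Box(NamedTuple):
    """Axis-aligned rectangle in pixels: top-left corner plus width and height."""
    x: int
    y: int
    w: int
    h: int


def overlaps(a: Box, b: Box) -> bool:
    """True if the two rectangles share any area."""
    return (a.x < b.x + b.w and b.x < a.x + a.w and
            a.y < b.y + b.h and b.y < a.y + a.h)


def place_caption(frame_w: int, frame_h: int, cap_w: int, cap_h: int,
                  protected: Sequence[Box], margin: int = 40) -> Optional[Box]:
    """Return the first candidate position that covers no protected region.

    Candidates are tried in order: bottom-center, then top-center.
    Returns None if both are blocked, leaving the decision to a reviewer.
    """
    x = (frame_w - cap_w) // 2
    candidates = [
        Box(x, frame_h - cap_h - margin, cap_w, cap_h),  # bottom-center
        Box(x, margin, cap_w, cap_h),                    # top-center
    ]
    for candidate in candidates:
        if not any(overlaps(candidate, region) for region in protected):
            return candidate
    return None


if __name__ == "__main__":
    # Hypothetical scoreboard detected near the bottom of a 1920x1080 frame.
    scoreboard = Box(400, 900, 1100, 150)
    print(place_caption(1920, 1080, 1000, 120, [scoreboard]))
```

In this example the bottom position would cover the scoreboard, so the caption moves to the top of the frame. A real system would likely consider more positions and keep placement stable across consecutive frames so captions do not jump around.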

Machine translation models, now enhanced with large language models, provide more context-aware and culturally appropriate translations, ensuring captions resonate with local audiences. Multimodal AI can also identify nonverbal cues, such as laughter or gestures, and incorporate them into captions, resulting in a richer and more immersive viewing experience.

As these systems process more data, their accuracy and adaptability continue to improve, reducing the need for manual intervention and ensuring consistent quality across diverse content.

Cultural Sensitivity: It’s About More Than Translation

When it comes to captions and subtitles, cultural resonance and compliance with local standards can determine a title’s success. Today's mainstream translation engines excel at converting text from one language to another. As sophisticated large language models become fully integrated into readily available translation engines and captioning tools, those tools will be able to natively incorporate deep cultural sensitivity too.

That means AI-driven captioning solutions are poised to go far beyond simple translation. These future systems are expected to use contextual understanding to capture the subtleties of language — such as humor, sayings, and regional expressions — that are essential for truly authentic localization.

The aim is for these advanced solutions to one day adapt captions to align with local customs, regulatory requirements, and viewer expectations, ensuring content is not only accessible but also culturally relevant and accurate. This includes adjusting translations to preserve the original intent and tone, moving beyond literal word-for-word rendering.

As these more nuanced AI capabilities mature and become integrated into common tools, human expertise will remain indispensable. Human reviewers will play a vital role in guiding, validating, and finessing AI-generated captions, ensuring they accurately reflect the necessary subtle nuances and meet cultural appropriateness standards.

By combining the emerging power of AI for cultural adaptation with diligent human oversight, the next generation of captioning tools will be better equipped to help global audiences experience content as intended, fostering inclusion and engagement across linguistic and cultural boundaries.

Conclusion

Advanced captioning tools enable providers to meet diverse audience needs, ensure accessibility, and deliver culturally relevant content efficiently and accurately for every viewer everywhere. They expand the reach of regional content and boost international connectivity through video, making them essential for success in the global streaming market.

Anupama Anantharaman, Vice President, Product Management at Interra Systems, is a seasoned professional in the digital TV industry. Based in Silicon Valley, California, Anupama has more than two decades of experience in video compression, transmission, and OTT streaming.