The Case for Automating Closed Captioning

The professional video industry's #1 source for news, trends and product and tech information. Sign up below.

HAMILTON, N.J.—Without closed captioning, the deaf and hard of hearing cannot fully appreciate the video they’re watching. When closed captioning is displayed onscreen, viewers can read text of the dialogue while watching the video. And for international video distribution, foreign language captions and subtitles are essential for viewer accessibility.

In fact, this service is so important that a recent U.S. law—the Twenty-First Century Communications and Video Accessibility Act (CVAA)—stipulates that any video that’s been broadcast on television must have captions when it streams over the internet. And the FCC’s closed-captioning quality rules require non-live programming to have captions that are accurate, complete, in sync with the dialogue and properly positioned on-screen.

When broadcasters need to distribute a high volume of video to domestic and international markets—and IP outlets including over-the-top services, websites and mobile devices—they need a smart, automated captioning workflow that ensures regulatory compliance without quality compromises.

GLOBAL DISTRIBUTION

“Many broadcasters today want to deliver their video content internationally so it can be enjoyed by the broadest possible audience, and closed captioning is the key to making that happen,” said Giovanni Galvez, product manager of captioning products for Telestream, in Nevada City, Calif. “Where there are language barriers, foreign language captions and subtitles are a must.

“To expand viewership across global markets and ensure accessibility to everyone—whether they’re watching via TV, online or mobile—means broadcasters must properly caption or subtitle all iterations of their content for multiplatform, global distribution,” Galvez added. “Considering the scope and complexity of this task, broadcasters can save considerable time and money by using an automated solution that integrates precision captioning and subtitling as part of their existing media processing workflow.”

Telestream’s new Timed Text Flip feature leverages the company’s Vantage 7.0 media processing platform to automatically process high-quality subtitle and captioning input files—created by the customer or a third-party service—and add them to associated media in accordance with today’s broadcast, internet and accessibility mandates.

Galvez said that Timed Text Flip runs on the same automated Vantage workflow that many broadcasters already rely on in their daily broadcasting operations. And multiple processes—such as burning in subtitles, and offsetting captions to match the media following frame rate conversions—can run in parallel, with the finished files automatically landing on servers or other designated destinations for delivery.
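To illustrate what offsetting captions after a frame rate conversion involves, here is a minimal Python sketch. It is not Telestream's implementation. It assumes caption cues are stored as frame numbers, that the conversion preserves running time (so each cue keeps its wall-clock position), and that any extra shift is a fixed number of seconds. The `Cue` class and `retime` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class Cue:
    start_frame: int
    end_frame: int
    text: str


def retime(cues, src_fps, dst_fps, offset_seconds=Fraction(0)):
    """Map cue frame numbers from src_fps to dst_fps.

    Assumption: running time is preserved by the conversion, so a cue
    keeps its position in seconds; offset_seconds is then added.
    """
    retimed = []
    for cue in cues:
        # Convert frames to exact seconds at the source rate, then shift.
        start_s = Fraction(cue.start_frame) / src_fps + offset_seconds
        end_s = Fraction(cue.end_frame) / src_fps + offset_seconds
        # Convert seconds to the nearest frame at the destination rate.
        retimed.append(
            Cue(round(start_s * dst_fps), round(end_s * dst_fps), cue.text)
        )
    return retimed


# A cue from second 1 to second 3 of a 25 fps master,
# retimed for a 29.97 fps (30000/1001) deliverable.
pal_cues = [Cue(25, 75, "Good evening.")]
ntsc_cues = retime(pal_cues, 25, Fraction(30000, 1001))
```

Exact fractions avoid the rounding drift that floating-point frame rates such as 29.97 can accumulate over a long program. A conversion that changes running time, such as a speed-up, would need a different mapping.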

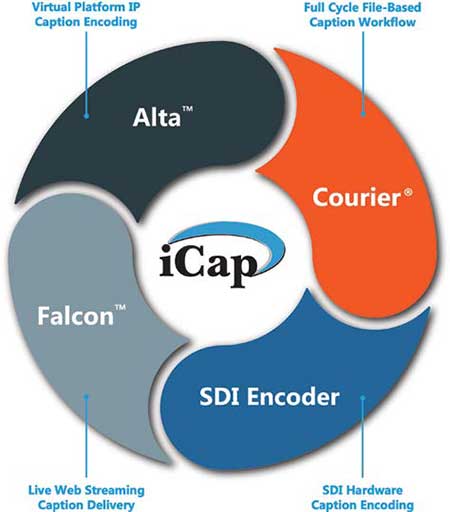

EEG’s iCap Alta system is intended for closed captioning of live programming delivered via an IP workflow, with support for 4K, IP and cloud environments.

CAPTIONING IN IP

As the volume of video to be captioned grows—and the rules mandating quality increase—broadcasters must manage these pressures while transitioning from SDI to an all-IP infrastructure. EEG’s iCap Alta system is intended for closed captioning of live programming delivered via an IP workflow, with support for 4K, IP and cloud environments.

“Our goal is to enable a seamless transition from the hardware-driven caption insertion we’ve relied on with SDI to a software-based closed-captioning encoder for the IP playout and distribution workflow,” said Dave Watts, marketing manager for EEG Enterprises, in Farmingdale, N.Y. “With iCap, the SDI/IP transition is just a matter of switching from our iCap SDI hardware encoder to Alta’s virtualized encoder. Since our iCap product line is cloud-driven, when a broadcaster switches from an SDI to IP workflow with Alta, their settings, account activity and closed-captioning assets that they’ve built up over time in their iCap account are preserved and readily accessible.”

The iCap product line includes the Falcon live iCap encoder that routes captions to streaming services, including Wowza Media Systems and YouTube Live Events. It offers “pay as you go” service on demand and connects online content producers to the iCap captioning cloud. iCap products offer instant online access to a global network of certified captioners as part of EEG’s end-to-end interoperable technology network.

AUTOMATED LIVE CAPTIONING

Live, real-time captioning typically involves contracting a third-party captioning service at a cost. ENCO’s Encaption 3 is a hardware/software solution that employs a speech recognition engine to fully automate closed captioning in near real time. It does not require any voice training and produces captions with a high degree of accuracy.

“For live newscasts, it can acquire the correct spellings of important terms, like names and local places, in advance by checking the news rundowns coming from the newsroom computer system through an IP MOS-based interface,” said Ken Frommert, general manager for ENCO in Southfield, Mich. “And in breaking news or weather emergencies, when captioners might not be readily available, the system is always ready to go for a fraction of the cost of live captioners. Since TV shows cannot air without captions, this system makes it possible to go ahead and deliver the content, which benefits all viewers.”

Encaption 3 delivers live captions as a serial data stream directly to the broadcast encoder. It can also record a file of the live captions for future use, such as editing and repurposing of captions in post for rebroadcasts and multiplatform distribution.

Volicon’s Observer Media Intelligence Platform provides tools to monitor and measure closed captions to ensure compliance.

QUALITY ASSURANCE

Digital Nirvana’s MonitorIQ media management platform has a software-based component that captures and stores the off-air digital broadcast signal along with its closed captioning. It also serves as a search engine that makes recorded video content searchable based on associated metadata, including its closed-caption data.

“Users can search on any keywords, such as names, places or other terms, to find where those words are mentioned in the video,” said Dan Wasilko, director of sales for the Fremont, Calif.-based company. “For example, local news teams or producers of magazine-style shows can search video they’ve aired—or that multiple networks and channels have aired—by using the closed-caption data in off-air video recordings to see what’s been reported on a particular subject.”
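The kind of keyword lookup Wasilko describes can be pictured with a short Python sketch. This is not MonitorIQ's search engine. It assumes the recorded caption data has already been reduced to `(start_seconds, text)` pairs, and `search_captions` is a hypothetical helper name.

```python
def search_captions(cues, keyword):
    """Return the (start_seconds, text) cues whose text mentions keyword.

    Matching is case-insensitive and substring-based, which is a
    simplification of what a real search engine would do.
    """
    needle = keyword.casefold()
    return [(start, text) for start, text in cues if needle in text.casefold()]


# Hypothetical caption data from an off-air recording.
recorded = [
    (12.0, "Flooding closed Main Street this morning."),
    (47.5, "The mayor will speak at noon."),
    (93.0, "Crews expect MAIN STREET to reopen tonight."),
]
hits = search_captions(recorded, "main street")
```

Each hit carries its start time, so an operator could jump straight to the point in the recording where the term was spoken.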

MonitorIQ also has technical components that verify the presence of closed captioning, either during live broadcasts, or while media is streaming online. If the closed captioning is lost, it promptly alerts the on-duty operator.

Digital Nirvana also offers professional closed-captioning services, such as taking video shows that have aired live and revising their captions so they’re 100-percent accurate when they’re rebroadcast or streamed online. At the NAB Show, the company launched a new cloud-based closed-captioning service that’s available on-demand anywhere video is created and distributed. The service includes pop-on and roll-up captioning for all technology platforms, and supports multiple HD and SD video and caption file formats. The service also targets non-broadcast customers, such as the educational, corporate and government markets that also need to caption video programs they’ve created for online and social media distribution.

PROOF OF COMPLIANCE

To avoid potentially hefty fines and penalties, broadcasters must comply with all laws, mandates and quality rules related to closed captioning. With continuous recording of a broadcaster’s on-air signal, Volicon’s Observer Media Intelligence Platform provides tools to monitor and measure closed captions to ensure compliance.

“This recorded media of the live broadcast provides undisputed evidence of exactly what went to air, including the closed captions,” said Gary Learner, chief technology officer for Volicon, in Burlington, Mass. “This proof can serve as an affidavit confirming regulatory compliance. Without this proof, it becomes hearsay. Broadcasters can check the system remotely to verify that closed captions are present, and it issues alerts, by a variety of means, such as texts, emails and alarms, to indicate when closed captioning has not been detected for a certain period of time, depending upon the user-configurable settings,” Learner added.
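The alerting behavior Learner describes, flagging any stretch where captions have been absent for longer than a user-set period, can be sketched in a few lines of Python. This is not Volicon's code. It assumes the monitoring system logs a timestamp, in seconds, each time caption data is seen, and `find_caption_gaps` and `max_gap` are hypothetical names.

```python
from typing import Iterable, List, Tuple


def find_caption_gaps(
    caption_times: Iterable[float],
    start: float,
    end: float,
    max_gap: float,
) -> List[Tuple[float, float]]:
    """Return (gap_start, gap_end) spans longer than max_gap seconds
    in which no caption data was seen between start and end."""
    gaps = []
    last_seen = start
    for t in sorted(caption_times):
        if t < start or t > end:
            continue  # ignore timestamps outside the monitored window
        if t - last_seen > max_gap:
            gaps.append((last_seen, t))
        last_seen = t
    # Check the tail of the window after the final caption.
    if end - last_seen > max_gap:
        gaps.append((last_seen, end))
    return gaps


# Captions seen at these seconds during a 60-second window,
# with an alert threshold of 30 seconds.
alerts = find_caption_gaps([1, 2, 3, 40, 41], start=0, end=60, max_gap=30)
```

In a live system the same comparison would run continuously against the clock, and each reported gap would trigger the text, email or alarm notification.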

Volicon Observer also enables monitoring and compliance verification of subtitling, secondary audio tracks and foreign language captions, all of which are vital to broadcasters worldwide.

CUSTOM WORKFLOW

Dalet Digital Media Systems developed and integrated a custom workflow for CPAC (Cable Public Affairs Channel) that automates the process of adding English and French closed captioning to programming. As a 24/7 bilingual TV service in Ottawa, Ont., CPAC delivers voluminous programming to over 11 million Canadian homes via cable, satellite and wireless distribution.

“We needed a closed-captioning solution that could manage [content] from different types of workflows, including news and magazine-style shows, live and studio production, and long-form programming,” said Eitan Weisz, senior manager of operations, CPAC. “For each of these workflows, we needed to manage and maintain caption data, especially after the content has been edited or when it’s passing through another system.”

Dalet’s AmberFin advanced media and processing platform automates insertion and extraction of caption data. It also leverages Dalet MAM to manage the workflow with an emphasis on maintaining captions as metadata. Among its many automated processes, AmberFin detects ST436 data and extracts caption information to a standard SCC caption file. It also generates TTML (Timed Text Markup Language), a standardized XML structure that represents captioning information as timecoded metadata that can be cataloged, searched and modified.
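To show what captions look like as timecoded XML metadata, the following Python sketch writes a minimal TTML document with the standard library. It is not AmberFin's implementation and does not parse ST436 or SCC data. It assumes the captions have already been extracted as `(start_seconds, end_seconds, text)` tuples, and `clock` and `cues_to_ttml` are hypothetical helper names.

```python
import xml.etree.ElementTree as ET

TTML_NS = "http://www.w3.org/ns/ttml"
XML_NS = "http://www.w3.org/XML/1998/namespace"


def clock(seconds: float) -> str:
    """Format seconds as a TTML clock-time value, hh:mm:ss.mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def cues_to_ttml(cues, lang="en"):
    """Build a bare-bones TTML document: tt > body > div > p per cue."""
    # Serialize the TTML namespace as the default (unprefixed) namespace.
    ET.register_namespace("", TTML_NS)
    tt = ET.Element(f"{{{TTML_NS}}}tt", {f"{{{XML_NS}}}lang": lang})
    body = ET.SubElement(tt, f"{{{TTML_NS}}}body")
    div = ET.SubElement(body, f"{{{TTML_NS}}}div")
    for start, end, text in cues:
        p = ET.SubElement(
            div,
            f"{{{TTML_NS}}}p",
            {"begin": clock(start), "end": clock(end)},
        )
        p.text = text
    return ET.tostring(tt, encoding="unicode")


document = cues_to_ttml(
    [(1.0, 3.0, "Bonjour et bienvenue."), (3.5, 6.25, "Voici les nouvelles.")],
    lang="fr",
)
```

Because every caption is an element with `begin` and `end` attributes, ordinary XML tools can catalog, search and correct the text, which is the property the article attributes to TTML.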

According to Dalet Sales Director Frederic Roux, with TTML, “the user can directly view and search it as well as perform basic editing and correction of the caption information. CPAC now has rich automated metadata created in the Dalet system and tagged to all our content through this captioning process.”