Subtitling for multi-platform

If the subject of global subtitling standards and technologies wasn’t already complicated enough for traditional linear TV broadcasting, designing subtitle solutions for new IP streaming and on-demand video services certainly creates some extra challenges. Whether you are a content owner, a content aggregator, a broadcaster or a systems architect, there are some new pieces to the subtitling puzzle.

In general terms, when we talk about multi-platform, we mean the distribution and consumption of video content on an ever-growing selection of broadcast platforms and consumer devices, including digital TV, hybrid TV, desktop PCs and the mobile/handheld sector.

Challenges

The first challenge is to realize that as you progress along this list of video consumption technologies, technical standards become increasingly fuzzy. Digital TV is mature and well-defined (largely by global region). Hybrid TV mixes a standardized broadcast function with a less-defined “DTV over IP” broadband connection, whereas desktop and mobile video consumption is largely defined by Internet connectivity and supplier-specific video streaming implementations.

When planning video services, your geographic location will go a long way toward determining whether subtitling is a necessary component of any new multi-platform system. In some countries, regulators require content distributors to apply the same hard-of-hearing subtitling mandates to Internet-delivered content as to traditional broadcast TV. In other regions, providing single- or multiple-language subtitles on all platforms and services enables service providers, public broadcasters among them, to maximize the number of potential paying customers or viewers. Operators are increasingly deploying “closed” subtitling or captioning solutions, in which the viewer chooses whether to display subtitles, rather than “open” subtitles, which are burnt into the video signal for all to see.

The second challenge is to understand how subtitles and closed captions are transmitted as part of digital, hybrid and streaming video services. Broadly speaking, digital and hybrid TV services conform to the norms for subtitle carriage in digital TV in each geographic region. In the USA and parts of Asia, captions are transmitted as U.S.-style CEA-608/708 closed captions. In Europe, subtitles may be in either Teletext or DVB bitmap subtitle format, while DVB subtitles are used in the rest of the world because they are image-based and, therefore, immune to region-specific character sets. There is less standardization of subtitle formats on IP streaming platforms. In some cases, closed captions are carried within the digital program stream. More often, however, subtitles are stored as a separate file on the streaming server, to be fetched as required by the streaming client application running on the consumer device.

In an ideal world, subtitle content for digital TV, hybrid and IP-streamed services would be created at the same time. In reality, though, much traditional broadcast content is already subtitled, so the challenge becomes how best to repurpose the original subtitles for secondary platforms. Often, video is edited to change its duration, to remove adult content or to make room for new ad slots, and in some cases it even undergoes a frame-rate change. The simplest and most obvious solution (repurposing the subtitle file and checking it manually against the revised video) is time-consuming, tedious and expensive.
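Of the edits just listed, a frame-rate change is the most mechanical to handle in software. The sketch below is a minimal illustration, not a description of any particular product: it assumes non-drop-frame `HH:MM:SS:FF` timecodes, and the function names (`tc_to_seconds`, `seconds_to_tc`, `retime`) are hypothetical. Each timecode is converted to an exact time at the source rate and re-expressed at the target rate; an optional speed factor covers conversions, such as 24 fps film played at 25 fps, that also change running time.

```python
from fractions import Fraction


def tc_to_seconds(tc: str, fps: int) -> Fraction:
    """Convert a non-drop-frame 'HH:MM:SS:FF' timecode to exact seconds."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    if ff >= fps:
        raise ValueError(f"frame field {ff} out of range for {fps} fps")
    return Fraction(hh * 3600 + mm * 60 + ss) + Fraction(ff, fps)


def seconds_to_tc(seconds: Fraction, fps: int) -> str:
    """Convert seconds to the nearest non-drop-frame timecode at `fps`."""
    total_frames = round(seconds * fps)
    ss, ff = divmod(total_frames, fps)
    mm, ss = divmod(ss, 60)
    hh, mm = divmod(mm, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def retime(tc: str, src_fps: int, dst_fps: int,
           speed: Fraction = Fraction(1)) -> str:
    """Re-express a subtitle timecode at a new frame rate.

    speed == 1 models a standards conversion that keeps running time.
    speed == Fraction(25, 24) models 24 fps film played at 25 fps: the
    program runs faster, so every event arrives earlier by that factor.
    """
    return seconds_to_tc(tc_to_seconds(tc, src_fps) / speed, dst_fps)
```

For example, `retime("00:00:10:12", 25, 30)` gives `"00:00:10:14"`, and `retime("00:01:00:00", 24, 25, Fraction(25, 24))` gives `"00:00:57:15"`. Using `Fraction` keeps the intermediate times exact, so rounding happens only once, at the final frame boundary.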


Emerging technology

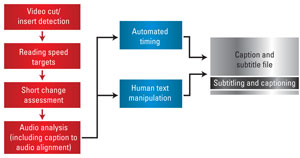

Emerging technology is bringing the option of semi- or even fully automated subtitle repurposing. Speech analysis of the program audio makes it possible to scan both media and subtitle files and produce either quick pass/fail feedback or a more detailed report on the correlation between the video, audio and subtitles. (See Figure 1.) Typically, the analysis looks at how well speech correlates with the subtitles (which also helps catch media and subtitle files that have been wrongly paired), the number of subtitles that stay on screen across scene changes (an indication of poor subtitling practice) and the subtitle reading rate in words. Additional checks against profanity word lists may also be carried out.
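Speech-to-subtitle correlation needs an audio analysis engine, but two of the other checks mentioned above need only subtitle timings and a list of scene-change times. A minimal sketch, assuming cue times in seconds and scene changes already detected by some other tool; the `Cue` type, the function names and the 180 words-per-minute threshold are illustrative assumptions, not values from any standard:

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from program start
    end: float    # seconds from program start
    text: str


def reading_rate_wpm(cue: Cue) -> float:
    """Words per minute a viewer must read to keep up with this cue."""
    duration = cue.end - cue.start
    if duration <= 0:
        raise ValueError(f"cue {cue.text!r} has no display time")
    return len(cue.text.split()) / duration * 60


def crosses_scene_change(cue: Cue, scene_changes: list[float]) -> bool:
    """True if a scene change falls strictly inside the cue's display time."""
    return any(cue.start < t < cue.end for t in scene_changes)


def qc_report(cues: list[Cue], scene_changes: list[float],
              max_wpm: float = 180.0) -> dict:
    """Summarize reading-rate and scene-change checks.

    max_wpm is an illustrative threshold, not a value from any standard.
    """
    too_fast = [c for c in cues if reading_rate_wpm(c) > max_wpm]
    crossing = [c for c in cues if crosses_scene_change(c, scene_changes)]
    return {
        "cues": len(cues),
        "too_fast": len(too_fast),
        "cross_scene_change": len(crossing),
        "passed": not too_fast and not crossing,
    }


cues = [
    Cue(0.0, 2.0, "Hello there"),
    Cue(2.5, 3.5, "This line is far too long to read in one second"),
    Cue(4.0, 7.0, "Cut to the next scene"),
]
report = qc_report(cues, scene_changes=[5.0])
# {'cues': 3, 'too_fast': 1, 'cross_scene_change': 1, 'passed': False}
```

The second cue asks the viewer to read 11 words in one second (660 words per minute), and the third stays on screen across the scene change at 5.0 s, so this file would fail a quick pass/fail check.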

Figure 1. Speech analysis allows the scanning of both media and subtitle files to produce quick feedback and detailed reports.

Where the broadcaster controls any editing carried out on content before it is committed to secondary platforms, it should be possible to make use of the Edit Decision List (EDL) that records the scenes or frames cut from the original content. Given the corresponding EDL, the automated subtitle repurposing process described above can remove every subtitle associated with the cut frames and shift the timing of the subtitles that follow each cut. The same principle can be applied to video that has been extended to accommodate commercial breaks.
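A minimal sketch of the EDL step, assuming the EDL has already been parsed (for example from a CMX 3600 list, which is not shown) into non-overlapping `(start, end)` cut ranges, in seconds on the original timeline. `Cue`, `map_time` and `apply_cuts` are hypothetical names. Each subtitle time is moved earlier by the total duration cut before it; a subtitle whose display time falls entirely inside a cut collapses to zero length and is dropped.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds on the original timeline
    end: float
    text: str


def map_time(t: float, cuts: list[tuple[float, float]]) -> float:
    """Map a time on the original timeline onto the edited timeline.

    Every cut that starts before t removes min(t, cut_end) - cut_start
    seconds, so a time that falls inside a cut collapses onto the cut point.
    Assumes the cuts do not overlap.
    """
    removed = sum(min(t, end) - start for start, end in cuts if start < t)
    return t - removed


def apply_cuts(cues: list[Cue], cuts: list[tuple[float, float]]) -> list[Cue]:
    """Drop subtitles whose whole display time was cut; retime the rest."""
    kept = []
    for cue in cues:
        start, end = map_time(cue.start, cuts), map_time(cue.end, cuts)
        if end > start:  # part of this subtitle's display time survives
            kept.append(Cue(start, end, cue.text))
    return kept


cues = [Cue(1.0, 3.0, "A"), Cue(10.0, 12.0, "B"), Cue(20.0, 22.0, "C")]
edited = apply_cuts(cues, cuts=[(8.0, 15.0)])
# "B" lay entirely inside the cut and is removed; "C" moves 7 s earlier:
# [Cue(start=1.0, end=3.0, text='A'), Cue(start=13.0, end=15.0, text='C')]
```

A subtitle that only partly overlaps a cut is kept but shortened, which a QC pass may want to flag. For material extended for commercial breaks, the same mapping applies with the inserted durations added rather than subtracted.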

Where more complex repurposing edits are made to video for secondary platforms, emerging tools can analyze the edited program audio and compare it with the original subtitle text. Such subtitle re-sync tools have the potential to remove significant time and cost from generating repurposed subtitles for IP streaming services.
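The internals of commercial re-sync tools are not described here, so the following is only a sketch of the underlying idea. It assumes a speech recognizer has already produced `(word, start_time)` pairs of bare words for the edited audio. It aligns the subtitle words with the recognized words using Python's standard `difflib.SequenceMatcher` and takes each subtitle's new start time from its first matched word. `normalize` and `resync` are hypothetical names. A production tool would also need to tolerate recognition errors, re-derive end times and handle large edits more robustly.

```python
import difflib
import re
from typing import Optional


def normalize(text: str) -> list[str]:
    """Lower-case words with punctuation stripped, for loose matching."""
    return re.findall(r"[a-z0-9']+", text.lower())


def resync(cue_texts: list[str],
           asr_words: list[tuple[str, float]]) -> list[Optional[float]]:
    """Estimate a new start time for each subtitle from recognized speech.

    cue_texts: subtitle texts in their original order.
    asr_words: (word, start_seconds) pairs from a speech recognizer run on
               the edited audio (a hypothetical input format).
    Returns one start time per subtitle, or None if none of its words
    were matched (for example, because that passage was cut).
    """
    sub_words, owner = [], []  # flattened subtitle words, and the cue each came from
    for i, text in enumerate(cue_texts):
        for word in normalize(text):
            sub_words.append(word)
            owner.append(i)
    heard = [word.lower() for word, _ in asr_words]

    matcher = difflib.SequenceMatcher(a=sub_words, b=heard, autojunk=False)
    starts: list[Optional[float]] = [None] * len(cue_texts)
    # Matching blocks come back in order, so the first hit for a cue is
    # its earliest matched word.
    for block in matcher.get_matching_blocks():
        for k in range(block.size):
            cue = owner[block.a + k]
            if starts[cue] is None:
                starts[cue] = asr_words[block.b + k][1]
    return starts


asr = [("um", 0.2), ("good", 0.5), ("evening", 0.9),
       ("here", 2.0), ("is", 2.2), ("the", 2.3), ("news", 2.5)]
print(resync(["Good evening.", "Here is the news."], asr))  # [0.5, 2.0]
```

The recognizer's extra "um" is simply left unmatched, which is the point of using a sequence alignment rather than a word-by-word comparison.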

Repurposing subtitle files leads to the third major challenge for multi-platform media operators: understanding and managing subtitle file formats. Once content repurposing begins, it becomes necessary to maintain master subtitle files in each of the primary regional subtitle formats (closed caption files, Teletext subtitle files and DVB subtitle files), as well as in the formats required by the secondary streaming servers. These are most commonly derived from either DFXP or EBU-TT, both XML formats for “timed text” based on the W3C Timed Text Markup Language (TTML), which carry the time-coded text and presentation metadata for each subtitle.
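To make the structure concrete, the sketch below writes cues as a bare TTML document using Python's standard `xml.etree.ElementTree`. It is a minimal skeleton under stated assumptions: it uses the TTML 1.0 namespace, clock-time values with milliseconds and the hypothetical helper names `clock_time` and `to_ttml`. It omits the styling, layout and metadata that real DFXP or EBU-TT profiles require.

```python
import xml.etree.ElementTree as ET

TTML_NS = "http://www.w3.org/ns/ttml"            # TTML 1.0 namespace
XML_NS = "http://www.w3.org/XML/1998/namespace"  # for xml:lang
ET.register_namespace("", TTML_NS)  # serialize TTML elements without a prefix


def clock_time(seconds: float) -> str:
    """Format seconds as a TTML clock-time value, HH:MM:SS.mmm."""
    ms = round(seconds * 1000)
    hh, rem = divmod(ms, 3_600_000)
    mm, rem = divmod(rem, 60_000)
    ss, ms = divmod(rem, 1000)
    return f"{hh:02d}:{mm:02d}:{ss:02d}.{ms:03d}"


def to_ttml(cues: list[tuple[float, float, str]], lang: str = "en") -> str:
    """Serialize (start, end, text) cues as a bare TTML document."""
    tt = ET.Element(f"{{{TTML_NS}}}tt", {f"{{{XML_NS}}}lang": lang})
    body = ET.SubElement(tt, f"{{{TTML_NS}}}body")
    div = ET.SubElement(body, f"{{{TTML_NS}}}div")
    for start, end, text in cues:
        p = ET.SubElement(div, f"{{{TTML_NS}}}p",
                          {"begin": clock_time(start), "end": clock_time(end)})
        p.text = text
    return ET.tostring(tt, encoding="unicode")


print(to_ttml([(1.0, 3.5, "Hello")]))
```

The output is a `tt` root carrying `xml:lang`, containing `body`, `div` and one `p` element with `begin="00:00:01.000"` and `end="00:00:03.500"`: the time-coded text at the core of both DFXP and EBU-TT.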

No “one size fits all”

There is no “one size fits all” subtitle file management process that suits every media broadcast operation. However, automated subtitle file conversion is becoming a necessity in media management for larger multichannel operations. Conversion can take place at several points in the subtitle workflow.

Subtitle workstations will generally support the export of subtitle files to a selection of formats, file-based subtitle encoding tools offer file conversion, and, increasingly, third-party Digital Media Asset Management (DMAM) platforms provide at least some basic subtitle file conversion capability.
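Whichever tool performs it, conversion usually means reading a source format into a neutral list of cues and then writing that list out in the target format. Below is a minimal sketch of the reading half for a DFXP- or EBU-TT-style (TTML) file. It assumes only `begin`/`end` attributes in clock-time form (`HH:MM:SS`, `HH:MM:SS.fff` or `HH:MM:SS:FF`), with an assumed default of 25 fps for the frames form. `parse_clock` and `ttml_to_cues` are hypothetical names.

```python
import re
import xml.etree.ElementTree as ET

P_TAG = "{http://www.w3.org/ns/ttml}p"  # TTML 1.0 paragraph element
# HH:MM:SS, optionally followed by .fraction or :frames
CLOCK = re.compile(r"(\d+):(\d{2}):(\d{2})(?:\.(\d+)|:(\d+))?$")


def parse_clock(value: str, frame_rate: int = 25) -> float:
    """Parse a TTML clock-time value into seconds.

    frame_rate is only used for the HH:MM:SS:FF form; 25 is an assumed
    default, whereas a real document declares it with ttp:frameRate.
    """
    m = CLOCK.match(value)
    if not m:
        raise ValueError(f"unsupported time expression: {value!r}")
    hh, mm, ss, fraction, frames = m.groups()
    seconds = int(hh) * 3600 + int(mm) * 60 + int(ss)
    if fraction:
        seconds += int(fraction) / 10 ** len(fraction)
    elif frames:
        seconds += int(frames) / frame_rate
    return float(seconds)


def ttml_to_cues(doc: str, frame_rate: int = 25) -> list[tuple[float, float, str]]:
    """Read every <p> element into a neutral (start, end, text) cue list."""
    root = ET.fromstring(doc)
    cues = []
    for p in root.iter(P_TAG):
        # itertext() yields the text around <br/> and <span> children;
        # joining with spaces and re-splitting normalizes the whitespace.
        text = " ".join(" ".join(p.itertext()).split())
        cues.append((parse_clock(p.get("begin"), frame_rate),
                     parse_clock(p.get("end"), frame_rate), text))
    return cues


SAMPLE = """<tt xmlns="http://www.w3.org/ns/ttml" xml:lang="en">
  <body><div>
    <p begin="00:00:01.500" end="00:00:04.000">First line<br/>second line</p>
    <p begin="00:00:05:12" end="00:00:07:00">Frame-based timing</p>
  </div></body>
</tt>"""
print(ttml_to_cues(SAMPLE))
```

Keeping the intermediate representation this small means each new target format only needs a writer for the neutral cue list, not a converter from every other format.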

Deciding when to “bind” subtitles to each video asset is an important step in building subtitle management into modern media facilities. Traditionally, subtitles were either encoded onto the master video tapes using edit suite hardware, a form of “early binding,” or encoded into the video signal at the time of transmission, a form of “live subtitle binding.” The transition from tape to file-based video has created a new opportunity to early-bind subtitles using new software tools that encode subtitles into video files.

Both early and live subtitle binding have advantages and disadvantages. Early binding gives QC staff or automated processes time to double-check the presence and integrity of pre-encoded subtitles. Live binding, however, is the better-understood mechanism and handles late-arriving video and subtitle content more efficiently.

New opportunity

The arrival of a new generation of generic, IT-server-based broadcast server platforms offers new opportunities for both early and live subtitle binding. Subtitle files may be pre-encoded into video files before they reach the broadcast server, or they may be merged by the video server itself at the time of preview or transmission. Subtitle encoding software typically supports all common video file and wrapper formats, including MXF, MOV and other specific video file implementations. (See Figure 2.)

Figure 2. New platforms mean a new workflow for subtitling, one that expands insertion options from early to live settings.

As previously described, early binding of subtitles to video files allows time to QC the subtitled media. Operators can typically browse “transmission ready” video files and manually check that subtitles are present, aligned with the video content and in the correct languages. Deploying automated subtitle QC tools can make this process more thorough and less resource-intensive, and can add value such as checking subtitles against profanity dictionaries for unsuitable content.
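Several of these checks are simple to automate once subtitles are available as a list of cues. A minimal sketch, assuming `(start, end, text)` tuples in seconds and an operator-supplied word list; the function names and the specific timing rules (positive duration, no overlap with an earlier cue, nothing displayed after the program ends) are illustrative assumptions. Checking that subtitles are in the correct language would need language metadata or detection and is not shown.

```python
import re


def profanity_hits(cues, word_list):
    """Return (cue_index, word) for every cue word that appears in word_list.

    cues are (start, end, text) tuples; word_list is an operator-supplied
    dictionary of unsuitable words (hypothetical here).
    """
    banned = {w.lower() for w in word_list}
    hits = []
    for i, (_, _, text) in enumerate(cues):
        for word in re.findall(r"[a-z']+", text.lower()):
            if word in banned:
                hits.append((i, word))
    return hits


def timing_errors(cues, program_duration):
    """Indices of cues with non-positive duration, overlap with an earlier
    cue, or display time running past the end of the program."""
    errors = []
    latest_end = 0.0
    for i, (start, end, _) in enumerate(cues):
        if end <= start or start < latest_end or end > program_duration:
            errors.append(i)
        latest_end = max(latest_end, end)
    return errors


def basic_qc(cues, program_duration, word_list):
    """Combine presence, timing and profanity checks into one result."""
    hits = profanity_hits(cues, word_list)
    bad_timing = timing_errors(cues, program_duration)
    return {
        "present": bool(cues),
        "timing_errors": bad_timing,
        "profanity": hits,
        "passed": bool(cues) and not bad_timing and not hits,
    }


cues = [(0.0, 2.0, "Oh heck, not again"), (3.0, 5.0, "All fine here")]
print(basic_qc(cues, program_duration=60.0, word_list=["heck", "darn"]))
# {'present': True, 'timing_errors': [], 'profanity': [(0, 'heck')], 'passed': False}
```

Returning the offending cue indices, rather than a bare pass/fail, lets an operator jump straight to the subtitles that need attention.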

The final challenge is to ensure that any pre-encoded or time-of-air generated subtitle streams are in a format supported by the targeted service platforms and consumer devices. In most cases, downstream video compression systems will be designed to convert the broadcast video signals, with their encoded subtitles and captions, into the various video and streaming formats required. These systems can extract the subtitle streams and transcode them in real time into the format each platform needs, such as DVB subtitles. For on-demand video services, processes need to be in place to transfer the appropriate subtitle files to the streaming platforms.

There is no doubt that the provision of hard-of-hearing and language subtitling has become a significant piece of the multi-platform broadcasting puzzle. Great care and thought need to go into designing the most efficient means of generating and broadcasting pre-prepared and live subtitles for linear and on-demand content. Editing video for each platform has consequences for its subtitles, and decisions must be made about how best to repurpose the original subtitle files for the edited video. File conversion systems need to address the requirements of each service delivery platform, and each facility must consider which combination of early and live subtitle binding makes the most sense.

Summary

In conclusion, subtitling for multi-platform video services is a complex topic, indeed. However, emerging technologies in areas such as voice-to-text “re-speaking” software for live subtitling, automated resynchronization and QC of repurposed subtitle files, and early binding techniques to encode subtitle data into video files, all contribute greatly to reducing the time and human resources required to provide such subtitle services.

—Gordon Hunter is head of sales at Softel.