CCD and CMOS

After two decades of use, we are all familiar with basic CCD technology. During this period, the most recent development has been CCD sensors that support HD. CMOS sensors, like CCDs, are made from silicon. However, as the name implies, a complementary metal oxide semiconductor manufacturing process is used. Today CMOS is the most common method of making processors and memories. More on that later. First, we'll look at SD CCDs.

Interlaced scanning

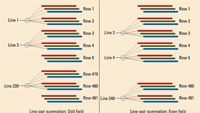

Obtaining interlace video from a CCD employs a process called line-pair summation, which increases sensitivity by one stop. The process may be implemented by a circuit external to a CCD or within a dual-line CCD. In the former case, all rows are read out each field time. In the latter case, alternating fields of odd or even rows are read out each field time.

In either case, a two-line window slides down through the rows in increments of two lines. The window starts with the top row for odd fields and the second row for even fields. Each pair of rows within the window is added, thereby increasing sensitivity by +6dB. (See Figure 1.)
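The sliding window described above can be sketched in a few lines of Python. This is only an illustration: the function name `line_pair_sum`, the list-of-lists sensor model and the tiny 6-row test pattern are assumptions made for the example, not part of any camera design.

```python
def line_pair_sum(rows, field):
    """Return one interlaced field built by line-pair summation.

    rows  : list of sensor rows, top row first; each row is a list of pixel values
    field : "odd" starts the window at the top row, "even" at the second row
    """
    start = 0 if field == "odd" else 1
    out = []
    # Slide a two-row window down the sensor in increments of two rows.
    for top in range(start, len(rows) - 1, 2):
        upper, lower = rows[top], rows[top + 1]
        # Adding the pair doubles the signal for uniformly lit rows.
        out.append([a + b for a, b in zip(upper, lower)])
    return out


# Hypothetical 6-row, 2-pixel-wide sensor in which each pixel holds its row number.
sensor = [[r, r] for r in range(1, 7)]
odd_field = line_pair_sum(sensor, "odd")    # rows 1+2, 3+4, 5+6
even_field = line_pair_sum(sensor, "even")  # rows 2+3, 4+5
```

Note that the odd and even fields are built from overlapping row pairs, which is what gives the window its filtering effect.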

The window acts as a filter that reduces vertical resolution by about 25 percent, thereby minimizing interline flicker and interline twitter. The filter creates an interlace coefficient of about 0.75, which means a 480-row CCD can output only about 360 lines of effective vertical resolution.
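The two figures quoted so far, +6dB and 360 lines, follow from simple arithmetic. The sketch below assumes the usual video convention of expressing gain as 20·log10 of the signal ratio; the function names are made up for the example.

```python
import math


def summation_gain_db(rows_summed=2):
    # Summing two rows doubles the signal; express that ratio in dB.
    return 20 * math.log10(rows_summed)


def effective_lines(sensor_rows, interlace_coefficient=0.75):
    # Effective vertical resolution after the two-line window has filtered the image.
    return sensor_rows * interlace_coefficient


gain_db = summation_gain_db()   # about 6.02 dB, i.e. one stop
lines = effective_lines(480)    # 360.0
```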

Progressive scanning

When progressively scanned video is required, a CCD is read out at the frame rate. However, some dual-line CCDs can output all rows if they are clocked at half-speed (for example, 30Hz rather than 60Hz). These CCDs nicely support cameras that record 60i and 30p video.


Progressive scanning does not employ line-pair summation; therefore, progressive video has a 1.00 interlace coefficient. Without line-pair summation, sensitivity is not increased.

HD CCDs

An SD, dual-line CCD with a resolution of 720 × 480 outputs about 173,000 pixel samples per readout. At 60i, that is a readout rate of roughly 10 million samples per second. An HD camera uses CCDs that output at a significantly higher rate. For example, at 60i, a 1440 × 1080 CCD must deliver about 47 million samples per second.

CCDs running at very high clock rates consume a great deal of power. Small CCDs (typically those under 2/3in) cannot easily dissipate the heat produced by high power consumption. When, for example, a camera captures full HD at 60i and 30p, plus 720p60 and 720p30, one option is to use 1920 × 1080 chips running at 60Hz. Unfortunately, each chip must then deliver a whopping 125 million samples per second. One solution: CMOS sensors that consume far less power and thus generate far less heat.
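The rates quoted above can be reproduced by counting pixel samples. The sketch below assumes that a dual-line CCD reads half of its rows per field at 60i and that the 1920 × 1080 chip reads every row 60 times a second; `readout_rate` is a hypothetical helper, and blanking intervals are ignored.

```python
def readout_rate(width, rows_per_readout, readouts_per_second):
    """Pixel samples per second leaving the sensor (blanking ignored)."""
    return width * rows_per_readout * readouts_per_second


sd_60i = readout_rate(720, 480 // 2, 60)      # 10,368,000  (about 10 million)
hd_60i = readout_rate(1440, 1080 // 2, 60)    # 46,656,000  (about 47 million)
full_hd_60 = readout_rate(1920, 1080, 60)     # 124,416,000 (about 125 million)
```

The jump from SD to full-raster HD at 60Hz is a factor of 12 in samples per second, which is why clock rate and heat become the limiting factors.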

CMOS sensors

Most CMOS imagers use active pixel sensor (APS) technology, which requires at least three transistors per pixel. Each pixel incorporates a photodiode to collect light, a reset transistor, a row select (column bus) transistor and an amplifier transistor. (See Figure 2.)

An image capture begins when a trigger is sent to all reset transistors within an addressed row to prepare the row's photodiodes to capture light. Upon the reset trigger, the transistor closes and pulls up the photodiode to VDD, thereby clearing any photodiode charge. All photodiodes in a row are reset at the same instant. The reset begins an integration period. During this period, the amount of light falling on a photodiode determines how much charge accumulates in its potential well.

The integration period ends when a signal is sent to all row select transistors in an addressed row. Row select transistors pass the accumulated photodiode charges onto column busses. Each amplifier transistor acts as a “source follower” that allows a photodiode charge to be sampled as a voltage.

All photodiodes in a row are simultaneously connected to all column busses. Each column bus terminates in a bus transistor that charges a sample-and-hold capacitor. The capacitor stores the voltage while a column waits for its turn to be output. When it is time to output a column, a transfer transistor passes the stored voltage to an operational (OP) amplifier.

It is not possible to instantaneously output each row after sending it down the column busses. The time required to read out all pixels in a row is called row readout time (RRT). Rows, of course, are read out from a chip in a top-to-bottom sequence.

Adding additional ports to a CMOS sensor reduces RRT by simultaneously outputting multiple samples from a row.
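As a rough illustration of why extra ports help, RRT can be modeled as the number of pixels in a row divided by the number of samples shifted out per second. This is a simplified model, not a description of any particular chip: the function name and the 74.25MHz pixel clock are assumptions chosen only for the example.

```python
def row_readout_time(pixels_per_row, pixel_clock_hz, ports=1):
    """Seconds needed to shift one row out of the chip (simplified model)."""
    # Each port outputs one sample per clock, so N ports move N samples per clock.
    return pixels_per_row / (pixel_clock_hz * ports)


one_port = row_readout_time(1920, 74.25e6)            # about 25.9 microseconds
two_ports = row_readout_time(1920, 74.25e6, ports=2)  # half of the one-port time
```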

Exposure control

When a camera operator sets a shutter speed, he or she is setting the integration period. For example, with a shutter speed of one-sixtieth second, the integration time is about 16.7ms.

A CMOS chip's top-to-bottom exposure process operates much as a vertical focal plane shutter does in a modern film camera. (See Figure 3.) A curtain (red) is released to start its downward travel at the beginning of the exposure time.

When the first curtain has completed its travel, the film frame is fully exposed. When the exposure time ends, a second curtain (blue) is released to begin its downward travel to close off the film.

When a film camera is set to a short exposure time, the first curtain will not have traveled far before the second curtain starts chasing it down the frame. The narrow traveling slit formed by the gap between the two curtains exposes the film.

When a scene contains little motion, the difference in time between exposing the top and bottom of a frame causes no harm. However, when the scene contains motion, the time difference between the top and bottom exposure is captured on film as a rolling shutter artifact.

Because a CMOS sensor's rows are processed — reset and output — with an offset equal to RRT, a CMOS sensor also exposes each frame in a top-to-bottom pattern. The row exposure offset creates a rolling shutter skew that matches the direction of the object's movement. (See Figure 4.)
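A minimal model shows where the skew comes from. If row r starts its exposure r × RRT after the top row, a vertical edge moving horizontally at a constant speed is recorded a little farther along its path in each successive row. The RRT and speed values below are hypothetical, and the function name is invented for the sketch.

```python
def rolling_shutter_skew(rows, rrt_seconds, speed_px_per_s):
    """Horizontal displacement, in pixels, of a moving vertical edge at each row."""
    # Row r is exposed r * RRT later than the top row, so the edge has
    # moved speed * r * RRT pixels by the time that row is captured.
    return [speed_px_per_s * row * rrt_seconds for row in range(rows)]


# Hypothetical 1080-row sensor, 15-microsecond RRT, edge moving 2000 pixels/second.
skew = rolling_shutter_skew(rows=1080, rrt_seconds=15e-6, speed_px_per_s=2000)
top_to_bottom = skew[-1]  # about 32 pixels of lean, in the direction of motion
```

Reversing the sign of the speed reverses the lean, which is why the skew follows the direction of the object's movement.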

The row exposure offset also is responsible for wobble (a stretchy look when a camcorder is subjected to sudden motion), partial frame exposure (from camera flashes) and fluorescent flicker (shifting, scrolling bands of color or flickering dark lines).

Noise reduction

The inherent design of CMOS chips is responsible for many types of picture noise. Dark current noise is one type of fixed pattern noise (FPN). Each photodiode has its own unique level of charge even when there is no light falling on it. Therefore, a matrix of photodiodes has a matrix of noise values. FPN is also introduced by tiny differences among amplifier transistors. These amplifier differences introduce errors as photodiode charges are converted to voltages.

FPN can be attenuated by a noise reduction technique called correlated double sampling (CDS). CDS requires two readouts of each photodiode. The first readout is performed immediately after a row is reset and is a measure of a pixel's noise. The second readout occurs after the integration period and is a measure of a pixel's noise plus signal.

At the bottom of each column, one sample-and-hold stores the noise voltage. After the integration period, a second sample-and-hold for a column stores the signal-plus-noise voltage.

To output each column, every column is addressed one-by-one. The two stored signals are fed to an OP amplifier. Each OP amplifier subtracts the noise voltage from the signal-plus-noise voltage, thereby yielding a signal voltage.
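The subtraction at the heart of CDS can be sketched as follows. The per-column values are made-up integers (think of them as millivolts), and the sketch assumes the offset measured at reset is identical to the offset present in the second sample, which is the idealized case.

```python
def correlated_double_sample(reset_levels, exposed_levels):
    """Subtract each column's reset sample from its signal-plus-noise sample."""
    return [exposed - reset for reset, exposed in zip(reset_levels, exposed_levels)]


# Hypothetical row of four columns.
fixed_offsets = [12, 7, 15, 9]        # first sample-and-hold: noise only
true_signal = [100, 100, 40, 0]       # what the scene actually delivered
exposed = [n + s for n, s in zip(fixed_offsets, true_signal)]  # second sample-and-hold

recovered = correlated_double_sample(fixed_offsets, exposed)   # [100, 100, 40, 0]
```

Only noise that is the same in both samples cancels this way. Noise that changes between the two readouts is not removed by the subtraction.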

Image readout

A CMOS device has the unique capability to employ both row and column addressing to create a window within the photodiode matrix. A small window can be used to capture slow-motion video. For example, a 960 × 360 pixel window within a 1920 × 1080 sensor requires less than 17 percent of the entire sensor be read out, allowing the readout to occur up to 6X faster.
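The window arithmetic is easy to verify. The helper below is a hypothetical illustration that assumes readout time scales directly with the number of pixels read.

```python
from fractions import Fraction


def window_fraction(window, sensor):
    """Fraction of the sensor's pixels that fall inside the readout window."""
    (win_w, win_h), (sen_w, sen_h) = window, sensor
    return Fraction(win_w * win_h, sen_w * sen_h)


fraction = window_fraction((960, 360), (1920, 1080))  # Fraction(1, 6), about 16.7 percent
speedup = 1 / fraction                                # Fraction(6, 1), i.e. up to 6X
```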

For a CMOS camera to support interlace video, its chips are clocked at the field rate. Then:

- An external circuit performs line-pair summation that converts each frame to a field. Through this process, sensitivity is increased +6dB, and the interlace coefficient drops to 0.75, thereby minimizing flicker and twitter.

- Cameras that employ vertical pixel-shift or vertical interpolation can simply discard alternate fields and record the field not discarded. Line-pair summation is not required because the image is inherently slightly soft. Sensitivity is the same for both interlace and progressive operation.

All column signal voltages are loaded into an analog shift register where pixel information is shifted to output port(s). In this design, analog-to-digital (A/D) conversion is external to the CMOS chip. (See Figure 5.) To decrease image noise, internal A/D conversion can be employed. In this design, a CMOS chip's output path is digital rather than analog. (See Figure 6.)

Sony Exmor technology employs a massive amount of circuitry between each column bus OP amplifier and the chip's output port(s). (See Figure 7.) Each column has its own A/D converter. From this point forward, the data path is digital — not analog — thereby reducing image noise.

Key CCD/CMOS differences

The obvious difference between these technologies is that CMOS sensors inherently have no vertical smear. Therefore, when using CMOS-based cameras you can shoot just as you would were you shooting film. However, the latest CCD chips significantly decrease the appearance of vertical smear. (See Figure 8.)

Clearly, rolling-shutter artifact is the Achilles' heel of CMOS technology. This artifact can be eliminated by implementing a global shutter. However, this requires an additional transistor within each pixel that decreases a chip's fill factor — thereby reducing its sensitivity or forcing a reduction in the number of pixels.

CMOS sensors typically offer a maximum exposure time of one-fiftieth or one-sixtieth second. To obtain greater sensitivity, as is done with CCDs, video gain can be increased. When even greater sensitivity is required, some CMOS cameras offer the option to specify a number of accumulated frames. Frame accumulation defines total photodiode integration time as a multiple of the current interframe interval.

CMOS accumulated modes do not suffer from increased image noise, but can have significant blur on moving objects. The image in Figure 9 was shot using 16-frame accumulation.
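To put numbers on frame accumulation, the sketch below computes the total integration time and the gain it would take to reach the same signal level electronically. It assumes a 60Hz frame rate, a signal that grows linearly with integration time, and the same 20·log10 convention behind the +6dB figure quoted earlier; the function names are invented for the example.

```python
import math


def accumulated_integration_time(frames, frame_rate_hz):
    """Total photodiode integration time, in seconds, for N accumulated frames."""
    return frames / frame_rate_hz


def equivalent_gain_db(frames):
    """Video gain that would produce the same signal increase (linear model)."""
    return 20 * math.log10(frames)


integration = accumulated_integration_time(16, 60)  # about 0.267 second
gain = equivalent_gain_db(16)                       # about 24 dB, or four stops
```

An integration time of more than a quarter second explains the blur on moving objects: anything that moves during that interval is smeared across the frame.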

CCDs typically obtain super high sensitivity by engaging hyper gain. The result is a significant increase in image noise. Motion, however, remains clear. (See Figure 10.)

These high sensitivity modes are quite different and support different shooting goals.

Forecast

Both CCD and CMOS sensors will be used for years to come. Moreover, both technologies will employ multiple implementation strategies to meet performance goals and price points.

Steve Mullen is owner of Digital Video Consulting, which provides consulting services and publishes a series of books on digital video technology.
