Routing switchers

Effective routing of video and its associated audio is one of the most critical functions in modern broadcasting operations, and routing technology has become considerably more complex in the past 10 years. When specifying a routing switcher, systems engineers face numerous challenges related to the simultaneous use of multiple video and audio formats on a variety of physical interconnections. The adoption of HD video formats and increasingly dense audio formats has increased the overall complexity of these multiformat systems, which are also expected to accommodate audio and video processing. Internal router processing creates system and operational efficiencies by simplifying control, and a flexible input/output arrangement allows easy reconfiguration between uses.

Today’s complex routing environment

A decade ago, when broadcast signals were primarily in SD with associated discrete AES stereo audio signals, routing was relatively simple. All it took was a separate router for each signal type, two control levels and an occasional need to synchronize the signals.

The emergence of HD broadcasting has opened the doors to massive improvements in picture quality as well as the home audio experience through surround-sound technology, but at the same time has created a requirement to manage and route many more audio channels. Now, each video signal typically requires a stereo track, a surround track and sometimes different language or regional audio tracks. In fact, it’s not out of the question to expect an 1152 x 1152 video router to support a 36,864 x 36,864 embedded audio matrix.
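The audio matrix figure follows from simple multiplication. A minimal sketch, assuming every video port carries 32 embedded mono channels (an assumption that matches the 36,864 figure but is not stated outright above); the function name is hypothetical:

```python
def embedded_audio_matrix_size(video_ports: int, channels_per_video: int) -> int:
    """Return the number of mono audio sources (or destinations) when every
    video port carries the same number of embedded audio channels."""
    if video_ports < 0 or channels_per_video < 0:
        raise ValueError("port and channel counts must be non-negative")
    return video_ports * channels_per_video


# Assumed: 32 embedded mono channels on each of the 1152 video ports.
size = embedded_audio_matrix_size(video_ports=1152, channels_per_video=32)
print(f"{size:,} x {size:,}")  # 36,864 x 36,864
```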

Embedding the audio tracks in the video signal simplifies signal transport, but the two signal types still need to be separated for production applications. The resulting physical connections can include AES on coaxial or balanced-pair cable, as well as multiple channels of audio in multichannel audio digital interface (MADI) streams (typical for audio consoles).

With the emergence of Dolby E as the de facto compressed audio format in professional surround-sound applications, many video feeds contain embedded Dolby E. This reduces the number of physical connections but introduces additional complexities since Dolby E is data and not audio, and therefore can’t be routed or mixed without additional signal processing. Also, no part of a Dolby E packet can be changed without loss of at least a video frame’s worth of audio. Dolby E must never be sample-rate converted or subject to sample slips.

On a basic level, a router passes all signals from input to output transparently, but it’s not that simple in modern production environments. Most large routers come equipped with hybrid capabilities that enable routing of video and audio within the same chassis and accept a mixture of embedded and discrete audio signals. With smaller routers, however, architectural and input/output flexibility matter more than the ability to route multiple signal formats.


Overlaying these complexities is the commercial pressure to reduce the costs of installation, operation and maintenance. Fiber cable is lighter, less lossy and is now available at a lower cost than copper coaxial cable, but fiber transmitters and receivers at each end remain more costly than their coax counterparts, at least for now. These are the factors that keep systems engineers up at night as they plan and purchase routing equipment to deal with the migration from SD to HD and then 3-D and beyond.

Coaxial cable vs. fiber

The cost of low-loss coaxial cable is as much as 10 times the cost of single-mode fiber, and with today’s 3G data rates, coax cable runs can’t exceed 100m unless they’re boosted by distribution amplifiers, which take up space and add to the capital and operational expense. Increasing volumes of fiber in the broadcast market and the introduction of small-form-factor pluggable (SFP) fiber transmitters and receivers, available for as little as €60, have reduced the cost of implementing a fiber interface. The high cost of fiber termination equipment and the lack of highly skilled labor have been the only true barriers to fiber’s adoption.

However, to make an informed choice of physical interconnection, it’s important to understand the unique challenges of broadcast video signals.

Networking uses data coding techniques that, in electrical signals, keep the DC offset close to 0V. Video uses a scrambled non-return-to-zero inverted (NRZI) code that can result in long runs of logic 0 or 1, the so-called pathological signal. This situation changes the DC level of a signal in the electrical domain and, unfortunately, has a similar effect in the optical domain. Optical transmitters attempt to maintain an average optical power output using a system called automatic power control (APC). This is done by monitoring the output power and adjusting the laser bias current as required. An uneven mark/space ratio, as seen in pathological signals, affects this biasing and results in transmission errors.

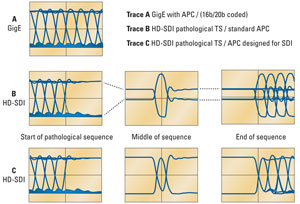

Figure 1 shows three traces from an optical oscilloscope connected to the output of a laser transmitter. Trace A is a GigE data stream with roughly a 50/50 mark/space ratio that demonstrates excellent stability with APC.

Figure 1. Laser TX optical output traces

Trace B shows the effect of an HD-SDI pathological test signal applied to a similar laser transmitter with a standard APC circuit as used in the Ethernet transmitter. The extended string of 19 “data low” bits followed by one “data high” bit fools the APC circuit into thinking that the temperature has abruptly changed or that there has been a rapid degeneration of the laser diode. The APC system kicks in, rapidly adjusting the laser bias current and resulting in large transients and “overshoots” at its output. (See Figure 1, Trace B, middle of sequence.) Even worse is an extended string of 19 “data high” bits followed by one “data low” bit. This time, the occasional low bit passes below the laser’s threshold current and causes the laser to exhibit relaxation oscillations, as well as turn-on delay. (See Figure 1, Trace B, end of sequence.) The transients and overshoots in the middle and at the end of the pathological sequence are so pronounced that the vertical gain of the oscilloscope must be reduced by a factor of 4 to fully display them.

A possible solution might have been to simply increase the timing window of the APC system. In reality, the solution requires more complex “burst mode” techniques to properly overcome the problem. (See Figure 1, Trace C.) Trace C shows what happens when the same HD-SDI signal is applied to a transmitter with a new APC circuit designed for low-mark-density applications. High signal fidelity is restored.
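To see why the pathological patterns upset a circuit that expects a roughly even mark/space ratio, it can help to put numbers on them. The sketch below is illustrative only: it does not model the SDI scrambler or any real APC circuit, and the bit patterns are idealised versions of the 19-plus-1 sequences described above. The function names are hypothetical.

```python
def mark_density(bits):
    """Fraction of bits that are high (marks)."""
    if not bits:
        raise ValueError("bit sequence must not be empty")
    return sum(bits) / len(bits)


def longest_run(bits):
    """Length of the longest run of identical consecutive bits."""
    if not bits:
        return 0
    longest = current = 1
    for prev, cur in zip(bits, bits[1:]):
        current = current + 1 if cur == prev else 1
        longest = max(longest, current)
    return longest


# Idealised test patterns (assumptions for illustration, not captured data).
balanced = [0, 1] * 500               # roughly 50/50, like Trace A
low_heavy = ([0] * 19 + [1]) * 50     # 19 lows followed by one high
high_heavy = ([1] * 19 + [0]) * 50    # 19 highs followed by one low

for name, bits in [("balanced", balanced),
                   ("low-heavy", low_heavy),
                   ("high-heavy", high_heavy)]:
    print(f"{name}: mark density {mark_density(bits):.2f}, "
          f"longest run {longest_run(bits)}")
```

A control loop that averages optical power over a short window sees the mark density fall to about 5 percent or rise to about 95 percent on these patterns, which is why it misreads them as a change in the laser itself.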

A similar situation occurs in receivers, where the bias circuitry is affected by excessive runs of 0s and 1s. Most manufacturers in the broadcast market use a mechanically coded SFP cage that prevents Ethernet SFP modules from being fitted to video products, specifically so that networking modules cannot be used inadvertently.

Consequently, it is now common for video to be transported over a mixture of coaxial cable and fiber.

The ideal router enables individual channels to be changed between inputs and outputs and between coaxial cable and fiber. The ability to replace inputs with outputs (or vice versa) offers the most flexibility, allowing routing systems to grow as required and not be limited by fixed rear-panel arrangements or frame architecture.

De-embedding inputs and embedding outputs

Another important capability for a multiformat router is support for different timing scenarios, ranging from video signals that are asynchronous to one another to audio and Dolby E sources that are asynchronous to the video and to one another, with similar scenarios for outputs. The router must be capable of routing signals asynchronous to one another transparently and creating synchronous signals as required. For example, there are a couple of choices if several audio feeds are needed to make up one output consisting of video with embedded audio. The router can frame-synchronize the video and sample-rate convert the audio feeds to a local reference on the inputs, and then embed them on the outputs. This is probably the cleanest solution, but the frame synchronizers and sample-rate converters must handle Dolby E transparently.

Alternatively, the system can route asynchronous signals through the router and embed them on the outputs. This choice requires output cards to accommodate any combination of untimed signals and to re-embed audio and Dolby E correctly, with no loss of data. It also means that when a number of feeds must be retimed to, for example, a playout area, the frame synchronizers need to be on the outputs.
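Whichever side does the retiming, the rule that Dolby E must never be sample-rate converted has to be applied per feed. The following is a minimal sketch of that decision, assuming a hypothetical `AudioFeed` description of each source; it is not any router's actual control API, and the step descriptions are illustrative labels only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AudioFeed:
    name: str
    is_dolby_e: bool           # Dolby E is data, not audio
    locked_to_reference: bool  # already synchronous to the local reference


def retiming_step(feed: AudioFeed) -> str:
    """Choose how a feed is brought into time before it is embedded."""
    if feed.locked_to_reference:
        return "pass through unchanged"
    if feed.is_dolby_e:
        # Sample-rate conversion would corrupt the Dolby E data.
        return "realign to video without sample-rate conversion"
    return "sample-rate convert to local reference"


# Hypothetical feeds making up one embedded output.
feeds = [
    AudioFeed("studio stereo PCM", is_dolby_e=False, locked_to_reference=True),
    AudioFeed("remote stereo PCM", is_dolby_e=False, locked_to_reference=False),
    AudioFeed("remote Dolby E", is_dolby_e=True, locked_to_reference=False),
]

for feed in feeds:
    print(f"{feed.name}: {retiming_step(feed)}")
```

Keeping the decision per feed, rather than per card or per group, is what allows PCM and Dolby E sources to be mixed on the same output.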

Channel independence

A modern routing system must have the flexibility to change physical build, signal format, sample rate and timing with the minimum of effort or cost. Channels must be fully independent to enable this adaptability.

Fixing groups of signals to one reference or one format severely restricts the flexibility of a routing system. If channels are not independent in this way, inputs may need to be doubled up to provide an SD block and an HD block in the router, thus increasing cost, effectively reducing the router capacity and adding to the system’s complexity.

If Dolby E is to be embedded into video and the two signals are asynchronous, the Dolby E must be realigned to the video, and synchronism must be maintained by adjusting guard band samples. This could happen with any audio signal, so each channel must operate independently from all others.

Signal processing

Today’s most sophisticated hybrid routers go beyond simply routing signals. As the heart of the broadcast operation, larger routers should offer some type of processing. Building processing into the router has the potential to replace many frames of modular products, resulting in huge space and weight savings, as well as reductions in installation costs and ongoing power requirements. But processing is needed for both inputs and outputs.

Looking ahead

Once installed, routers are often in use 24 hours a day, for many years. Replacing one is a complex logistical operation, so it’s wise to think to the future and ensure the equipment will support new technologies as they emerge.

The capacity to route 32-channel audio is one requirement that exists today. In fact, one major European broadcaster regularly deals with four stereo and four surround tracks for each video signal, totalling 32 mono channels per video. In this case, the audio is embedded as part PCM and part Dolby E. A non-Dolby system also has significant benefits: it avoids the latency of the decode-encode stages, so video frame delays are not needed to maintain lip synchronization. Therefore, a router should have the capacity to de-embed 32 channels of audio and the ability to route all of these signals.
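The 32-channel total can be checked with a short calculation. This sketch assumes each surround track is 5.1 (six mono channels), which is consistent with the total quoted but is not stated explicitly; the names are hypothetical.

```python
# Mono channels per track type. The 5.1 layout for surround is an assumption.
CHANNELS_PER_TRACK = {"stereo": 2, "surround_5_1": 6}


def mono_channels(track_counts: dict) -> int:
    """Total mono channels for a mapping of track type to number of tracks."""
    return sum(CHANNELS_PER_TRACK[kind] * count
               for kind, count in track_counts.items())


# Four stereo plus four surround tracks per video signal.
total = mono_channels({"stereo": 4, "surround_5_1": 4})
print(total)  # 32
```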

One day, large-scale monolithic routers will be superseded by networking. Until then, will we see a world in which IP-streaming inputs and outputs are options and routers include BNC, SFP or RJ45 inputs and outputs?

And finally, just as we come to grips with 1080p, it’s becoming likely that we will soon be dealing with 4K. This is currently defined as multiple 3G signals, but will 4K eventually become a single fiber interconnect? Flexible architectures with individually replaceable modules mean that this type of change can be accommodated in chassis on the market today. Since the chassis is the difficult thing to replace, it’s imperative that the router be designed and built to accommodate such developments.

In summary

We have considered a range of challenges facing systems designers when choosing a routing system. There are many applications and specific requirements, so it’s difficult to pinpoint the exact configuration needed for each application. In this article, we have highlighted the possible scenarios that may exist, both in physical interconnects and in formats and timing of signals.

—Alan Smith is project manager for routing at Snell.