M&E Faces up to the Promise and Challenges of Generative AI

Recent hype illustrates the promise and peril

This year has witnessed an explosion in the use and discussion of artificial intelligence (AI). It is, of course, something that has been around since the 1950s, but since the end of last year, coverage has ranged from the serious to the hysterical, particularly over its latest manifestation, generative AI (Gen AI).

This more advanced form of AI can create many kinds of content, from text and images to audio and synthetic data, that appears convincingly human rather than obviously machine-made. At the forefront is ChatGPT (the GPT stands for generative pre-trained transformer), which, although launched only in November 2022, has already radically changed the direction of automated speech, text and image creation.

Fast and Precise

As is often the case with “new” technology, many of the features of AI have been available to broadcasters and media producers for the past decade.

“We’ve been implementing AI in our tools for years, so it’s not something we’ve jumped on with ChatGPT,” says Andre Torsvik, vice president of product marketing at Vizrt. “AI is used to make computers do what they are best at, which is being fast and precise. Human beings can find that difficult to do with, for example, outdoor keying on a sports field. An AI can react much faster and the end result is a much better key, so you don’t see flickering or ads on top of players.”

The most common applications for Gen AI in broadcast include:

- image generation and video synthesis

- automated production

- assisted video editing

- creation of metadata for automatic “logging”

- subtitling, captioning, segment-specific searches and re-use of media assets

- automatic upscaling of content to higher resolutions

- encoding/decoding of audio and video streams

Simon Forrest, principal technology analyst at Futuresource Consulting, comments that AI is capable of assisting artists and producers to create content more quickly, enabling “faster iteration, more exploration and delivering media assets that approach a harmonized composition.”

Another assistive application of AI comes in the form of improved archive searches. This has been a particular area of research at the Fraunhofer Institute for Intelligent Analysis and Information Systems (IAIS). Dr Christoph Schmidt, head of Fraunhofer IAIS’s Speech Technologies Unit, explains that this goes beyond keyword-based search, with Gen AI and natural language processing providing “improved ways to find relevant archival content to produce new programs.”


Efficient and Dynamic

In addition to reducing the number of repetitive tasks performed by operations staff and allowing creatives to do their jobs faster and better, Peter Sykes, strategic technology manager at Sony Europe, sees AI as “making the whole media supply chain as efficient and dynamic as possible, helping analyze and then address resource allocation and process steps to optimize them, leading to better business decisions.”

Sony established an AI division in 2020, focusing on projects in the areas of imaging and sensing, gaming, gastronomy (robotic manipulation of food and cooking utensils) and AI ethics.

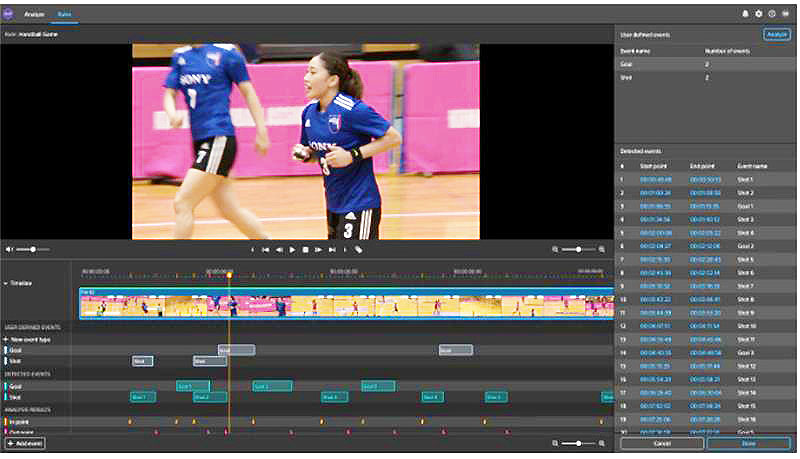

For broadcasting, Sony offers its A2 Production system, which, among other features, can identify sports highlights using automated logging and scene detection features. It is also applying AI to software-defined networks through the VideoIPath media orchestration platform developed by its Nevion subsidiary.

Ross Video implements AI across two of its main business groups: robotic cameras and newsroom systems. Both are integrating AI into their product lines, with robotics using proprietary technology built into its products, while news relies on third-party AI engines.

Jenn Jarvis, product manager for newsroom computer systems at Ross Video, says this gives customers a choice of which engine they want to use and a framework for how they integrate it into a product. “We’re also now looking at the content creation side, which is part of the newer aspects of AI we’re still exploring and seeing how it fits into a news workflow,” she said.

For robotics, Karen Walker, vice president of camera motion systems, observes that the person managing shot selection will still have some work to do in keeping the presenter in frame or in focus.

“But the next thing for AI, and a lot of people have come out with this, is a ‘talent tracking’ application,” she says. “You don’t have to have any human intervention, so it’s possible to set up presets for where you want the talent to be in that shot. This is done independently of the talent and can be configured for different presenters. It takes away some of that manual intervention and I think it’s where AI has benefits, in taking out some of the mundane tweaking.”

Reducing Tedium

Removing, or at least reducing, the dull but necessary tasks in live production by employing AI is now a realistic proposition. This is illustrated by Rob Gonsalves, engineering fellow at Avid, who gives the example of OpenAI’s Whisper speech-to-text model.

“It can be applied for live transcription and real-time translation of multiple languages within a broadcast feed, toggling through as many as 100 languages simultaneously,” he says.

Avid has also tested both OpenAI’s CLIP model and GRoIE, a generic region of interest (ROI) extractor, as part of research into auto-framing.

“This followed the area of a shot that is of the greatest semantic interest,” Gonsalves explains. “The traditional way to enable search is to manually annotate media assets with metadata tags describing what is in the shot. Using AI object or facial recognition can now automate the scanning and annotation process. Semantic search does something similar but, by creating embeddings into clips, it allows an editor to conduct a free-text search for scenarios.”

Sepi Motamedi, global industry marketing lead for professional broadcast at NVIDIA, comments that “live production, particularly for sports, takes tremendous advantage” of AI. “It is used in super-slow motion replays to localize advertisements seen on the pitch, to quickly generate highlights from the game, to deliver an added layer of data through telestration [which involves pitch calibration and player tracking] and, of course, camera tracking.”

Among the first developers to apply AI to broadcast from the outset was Veritone. Founded in 2014, the company offers an enterprise AI operating system platform, aiWARE, along with engines for ChatGPT, audio, biometrics, speech, data and vision.

“We realized there was an opportunity in the media and entertainment markets to begin to index the world’s audio and video content,” comments Paul Cramer, managing director of media and broadcast at Veritone. He adds that once material is indexed, Gen AI can be used to “create a new personalized experience for the consumer” who is looking for custom content. This could be in the form of news footage tailored for a viewer with an interest in, for example, space exploration.

From Broad to Niche

As AI is adopted more widely throughout the media sector, it is being used for both very niche and quite broad applications. Moveme.tv illustrates a highly specialized use: its search platform is designed to help viewers match films to their mood through the use of descriptive words and emojis. Founder and chief executive Ben Polkinghome says the ultimate aim is to enable people to create “their own hyper-personal entertainment channels.”

On a wider broadcast level, the BBC’s R&D department initiated its AI in Media Production program in 2017 with a prototype video editing package that automatically selected and assembled shots into a finished piece. This work continues today and was expanded last year with a new data set for “Intelligent Cinematography” to assist in framing and editing. BBC R&D is due to announce its position on Gen AI soon but could not give any more details before TV Tech went to press.

AI is now exploited by media creators and organizations of all types and sizes, both new and old. Video marketing platform Vimeo announced in June that it was introducing a Gen AI-powered “creation suite” that simplifies the process of making videos. The package includes a script generator, teleprompter and text-based editing system that automatically deletes filler words and long pauses.

On the more traditional side, the All England Lawn Tennis Club is using the Gen AI capabilities of IBM’s Watson platform to produce commentary for video highlights of the 2023 Wimbledon championships on its app and website.

Despite this spirit of innovation, AI has caused unease among media professionals, both over potential job losses and over the risk of the technology being misused. Major news outlets including “The New York Times” and NBC News recently voiced concern that Gen AI could not only make journalists redundant but also enable unscrupulous types to produce fake but believable stories.

The FCC has its own working group on AI, whose initial focus has included using the technology to improve the commission’s services, such as managing spectrum more efficiently. The commission is hosting a joint workshop this month with the National Science Foundation “to discuss the possibilities and dangers AI presents for the telecommunications and technology sectors.”

In the U.K., media regulator Ofcom, while acknowledging that Gen AI offers benefits such as synthetic data training for better safety technology, has similarly highlighted the dangers of bogus news and other media content. Ofcom is currently monitoring the development of Gen AI to see how its positive aspects can be maximized and also what threat the more negative ones might pose.

As for the human impact, Karen Walker at Ross Video observes that “a lot of creative things have to be done by humans—people are going to work with AI side-by-side.”

Veritone’s Paul Cramer concludes: “Gen AI won’t replace humans but it will replace the humans who are not using AI.”

Kevin Hilton has been writing about broadcast and new media technology for nearly 40 years. He began his career as a radio journalist but moved into magazine writing during the late 1980s, working on the staff of Pro Sound News Europe and Broadcast Systems International. Since going freelance in 1993 he has contributed interviews, reviews and features about television, film, radio and new technology for a wide range of publications.