The Practical Side of HDR

Hello, everyone, welcome back. In my last posting, we examined the various scientific (SI) units used to measure many of the properties of light, most of them related to brightness or luminance. We needed that background to make sense of the specifications we come across in our daily lives as media professionals, but in particular I wanted to define exactly what is meant by the term “nit” (a colloquialism for “candela per square meter,” if you recall). The reason is that the brightness of today’s monitors is most often specified in nits.

As is often the case, some of the parameters of a transmitted signal were actually defined by dated technology. For example, we still have drop-frame timecode, which arose from the creation of the NTSC color television standard, even though we have moved on significantly from the technology that mandated such a complex solution. That historical perspective is also responsible for what we would now consider to be “Standard Dynamic Range.”

THE UPPER LIMITS

To date, moving images distributed to the various platforms have been constrained to an upper limit of 100 nits. That is because 100 nits is approximately the maximum light output that was achievable from a standard CRT display. Try buying a new CRT-based television set today!

Despite the obsolescence of the CRT as the ubiquitous display technology, SDR programs are still calibrated to fit in this limited range. To do that, the extremes of the signal – those close to black and close to white – end up being crushed (down and up, respectively) in order to maximize the linearity of the end-to-end system in its midrange.

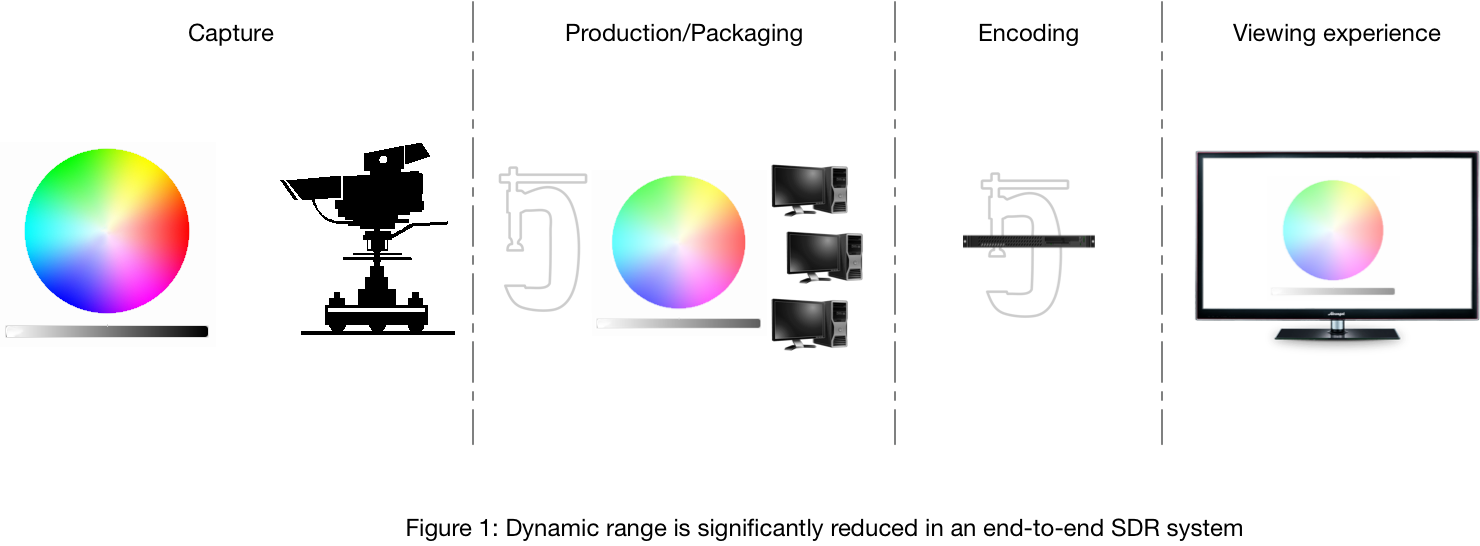

Modern flat panel displays, on average, offer a maximum light output of 500 nits, and manufacturers are already offering new sets that boast 1,000 nits. But, and here’s the important part, the majority of the video that will be delivered to them is SDR and has been conformed to fit into a 0 to 100 nit range. Thus, most of the fine detail in those extremes is lost forever. The images, when captured, are actually already raw HDR, but that dynamic range is crushed at several stages of the production and delivery process (Fig. 1).

QUALITY IN, QUALITY OUT

There is a very important point here – once that detail has been crushed, you can’t get it back. So even if you display it on a 500-nit screen, you are not getting HDR. Let me be perfectly clear: HDR does not just mean brighter whites. Setting the white point of an SDR image to 500 nits on your screen does not gain you extra detail. Think about this in audio terms: you can put an MP3 signal into a very powerful, very expensive high-fidelity audio system and crank up the volume, but the quality of the audio doesn’t get any better – it just gets louder!
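To make the point concrete, here is a minimal sketch in Python. It assumes a hard clip at 100 nits as a stand-in for the SDR conform (real pipelines roll off highlights more gently), and the sample luminance values and function names are invented for illustration. It shows that turning up the brightness afterwards rescales the surviving values but does not bring back the highlights that were merged together.

```python
def conform_to_sdr(scene_nits, sdr_peak=100.0):
    """Clip scene luminance values (in nits) to the SDR ceiling.

    A hard clip is an assumed simplification of the 'crushing' step.
    """
    return [min(value, sdr_peak) for value in scene_nits]


def boost(values, gain):
    """Scale every value by the same gain, like turning up the display."""
    return [value * gain for value in values]


# Hypothetical scene luminances, from deep shadow to a bright highlight.
scene = [0.5, 40.0, 100.0, 250.0, 600.0, 1000.0]

sdr = conform_to_sdr(scene)      # highlights above 100 nits collapse together
bright_sdr = boost(sdr, 5.0)     # SDR white now lands at 500 nits

print("distinct levels in scene:     ", len(set(scene)))
print("distinct levels after conform:", len(set(sdr)))
print("distinct levels after boost:  ", len(set(bright_sdr)))
```

The three highlight samples that were distinct in the scene all end up at the same value after the conform, and they are still identical after the boost; only their shared level has changed.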

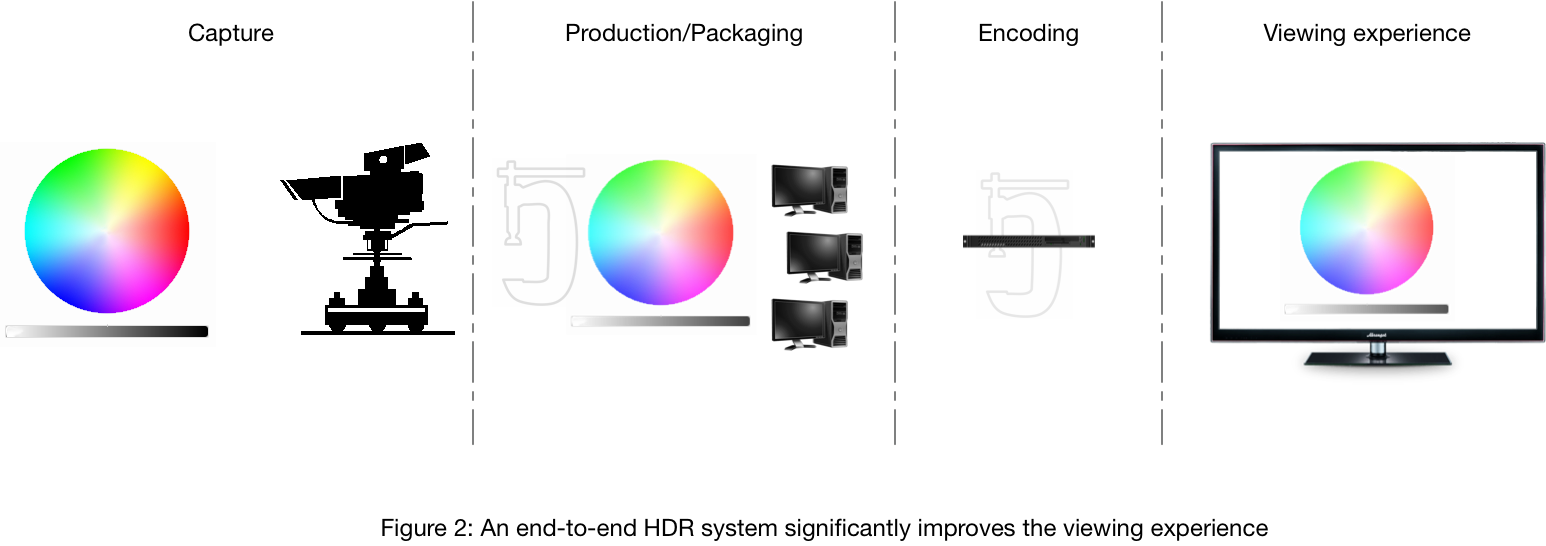

In order to provide you with a higher fidelity (i.e. HDR) image on your screen, the delivery system has to possess a higher dynamic range from the point of ingest all the way to the point of display. (Fig. 2 acknowledges that we live in the real world; there is a practical limit to how much resolution – spatial (pixel count), temporal (frame rate) and HDR/WCG – we can deliver to the mass audience within reasonable economic constraints. HDR is an attempt to deliver the most faithful imagery we can currently deliver, for the greatest impact on the customer experience.)

We must acknowledge that HDR is currently in its infancy. There are compromises in each of the display technologies available today. LCD screens are transmissive – they require a backlight in order to produce a viewable image. You can certainly increase the brightness of the backlight to produce white levels in excess of 1,000 nits, but an LCD panel has a fixed internal contrast ratio, so increasing the level of the backlight also increases the light level produced by “black” pixels.
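The arithmetic behind that trade-off can be sketched in a few lines of Python. The 1000:1 contrast ratio below is an assumed, illustrative figure rather than a measurement of any particular panel, and the function name is hypothetical. With a fixed ratio, the black level is simply the white level divided by that ratio, so it rises in step with the backlight.

```python
def black_level_nits(peak_white_nits, contrast_ratio):
    """Light leaked by a 'black' pixel on a panel with a fixed contrast ratio."""
    if contrast_ratio <= 0:
        raise ValueError("contrast_ratio must be positive")
    return peak_white_nits / contrast_ratio


ASSUMED_CONTRAST_RATIO = 1000.0  # hypothetical 1000:1 panel, for illustration only

for peak_white in (100.0, 500.0, 1000.0):
    black = black_level_nits(peak_white, ASSUMED_CONTRAST_RATIO)
    print(f"white {peak_white:7.1f} nits -> black {black:.2f} nits")
```

Under this assumption, a tenfold increase in peak white brings a tenfold increase in the black level, which is why raising the backlight alone does not extend the dynamic range.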

Manufacturers have come up with several schemes to modulate the backlighting in areas which are supposed to be black, with varying degrees of success. OLED screens are emissive, and do have the extended dynamic range capabilities, but struggle to produce the higher light levels, especially over long periods of time.

This last point is taken into account in all of the proposed HDR standards – metadata provided with the signal lets the set know just how bright the average image is and will also specify just how bright the brightest pixel in the entire program is, so that a display can adjust its behavior in order to protect itself from premature burn-out.
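As a rough illustration of the two figures described above, the following Python sketch scans a program and reports the brightest single pixel and the highest frame average. It is a simplification under stated assumptions: each frame is a plain list of pixel luminances in nits, the sample data is invented, and the function name is hypothetical. Actual HDR formats define their own metadata fields and measurement rules, which this sketch does not attempt to reproduce.

```python
def light_level_summary(frames):
    """Return (brightest pixel, highest frame-average) for a program.

    frames: a non-empty list of frames, each a non-empty list of pixel
    luminance values in nits (an assumed, simplified representation).
    """
    if not frames or any(len(frame) == 0 for frame in frames):
        raise ValueError("need at least one frame, and no empty frames")
    brightest_pixel = max(max(frame) for frame in frames)
    highest_frame_average = max(sum(frame) / len(frame) for frame in frames)
    return brightest_pixel, highest_frame_average


# Three tiny hypothetical frames, three pixels each.
program = [
    [0.1, 50.0, 120.0],
    [5.0, 400.0, 950.0],
    [20.0, 80.0, 200.0],
]

peak, average = light_level_summary(program)
print(f"brightest pixel: {peak:.1f} nits")
print(f"highest frame average: {average:.1f} nits")
```

A display that receives these two numbers ahead of the picture knows the worst case it will be asked to reproduce, and can plan its drive levels accordingly.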

We will discuss these proposed standards in a future posting, but first we’ll examine just what is meant by “Wide Color Gamut” and discuss the various standards currently in use in both standard dynamic range HD (i.e. today’s signals) and in the proposed HDR formats.

Paul Turner is founder of Turner Media Consulting and can be reached at pault@turnerconsulting.tv.