Amazon Automates Media Analysis for Rekognition Video

Detects black frames, end credits, shot changes and color bars

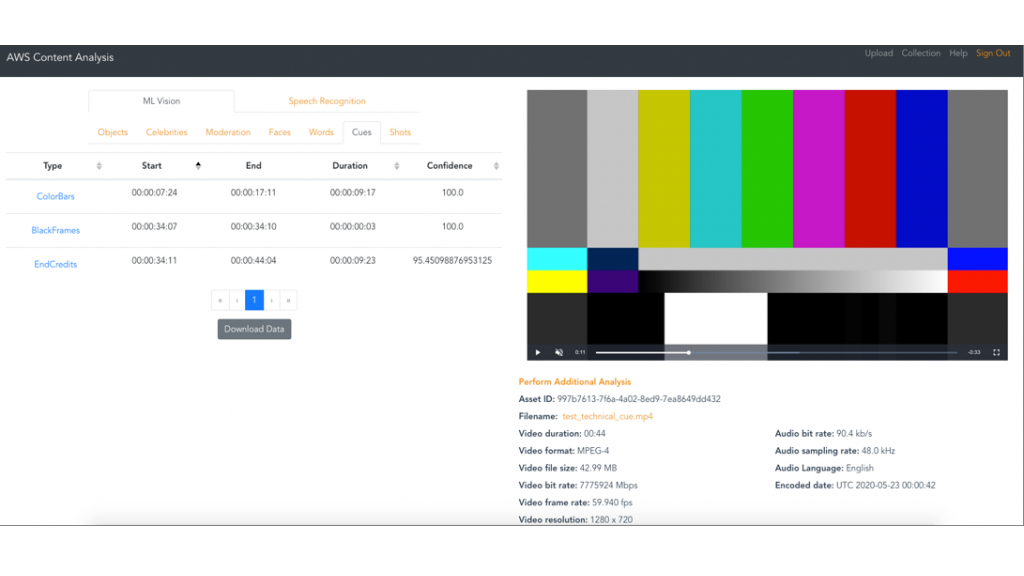

SEATTLE—Amazon has added new features to its Rekognition Video machine learning analysis service that allow it to automate media analysis tasks, specifically the detection of black frames, end credits, shot changes and color bars, using fully managed, ML-powered APIs.

With these features, users can execute workflows such as content preparation, ad insertion and the addition of “binge-markers” to content at scale in the cloud. Examples include detecting a short run of empty black frames with no audio, which demarcates an ad insertion slot or the end of a scene, and identifying the start of end credits to implement interactive viewer prompts like “Next Episode.”
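As a rough illustration of how such markers might be derived, the sketch below filters a list of detected segments for black-frame runs and end credits. The field names (`TechnicalCueSegment`, `StartTimestampMillis`, and so on) follow our understanding of the response returned by the service's segment detection API (in boto3, `get_segment_detection`) and should be treated as assumptions; the sample data, the helper names and the 90 percent confidence threshold are hypothetical.

```python
from typing import Iterator, List, Optional

# Hypothetical sample shaped like a segment detection result (not real output).
SAMPLE_SEGMENTS = [
    {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 0, "EndTimestampMillis": 9000,
     "TechnicalCueSegment": {"Type": "ColorBars", "Confidence": 99.0}},
    {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 600000, "EndTimestampMillis": 602000,
     "TechnicalCueSegment": {"Type": "BlackFrames", "Confidence": 98.5}},
    {"Type": "SHOT", "StartTimestampMillis": 602000, "EndTimestampMillis": 640000,
     "ShotSegment": {"Index": 0, "Confidence": 97.0}},
    {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 1500000, "EndTimestampMillis": 1560000,
     "TechnicalCueSegment": {"Type": "EndCredits", "Confidence": 96.0}},
]


def technical_cues(segments: List[dict], cue_type: str,
                   min_confidence: float = 90.0) -> Iterator[dict]:
    """Yield technical-cue segments of one type at or above a confidence threshold."""
    for seg in segments:
        cue = seg.get("TechnicalCueSegment")
        if cue and cue.get("Type") == cue_type and cue.get("Confidence", 0.0) >= min_confidence:
            yield seg


def ad_slot_midpoints_ms(segments: List[dict]) -> List[int]:
    """Return the midpoint (in ms) of each black-frame run as a candidate ad slot."""
    return [
        (seg["StartTimestampMillis"] + seg["EndTimestampMillis"]) // 2
        for seg in technical_cues(segments, "BlackFrames")
    ]


def next_episode_prompt_ms(segments: List[dict]) -> Optional[int]:
    """Return the earliest end-credits start (in ms), or None if none was detected."""
    starts = [seg["StartTimestampMillis"] for seg in technical_cues(segments, "EndCredits")]
    return min(starts) if starts else None


if __name__ == "__main__":
    print("Ad slots (ms):", ad_slot_midpoints_ms(SAMPLE_SEGMENTS))
    print("Show 'Next Episode' at (ms):", next_episode_prompt_ms(SAMPLE_SEGMENTS))
```

In a real workflow the segment list would come from the service rather than a hard-coded sample, and the threshold would be tuned to the content.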

In addition, the ability to detect shot changes from one camera to another can be used to create promotional videos from selected shots, generate high-quality preview thumbnails and insert ads without disrupting the viewer experience. SMPTE color bars can also be detected so that they can be removed from VOD content, or to flag issues such as loss of broadcast signal in a recording.

Amazon says that Rekognition Video provides the exact frame number when it detects a relevant segment of video. It handles various video frame rate formats, such as drop frame and fractional frame rates. Using the service allows tasks to be automated or the review workload for human operators to be reduced, says Amazon. The company charges only for the minutes of video analyzed.
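To show why drop frame handling matters when working with frame numbers, here is a minimal sketch of the standard SMPTE drop-frame counting scheme for 29.97 fps video, which skips two timecode labels at the start of every minute except each tenth minute. This is the general convention, not a description of Amazon's implementation, and the function name is hypothetical.

```python
def frame_to_dropframe_timecode(frame_number: int) -> str:
    """Convert a zero-based frame index to 29.97 fps drop-frame timecode (HH:MM:SS;FF)."""
    drop = 2                                  # labels skipped per dropped minute
    frames_per_min = 60 * 30 - drop           # 1798 actual frames in a dropped minute
    frames_per_10min = 10 * 60 * 30 - 9 * drop  # 17982 actual frames per 10 minutes

    tens, rem = divmod(frame_number, frames_per_10min)
    # Add back the skipped labels so plain base-30 arithmetic gives the display value.
    skipped = drop * 9 * tens
    if rem > drop:
        skipped += drop * ((rem - drop) // frames_per_min)
    label = frame_number + skipped

    ff = label % 30
    ss = (label // 30) % 60
    mm = (label // (30 * 60)) % 60
    hh = label // (30 * 60 * 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d};{ff:02d}"


if __name__ == "__main__":
    for n in (0, 1799, 1800, 17982):
        print(n, frame_to_dropframe_timecode(n))
```

The semicolon before the frame field is the usual way of marking a timecode as drop frame; non-drop-frame timecode uses a colon there.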

Amazon also describes Rekognition Video as a service that detects people and faces. Facial recognition technology is the focus of a new bill being proposed in the Senate and House that would ban its use by law enforcement because of systemic issues and bias. Amazon had already announced a one-year moratorium on law enforcement use of its Rekognition technology.

For more information, visit aws.amazon.com.
