Video and signal conversion, part 1



In previous articles, I have covered multiviewers, routers and specific topics such as AC-coupling capacitors, the evolution of SFP modules and fiber-optic theory. One important aspect I haven’t covered yet is conversion.

In a perfect world, interfaces and standards would interact fluidly and perfectly. Unfortunately, this is not the case, and if history is any guide, new advances in technology will perpetuate this situation. One key requirement for an SFP is absolute interoperability: a simple SFP cage allows easy interchange among multiple modules that bridge a wide range of standards.

This article is dedicated to conversion, a broad topic with many implementation options: rack-based products, card-based products, dongles and, of course, SFPs.

Because this topic has great depth, it will be divided into two parts. This first part introduces signal conversion, as opposed to format/video conversion, and looks at techniques for converting between electrical and optical signals. It also reviews basic video standards conversion techniques.

In part two, we’ll examine implementations of graphics format converters (VGA, DVI, HDMI and others), as well as the familiar up/down/cross-conversion systems.

Diversity

There are so many options for converter features, performance and packaging that it is impossible to describe all the functions and details in one article. A quick market survey indicates that users prefer modular, or glue, products. Although they can take time to reconfigure (cabling and firmware settings), they are easier to upgrade than rack-based products and usually cost less. As requirements for video production and processing change, the required conversion features change as well. Upgrading to better features and functions is a never-ending process; nothing is ever perfect.

Optical converter


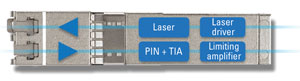

The optical converter is usually targeted at video signals running at 270Mb/s, 1.5Gb/s and 3Gb/s, and soon 6Gb/s and 11.88Gb/s (12G-SDI), but it can support data rates from 15Mb/s to 11.88Gb/s, depending on the configuration of its internal signal processing blocks. (See Figure 1.)

Figure 1. Electrical-optical transceiver–converter. Images courtesy Embrionix

This is the simplest optical-to-electrical and electrical-to-optical converter. The basic blocks for the electrical-to-optical converter are:

- Laser driver. The electrical digital signal feeds the laser driver, which generates output currents that bias the laser for the correct average power output and modulate it to produce the digital 1s and 0s. The video pathological signal has a large DC component, so the laser driver must support this unbalanced power pattern. (See Figure 2.)

- Laser or LED. This device converts electrical current into photons, or light. Optical output power rises in proportion to the drive current (for a laser, to the current above its lasing threshold). An article covering the detailed physics of this conversion will be published later in Broadcast Engineering.

Figure 2. Pathological signal (See “Understanding blocking capacitor effects,” Broadcast Engineering magazine, August 2011)
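To make the bias and modulation roles of the laser driver concrete, here is a minimal Python sketch of an idealized laser operating above threshold. The threshold current, slope efficiency and drive currents are hypothetical values chosen for illustration, not figures from any particular device. The 5% mark density is only a stand-in for a run-heavy, DC-unbalanced pattern; it is not the exact pathological signal.

```python
# Idealized laser light-current (L-I) model; all numbers below are hypothetical.
import math

THRESHOLD_MA = 10.0     # assumed lasing threshold current
SLOPE_MW_PER_MA = 0.1   # assumed slope efficiency above threshold

def optical_power_mw(current_ma: float) -> float:
    """Output power is ~0 below threshold and linear in (I - Ith) above it."""
    return max(0.0, SLOPE_MW_PER_MA * (current_ma - THRESHOLD_MA))

def link_levels(bias_ma: float, modulation_ma: float):
    """Return (P0, P1) in mW: bias sets the '0' level, bias + modulation the '1' level."""
    return optical_power_mw(bias_ma), optical_power_mw(bias_ma + modulation_ma)

def average_power_mw(p0: float, p1: float, mark_density: float) -> float:
    """Average optical power for a pattern whose fraction of 1s is mark_density."""
    return mark_density * p1 + (1.0 - mark_density) * p0

p0, p1 = link_levels(bias_ma=15.0, modulation_ma=20.0)
er_db = 10 * math.log10(p1 / p0)  # extinction ratio in dB
print(f"P0={p0:.2f} mW, P1={p1:.2f} mW, extinction ratio={er_db:.2f} dB")
print(f"balanced (50% ones): {average_power_mw(p0, p1, 0.5):.2f} mW")
print(f"run-heavy (5% ones): {average_power_mw(p0, p1, 0.05):.2f} mW")
```

The point of the comparison is that the same driver settings give a noticeably different average power when the bit pattern is unbalanced, which is why the driver's power control has to cope with DC-heavy content.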

For the optical-to-electrical converter, the blocks are:

- Photodiode. It receives the photons and converts them to an electrical current output.

- Trans-impedance amplifier (TIA). The photodiode current is small, typically in the microamp range, so the TIA converts it to a voltage and amplifies it, with the gain designed to keep the TIA output distortion-free. This voltage feeds a high-gain limiting amplifier, which converts the low-level TIA output into digital 1s and 0s.
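A quick back-of-the-envelope calculation shows the signal levels involved at the receiver. This Python sketch assumes a photodiode responsivity of 0.9 A/W (a plausible figure for an InGaAs photodiode at 1310 nm, but an assumption here) and a hypothetical 2 kΩ transimpedance gain; real receivers differ.

```python
# Back-of-the-envelope receiver levels; responsivity and TIA gain are assumed values.

def dbm_to_mw(power_dbm: float) -> float:
    """Convert optical power from dBm to milliwatts."""
    return 10 ** (power_dbm / 10)

def photocurrent_ua(power_dbm: float, responsivity_a_per_w: float = 0.9) -> float:
    """Photodiode current I = R * P; mW * (A/W) = mA, so scale by 1000 for uA."""
    return dbm_to_mw(power_dbm) * responsivity_a_per_w * 1000

def tia_output_mv(current_ua: float, transimpedance_ohms: float = 2000.0) -> float:
    """Ideal TIA output V = I * Rf; uA * ohm = uV, so divide by 1000 for mV."""
    return current_ua * transimpedance_ohms / 1000

for p_dbm in (0, -10, -20, -30):
    i_ua = photocurrent_ua(p_dbm)
    print(f"{p_dbm:>4} dBm -> {i_ua:8.2f} uA -> {tia_output_mv(i_ua):8.2f} mV")
```

Under these assumptions, even a weak -30 dBm input still produces about 0.9 µA, and the TIA output is only a few millivolts, which is why a high-gain limiting amplifier follows it.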

The photodiode, TIA and limiting amplifier chain ensures distortion-free operation even with the video pathological signal. To minimize jitter, advanced optical converters integrate a reclocker just before the laser driver or right after the limiting amplifier, which yields the best signal quality and the lowest possible bit error rate.
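SDI jitter is usually specified in unit intervals (UI), where one UI is one bit period, so the same UI figure represents far less absolute time at higher rates. This Python sketch converts UI to picoseconds at the nominal SDI rates of 270 Mb/s, 1.485 Gb/s, 2.97 Gb/s and 11.88 Gb/s. The 0.2 UI value is an illustrative number, not a quoted specification limit.

```python
# Convert jitter in unit intervals (UI) to picoseconds at nominal SDI bit rates.

NOMINAL_RATES_BPS = {
    "270 Mb/s": 270e6,
    "1.485 Gb/s": 1.485e9,
    "2.97 Gb/s": 2.97e9,
    "11.88 Gb/s": 11.88e9,
}

ILLUSTRATIVE_JITTER_UI = 0.2  # example value only, not a spec limit

def unit_interval_ps(rate_bps: float) -> float:
    """One UI is one bit period: 1 / bit rate, expressed here in picoseconds."""
    return 1e12 / rate_bps

def jitter_ps(jitter_ui: float, rate_bps: float) -> float:
    """Absolute jitter corresponding to a jitter amount given in UI."""
    return jitter_ui * unit_interval_ps(rate_bps)

for name, rate in NOMINAL_RATES_BPS.items():
    print(f"{name:>11}: 1 UI = {unit_interval_ps(rate):8.1f} ps, "
          f"{ILLUSTRATIVE_JITTER_UI} UI = {jitter_ps(ILLUSTRATIVE_JITTER_UI, rate):7.1f} ps")
```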

Diagnostic functions such as jitter measurement or eye-diagram analysis can be included, along with image and signal processing capability. These advanced features are available as options in some SFPs, giving engineers nonintrusive, real-time signal performance monitoring and status at the edge of their signal distribution network. Engineers can view every signal in the studio and locate exactly where a failure occurs.

Video standards converters

Video standards converters cover a broad range of formats (NTSC, PAL, interlaced and progressive, and computer graphics formats) as well as various signal transports such as DVI, HDMI, USB, DisplayPort and Thunderbolt, and even this list is a subset of existing equipment. Technology advances and the convergence of the worldwide video market have made it necessary to repurpose video assets that may have originated anywhere in the world, from a video camera or a computer, for an application in a different part of the world, for viewing on a different monitor, for storage on a computer disk, or any combination thereof.

One good example is the NTSC or PAL composite video baseband signal (CVBS) to digital decoder, sometimes called a video A/D converter, or simply a converter. CVBS has been in use longer than any other current format, for decades before SDI or any other digital format. The digital transition started nearly 20 years ago, but some studios and production facilities still have large numbers of CVBS feeds, signals and tape assets.

Today, nearly every video signal is distributed digitally to the final viewer. Video production is digital, and playout is digital. But these legacy CVBS signals and tape assets must be converted to digital for compatibility with the new digital infrastructure.

What parameters are important in an analog-to-digital converter? Depending on the application, some matter more than others. Resolution (8, 10 or 12 bits), linearity (differential gain and differential phase), and chroma, luma, gain, hue and brightness control are important in nearly every application to preserve the original picture quality. A long or faulty coaxial cable can seriously degrade CVBS picture quality, as can poor filters and converter chips. Digital video transmission creates far fewer picture artifacts than analog modulation did, but accurate anti-alias filters, linear converters and little or no differential delay between luma and chroma are still required for high-quality on-air pictures.
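To put the 8-, 10- and 12-bit resolution choices in perspective, this Python sketch computes the ideal quantization-limited signal-to-noise ratio two ways: the textbook full-scale sine-wave result (about 6.02N + 1.76 dB) and the peak-to-peak signal to RMS noise convention commonly used for video (about 6.02N + 10.8 dB). These are theoretical ceilings; real converters fall short because of analog noise, nonlinearity and clock jitter.

```python
import math

def sqnr_sine_db(bits: int) -> float:
    """Ideal SQNR for a full-scale sine wave: 10*log10(1.5 * 4**N) ~= 6.02N + 1.76 dB."""
    return 10 * math.log10(1.5 * 4 ** bits)

def sqnr_pp_db(bits: int) -> float:
    """Peak-to-peak full scale (~2**N steps) over RMS quantization noise (q / sqrt(12)):
    20*log10(2**N * sqrt(12)) ~= 6.02N + 10.8 dB."""
    return 20 * math.log10(2 ** bits * math.sqrt(12))

for n in (8, 10, 12):
    print(f"{n:>2} bits: sine {sqnr_sine_db(n):5.1f} dB, p-p/rms {sqnr_pp_db(n):5.1f} dB")
```

Each extra bit buys roughly 6 dB, so moving from 8 to 10 bits raises the quantization ceiling by about 12 dB under either convention.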

Today’s semiconductor technology makes very small converters possible. For example, one SFP for CVBS includes two independent converters in a single SFP package. (See Figure 3.) These fully featured converter chips integrate more features into less space, with less power, than some modular and card-based converters.

Figure 3. CVBS A/D and D/A converter with SDI I/O

The basic blocks are:

- A/D converter. This critical block includes analog signal filtering, and it also determines resolution, linearity and analog noise, so a poorly designed chip degrades overall performance. Composite NTSC or PAL conversion is still a common function. Component RGB signals are still used, but computer graphics formats have rapidly reduced their use. At the same time, DVI, HDMI and other digital video transports have replaced the three coaxial cables of RGB systems with a single cable, albeit one with multiple twisted pairs inside.

- Image processing. This block can perform functions such as audio de-embedding, audio analysis, video analysis and time base correction.

- SDI serializer. This block converts the parallel data from the A/D to serial data. It receives 10-bit or 20-bit video words along with the necessary synchronization information, such as the timing reference signals (TRS: EAV and SAV), and ancillary data such as embedded audio or signal-specific metadata. It adds framing data and converts the parallel data to serial. As the serial data is clocked out, a scrambler randomizes the data to reduce DC content and provide enough transitions for clock recovery, and NRZI channel encoding then makes the signal polarity-insensitive, so a receiver decodes it correctly even if it is inverted.

- D/A converter. This block receives the digital data in parallel, along with video timing and framing signals, and converts it to an analog composite or component signal, using 8, 10 or 12 bits of resolution. Video D/A conversion, or encoding, is far easier to implement than A/D conversion, so this block is less critical than its counterpart in the decoder.

- SDI deserializer. This is the opposite of the serializer. It receives the serial signal, recovers framing, deserializes the data and outputs it as parallel words (10 or 20 bits). Thanks to the sophistication and miniaturization of semiconductors, this block can also de-embed audio signals, provide output sync signals, check for CRC errors, extract other metadata and provide status flags and information about the video signal itself.
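The serializer's channel coding can be sketched in a few lines. SDI uses a self-synchronizing scrambler with generator polynomial x^9 + x^4 + 1, followed by NRZI encoding (x + 1). The Python sketch below works on a plain bit list with an all-zero starting state and ignores word serialization order and framing, so treat it as an illustration of the principle rather than a compliant implementation. It shows that the chain round-trips, and that an inverted signal still decodes apart from a few bits while the descrambler resynchronizes.

```python
import random
from typing import List

def scramble(bits: List[int]) -> List[int]:
    """Self-synchronizing scrambler, G1(x) = x^9 + x^4 + 1:
    out[n] = in[n] XOR out[n-4] XOR out[n-9] (all-zero start state assumed)."""
    hist = [0] * 9  # hist[0] is the previous output bit, hist[8] is nine bits back
    out = []
    for b in bits:
        s = b ^ hist[3] ^ hist[8]
        out.append(s)
        hist = [s] + hist[:-1]
    return out

def descramble(bits: List[int]) -> List[int]:
    """Inverse of scramble(): in[n] = rx[n] XOR rx[n-4] XOR rx[n-9]."""
    hist = [0] * 9  # previous received (still scrambled) bits
    out = []
    for s in bits:
        out.append(s ^ hist[3] ^ hist[8])
        hist = [s] + hist[:-1]
    return out

def nrzi_encode(bits: List[int], level: int = 0) -> List[int]:
    """NRZI, G2(x) = x + 1: a 1 toggles the line level, a 0 holds it."""
    out = []
    for b in bits:
        level ^= b
        out.append(level)
    return out

def nrzi_decode(levels: List[int], prev: int = 0) -> List[int]:
    """A change of level decodes as 1, no change as 0, so polarity does not matter."""
    out = []
    for z in levels:
        out.append(z ^ prev)
        prev = z
    return out

rng = random.Random(1)
data = [rng.randint(0, 1) for _ in range(64)]
line = nrzi_encode(scramble(data))

# Normal polarity: exact round trip.
print(descramble(nrzi_decode(line)) == data)  # True

# Inverted polarity: only the first NRZI-decoded bit is wrong, and the descrambler
# spreads that single error to positions 0, 4 and 9 before it self-synchronizes.
inverted = [1 - z for z in line]
recovered = descramble(nrzi_decode(inverted))
print([i for i, (a, b) in enumerate(zip(recovered, data)) if a != b])  # [0, 4, 9]
```

The limited error spread is the practical benefit of a self-synchronizing design: the receiver needs no shared seed, and a transient error or polarity flip only disturbs a handful of bits.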
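Recovering framing means finding the timing reference signals in the word stream. In 10-bit SD video (ITU-R BT.656 style), each TRS is the sequence 3FF, 000, 000, XYZ, where the XYZ word carries the F (field), V (vertical blanking) and H (0 for SAV, 1 for EAV) bits plus four protection bits. This Python sketch builds and checks XYZ words and scans a single word stream for TRS. It only validates the protection bits rather than correcting errors, and HD-SDI's interleaved Y and C streams are not handled; the filler words in the example stream are arbitrary.

```python
from typing import Dict, List, Optional, Tuple

def make_xyz(f: int, v: int, h: int) -> int:
    """Build a 10-bit XYZ word laid out as 1 F V H P3 P2 P1 P0 0 0."""
    p3, p2, p1, p0 = v ^ h, f ^ h, f ^ v, f ^ v ^ h
    return ((1 << 9) | (f << 8) | (v << 7) | (h << 6)
            | (p3 << 5) | (p2 << 4) | (p1 << 3) | (p0 << 2))

def decode_xyz(word: int) -> Optional[Dict[str, object]]:
    """Return F/V/H if the protection bits are consistent, else None (no correction)."""
    f, v, h = (word >> 8) & 1, (word >> 7) & 1, (word >> 6) & 1
    if word != make_xyz(f, v, h):
        return None
    return {"F": f, "V": v, "H": h, "type": "EAV" if h else "SAV"}

def find_trs(words: List[int]) -> List[Tuple[int, Dict[str, object]]]:
    """Scan for the 3FF 000 000 preamble and decode the XYZ word that follows."""
    hits = []
    for i in range(len(words) - 3):
        if words[i] == 0x3FF and words[i + 1] == 0x000 and words[i + 2] == 0x000:
            info = decode_xyz(words[i + 3])
            if info is not None:
                hits.append((i, info))
    return hits

# Arbitrary filler words around one EAV and one SAV (field 1, active video).
stream = ([0x040] * 4 + [0x3FF, 0x000, 0x000, make_xyz(0, 0, 1)]
          + [0x200] * 2 + [0x3FF, 0x000, 0x000, make_xyz(0, 0, 0)] + [0x040] * 2)
for pos, info in find_trs(stream):
    print(pos, info["type"], "F=%d V=%d" % (info["F"], info["V"]))
```

For example, make_xyz(0, 0, 1) gives 0x274, the familiar 10-bit XYZ word for an EAV in active video of field 1, and make_xyz(0, 0, 0) gives 0x200 for the matching SAV.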

This concludes part one of this series. Be sure to visit the Web version of this article as it links to previous digital video signal tutorials. The second part of this article will appear in the April issue of Broadcast Engineering.

—Renaud Lavoie is president and CEO of Embrionix.