Putting the IOPS Where They Count

A performance measurement commonly used to benchmark hard disk drives, solid-state drives and storage area networks is Input/Output Operations Per Second, or IOPS. Each type of storage device can have its own set of benchmark metrics, and in some cases these can vary dramatically depending on the application the device supports and its physical makeup.

Every millisecond spent in a process affects overall system performance. In our highly software-centric world, designers strive for the fastest possible processing while minimizing latency at every step. This is accomplished through the effective use of software applications and by harmonizing the right components into a complete package (a “system”) that meets the targeted utilization requirements.

As mentioned in past articles, much of the IT world centers on high-volume transaction processing of “structured” data, which is organized, consistent and predictable. With more and more “video” media activity, that model is changing, and systems are now required to manage different forms of data, known as “unstructured data.”

TRANSACTIONAL VS. BATCH PROCESSING

Processing media assets is fundamentally almost the reverse of transactional processing. Transaction processing manages small sets of highly organized data, divided into individual, indivisible operations. Each “transaction” must succeed or fail as a complete unit; it can never be only partially complete.

Transaction processing systems, such as those supporting banking, ATMs, order processing and financial activities, continue to demand more performance and capacity. Most storage performance benchmarks are therefore centered on transactional activities. It’s no wonder that users can now find solutions that achieve, for example, 22 million IOPS from 1 PB (petabyte) of flash storage, all in a single rack.

In the IT world, batch processing, the opposite of transaction processing, handles requests that are cached (prestored) and then executed all at once. Batch processing typically runs unattended. For example, all of a bank’s ATM transactions for a given day are reconciled against its customers’ account records, and the results are reported at some time outside normal business hours. Conversely, transaction processing is interactive and requires user input, such as depositing money into a checking account or booking airline travel, where flight times, destinations and seat selections all depend on the user’s responses.

LOTS OF IOPS NEEDED

Depending upon the application, these activities can require a lot of IOPS, which can be throttled or adjusted based upon priorities, loading and overall system demand. However, the system must be designed to handle peak demand by providing sufficient IOPS for the application’s activities. In transactional activities, storage demand is usually predictable and can be managed consistently, except during failures or unusually high system-wide demand.

Handling media files and content requires a different set of processing concepts, even though some transaction-like activities still occur, such as metadata entry or report management.

Generally, users of magnetic disk and solid-state storage systems are seldom concerned with the processing speeds and latency of individual storage devices. However, if you’re specifying a server for continuous operation where high volumes of transactional or batch activity are necessary, these storage performance metrics become far more relevant.

When the system requirements call for a higher-order RAID configuration (e.g., RAID 5, 6 or 10), the aggregate effects of IOPS, disk rotational speed and latency become key factors. And with increasing solid-state storage integration, there are additional factors related to read/write performance that differ considerably from those of rotating magnetic disks.

FIGURING UP THE IOPS

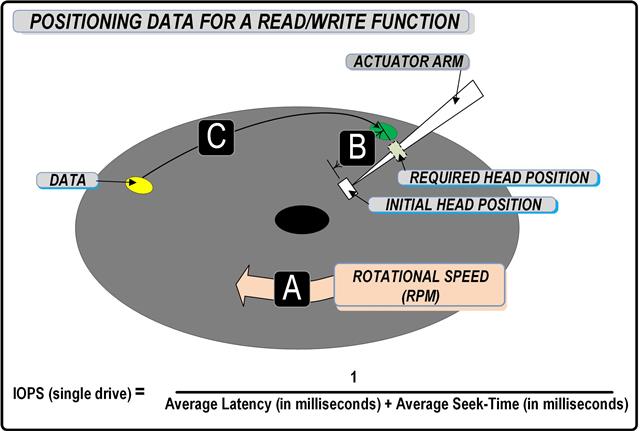

Disk and solid-state storage devices have a theoretical maximum IOPS value based upon certain key factors. For spinning magnetic disks, these factors are rotational speed, average latency and average seek time (Fig. 1). IOPS equals 1/(average latency + average seek time), with both times expressed in seconds; equivalently, 1,000 divided by their sum in milliseconds. Since most enterprise-class storage systems employ several drives arranged as arrays, you then multiply the IOPS of a single drive by the number of drives to obtain the total IOPS figure. For example, if a single drive is capable of 180 IOPS, then 10 drives would yield 10 x 180 = 1,800 IOPS. This assumes the drives are striped with no redundancy (RAID 0), which incidentally would be unusual in a system at this level.

Fig. 1: Disk and solid-state storage devices have a theoretical maximum IOPS value based upon certain key factors. For magnetic spinning disks, these factors are: rotational speed, average latency and average seek time.
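The per-drive formula and the array multiplication above can be sketched in a few lines of Python. This is a minimal illustration, not a vendor sizing tool: it assumes average rotational latency is the time for half a revolution, and the 3.5 ms seek time used in the usage section is a hypothetical figure chosen only to show the arithmetic.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: half of one revolution, in milliseconds."""
    ms_per_revolution = 60_000.0 / rpm  # 60,000 ms in one minute
    return ms_per_revolution / 2.0


def drive_iops(avg_latency_ms: float, avg_seek_ms: float) -> float:
    """Theoretical max IOPS = 1 / (latency + seek), with times converted to seconds."""
    service_time_s = (avg_latency_ms + avg_seek_ms) / 1000.0
    return 1.0 / service_time_s


def array_raw_iops(per_drive_iops: float, drive_count: int) -> float:
    """Raw aggregate IOPS with no RAID write penalty (the RAID 0 case)."""
    return per_drive_iops * drive_count


if __name__ == "__main__":
    # Hypothetical drive: 15,000 RPM with an assumed 3.5 ms average seek time.
    latency = avg_rotational_latency_ms(15_000)
    single = drive_iops(latency, 3.5)
    print(f"latency {latency:.1f} ms, single drive about {single:.0f} IOPS")
    # The 10-drive example from the text, using 180 IOPS per drive.
    print(f"10 drives, RAID 0: {array_raw_iops(180, 10):,.0f} IOPS")
```

Because latency falls as spindle speed rises, the same function shows why faster drives yield more IOPS, although seek time usually dominates the total.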

When you move up to, say, RAID 5, other factors begin to affect the performance figures. Once redundancy is added (mirroring or parity, as in the levels above RAID 0), each host write becomes several back-end I/O operations across the drives in the array. This significantly reduces usable IOPS relative to the raw figure. RAID 1 and RAID 10 carry a two-to-one write penalty, since every write goes to two drives. RAID 5 requires four I/O operations per write (read data, read parity, write data, write parity), and RAID 6, with its double fault tolerance, requires six I/O operations per write.

Reads carry no such penalty: in a healthy (non-degraded) array, including RAID 5 and 6, each host read is a single back-end I/O, a 1:1 ratio.
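The write penalties above can be captured in a small lookup table and applied to a read/write mix. The following is a minimal sketch, assuming a non-degraded array where every read costs one back-end I/O; the function name `backend_iops` is hypothetical and simply converts host-level IOPS into the IOPS the drives must actually deliver.

```python
# Back-end I/O operations generated by one host write, per the penalties in the text.
# Assumption: the array is healthy, so every read costs exactly one back-end I/O.
RAID_WRITE_PENALTY = {
    "RAID 0": 1,
    "RAID 1": 2,
    "RAID 10": 2,
    "RAID 5": 4,
    "RAID 6": 6,
}


def backend_iops(host_iops: float, read_fraction: float, raid_level: str) -> float:
    """IOPS the drives must deliver to sustain a given host workload."""
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    penalty = RAID_WRITE_PENALTY[raid_level]
    reads = host_iops * read_fraction          # 1:1 for reads
    writes = host_iops * (1.0 - read_fraction)  # multiplied by the write penalty
    return reads + writes * penalty


if __name__ == "__main__":
    # Example workload: 250 host IOPS with a 50/50 read/write mix.
    for level in RAID_WRITE_PENALTY:
        print(f"{level:8s} {backend_iops(250, 0.5, level):6.0f} back-end IOPS")
```

For 250 host IOPS at a 50/50 mix, RAID 6 works out to 125 + 125 x 6 = 875 back-end IOPS, while RAID 10 needs only 375, which is why write-heavy workloads weigh so heavily on the choice of RAID level.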

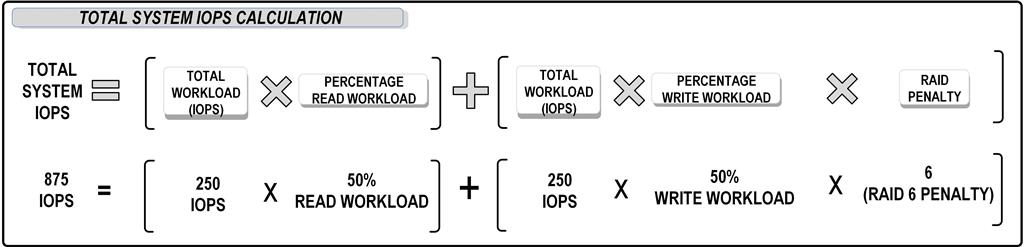

When drive systems are configured, the balance (or ratio) of reads and writes must be considered, along with the RAID penalty associated with the input/output operations. For example, if your application requires 250 IOPS for a workload of 50 percent reads and 50 percent writes, and you want RAID 6 for resiliency, you’ll need to size the array to deliver a total of 875 IOPS: 125 reads at 1:1 plus 125 writes at six I/Os each (see the calculation example in Fig. 2). One spec that affects this performance is spindle rotational speed. Disk drives typically come in three spindle speeds: 7,200 RPM, 10,000 RPM or 15,000 RPM. With a drive rated for at least 250 IOPS selected, the sample calculation shows the total IOPS target (875 IOPS) necessary for this specific application. The calculation is based upon the number of spindles (i.e., the quantity of drives at a specific rotational speed), the drive IOPS and a RAID 6 configuration.
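Turning a back-end IOPS target into a spindle count is a simple division, rounded up. The sketch below is illustrative only: the per-drive IOPS values for each spindle speed are hypothetical round numbers, not figures from this article or any data sheet, and the four-drive floor reflects the minimum drive count for RAID 6.

```python
import math


def drives_needed(backend_target: float, per_drive_iops: float, min_drives: int = 4) -> int:
    """Smallest whole number of drives whose combined IOPS meets the target.

    min_drives defaults to 4, the minimum drive count for a RAID 6 set.
    """
    if per_drive_iops <= 0:
        raise ValueError("per_drive_iops must be positive")
    return max(min_drives, math.ceil(backend_target / per_drive_iops))


# Hypothetical per-drive IOPS by spindle speed (illustrative assumptions only;
# substitute the figures from your own drive vendor's specifications).
ASSUMED_DRIVE_IOPS = {
    7_200: 80,
    10_000: 130,
    15_000: 180,
}

if __name__ == "__main__":
    target = 875  # back-end IOPS: 250 host IOPS, 50/50 mix, RAID 6
    for rpm, iops in ASSUMED_DRIVE_IOPS.items():
        print(f"{rpm:>6,} RPM: {drives_needed(target, iops)} drives")
```

This sizes for IOPS alone; a real design would also check that the resulting drive count provides enough usable capacity after RAID 6 reserves two drives' worth of space for parity.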

Large storage systems with dozens to hundreds of drives are not unusual today. This is not just for capacity, but also for IOPS. The range for a complete system can be quite broad: anywhere from 20,000 to millions of IOPS. However, in Tier 1 storage implementations, where a set of enterprise applications runs on both virtual and physical servers, IOPS performance may be well below 100K IOPS; typically, the numbers range between 30K and 40K IOPS.
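Running the RAID penalty in reverse shows why large arrays are often built for IOPS rather than capacity: it estimates how many host IOPS a given set of drives can sustain. This is a sketch under the same assumptions as the penalty figures in the text (non-degraded array, reads at 1:1); the 200-drive count, 180 IOPS per drive and 70/30 read/write mix are hypothetical inputs chosen for illustration.

```python
def host_iops(drive_count: int, per_drive_iops: float,
              read_fraction: float, write_penalty: int) -> float:
    """Host-visible IOPS an array can sustain after the RAID write penalty.

    Each host I/O costs read_fraction * 1 + (1 - read_fraction) * write_penalty
    back-end I/Os, so the raw array IOPS are divided by that average cost.
    """
    raw = drive_count * per_drive_iops
    cost_per_host_io = read_fraction + (1.0 - read_fraction) * write_penalty
    return raw / cost_per_host_io


if __name__ == "__main__":
    # Hypothetical array: 200 drives at 180 IOPS each, 70% reads / 30% writes.
    for label, penalty in (("RAID 10", 2), ("RAID 6", 6)):
        print(f"{label}: about {host_iops(200, 180, 0.7, penalty):,.0f} host IOPS")
```

Under these assumptions, 200 drives deliver 36,000 raw IOPS but considerably fewer host IOPS once writes are multiplied by the penalty, so reaching a 30K to 40K Tier 1 figure can take well over a hundred spindles.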

It is easy to see why systems tend to employ higher-RPM, enterprise-class SAS drives when seeking peak performance in a minimal footprint.

Fig. 2: When drive systems are configured, the balance (or ratio) of reads and writes must be figured, along with the RAID penalty associated with the input/output operations.

DOES IT REALLY MATTER?

Some storage professionals claim “IOPS don’t matter,” suggesting you needn’t consider benchmarks and stress-test statistics when designing arrays to meet bandwidth requirements. If that were entirely true, you probably wouldn’t see performance differences among the various drive systems offered by most high-performance storage providers. The claim doesn’t hold up, and when you dig further you’ll probably find that what is really implied is that benchmarks, however consistent their methods, are just that: benchmarks. This is why storage manufacturers and vendors who provide systems for specific applications (media or transactional workflows) almost always evaluate more than the individual HDDs and the arrays built from them; they brutally test those arrays with the specific applications, in the environments where they will be deployed.

There is much more to these metrics than space permits, and SSDs add an entirely new set of dimensions that change with age and the number of erase cycles. In a future installment, we’ll look at how flash memory and SSDs stack up from an IOPS perspective. Some of the statistics may indeed amaze you.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.