To say that broadcasting has become more complex with the advent of OTT services is an understatement. Playout is no longer the final point of quality control. Further down the content delivery chain, CDN edge points, targeted ad insertion, multi-language support and event-based channels all require the expert scrutiny of broadcast engineers. The need to manage a more complex ecosystem with an ever-growing list of logging and compliance requirements has become a priority for content owners and regulators alike.

Yet the sheer scale of the problem defeats most customers. This is compounded by the fact that there is almost no point in trying to monitor those streams back in the facility.

It's a challenge that requires a fresh approach to monitoring.

OUTMODED MODEL

While traditional detection methods such as time and date searches and predefined metadata remain valid and are widely used, newer and more sophisticated software-based techniques such as digital watermarking and fingerprinting, combined with the increasing use of computer-based automation, have to be considered viable alternatives.

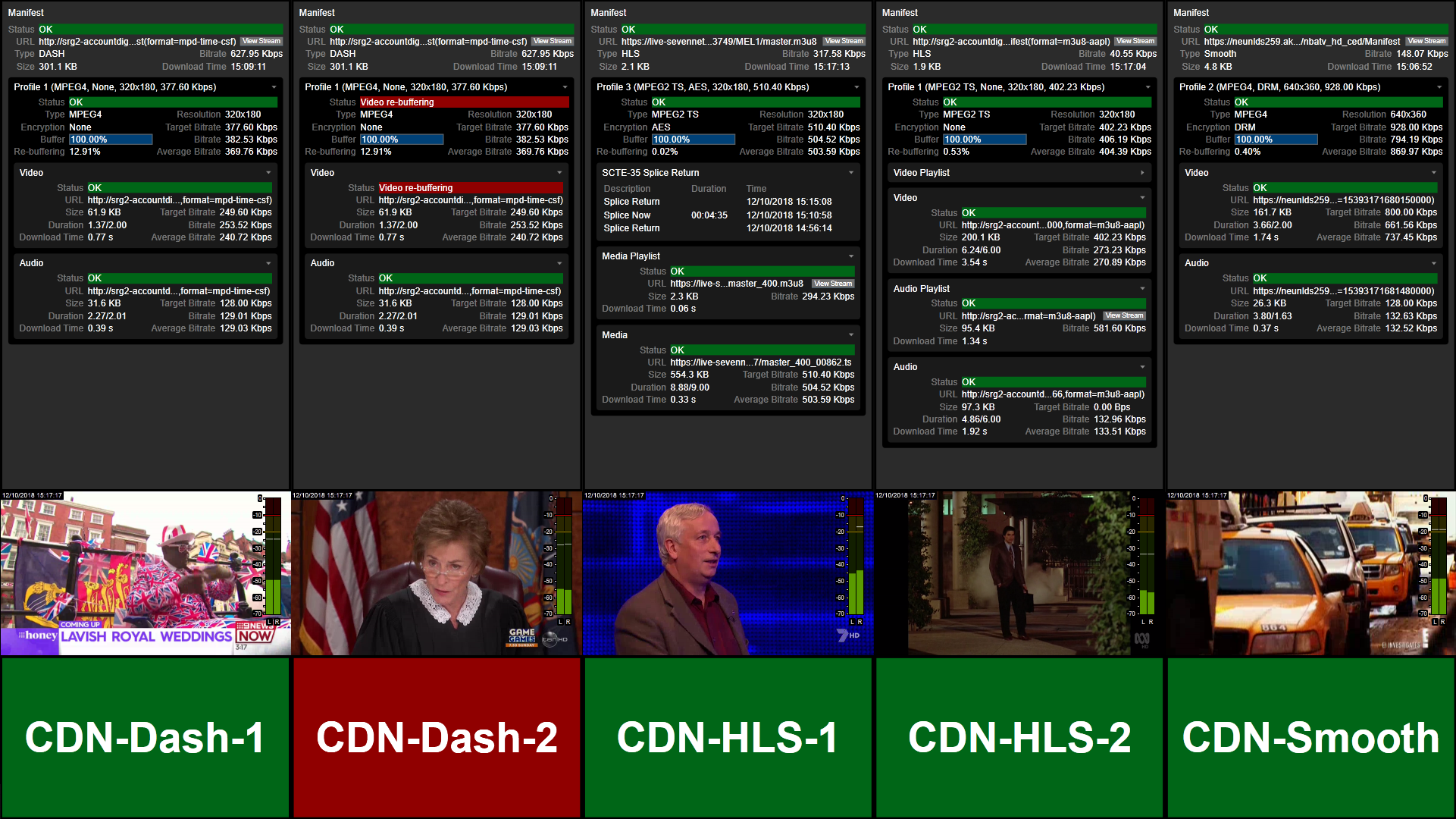

When it comes to monitoring live channels over multiple OTT streams and ABR profiles, it is no longer practical for display panels to mirror all the possible video outlets. There is a wide choice of devices and delivery outlets that need to be supported, and viewers expect the same level of service no matter how they are accessing content. The days of tracking one channel in one format and resolution are long gone. Today, each mezzanine file could be processed into HLS, MPEG-DASH and Smooth Streaming protocols, each carrying various bitrates and resolutions. Human operators will struggle to visually monitor so many different profiles simultaneously.
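To get a feel for how quickly the number of profiles grows, the short sketch below multiplies channels by protocols by bitrates. The channel names and the bitrate ladder are hypothetical values picked purely for illustration; only the three protocol names come from the text above.

```python
from itertools import product

# Hypothetical values chosen only for illustration.
channels = ["news", "sport", "movies"]
protocols = ["HLS", "MPEG-DASH", "Smooth Streaming"]
bitrates_kbps = [400, 800, 1600, 3000, 6000]  # an assumed ABR ladder


def renditions(channels, protocols, bitrates_kbps):
    """Return every (channel, protocol, bitrate) combination to be monitored."""
    return list(product(channels, protocols, bitrates_kbps))


all_renditions = renditions(channels, protocols, bitrates_kbps)
print(len(all_renditions))  # 3 * 3 * 5 = 45
```

Even this small, made-up line-up yields 45 separate renditions, before multiplying again by the number of edge locations being watched.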

This is exacerbated by an outmoded model in which monitoring is done on signals leaving the central broadcast center or facility from equipment housed in local servers and storage. This remains the norm for many operations but there is an increasing shift towards off-premises, cloud-based working, with storage and data management overseen by a third-party provider.

Some are using tablets and phones in the MCR, thinking they are monitoring their CDN originating streams, when they are actually so far down the chain that it is more an exercise in monitoring the in-house internet connection. Sarcasm aside, with so many hops between the origin and the point of monitoring, this approach assumes a perfect connection and no disruption anywhere in the path. This, of course, does not reflect the truth of the situation, and one has to look for another, better approach.

MONITORING THE RIGHT CONTENT

Considering the CDN provider operates at packet level only and does not alter the image payload, pictures will be the same once reaching edge locations and on to the final viewing device. Though content may be correctly streaming from the playout encoder, an edge location may experience its own issues, which could be local or originating from within the CDN. Issues such as local blackouts, bandwidth discrepancies and re-buffering on OTT streams downstream of the CDN may not be immediately apparent.

Also, what happens if national and regional feeds deviate? Or when the wrong program, or an incorrect version, is played out? What happens if the wrong graphics or tickers are mistakenly overlaid on a live broadcast? These issues are becoming increasingly critical for comparing traditional linear services with OTT representations and are very difficult to pinpoint by only looking at OTT playout.

The key is to monitor the right content in the right place at the right time.

As a new approach, a software-based solution is placed as close as possible to the CDN, in the cloud or data center, where ingress is free, fast and reliable and only a short hop from the origin. The software performs packet-level analysis and real-time monitoring, then sends the detected events back to the MCR or the facility via a very low-bandwidth path so that operators can visualize the status of all streams in great detail.
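The idea of sending events rather than video can be illustrated with a minimal sketch. It assumes a probe near the CDN has already classified each media segment; the `SegmentCheck` fields, the status labels and the stream identifier are all hypothetical and do not describe any particular product. Only changes of status are forwarded, which is what keeps the return path small.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class SegmentCheck:
    stream_id: str  # hypothetical identifier for one ABR rendition
    sequence: int   # media segment sequence number
    status: str     # assumed labels such as "ok", "black", "rebuffer"


def events_on_change(checks):
    """Yield a JSON event only when a stream's status differs from its previous one."""
    last_status = {}
    for check in checks:
        if last_status.get(check.stream_id) != check.status:
            last_status[check.stream_id] = check.status
            yield json.dumps(asdict(check))


checks = [
    SegmentCheck("news-hls-3000", 100, "ok"),
    SegmentCheck("news-hls-3000", 101, "ok"),
    SegmentCheck("news-hls-3000", 102, "black"),
    SegmentCheck("news-hls-3000", 103, "black"),
    SegmentCheck("news-hls-3000", 104, "ok"),
]
events = list(events_on_change(checks))
print(len(events), "events for", len(checks), "segments")
```

Each event here is a few dozen bytes of JSON, whereas the segments it describes would be many orders of magnitude larger if they had to be carried back to the facility.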

It means ABR streams are monitored and logged not only from playout, but also from various edge locations. It means on-premises monitoring of outgoing playout streams is linked to cloud-based instances monitoring and analyzing CDN edge streams for unified visibility of all activity.

Engineers are provided with analysis data for all streams without having to backhaul streams from the remote sites. Operators can reconcile and compare originating transcoder outputs to CDN edge points using both traditional broadcast and data-centric panels. Customers greatly reduce bandwidth consumption, and therefore costs, by not having to send payloads back to master control.
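As a rough sketch of what reconciling origin and edge could look like in code, the example below compares per-segment fingerprints reported by two probes. Since the CDN is not expected to alter the payload, a fingerprint taken at the origin should match the one taken at the edge. The `reconcile` function, the sequence numbers and the fingerprint strings are hypothetical, and how a real system derives its fingerprints is not specified here.

```python
def reconcile(origin, edge):
    """Compare per-segment fingerprints from the origin and one CDN edge.

    Both arguments map segment sequence number -> fingerprint string.
    Returns (missing_at_edge, mismatched) as sorted lists of sequence numbers.
    """
    missing = sorted(seq for seq in origin if seq not in edge)
    mismatched = sorted(
        seq for seq in origin if seq in edge and edge[seq] != origin[seq]
    )
    return missing, mismatched


# Made-up data: the edge never received segment 103 and carries a
# different payload for segment 102.
origin = {100: "a1", 101: "b2", 102: "c3", 103: "d4"}
edge = {100: "a1", 101: "b2", 102: "XX"}

missing, mismatched = reconcile(origin, edge)
print("missing at edge:", missing)
print("mismatched:", mismatched)
```

Only the small fingerprint tables need to travel between sites for this comparison, not the streams themselves.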

By having cloud and on-premises facilities working in tandem, the monitoring and detection of stored media assets and live broadcasts can both improve the efficiency of modern broadcast workflows and reduce operating costs. In short, this model rethinks OTT monitoring workflows, making them more meaningful while enabling reliable, large-scale, real-time monitoring at low cost.

Keeping up with so many OTT streams can be daunting, which is why having a unified system for monitoring compliance and identifying issues across all traditional and OTT playouts is critical. To address the complexity, Mediaproxy LogServer enables operators to log and monitor outgoing ABR streams as well as Transport Stream and OTT stream metadata including event triggers, closed captions, and audio information, all from one place.

It is the key to surviving the multiformat game of the future.

Erik Otto is the CEO of Mediaproxy.