When Did Entertainment Become More Fiber than Fun?

The reliance on streaming media grew considerably in the last decade but thrived even more with COVID-19 lockdown orders


Delivering high-quality home entertainment used to be much simpler than it is today. Whether it was watching cable television, purchasing DVDs or heading to the movies, the consistent delivery of this content was never really questioned. However, the proliferation of streaming media, ushered in by Netflix, fundamentally changed the way people around the globe consume entertainment, as well as the telecommunication infrastructure required to support and maintain a seamless user experience. For example, when data isn’t transferred fast enough, playback stalls to buffer, which is a familiar consumer headache.

Consider that in 2019 Netflix alone accounted for 12.6% of total global downstream internet traffic, and the company says streaming consumes 3 GB per hour per device for HD and 7 GB per hour per device for Ultra HD content. Multiply that by the company’s millions of subscribers and that’s a considerable amount of data that must be processed near-instantaneously at all times to keep viewers happy. Netflix is just one example of the OTT subscription-based streaming services that have taken the world by storm, alongside Disney+, Hulu, Amazon Prime and many others.
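To put those per-device figures in network terms, the short Python sketch below converts GB per hour into a sustained bit rate and scales it up to a large audience. It assumes decimal gigabytes (1 GB = 10^9 bytes), and the one-million-viewer audience is a hypothetical round number chosen for illustration, not a figure from Netflix.

```python
# Rough conversion of the quoted per-device data usage into sustained bit rates.
GB_IN_BYTES = 10**9        # assumption: the quoted figures use decimal gigabytes
SECONDS_PER_HOUR = 3600


def gb_per_hour_to_mbps(gb_per_hour: float) -> float:
    """Average bit rate in megabits per second for a GB-per-hour figure."""
    bits_per_hour = gb_per_hour * GB_IN_BYTES * 8
    return bits_per_hour / SECONDS_PER_HOUR / 10**6


def aggregate_tbps(gb_per_hour: float, concurrent_devices: int) -> float:
    """Total sustained rate in terabits per second for many simultaneous devices."""
    return gb_per_hour_to_mbps(gb_per_hour) * concurrent_devices / 10**6


hd_mbps = gb_per_hour_to_mbps(3)    # HD figure quoted in the article
uhd_mbps = gb_per_hour_to_mbps(7)   # Ultra HD figure quoted in the article

# Hypothetical audience size, used only to show how quickly the totals grow.
CONCURRENT_DEVICES = 1_000_000
hd_total_tbps = aggregate_tbps(3, CONCURRENT_DEVICES)

print(f"HD:  {hd_mbps:.2f} Mbps per device")
print(f"UHD: {uhd_mbps:.2f} Mbps per device")
print(f"{CONCURRENT_DEVICES:,} HD devices: {hd_total_tbps:.2f} Tbps")
```

Under these assumptions, an HD stream averages a little under 7 Mbps and an Ultra HD stream roughly 15.5 Mbps, so a million simultaneous HD viewers would need several terabits per second of sustained delivery.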

The reliance on streaming media grew considerably in the last decade but thrived even more with COVID-19 lockdown orders that kept consumers at home and glued to their streaming subscriptions to weather the health crisis. According to a survey from the Consumer Technology Association, 26% of participants said they tried a new video streaming service during the first weeks of the COVID-19 "shelter-in-place" orders. Supporting this level of streaming media around the world is a lot more complex than broadcast and requires an ever-growing telecommunication infrastructure capable of handling increasing data speeds and capacity in data centers by pushing more information faster across fiber cables. In fact, the demand has become so significant in just a few years that data centers are running out of physical space and rely on cutting-edge technology components, such as optical transceivers, that plug directly into existing infrastructure to continue building better networks without the need for additional square footage.

MOVING TO THE CLOUD

As streaming media continues to become the preferred method of consuming content, OTT services must scale their businesses at rates that most companies can’t support with their own infrastructure, especially as the standards of content quality continue to rise. A few years ago, consumers were content with HD video; that quickly gave way to Ultra HD (4K), and now 8K is on the horizon.

Better quality viewing means much larger file sizes, and the growth in subscribers and business operations adds significant strain on even the largest data centers. This is where public cloud providers, such as AWS and Azure, help companies support their growing data needs. The cloud provides on-demand access to computer system resources, especially data storage and computing power, without direct active management by the user.

Following the Netflix example, transitioning from a DVD rental platform to a streaming service with 182M subscribers around the world (and constantly growing) was an enormous undertaking that required the company to migrate from its own data centers to the Amazon Web Services (AWS) public cloud. Essentially, Netflix shed its IT infrastructure for the operational side of its business but retained its own content delivery network (CDN), with servers residing inside internet service providers’ (ISPs’) data centers. A content delivery network is a series of proxy servers, caching servers and their data centers that deliver content to different geographies; the content is then handed off to the respective ISPs, such as Spectrum or AT&T, and sent to consumer devices.
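The caching role of a CDN server can be illustrated with a small Python sketch. This is a generic least-recently-used cache, not a description of how Netflix's CDN actually works; the `EdgeCache` class, the `origin` function and the title names are all hypothetical and exist only for this example.

```python
from collections import OrderedDict
from typing import Callable


class EdgeCache:
    """Toy edge server: serves cached titles locally, fetches the rest from an origin."""

    def __init__(self, capacity: int, fetch_from_origin: Callable[[str], bytes]) -> None:
        self.capacity = capacity                    # max number of titles held locally
        self.fetch_from_origin = fetch_from_origin  # slow path back to central storage
        self._store = OrderedDict()                 # title_id -> bytes, oldest first
        self.hits = 0
        self.misses = 0

    def get(self, title_id: str) -> bytes:
        if title_id in self._store:
            # Cache hit: serve locally and mark the title as recently used.
            self._store.move_to_end(title_id)
            self.hits += 1
            return self._store[title_id]
        # Cache miss: go back to the origin, then keep a local copy.
        self.misses += 1
        data = self.fetch_from_origin(title_id)
        self._store[title_id] = data
        if len(self._store) > self.capacity:
            # Evict the least recently used title to stay within capacity.
            self._store.popitem(last=False)
        return data


origin_calls = []


def origin(title_id: str) -> bytes:
    """Hypothetical origin server; records each request it has to serve."""
    origin_calls.append(title_id)
    return f"video-bytes-for-{title_id}".encode()


cache = EdgeCache(capacity=2, fetch_from_origin=origin)
for title in ["a", "b", "a", "c", "b"]:
    cache.get(title)

print(f"hits={cache.hits} misses={cache.misses} origin requests={origin_calls}")
```

Every hit is a request that never has to leave the edge location, which is the reason for placing caching servers close to viewers inside ISP facilities.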

Hyperscale operators, such as AWS and Microsoft, are essential for many of these streaming services to handle the massive influx of data. They provide companies more agility and flexibility to scale operations and support rapid growth by removing the need to procure and manually upgrade servers and equipment. Many of these data centers are massive, with Range International Information Group being the largest at 6.3 million square feet of space. As big as these dedicated buildings filled with racks of telecom equipment are, they’ve reached a point where there isn’t enough space to add servers, computers and storage to keep up with growing data requirements. This is where optical fiber products are essential in order to continue adding speed and capacity in the space that already exists.


EXPANDING DATA CENTER CAPACITY AND SPEEDS

Although the name can be misleading, public cloud providers still have physical data centers they must operate so their customers don’t have to. Despite their large sizes, most of the square footage is already taken up by servers, computers and storage. Thus, cloud providers and data center operators must rely on equipment that improves the speed and capacity of the data they can push over existing fiber.

Data centers must ensure information moves between servers, and to and from the outside world, ideally without noticeable latency. Until recently, standard data-transfer speeds within a data center were 10 to 100 gigabits per second (Gbps). Now that video streaming accounts for nearly 80% of all internet bandwidth, new higher-speed interconnects are being created to allow for 400 Gbps speeds across the fiber to improve both the speed and capacity of data throughput. Think of fiber as a freeway, and these technologies allow for more lanes and a faster speed limit. There is specific equipment that supports data center interconnect (i.e., connecting multiple data centers in different locations) and data center intra-connect (i.e., improving speeds between racks and servers within a data center).
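The freeway analogy can be made concrete with a back-of-the-envelope calculation: how many simultaneous streams fit on a single link at each speed? The Python sketch below uses the 3 GB and 7 GB per hour figures quoted earlier in the article and assumes decimal units. It ignores protocol overhead, traffic bursts and headroom, so the results are upper bounds for illustration rather than engineering estimates.

```python
def streams_per_link(link_gbps: int, gb_per_hour: int) -> int:
    """Upper bound on simultaneous streams a link can carry, ignoring all overhead."""
    link_bits_per_hour = link_gbps * 10**9 * 3600    # link capacity over one hour
    stream_bits_per_hour = gb_per_hour * 10**9 * 8   # one stream's usage over one hour
    return link_bits_per_hour // stream_bits_per_hour


for link_gbps in (10, 100, 400):
    hd = streams_per_link(link_gbps, 3)    # HD at 3 GB per hour
    uhd = streams_per_link(link_gbps, 7)   # Ultra HD at 7 GB per hour
    print(f"{link_gbps:>3} Gbps link: up to {hd:,} HD or {uhd:,} Ultra HD streams")
```

Moving a link from 100 Gbps to 400 Gbps quadruples the number of streams it can carry over the same fiber, which is the "more lanes" half of the analogy.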

However, there are several significant new challenges data centers face as they move toward 400G speeds to meet their customers’ needs. First, producing more speed also means an increase in power consumption, which leads to higher operational costs and more complex technologies required to handle those speeds. Second, 400G is still maturing as a technology, so the “quality of life” benefits and interoperability of various optical equipment aren’t at the same level as they are for 100G and 200G.

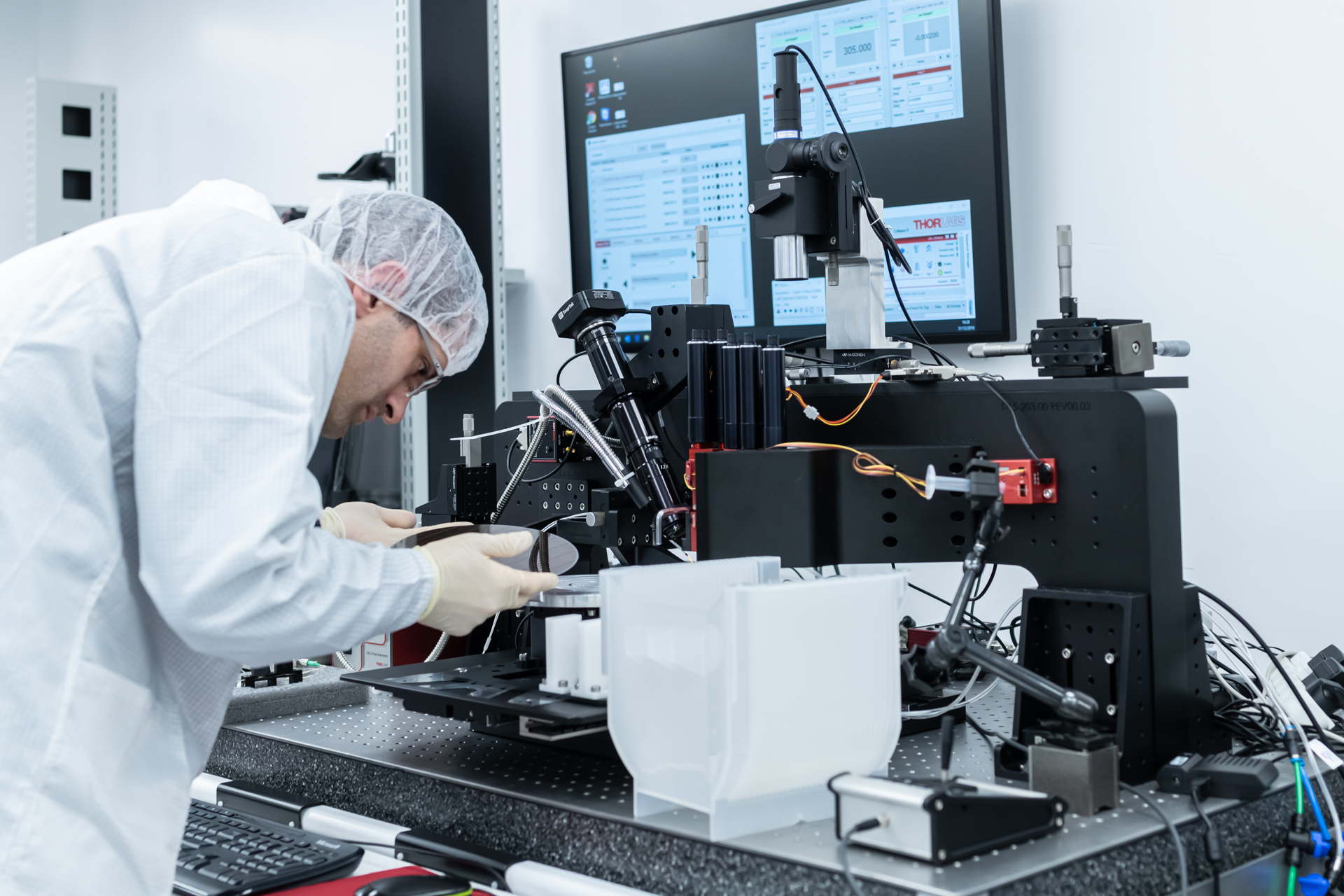

For both challenges, hot-swappable optical transceivers have been developed to suit the needs of data centers, reducing capex and opex and improving scale. In some cases, transceivers have reduced the power dissipation of 400G optical products by up to 30%. Armed with new technologies, 400G will help data centers support entertainment streaming needs such as 4K video and reduce delays and buffering in live streaming, among other important use cases that are here today.

Video streaming is becoming the dominant way for people to consume media. Supporting the demands of higher-quality content and a growing user base will depend on how quickly 400G technology is adopted in data centers and in other networks that require large amounts of bandwidth.

Data center operators are now left to evaluate suppliers that are able to provide low-cost, high-speed and reliable optical transceivers to enable a more efficient infrastructure, and meet the demand for seamless video streaming.

Tony Musto is the U.S. Operations lead and SVP of Sales & Marketing at DustPhotonics, which develops 400G optical modules for data center and enterprise network interconnects operating at high-speed data rates. DustPhotonics’ multimode optical fiber technology is an ideal solution for data center interconnects. It incorporates a revolutionary light engine that enables reduced power consumption, higher reliability, and superior module performance. The innovative optical packaging design results in improved sensitivity and efficient coupling. The new module enables data centers and high-performance computing environments to address growing needs for higher bandwidth at lower cost and power per gigabit.