Managing Media Production Workflows at Home

Current environment may alter how production and studio facilities operate for years to come

Isolating sound or heat between spaces is a well-understood practice in the building and acoustical trades, and media facilities have long applied those techniques to their studios, control rooms and edit spaces. Now the isolation mandated by the coronavirus pandemic is affecting news and live production workflows, altering how organizations produce and deliver their live and prerecorded shows in ways they had not previously imagined.

Working remotely isn’t new. Reporters have worked from hotel rooms for years. Technicians administer datacenters virtually, and the cloud is the foremost example of delivering features and functions without anyone physically present.

Yet what is occurring now may alter, or already has altered, how production and studio facilities operate for years to come. Newly adopted workflows produce similar outcomes without the huge physical footprints, costs and overhead currently required for large-scale operations. Such changes could permanently disrupt how news programming is created and delivered.

WHAT’S BEEN HAPPENING?

TV production operations rely heavily on physical infrastructure. Think of the elements needed to produce a regular live news or entertainment program when most of the people involved are in the same building. Now imagine that 50–75% of those elements are being “disassociated” physically from one another. Then imagine one studio becoming many studios, located not in traditional spaces but in people’s homes.

Major news organizations usually have control rooms seating 12–15 production personnel, including tech managers, directors and others. Editorial spaces have more than 200 desktops and a dozen-plus craft edit suites. Audio needs editing and live-to-air spaces. Still-image, 3D and animated graphics need large displays with high-performance compute stations. Add a multitude of web producers, writers and researchers and you have an assortment of people who must all commute, share spaces and consume resources every day.

On the support side there are ingest managers, archivists and MAM wranglers. Add studio and grooming personnel, field reporters and technicians, plus a gaggle of remote equipment to produce “live and breaking news” whenever needed, and the numbers quickly grow to a hundred or more. Many work functions demand people physically attached to physical gear, tied by cables to dedicated equipment housed in a complex (usually enormous) central equipment room (CER).

Until cloud technologies arrived, nearly every studio function and show activity was centered around similar infrastructure support spaces. Even when productions moved somewhat “out of house” or some processes extended into the cloud, the elements basically required tablets, cell phones and laptops connected through high-end CPU workstations. As network connections, software management, storage, MAM and archive, ingest and playout, and local and remote support accumulated, operations grew more complex and specialized.


Operations are steeped in human interaction from conception to delivery. Yet that’s now changing, fast and furiously.

Once, most functions were tied to discrete, dedicated devices connected to an internal LAN or an SDI router. Operators likely used KVM-like technologies to control or manage the relatively nearby components in the central equipment room. Enormous multiviewers displayed the images, audio and metering.

More recently, organizations have embraced virtual machines and/or cloud-based technologies to supplement, administer or orchestrate functions previously built entirely around “on-prem,” physical, dedicated hardware. Operations and workflows that were likely candidates for change included graphics, acquisition, storage, playout, asset management and some distant or occasional-use activities, such as an EFP remote or a feed from another location. Each followed a consistent, structured set of managed workflows tied to the connections to and from the devices.

Previously such workflows were relatively straightforward. Dedicated people sat at dedicated machines and moved files, images or operational commands using a mouse and keyboard. Communications depended upon dedicated intercom stations with multiple channels that could be easily selected from a panel. Essentially, this was a local “one-to-one” operational workflow, exemplified by activities like a remote feed for a live broadcast.

When handling real-time images or sound, operators used a router panel on a dedicated “network” that was internally tied to a fixed format and flow. Streaming images to another location required another set of encoders, gateways and networks connecting internal and external locations.

Amid recent world events, and in under 60 days, many traditional one-to-one production activities have become “dematerialized,” i.e., decoupled from the physical association of hardware/software so they can be connected to or from anywhere. These new workflows have become the most significant challenge broadcast network enterprises have faced since portability became the norm for content collection and transmission.

Dematerialization is not new; the industry had been heading in that direction for some time. We just hadn’t expected it to happen in such a risky, isolated environment. Change has demanded instantaneous scaling that scattered studio talent into locations they had never expected to work from.

Production workflows have taken segments of local facility-based operations and moved them immediately into cloud and cloud-like environments. Operators and talent are now connected over the internet using cloud offerings such as IaaS, MyCloudIT, XenApp Express and AWS Workspaces.

Even before the COVID-19 crisis forced operations into isolation, a standard PC at an off-site location could connect to AWS Workspaces using a secure, high-resolution, compressed PC-over-IP protocol such as Teradici’s “PCoIP.” Real-time operations require a highly responsive computing experience. Using advanced display compression, end users can connect to on-premises or cloud-based virtual machines that stand in for their once “local” computers. In effect, users work directly on their servers while sitting at home or other locations.


Virtual workspace architectures will now compress, encrypt and transmit only the pixels (instead of the data) to software clients, including mobile clients, thin clients and stateless endpoints. For graphics, the coupling of the endpoint engines and compositing means that artists can see what they are creating with minimal delay and no reduction in image quality.
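Teradici’s PCoIP codec is proprietary, so the following is not its algorithm. It is a minimal Python sketch, assuming a simple grayscale frame model, of the general idea behind pixel-only remoting: compare successive frames tile by tile and send only the regions that changed. The tile size and the `changed_tiles` and `blank_frame` helpers are hypothetical.

```python
from typing import List, Tuple

# Hypothetical frame model: a 2D grid of grayscale pixel values (0-255).
Frame = List[List[int]]


def changed_tiles(prev: Frame, curr: Frame, tile: int = 16) -> List[Tuple[int, int]]:
    """Return the (row, col) origin of every tile whose pixels changed.

    Only these tiles would need to be compressed, encrypted and sent;
    unchanged regions of the screen cost no bandwidth.
    """
    height, width = len(curr), len(curr[0])
    dirty = []
    for ty in range(0, height, tile):
        for tx in range(0, width, tile):
            # Compare the slice of each row that falls inside this tile.
            rows = range(ty, min(ty + tile, height))
            if any(prev[y][tx:tx + tile] != curr[y][tx:tx + tile] for y in rows):
                dirty.append((ty, tx))
    return dirty


def blank_frame(height: int, width: int) -> Frame:
    """Build an all-black frame of the given size."""
    return [[0] * width for _ in range(height)]


if __name__ == "__main__":
    before = blank_frame(64, 64)
    after = blank_frame(64, 64)
    after[20][40] = 255  # e.g. a cursor or tally change in one spot
    dirty = changed_tiles(before, after)
    total = (64 // 16) ** 2
    print(f"{len(dirty)} of {total} tiles changed: {dirty}")  # 1 of 16 tiles changed: [(16, 32)]
```

Here a single changed pixel marks one of 16 tiles as dirty, so only that tile would need to go over the wire. A real system layers compression, encryption and a transport on top of change detection like this.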

EVOLVING WORKFLOWS

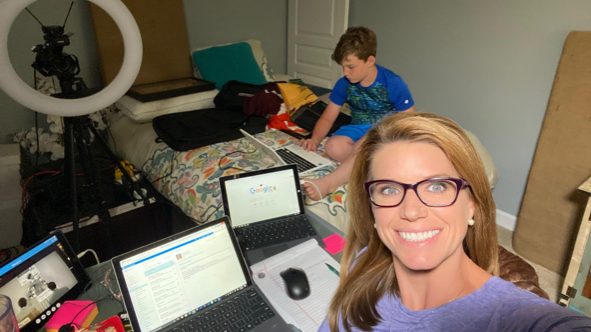

The first week of this new REMI-like production model was assembled with cell phone and laptop cameras, usually serving in dual capacity. Once a comfort level was achieved, in week two the home locations added (or adapted) displays of 42 inches and larger to put rolling backgrounds behind close-ups. Those images were fed live from laptops, either over VNC connections or from files. Audio processing and equalization soon followed, because local encoders needed consistent levels before the signals were sent across a public internet connection.

By week three, viewers began to see “home lighting” and two (or more) “sets” in the same home, while multiviewers and prompters running on PCs or equivalent rounded out the new workflows.

The need for adequate connectivity, the highest quality and the least lag is prompting personnel to place PCoIP devices at every endpoint. These endpoints, once called “thin clients” or “zero clients,” now carry signals as “direct connections” from laptops to CER-based high-performance graphics or video servers, or between makeshift home studios and the primary control rooms at the network’s studios. Most will use card-based zero clients, which outperform software-based applications on desktops.

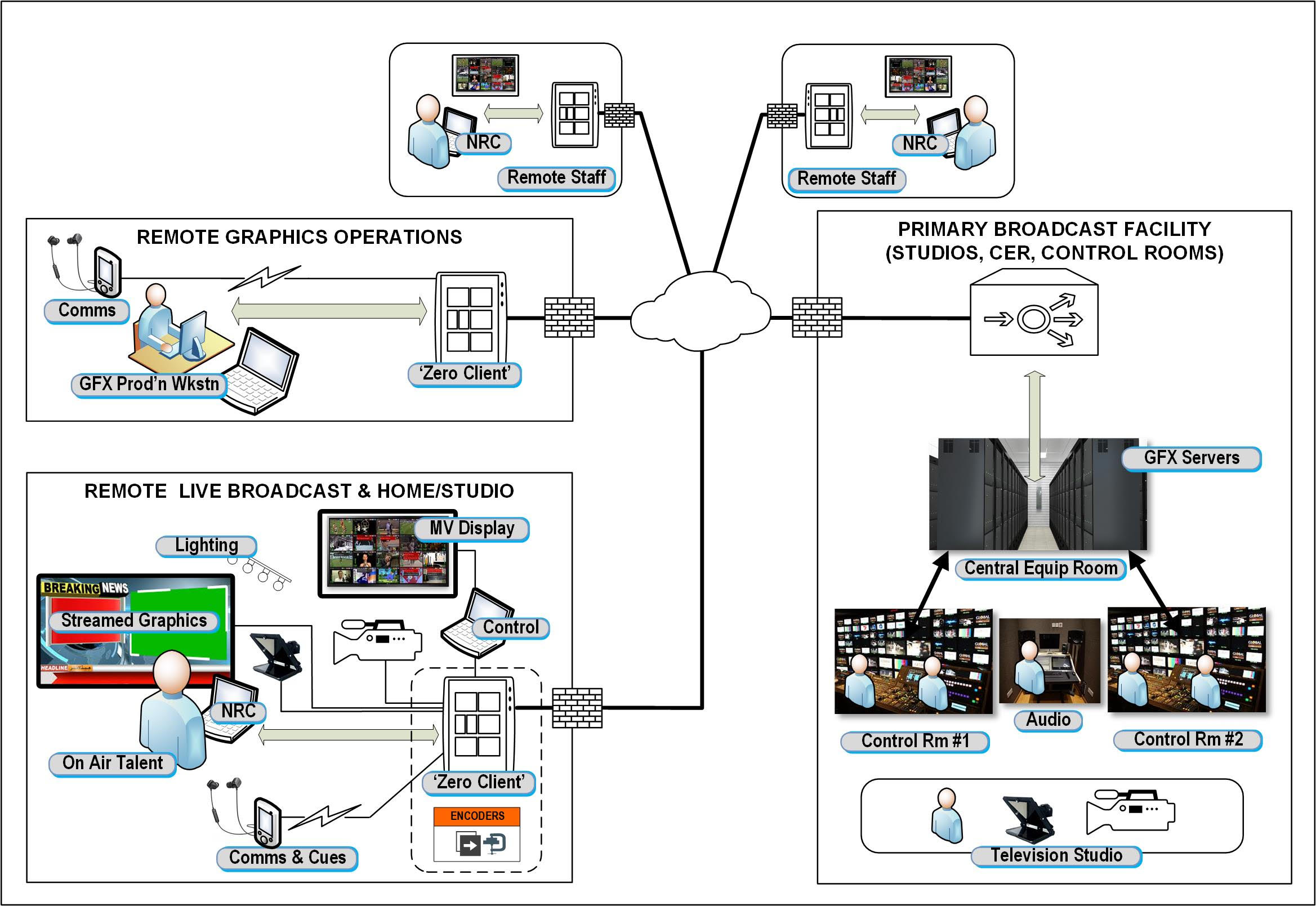

Fig. 1 is a simplified, high-level overview showing how remote home/studios, graphics designer/operators and staff can work in quarantine or isolation. During broadcasts, two studio control rooms and a separate audio room keep the reduced facility staff spaced apart.

Control room multiviewers are replicated, encoded and sent to the remote sites as compressed video, allowing each remote operator, talent or artist to see the same content as the live control rooms at the studios.

Operators must have the same user experience at remote home studios as at the studio facility. Pushing the MAM to the edge via a web browser lets producers (editors) cut proxies or push cut items either to editing or directly to the control room for playback. Using core-native floating licenses, i.e., the “bring your own license” option, for remote editing and VFX is now commonplace.

KEEPING CONNECTED

Where is the biggest headache? According to network managers I’ve spoken with, it’s the missing elements in “the COMMs.” So far, approximating studio intercom functionality has been the nemesis of daily productions. Cell phones with earbuds have limited functionality, yet they provide the least latency between home and main studios.

Getting IFB to the remote home studio adds another tier of complexity. Multichannel, multi-access IP-based communications become essential when operating in several simultaneous locations. Intercom using “virtual link” products (e.g., VLink) is a good start, yet substituting a tablet-based button set for a physical button control panel is not an ideal workflow. Expect to see multiple updates in these product lines.

Link bandwidth makes all the difference when spinning up multiple activities in a VPC or when interconnecting studios and CERs between major metropolitan locations. Connectivity of 10 Gb/s or higher, while a luxury for many, is essential at a network level. Security services also play an important part in limiting network access. Products with adaptive MFA (multifactor authentication) permit flexibility when adding sites at the peak of a breaking story.
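The link-bandwidth point can be made concrete with a simple budget. The Python sketch below sums the bitrate of every stream crossing a link and compares the total with the link’s usable capacity. All bitrates, stream counts and the 30% headroom are hypothetical planning figures, not measurements from any network.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class StreamGroup:
    name: str
    mbps: float  # assumed bitrate of one stream, in megabits per second
    count: int   # how many such streams cross the link


def link_budget(groups: List[StreamGroup], link_gbps: float,
                headroom: float = 0.3) -> Tuple[float, float]:
    """Return (total Mb/s, fraction of the usable link consumed).

    `headroom` reserves part of the link for bursts, retransmissions
    and other traffic; 30% is an arbitrary planning assumption.
    """
    total_mbps = sum(g.mbps * g.count for g in groups)
    usable_mbps = link_gbps * 1000 * (1 - headroom)
    return total_mbps, total_mbps / usable_mbps


if __name__ == "__main__":
    # Hypothetical mix for one show; every bitrate here is illustrative.
    show = [
        StreamGroup("home-studio contribution", mbps=20, count=12),
        StreamGroup("multiviewer return", mbps=8, count=12),
        StreamGroup("remote desktop sessions", mbps=15, count=20),
    ]
    for gbps in (1, 10):
        total, used = link_budget(show, link_gbps=gbps)
        print(f"{gbps:>2} Gb/s link: {total:.0f} Mb/s, {used:.0%} of usable capacity")
```

With this illustrative mix the streams total 636 Mb/s: roughly 91% of a 1 Gb/s link’s usable capacity, but only about 9% of a 10 Gb/s link, which leaves room to add sites when a story breaks.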

Firewall rules (defined by user, application, port set and IP address) need to be extensible so that each user gains appropriate access to a given tier or tailored workspace.
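As a sketch of what extensible rules keyed on user, application, port set and IP address might look like, the following Python uses the standard `ipaddress` module to build a default-deny policy. The `Rule` and `Policy` classes, the user and application names, the port set and the 203.0.113.0/24 documentation range are all hypothetical placeholders; real firewalls express this in their own rule syntax.

```python
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Rule:
    """One allow rule keyed on user, application, port set and IP range."""
    user: str
    application: str
    ports: FrozenSet[int]
    networks: Tuple[ipaddress.IPv4Network, ...]

    def matches(self, user: str, application: str, port: int, src_ip: str) -> bool:
        addr = ipaddress.ip_address(src_ip)
        return (
            user == self.user
            and application == self.application
            and port in self.ports
            and any(addr in net for net in self.networks)
        )


class Policy:
    """Default-deny policy: traffic passes only if some rule matches."""

    def __init__(self) -> None:
        self.rules: List[Rule] = []

    def add_rule(self, rule: Rule) -> None:
        # Extensible: a new home studio or breaking-news site is one more rule.
        self.rules.append(rule)

    def allows(self, user: str, application: str, port: int, src_ip: str) -> bool:
        return any(r.matches(user, application, port, src_ip) for r in self.rules)


if __name__ == "__main__":
    policy = Policy()
    policy.add_rule(Rule(
        user="graphics-op-1",            # hypothetical user name
        application="remote-desktop",    # hypothetical application label
        ports=frozenset({443, 4172}),    # illustrative port set
        networks=(ipaddress.ip_network("203.0.113.0/24"),),  # documentation range
    ))
    print(policy.allows("graphics-op-1", "remote-desktop", 4172, "203.0.113.25"))  # True
    print(policy.allows("graphics-op-1", "remote-desktop", 22, "203.0.113.25"))    # False
```

Adding a site during a breaking story then means appending one rule rather than reworking the whole policy.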

WHERE DOES IT GO FROM HERE?

Given that social distancing is likely to remain for many months, these new workflows will change how technologies such as bonded cellular evolve. Many clever enterprises are rapidly changing the production domain, and these adaptations may become the links to a new way of designing and outfitting studios. Only time will tell.

Karl Paulsen is a SMPTE Fellow and frequent contributor to TV Technology.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.