AOIN Produces 'Extended Reality' Scenes for 'America's Got Talent'

Pandemic restrictions required a different workflow to be adopted

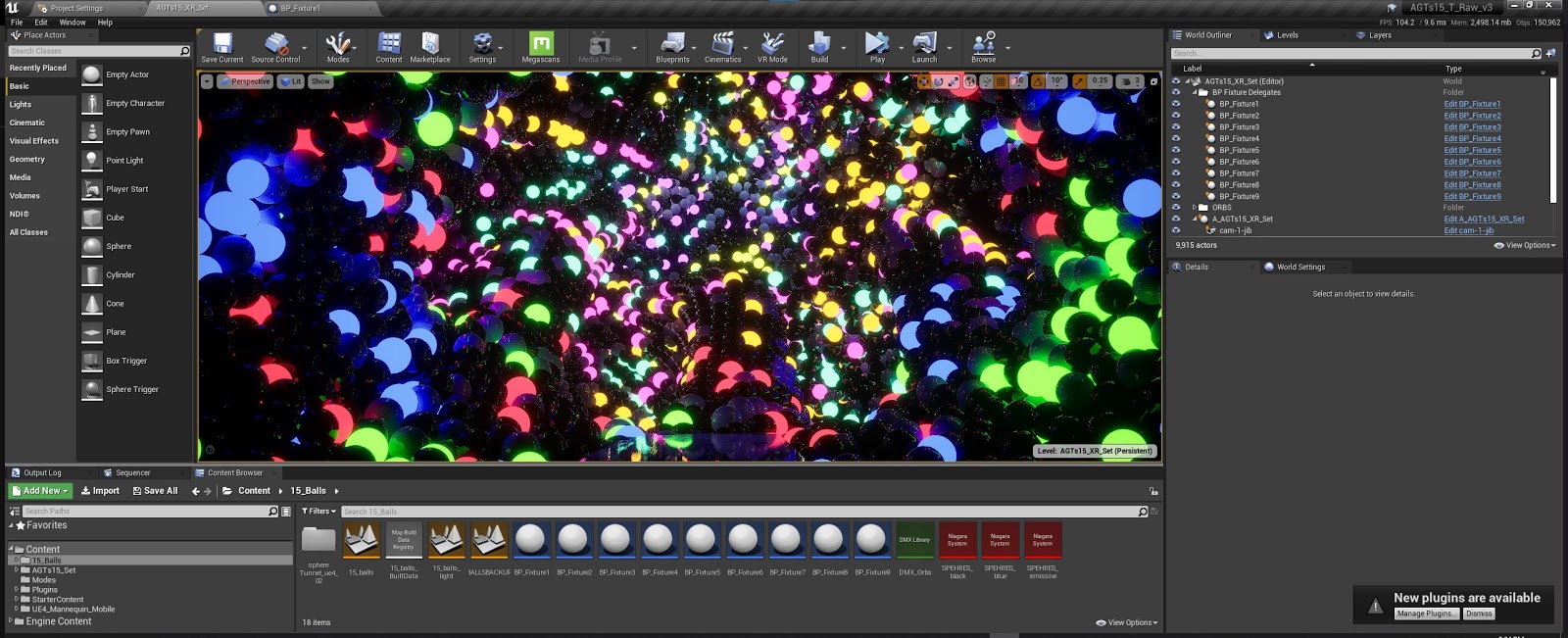

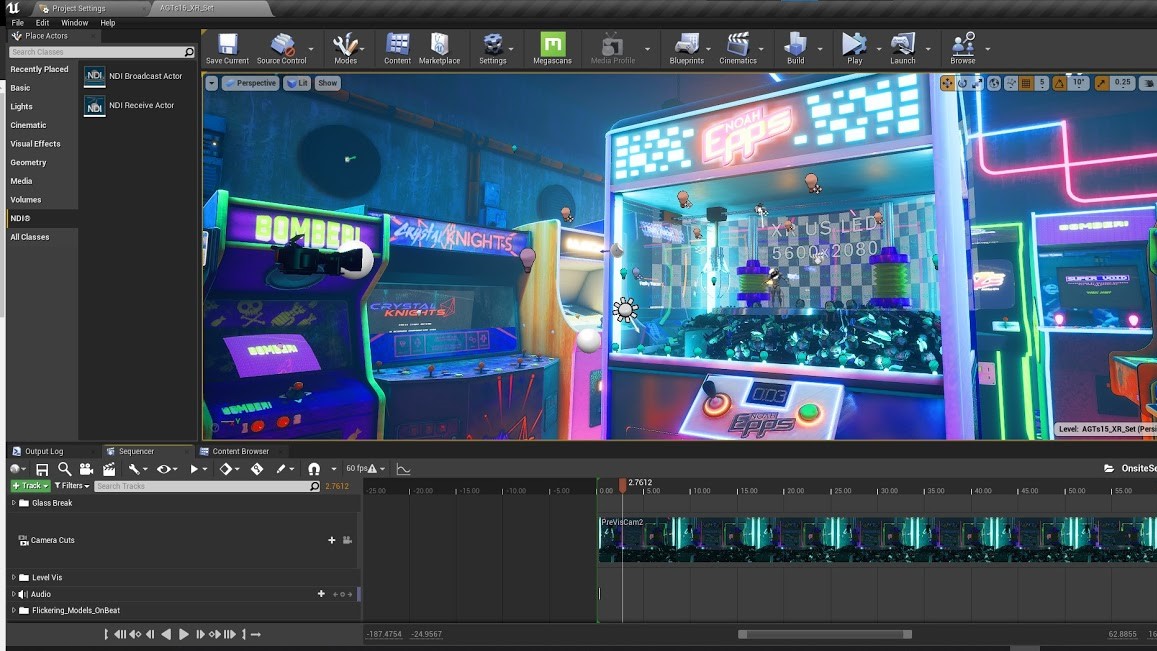

SAN FRANCISCO—I was fortunate enough to spend the better part of the pandemic working with some of the most talented and forward-thinking creatives in live television production on season 15 of “America’s Got Talent” on NBC. My company, All of It Now (AOIN), was hired by Fremantle to work with the show’s Screens Producer, Scott Chmielewski of DMDS7UDIOS, to create and build live XR (Extended Reality) scenes for some of the season’s top contenders.

Many vendors were involved in bringing this ambitious show to life. DMDS7UDIOS ran everything live on set, while my team at AOIN, together with creative teams at Gravity in Germany and XITELabs in Los Angeles, produced XR content for the season’s signature performances. This was no small task: each of the season’s seven episodes featured two XR performances. For many of them, we managed the initial integration and technical handoff of assets to the DMD team, who drove the onsite programming, calibrations, integrations and real-time rendering out to a state-of-the-art 60-foot-wide curved LED stage.

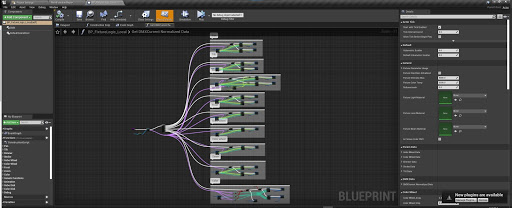

What made the creation of this much digital content manageable was having multiple design teams on board working in parallel in Unreal Engine. With source control, we were able to create branches for each designer to work in independently, and then merge their respective changes with the larger team whether the assets or scenes were coming from Gravity, XITE or AOIN. Source control provided a clear path to produce this quantity and quality of content in the timeframe we needed to hit repeated weekly deadlines.

Each week we would receive creative direction for each XR performance in the form of mood boards and concepts from creative producer Brian Burke and Harriet Cuddeford of the talented Syco Entertainment team. We then had three days to submit our first iterations and the remainder of the week to refine our scenes for Friday ingest, and then it was on to the live performances just one week later.

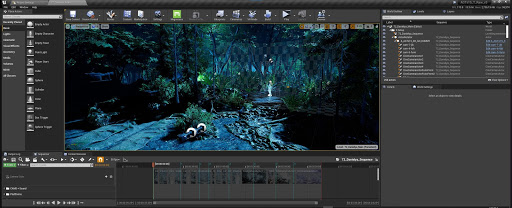

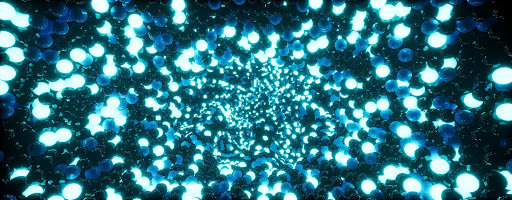

These were ambitious visuals. Rather than a few months for each scene, we had five days, so we were constantly working out how to reverse engineer the initial creative concepts and make the most of assets we could pull from the Unreal Marketplace and Quixel Megascans, synthesizing them into environments that would look great on television. The Megascans in particular played beautifully on screen; the forest and foliage tools gave us a level of realism in performance visuals that would otherwise have been impossible. One of my favorite performances of the season, Noah Epps in week four, used the Decagon arcades from the Marketplace assets.

The hardest part about this show is that it’s live and performance-based, so there are constant on-the-fly changes. In some cases we had to adapt scenes that were meant to be shot in XR, or a performer would be moved to a different CG scene. The teams were able to make both minor and major adjustments to scenes in a short amount of time, and sometimes even repurposed scenes from XR into rendered videos for non-XR performances. The tools in Unreal Engine gave us the flexibility to render out scenes dynamically, with the option of outputting either real-time or rendered content.

The sheer volume of content required for this production made it practical to have dispersed creative talent. Our team in San Francisco could be working on butterflies and XITE’s team on modeling, then handing off to Gravity’s team in Germany to work on trees and foliage, all in the same world for a given performance. The collaboration extended to the show’s creative directors, who sat in on daily review sessions where we shared Unreal Engine scenes over Zoom and iterated in real time, so none of the creative direction got lost in translation.

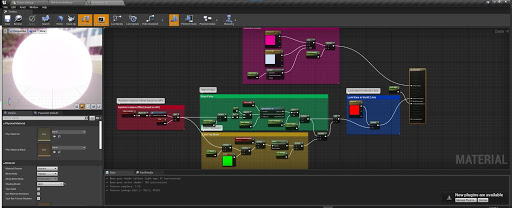

On set, the DMDS7UDIOS team worked with director Russ Norman to capture performances using four XR cameras and Stype Red Spy Fiber systems for camera tracking. Florian built virtual cameras and other helpful assets into an Unreal template so that he could give us an accurate camera plot. That allowed us to block everything in advance and place assets in scenes more thoughtfully. The tool headed off a lot of potential issues before we ever got to live rehearsals.

The XR performances were shot on a 60-foot-wide curved LED stage, which, along with a highly reflective floor, posed certain creative challenges. We had to take both the real-world reflections and the virtual-world reflections into consideration on shoot day, and Scott was able to use live DMX data to control hundreds of virtual DMX fixtures and effectively manipulate lighting in virtual environments.
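For readers curious what driving virtual fixtures from DMX looks like in principle, here is a minimal Python sketch. It is not the show’s actual pipeline, which ran inside Unreal Engine. It only assumes the basics of DMX512: a universe carries up to 512 channels, each an 8-bit value from 0 to 255. The four-channel fixture profile (dimmer, red, green, blue) and the names `VirtualFixture` and `apply_dmx_frame` are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

DMX_UNIVERSE_SIZE = 512    # a DMX512 universe carries up to 512 channels
CHANNELS_PER_FIXTURE = 4   # hypothetical profile: dimmer, red, green, blue


@dataclass
class VirtualFixture:
    """Hypothetical stand-in for a light placed in a virtual scene."""
    start_channel: int                       # 1-based DMX start address
    intensity: float = 0.0                   # normalized 0.0 to 1.0
    color: Tuple[float, float, float] = (0.0, 0.0, 0.0)


def apply_dmx_frame(frame: bytes, fixtures: List[VirtualFixture]) -> None:
    """Copy 8-bit DMX channel values onto each fixture as normalized floats."""
    if len(frame) > DMX_UNIVERSE_SIZE:
        raise ValueError("a DMX universe holds at most 512 channels")
    for fixture in fixtures:
        offset = fixture.start_channel - 1   # convert to a 0-based index
        slots = frame[offset:offset + CHANNELS_PER_FIXTURE]
        if len(slots) < CHANNELS_PER_FIXTURE:
            continue                         # patched beyond the received data
        dimmer, red, green, blue = (value / 255.0 for value in slots)
        fixture.intensity = dimmer
        fixture.color = (red, green, blue)
```

In a sketch like this, each incoming frame from the lighting console would be passed to `apply_dmx_frame`, so the same desk that runs the physical rig also moves the virtual lights.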

The sheer scale of each of these XR performances, and the ability to create these fantastic worlds, makes this season stand out. The “AGT” team wanted to try this out a few years ago, but the technology wasn’t quite there yet to make it a practical option. Two years later we came back, and amidst the social distancing requirements due to the pandemic, performances were spread across multiple timelines, which made the production schedule more conducive to working in XR. It was a thrill and an honor to work under the brave creative supervision of Brian Burke and Harriet Cuddeford, who came up with incredible concepts to build into XR performances.

This was our first full-season episodic series done entirely in Unreal Engine for the XR performances, and we would have struggled to complete the XR portions of this show in any other way. We were heavily dependent on the ability to support multiple designers and automate their creative flow with source control, along with having access to premium pre-built assets in the Marketplace. We also needed the ability to roll back when a producer said “we liked what you did yesterday, can you revert to that version?” or when a performer wanted to go in a different direction creatively on the day of live rehearsals.
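The rollback idea can be illustrated with a toy Python model. This is only a sketch of the concept, not the source control system we used and not a real version control tool. The `SceneHistory` class and its methods are hypothetical, and a scene is reduced to a simple dictionary of settings.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SceneHistory:
    """Toy linear history of scene snapshots (hypothetical, not a real VCS)."""
    snapshots: List[Dict[str, str]] = field(default_factory=list)

    def commit(self, scene: Dict[str, str]) -> int:
        """Store a copy of the scene and return its revision number."""
        self.snapshots.append(dict(scene))
        return len(self.snapshots) - 1

    def revert_to(self, revision: int) -> Dict[str, str]:
        """Restore an earlier revision by recording it as the newest one."""
        if not 0 <= revision < len(self.snapshots):
            raise IndexError("unknown revision")
        restored = dict(self.snapshots[revision])
        self.snapshots.append(restored)  # nothing in between is discarded
        return restored
```

Because a revert is stored as a new revision rather than erasing later work, today’s version stays available if the producer changes their mind again.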

Working in Unreal gave us the unique flexibility to avoid being locked into too many creative variables while delivering scenes that transport the viewer in a whole new way. Producing live XR content in a real-time game engine feels like what surely will be the future of performance-based television programming.

Danny Firpo is the co-founder of All of It Now Productions.