
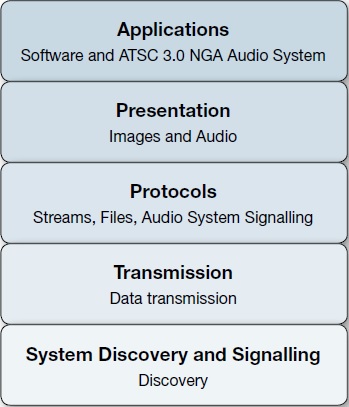

Fig. 1: Simplified ATSC 3.0 layer model

IP transmission will begin making inroads into North American broadcast facilities starting this spring, even those with no plans to implement an IP-based infrastructure, thanks to the finalization of the ATSC 3.0 specifications and the introduction of compatible consumer products at this year’s CES. ATSC 3.0 is an IP-based transmission standard built on a five-layer stack (see Fig. 1), similar in concept to the seven-layer OSI network model, so that the technology in any one layer can be replaced or substituted without redesigning the others. This month we’re looking at what needs to be done to prepare existing infrastructure to handle the Next Generation Audio (NGA) formats coming in ATSC 3.0.

The audio system chosen for North American implementations of ATSC 3.0 is Dolby AC-4, which has three Audio Element Formats: channel-based, object-based and scene-based audio. Channel-based audio is essentially what we have now with mono, stereo and surround formats, though with the addition of height channels it also serves as the base for immersive audio mixes. Audio objects consist of audio signals and positional metadata for use in immersive mixes or for audio program customization.

Scene-based audio is a sort of soundfield snapshot taken from a Higher-Order Ambisonics (HOA) source. All audio components are encoded and then packaged, along with HEVC (H.265) video, for over-the-air broadcast, with a synchronized MPEG-DASH stream for broadband. The system is designed so that delivered audio can be played back anywhere, from home theaters to handheld mobile devices to headphones, because decoders adjust playback parameters for the end user’s speaker configuration and device. This end-device rendering may finally mean the end of downmixing.
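To make end-device rendering a little more concrete, here is a minimal sketch of how a receiver might place one audio object on a stereo device using only the object's positional metadata. It uses ordinary constant-power panning with the two speakers assumed at ±30°. This is a swapped-in textbook technique, not the AC-4 renderer, and the function name `pan_object_to_stereo` is hypothetical.

```python
import math


def pan_object_to_stereo(azimuth_deg: float, spread_deg: float = 30.0) -> tuple[float, float]:
    """Return (left_gain, right_gain) for an object at azimuth_deg.

    Negative azimuths are to the listener's left, positive to the right.
    spread_deg is the assumed angle of each stereo speaker (±30° by default).
    """
    # Clamp the object position to the arc between the two speakers.
    az = max(-spread_deg, min(spread_deg, azimuth_deg))
    # Map -spread..+spread onto a pan position 0..1 (0 = hard left, 1 = hard right).
    p = (az + spread_deg) / (2 * spread_deg)
    # Constant-power law: left**2 + right**2 == 1 at every position.
    theta = p * math.pi / 2
    return math.cos(theta), math.sin(theta)


# An object dead center lands equally in both speakers at about -3 dB each.
left, right = pan_object_to_stereo(0.0)
print(f"L={left:.4f} R={right:.4f}")
```

A renderer for a larger layout works on the same principle, distributing the object across whichever speakers the playback device actually has, which is why the same delivered object can serve a home theater or a pair of earbuds.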

Controlling all of this and making it function properly requires lots of metadata, so those of us who designed our infrastructure around static metadata will have to rethink and likely rework it.

Some things about the new system are familiar, though with updates. The system sample rate is 48 kHz, with support added for 96 and 192 kHz. Dynamic Range Control remains in the system, and loudness management is still LKFS-based. Welcome additions to loudness management include a feature that verifies whether metadata parameters and content measurements match, and there is now an optional leveler.
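The metadata-verification idea can be sketched as a comparison between the loudness value carried in the metadata and an integrated loudness measurement of the content itself (in LKFS, as defined by ITU-R BS.1770). The class name and the 1 LU acceptance window below are assumptions for illustration, not values taken from the AC-4 or ATSC specifications.

```python
from dataclasses import dataclass


@dataclass
class LoudnessCheck:
    metadata_lkfs: float       # program loudness value carried in the metadata
    measured_lkfs: float       # integrated loudness measured on the content
    tolerance_lu: float = 1.0  # ASSUMED acceptance window, not a spec value

    @property
    def offset_lu(self) -> float:
        """How far the content is from what the metadata claims (positive = louder)."""
        return self.measured_lkfs - self.metadata_lkfs

    @property
    def matches(self) -> bool:
        """True when the metadata and the measurement agree within the tolerance."""
        return abs(self.offset_lu) <= self.tolerance_lu


check = LoudnessCheck(metadata_lkfs=-24.0, measured_lkfs=-20.0)
print(check.offset_lu, check.matches)
```

A mismatch like the one above is exactly the case a receiver or QC station would want flagged, since a decoder normalizing on the metadata value would play this program about 4 LU too loud.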

IMMERSIVE AUDIO

Immersive audio in AC-4 begins with 12 audio channels in a 7.1+4 configuration, with speaker locations designated as Left, Center, Right, Left Side Surround, Left Rear Surround, Right Side Surround, Right Rear Surround, LFE, Upper Left, Upper Left Rear Surround, Upper Right and Upper Right Rear Surround. The upper channel speakers in this format are placed above their lower channel counterparts to provide height imaging.


Setting up a 5.1 mix room for this configuration requires the addition of six more speakers and will likely require replacement or modification of the current monitor controller. Outfitting a dedicated production control room for 7.1+4 is certainly possible, but doing so in a mobile unit raises a host of concerns including whether any ambience in the height speakers will be distinguishable from the ambience bleeding through the truck walls.
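A quick way to see where the six extra speakers come from is to list the 7.1+4 channels and compare them with a 5.1 room. The abbreviations are hypothetical shorthand, and the sketch assumes the existing 5.1 surrounds can be reused as the side surrounds, which may not suit every room.

```python
# 7.1+4 channels as named in the text; abbreviations are illustrative only.
LAYOUT_7_1_4 = {
    "L": "Left", "C": "Center", "R": "Right",
    "Ls": "Left Side Surround", "Rs": "Right Side Surround",
    "Lrs": "Left Rear Surround", "Rrs": "Right Rear Surround",
    "LFE": "Low-Frequency Effects",
    "UL": "Upper Left", "UR": "Upper Right",
    "ULrs": "Upper Left Rear Surround", "URrs": "Upper Right Rear Surround",
}

# ASSUMPTION: the 5.1 room's surrounds are reused as the 7.1+4 side surrounds.
LAYOUT_5_1 = ["L", "C", "R", "Ls", "Rs", "LFE"]


def speakers_to_add(existing, target):
    """Channels in target with no matching speaker in the existing room (order kept)."""
    have = set(existing)
    return [ch for ch in target if ch not in have]


missing = speakers_to_add(LAYOUT_5_1, LAYOUT_7_1_4)
print(len(LAYOUT_7_1_4), len(missing), missing)
```

Four of the six additions are height speakers, which is where the mounting and truck-wall ambience concerns come in.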

More interesting than immersion, and possibly more challenging, are the uses and potential configurations of object-based audio and customization. One of the most discussed uses for customization is modifying the dialog: swapping the primary dialog track for another language, listening to a secondary commentary track, or turning the dialog level up, down or off.

Other uses include providing assistive services such as descriptive video or audio versions of emergency notifications. Audio objects are also meant to serve as primary and secondary components in immersive audio mixes. Providing this array of options means being able to create enough mix-minuses, submixes and stems, and having enough paths to move them through the facility.
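The dialog options described above can be illustrated with a toy receiver-side mix. The M&E bed and each dialog language travel as separate elements, so the listener's choice of language and dialog level only changes which dialog object is added and at what gain, and an assistive track such as video description is one more object. The function and parameter names are hypothetical, elements are modeled as equal-length mono sample lists, and a real AC-4 decoder works from authored presentations and metadata rather than code like this.

```python
from typing import Optional, Sequence


def db_to_gain(db: Optional[float]) -> float:
    """Convert a dB offset to linear gain; None means the element is switched off."""
    return 0.0 if db is None else 10 ** (db / 20)


def personalize(me: Sequence[float],
                dialog: dict[str, Sequence[float]],
                language: str,
                dialog_db: Optional[float] = 0.0,
                description: Optional[Sequence[float]] = None) -> list[float]:
    """Mix an M&E bed with one selected dialog object and an optional description track.

    All elements are assumed to be equal-length mono sample lists (hypothetical model).
    """
    g = db_to_gain(dialog_db)
    dlg = dialog[language]  # a language swap is just a different dialog object
    out = [m + g * d for m, d in zip(me, dlg)]
    if description is not None:  # assistive service added as another object
        out = [o + v for o, v in zip(out, description)]
    return out


me = [0.1, 0.2]
dialog = {"en": [0.5, 0.5], "es": [0.3, 0.3]}
print(personalize(me, dialog, "es", dialog_db=6.0))
```

Because the broadcaster has to deliver the M&E and every dialog or description object as a separate element, each option the viewer can pick is one more stem the facility has to produce and route.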

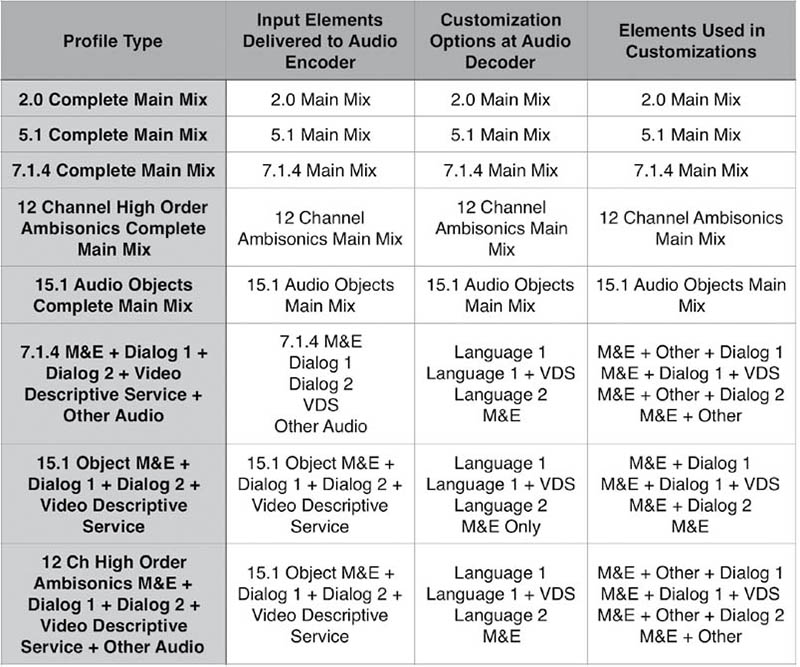

Fig. 2: ATSC 3.0 example broadcast operating profiles

ASSESSING OUR FACILITIES

A look at some suggested ATSC example broadcast operating profiles gives us an idea of the feeds required to provide these services to consumers (see Fig. 2). A 15.1-channel M&E with two dialog tracks and one video description service tosses 19 input elements at the audio encoder for just one stream, 11 more than a 5.1+2 mix.

ATSC 3.0 allows multiple simultaneous streams, so the element count could get quite high, depending on what profile is used for each stream.
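The element counts above follow from simple arithmetic, which makes it easy to total up a multi-stream plan. This sketch assumes the usual "full.LFE+height" channel notation and reads the 5.1+2 case as a 5.1 M&E with two dialog tracks (8 elements, consistent with the difference of 11). The function names are hypothetical.

```python
def bed_channels(layout: str) -> int:
    """Channel count for notation such as '5.1', '15.1' or '7.1+4'."""
    base, *heights = layout.split("+")
    full, lfe = base.split(".")
    return int(full) + int(lfe) + sum(int(h) for h in heights)


def encoder_inputs(bed: str, dialog_tracks: int = 0, description_tracks: int = 0) -> int:
    """Input elements one stream presents to the audio encoder."""
    return bed_channels(bed) + dialog_tracks + description_tracks


big = encoder_inputs("15.1", dialog_tracks=2, description_tracks=1)
small = encoder_inputs("5.1", dialog_tracks=2)
print(big, small, big - small)

# Several simultaneous streams: total elements the facility must deliver.
plan = [("15.1", 2, 1), ("5.1", 2, 0)]
print(sum(encoder_inputs(*stream) for stream in plan))
```

Summing `encoder_inputs` over every stream in a plan gives the total element count the facility has to route, which feeds directly into the transport question that follows.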

This information helps us assess whether current production facility technologies will handle the workload, and, in fact, most remain useful to some degree. All infrastructure paths still seem valid, with AES the most limited (two channels per link) and embedded SDI good for feeds up to 16 channels wide. MADI, with 56–64 channels per link, should be sufficient for most current broadcast productions, but moving beyond 64 channels in one path means moving to some form of AoIP.
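The path choices above come down to channels per link. This simplified sketch assumes 48 kHz audio, two channels per AES3 link, the 16-channel figure for embedded SDI and 64 channels for MADI (56 in its original mode), and treats AoIP as the catch-all because its capacity depends on the network and stream format. The names are hypothetical.

```python
import math

# Channels per link at 48 kHz (simplified planning figures).
CAPACITY = {
    "AES3": 2,           # one AES3 stream carries a stereo pair
    "SDI embedded": 16,  # embedded audio in an SDI feed
    "MADI": 64,          # 64 channels; only 56 in the original MADI mode
}


def links_needed(channels: int) -> dict[str, int]:
    """Number of links of each type needed to carry `channels` audio channels."""
    return {name: math.ceil(channels / cap) for name, cap in CAPACITY.items()}


def single_link_options(channels: int) -> list[str]:
    """Transports that fit the feed in one link; AoIP is always listed as the fallback."""
    return [name for name, cap in CAPACITY.items() if channels <= cap] + ["AoIP"]


print(links_needed(19))
print(single_link_options(19))
print(single_link_options(80))
```

For the 19-element stream discussed above, that works out to ten AES pairs, two SDI feeds or a single MADI link, which is why MADI and AoIP become the practical choices as element counts grow.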

Speakers may need to be added, and metering will need to be updated for immersive mixing and monitoring, not just in the audio mix rooms but also in editorial rooms and QC stations. QC audio monitoring may be awkward given the number of potential output options, so it may be time to consider building a separate audio QC room.

Monitor controllers will need to be assessed to see if they can handle the mix and submix options, and room size and acoustics should be reassessed once speakers are added for immersion. Digital audio consoles with internal routers may be the Swiss Army knife for solving signal flow and feed issues in production, since they can be outfitted to interface directly with most types of facility I/O, including AoIP. Additional DSP processing and I/O may need to be added to the console system, however, and the monitor section will need to be updated to monitor objects and immersive mixes.

The most challenging part of making all of this work may be proper metadata authoring, control and QC. AC-4 is highly metadata-dependent, and it seems unlikely we’ll simply be able to provide a static metadata product to the consumer, certainly not if competing broadcasters deliver the value-added features this new system makes possible.

Of course, there is currently no pressure for any facility to make drastic or wholesale changes right away or move to full immersive audio with multiple languages out of the gate, but now is certainly the time to start planning.

Jay Yeary is a broadcast engineer and consultant who specializes in audio. He is an AES Fellow and a member of SBE, SMPTE, and TAB. He can be contacted through TV Technology or at transientaudiolabs.com.