The early days of HD brought lots of complaints about lip sync errors. That’s a condition where, typically, the audio appears ahead of the visual movement of the mouth. Most viewers won’t detect lip sync errors until the offset exceeds about two or three frames, and the threshold depends on whether the audio is leading or lagging the video. One NTSC frame lasts about 33ms. Professionals may detect errors of a single frame. However, once a viewer detects the error, she becomes much more sensitive to it, so we’ve got to fix it.
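The 33ms figure is rounded. NTSC runs at 30000/1001 frames per second, so one frame lasts 1001/30 ms, or about 33.4ms. The short Python sketch below converts a frame offset to milliseconds; the function name `frames_to_ms` is hypothetical.

```python
from fractions import Fraction

# Nominal NTSC frame rate: 30000/1001 frames per second (about 29.97 fps).
NTSC_FPS = Fraction(30000, 1001)


def frames_to_ms(frames, fps=NTSC_FPS):
    """Convert a lip sync offset given in frames to milliseconds.

    Exact fractions avoid floating-point error in the 30000/1001 rate.
    """
    return float(Fraction(frames) * 1000 / fps)


for n in (1, 2, 3):
    print(f"{n} frame(s) = {frames_to_ms(n):.1f} ms")
```

By this arithmetic, the two-to-three-frame detection threshold corresponds to roughly 67ms to 100ms.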

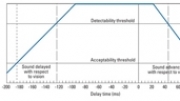

ITU-R Recommendation BT.1359 says the detection threshold for lip sync errors is about +45ms to –125ms (audio early to audio late) and that the threshold of acceptability is about +90ms to –185ms. While we’ve progressed from a time when it seemed that every evening newscast had at least one lip sync error to one where the problem is far less prevalent, the causes can still confuse the smartest engineer.
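The BT.1359 figures define two nested windows around zero offset. Here is a minimal Python sketch that classifies a measured offset against them. It assumes the sign convention used above (positive means audio early) and treats the "about" values as hard, inclusive limits, which is a simplification; `classify_offset` is a hypothetical name.

```python
# Approximate thresholds quoted from ITU-R BT.1359, in milliseconds.
# Sign convention: positive = audio early (leading), negative = audio late.
DETECT_EARLY_MS, DETECT_LATE_MS = 45.0, -125.0
ACCEPT_EARLY_MS, ACCEPT_LATE_MS = 90.0, -185.0


def classify_offset(offset_ms: float) -> str:
    """Place an A/V offset in the detectability/acceptability bands (limits inclusive)."""
    if DETECT_LATE_MS <= offset_ms <= DETECT_EARLY_MS:
        return "undetectable"
    if ACCEPT_LATE_MS <= offset_ms <= ACCEPT_EARLY_MS:
        return "detectable but acceptable"
    return "unacceptable"


for offset in (30.0, -100.0, 60.0, -150.0, 120.0, -200.0):
    print(f"{offset:+7.1f} ms -> {classify_offset(offset)}")
```

Note the asymmetry: a 60ms audio lead is already detectable, while a 100ms audio lag is not.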

The ATSC says lip sync should be in the range of +15ms to –45ms, measured at the input to the DTV encoding device. The EBU Recommendation R37-2007 specifies a range of +40ms to –60ms, measured at the output intended to feed the transmitter. Note the key differences, both in the allowed ranges and in the points of measurement.
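Because the two ranges differ, the same offset can pass one and fail the other. The sketch below, with hypothetical names, checks an offset against both ranges quoted above. It assumes positive values mean audio early and treats the limits as inclusive. It also glosses over the different measurement points: in practice each check would apply to a measurement taken at its own specified point.

```python
from typing import NamedTuple


class SyncWindow(NamedTuple):
    name: str
    early_ms: float    # largest permitted audio lead (positive)
    late_ms: float     # largest permitted audio lag (negative)
    measured_at: str


WINDOWS = (
    SyncWindow("ATSC", 15.0, -45.0, "input to the DTV encoding device"),
    SyncWindow("EBU R37-2007", 40.0, -60.0, "output feeding the transmitter"),
)


def in_window(offset_ms: float, window: SyncWindow) -> bool:
    """True if the offset lies inside the window (limits treated as inclusive)."""
    return window.late_ms <= offset_ms <= window.early_ms


for offset in (25.0, -50.0):
    for w in WINDOWS:
        verdict = "within" if in_window(offset, w) else "outside"
        print(f"{offset:+.0f} ms is {verdict} the {w.name} range "
              f"(specified at the {w.measured_at})")
```

A 25ms audio lead, for example, falls outside the ATSC range but inside the EBU range.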

The culprit

Digital processing equipment takes different amounts of time to process video and audio content, primarily because of the very different bandwidths of the two digital signals. With baseband SD video typically sampled at 13.5MHz and audio at 48kHz, any equipment that processes both signals is likely to delay the video and audio by different amounts. Process HD video and throw in compression too, and the potential for problems increases. While good equipment design can minimize the problem, what is also needed is a good way to measure and correct the offset, ideally back to zero, before broadcast or recording. And, with today’s multichannel, minimally staffed playout centers, all this has to happen automatically.
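Unequal delays accumulate along a signal chain. This Python sketch sums the video and audio delays through a chain and reports the net offset (positive meaning audio early) along with the extra delay that would bring it back to zero. The device names and delay values are purely hypothetical. Because a live signal can only be delayed, never advanced, the correction always adds delay to whichever path is ahead.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    video_delay_ms: float
    audio_delay_ms: float


def net_offset_ms(chain):
    """Net A/V offset after the chain; positive = audio early (video delayed more)."""
    video = sum(d.video_delay_ms for d in chain)
    audio = sum(d.audio_delay_ms for d in chain)
    return video - audio


def correction_ms(offset_ms):
    """(extra audio delay, extra video delay) needed to bring the offset back to zero."""
    if offset_ms >= 0:
        return offset_ms, 0.0
    return 0.0, -offset_ms


# Hypothetical chain; these delay values are invented for illustration only.
chain = [
    Device("frame synchronizer", 33.0, 0.0),
    Device("up-converter", 67.0, 5.0),
    Device("encoder", 100.0, 20.0),
]
offset = net_offset_ms(chain)
audio_fix, video_fix = correction_ms(offset)
print(f"Net offset: {offset:+.0f} ms; add {audio_fix:.0f} ms audio delay, "
      f"{video_fix:.0f} ms video delay")
```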

Early solutions simply added delay circuits to audio consoles, which allowed the audio mixer to readjust the offset and rid the program of lip sync errors. That turned out to be a major workflow burden, because you needed a warm body to “ride gain,” so to speak. Embedded audio solved much of the problem, and unless the local station breaks away to separate A/V signals, viewers seldom see or hear the error.

Digital pesticide

Many tools can measure and correct A/V sync error offline, but what is really needed is an unobtrusive way to manage it on live signal paths. Several solutions are available; let’s focus on two: fingerprinting and watermarking.

With watermarking, an encoder analyzes the envelope of the audio signal and from it generates a watermark that embeds timing information into the corresponding video. At a downstream point, the video and audio are analyzed, the watermark is decoded, and a measure of any A/V sync error introduced along the way can be produced. For that reason, the encoding must be done at a point with known (preferably ideal) A/V sync. A key point to keep in mind is that watermarking alters the image, although the watermark may or may not be visible. Think of watermarking as a video tattoo. If the tattoo is large and on your forehead, everyone can see it. If, on the other hand, the tattoo is small and below your belt line, few will notice.
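To make the watermark idea concrete, here is a deliberately simplified Python toy, not how any commercial product works. For each video frame it reduces the audio envelope to one bit (did the level rise since the previous frame?) and writes that bit into the least significant bit of one pixel. Downstream, it compares the extracted bits with bits recomputed from the received audio to find the frame offset. The 1600 samples-per-frame figure (48kHz at an assumed 30 fps), the one-bit scheme and all function names are assumptions for illustration.

```python
import math
import random

# Assumption for this toy: 48 kHz audio and exactly 30 fps video.
SAMPLES_PER_FRAME = 48_000 // 30


def envelope(audio, spf=SAMPLES_PER_FRAME):
    """RMS level of the audio during each video-frame period."""
    frames = len(audio) // spf
    return [math.sqrt(sum(s * s for s in audio[i * spf:(i + 1) * spf]) / spf)
            for i in range(frames)]


def envelope_bits(env):
    """One bit per frame: 1 if the level rose since the previous frame, else 0."""
    return [0] + [1 if cur > prev else 0 for prev, cur in zip(env, env[1:])]


def embed(frames, bits):
    """Encoder: 'tattoo' each frame by writing its bit into the LSB of pixel 0."""
    marked = []
    for frame, bit in zip(frames, bits):
        pixels = list(frame)
        pixels[0] = (pixels[0] & ~1) | bit
        marked.append(pixels)
    return marked


def extract(frames):
    """Decoder: read the watermark bit back out of each frame."""
    return [frame[0] & 1 for frame in frames]


def estimate_offset(video_bits, audio_bits, max_lag=10):
    """Frame lag that best aligns the watermark with the received audio.

    Positive result = audio early: audio bit i matches video bit i + lag.
    """
    best_lag, best_score = 0, -1.0
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(video_bits[i + lag], audio_bits[i])
                 for i in range(len(audio_bits)) if 0 <= i + lag < len(video_bits)]
        if not pairs:
            continue
        score = sum(v == a for v, a in pairs) / len(pairs)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Encode at a point where A/V sync is known to be correct...
rng = random.Random(1)
n_frames = 120
levels = [rng.uniform(0.1, 1.0) for _ in range(n_frames)]
audio = [rng.gauss(0, level) for level in levels for _ in range(SAMPLES_PER_FRAME)]
video = [[rng.randrange(256) for _ in range(16)] for _ in range(n_frames)]
marked = embed(video, envelope_bits(envelope(audio)))

# ...then simulate a path that makes the audio arrive 2 frames early.
received_audio = audio[2 * SAMPLES_PER_FRAME:]
measured = estimate_offset(extract(marked), envelope_bits(envelope(received_audio)))
print(f"Measured offset: {measured:+d} frames (positive = audio early)")
```

Here the tattoo is a single pixel’s least significant bit, about as small as it gets. A practical watermark must also survive compression and scaling, which this sketch does not attempt.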


Fingerprints, on the other hand, do not alter the image. A fingerprint can be derived from audio or video data. To generate a video fingerprint, the video is decoded and an algorithm that examines specific characteristics of the picture is applied, producing a numeric value. A fingerprint can be based on a single frame or a group of frames.
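As a concrete but hypothetical example of such an algorithm, the Python sketch below reduces a frame of luma samples to a 4-bit number. It splits the frame into a 2x2 grid, and each cell contributes a 1 if its average brightness is above the frame average. The function reads the picture but never modifies it. A group-of-frames fingerprint simply concatenates the per-frame values. The grid scheme and function names are assumptions; commercial fingerprints use their own, more robust features.

```python
from typing import List, Sequence

Frame = List[List[int]]  # rows of 8-bit luma samples (hypothetical representation)


def frame_fingerprint(frame: Frame, grid: int = 2) -> int:
    """One bit per grid cell, set when the cell's mean luma exceeds the frame mean."""
    h, w = len(frame), len(frame[0])
    overall = sum(map(sum, frame)) / (h * w)
    value = 0
    for gy in range(grid):
        for gx in range(grid):
            rows = frame[gy * h // grid:(gy + 1) * h // grid]
            cell = [px for row in rows for px in row[gx * w // grid:(gx + 1) * w // grid]]
            bit = 1 if sum(cell) / len(cell) > overall else 0
            value = (value << 1) | bit
    return value


def group_fingerprint(frames: Sequence[Frame], grid: int = 2) -> int:
    """Concatenate the single-frame fingerprints of a group of frames."""
    bits_per_frame = grid * grid
    value = 0
    for frame in frames:
        value = (value << bits_per_frame) | frame_fingerprint(frame, grid)
    return value


# Two tiny 4x4 test frames: bright top-left corner, then bright bottom-right corner.
top_left = [[200, 200, 50, 50], [200, 200, 50, 50], [50, 50, 50, 50], [50, 50, 50, 50]]
bottom_right = [[50, 50, 50, 50], [50, 50, 50, 50], [50, 50, 200, 200], [50, 50, 200, 200]]
print(frame_fingerprint(top_left), frame_fingerprint(bottom_right),
      group_fingerprint([top_left, bottom_right]))
```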

The fingerprint can be packaged as metadata and stored with content as files, streamed concurrently with the content, or even sent over an entirely separate path. Downstream, a similar fingerprint can be generated, and a delay processor can use the two fingerprints to regenerate an A/V signal with the original (correct) timing relationship.
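One way to picture the delay processor’s job, sketched in Python under simplifying assumptions: suppose per-frame video and audio fingerprints were recorded at a point where sync was correct, and the same fingerprints are recomputed downstream and match exactly. Searching for the delay that best lines up each received sequence with its reference gives the video and audio path delays, and their difference is the A/V offset to correct. Exact matching, whole-frame resolution and all names here are assumptions for illustration, not a description of any specific product.

```python
import random
from typing import Sequence


def best_delay(reference: Sequence[int], received: Sequence[int], max_delay: int = 15) -> int:
    """Delay in frames such that received[i] best matches reference[i - delay]."""
    best, best_score = 0, -1.0
    for d in range(max_delay + 1):
        pairs = [(received[i], reference[i - d])
                 for i in range(d, len(received)) if i - d < len(reference)]
        if not pairs:
            continue
        score = sum(a == b for a, b in pairs) / len(pairs)
        if score > best_score:
            best, best_score = d, score
    return best


def av_offset_frames(ref_video, ref_audio, rx_video, rx_audio, max_delay=15):
    """Net A/V offset in frames; positive = audio early (video delayed more)."""
    return (best_delay(ref_video, rx_video, max_delay)
            - best_delay(ref_audio, rx_audio, max_delay))


# Reference fingerprints captured where sync was correct (random stand-ins here).
rng = random.Random(7)
ref_video = [rng.randrange(16) for _ in range(200)]
ref_audio = [rng.randrange(16) for _ in range(200)]

# Simulated path: video delayed 5 frames, audio 1 frame; -1 marks "no signal yet".
rx_video = [-1] * 5 + ref_video
rx_audio = [-1] * 1 + ref_audio

offset = av_offset_frames(ref_video, ref_audio, rx_video, rx_audio)
if offset > 0:
    print(f"Audio is {offset} frames early: delay the audio by {offset} frames")
elif offset < 0:
    print(f"Audio is {-offset} frames late: delay the video by {-offset} frames")
else:
    print("Audio and video are in sync")
```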

Courtesy Miranda.

Table 1 summarizes key differences between watermarks and fingerprints.

Additional information on A/V timing, as well as fingerprinting and watermarking, can be found here and here.