Predicting Disk Drive Failures

In early 2003, a Berkeley research study estimated that more than 90 percent of all new information produced in the world is stored on magnetic media, with most of that data retained on spinning disks. With the volume of stored data growing exponentially, everyone who is rightly concerned about the integrity of their own data, and about the media on which that data is retained, should be prepared for the inevitable.

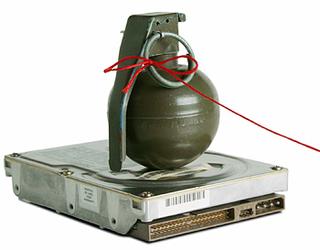

Hard drives are mechanical devices and as such will eventually fail. But what constitutes the "failure" of a hard disk drive? When should you replace a drive? And is there sufficient information to predict the imminent failure of a drive, so that you can prevent disaster before the captured data is no longer recoverable?

FAILURE PATTERNS

One definition is that a drive is considered to have failed if it was replaced as part of a repair procedure; this excludes drives that were replaced as part of an upgrade. Regardless, from an end user's perspective, a defective drive is one that misbehaves in a serious or consistent enough manner in the user's specific deployment scenario that it is no longer suitable for service. A failure may not be a huge issue if the entire storage system is properly protected, through RAID or complete mirroring, because in such a situation the chance of a complete loss of data is quite remote.

As for what constitutes a drive failure, there is very little published on the failure patterns of hard disk drives. When selecting a drive, we should look at measures of the drive's quality and reliability, but we often rely only on the predicted MTBF (mean time between failures) or the published life expectancy, stated in hours, provided by the drive manufacturer. MTBF is meant to represent the average amount of time that will pass between random failures on a drive of a given type, and ranges from 300,000 to 1.2 million hours for many of today's drives. This number is only part of the equation.
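To put the MTBF range above in more familiar terms, here is a minimal sketch that converts an MTBF figure into an annualized failure rate. It assumes a constant failure rate (the exponential model) and continuous 24x7 operation; both are simplifying assumptions, and the function names are illustrative rather than taken from any manufacturer's method.

```python
import math

HOURS_PER_YEAR = 8760  # 24 hours x 365 days of continuous operation


def annualized_failure_rate(mtbf_hours: float) -> float:
    """Probability that one drive fails within a year, assuming a
    constant failure rate (exponential model). This is an assumption,
    not a manufacturer formula."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def expected_failures_per_year(mtbf_hours: float, drive_count: int) -> float:
    """Average number of failures per year across a population of drives."""
    return drive_count * HOURS_PER_YEAR / mtbf_hours


# The two ends of the MTBF range quoted in the text.
for mtbf in (300_000, 1_200_000):
    afr = annualized_failure_rate(mtbf)
    per_hundred = expected_failures_per_year(mtbf, 100)
    print(f"MTBF {mtbf:>9,} h: AFR {afr:.2%}, "
          f"about {per_hundred:.2f} failures/year per 100 drives")
```

Under these assumptions the range works out to roughly 3 percent per year at 300,000 hours and under 1 percent per year at 1.2 million hours. An MTBF of a million hours therefore describes a failure rate across a large population of drives, not the expected lifetime of any single unit.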

Many of the quoted statistics on life expectancy are based not on empirical data (i.e., information obtained by means of observation, experience or experiment), but on data that is either derived from relatively modest-sized field studies or extrapolated from laboratory "accelerated aging" experiments. As a benchmark for a typical model of disk drive, the probability of failure is less than 1 percent per year (per a University of California San Diego report in 2001), and this is getting better every year.

SMART SIGNALING

Since 1994, hard drive manufacturers have been building self-monitoring technologies into their products, aimed at predicting failures early enough to allow users to back up their data. Referred to as SMART (Self-Monitoring, Analysis and Reporting Technology), its output is a 1-bit signal that can be read by operating systems and third-party software. SMART uses attributes collected during normal operation or during offline tests to set a failure prediction flag.

Each drive manufacturer develops and uses its own set of attributes and algorithms for setting this flag. Some of the attributes that make up the SMART signaling include a count of the track-seek retries, any reported write faults, the reallocation of sectors, high (and low) temperatures and even head fly heights.

Each of the fault attributes is collected and stored in the hard drive's controller memory, but generally is not reported until a certain threshold is reached based upon the manufacturer's algorithms (otherwise, end users would potentially be returning drives on a routine basis). When a collective combination of faults is reached, a SMART flag is triggered.
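The threshold idea described above can be sketched in a few lines. This is an illustration only: the actual algorithms are manufacturer-specific, and the rule used here (trip the flag when any attribute's normalized value falls to or below its threshold) is an assumption. The attribute names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Attribute:
    name: str
    value: int      # normalized health value; higher is healthier (assumed)
    threshold: int  # vendor-set trip point; 0 means "informational only" (assumed)


def tripped_attributes(attributes):
    """Names of attributes whose value has fallen to or below the threshold."""
    return [a.name for a in attributes
            if a.threshold > 0 and a.value <= a.threshold]


def smart_flag(attributes) -> bool:
    """Collapse all attributes into the single pass/fail bit."""
    return bool(tripped_attributes(attributes))


# Hypothetical readings for a healthy and a degrading drive.
healthy = [Attribute("seek_retries", 100, 30),
           Attribute("reallocated_sectors", 95, 36),
           Attribute("temperature", 60, 0)]
degrading = [Attribute("seek_retries", 100, 30),
             Attribute("reallocated_sectors", 30, 36),
             Attribute("temperature", 60, 0)]

print(smart_flag(healthy), tripped_attributes(healthy))
print(smart_flag(degrading), tripped_attributes(degrading))
```

The point of the sketch is that the host sees only the final bit unless it asks for the underlying attributes, which is why a drive can be accumulating faults long before the flag is raised.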

The obvious question is: how do you know whether the SMART status is actually being reported? Moreover, is it possible to monitor the health of your drives and set your own thresholds? A disk monitor utility is one possible answer, often employed as a background task in many high-end servers and workstations. This utility software monitors the health status of the hard drive, and is designed to predict drive failure so that action can be taken before disaster strikes.
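As a rough illustration of setting your own thresholds, the sketch below parses an attribute table laid out like the one printed by the `smartctl -A` command from the smartmontools package and compares raw values against user-chosen limits. The sample report is made-up illustrative data, not output from a real drive, and the limits in `USER_LIMITS` are hypothetical values, not recommendations.

```python
# Illustrative sample in the column layout of a `smartctl -A` attribute table.
SAMPLE_REPORT = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   036    Pre-fail  Always       -       12
194 Temperature_Celsius     0x0022   038   052   000    Old_age   Always       -       62
199 UDMA_CRC_Error_Count    0x003e   200   200   000    Old_age   Always       -       0
"""

# Hypothetical user-defined limits on raw values.
USER_LIMITS = {"Reallocated_Sector_Ct": 10, "Temperature_Celsius": 55}


def parse_raw_values(report: str) -> dict:
    """Map attribute name to its raw value for each table row."""
    raw = {}
    for line in report.splitlines():
        fields = line.split()
        # Data rows start with a numeric attribute ID and have 10+ columns.
        if len(fields) >= 10 and fields[0].isdigit():
            try:
                raw[fields[1]] = int(fields[9])
            except ValueError:
                continue  # skip raw values that are not plain integers
    return raw


def check_limits(raw: dict, limits: dict) -> list:
    """Return one alert message per attribute that exceeds its limit."""
    return [f"{name}: raw value {raw[name]} exceeds limit {limit}"
            for name, limit in limits.items()
            if name in raw and raw[name] > limit]


for alert in check_limits(parse_raw_values(SAMPLE_REPORT), USER_LIMITS):
    print("ALERT", alert)
```

In practice a monitoring task would capture the report from each drive on a schedule and keep the history, since a rising count often says more than any single reading.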

For example, if the drive reports that excessive error correction is required, this could be caused by drive media surface contamination or possibly a broken drive head. SMART can flag this issue to third-party software whereby an alert can then be raised before a problem gets worse. Most mission-critical video server platforms monitor these flags, tracking and compiling historical data on all the activities of the drives and their controllers, and then report them through their own diagnostic systems either locally or via a contractual service agreement or other external monitoring system. SMART utility software is available for installation on user platforms that do not employ their own monitoring and health applications.

Other hard drive metrics to be aware of are the manufacturer's stated drive service life and its minimum expected number of start/stop cycles. These specifications become applicable in different ways depending upon how the particular drives are implemented.

For modern hard disks, you can expect the service life to be about three to five years, a usually conservative number that is almost always greater than the manufacturer's stated warranty period. For planning purposes, especially for drives used in mission-critical high performance applications, you should expect to replace the drive well within its service life period. If the environmental characteristics where the drives operate are very good (factors such as proper cooling, good power regulation, and continued steady use without external or manual power cycling), then use the larger number. If you're in a mobile unit, such as an ENG/DSNG environment, use the smaller one.
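The planning rule above can be turned into a simple replacement schedule. This sketch uses the three-to-five-year range from the text; the 80 percent margin standing in for "well within" the service life is an assumption, as are the environment labels and function name.

```python
from datetime import date, timedelta

# Service life by operating environment, from the three-to-five-year range.
SERVICE_LIFE_YEARS = {"controlled": 5, "mobile": 3}

# Assumption: replace at 80 percent of service life to stay "well within" it.
REPLACE_AT_FRACTION = 0.8


def planned_replacement(installed: date, environment: str) -> date:
    """Date by which a drive should be scheduled for replacement."""
    years = SERVICE_LIFE_YEARS[environment]
    days = round(years * 365.25 * REPLACE_AT_FRACTION)
    return installed + timedelta(days=days)


installed = date(2020, 1, 1)
for env in ("controlled", "mobile"):
    print(env, planned_replacement(installed, env))
```

A facility with a different risk tolerance would adjust the margin; the value of the exercise is having a date on the calendar before the drive makes the decision for you.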

PREPARATION CAN DECREASE FALLOUT

An additional specification, stated relative to the drive's service life, is the minimum number of start/stop cycles that the drive is designed to handle throughout its service life period. This number is valuable when drives or workstations are powered down during non-work periods or when set to a power save mode.
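A quick calculation shows why this number matters when power-save modes are in use. The rated cycle count and the cycles-per-day figures below are hypothetical, chosen only to illustrate the arithmetic; check the drive's data sheet for the real rating.

```python
DAYS_PER_YEAR = 365


def years_until_cycles_spent(rated_cycles: int, cycles_per_day: float) -> float:
    """Years of use before the rated start/stop cycle count is consumed."""
    return rated_cycles / (cycles_per_day * DAYS_PER_YEAR)


RATED_CYCLES = 50_000  # hypothetical rating, not from any data sheet

# One power-down per night versus an aggressive power-save timer.
for label, per_day in (("nightly shutdown", 1), ("aggressive power save", 40)):
    years = years_until_cycles_spent(RATED_CYCLES, per_day)
    print(f"{label}: rated cycles last about {years:.1f} years")
```

With these illustrative numbers, a nightly shutdown never comes close to the rating, while a power-save timer that spins the drive down 40 times a day would consume it in under four years, which is inside the service life discussed earlier.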

As a counterpoint to the start/stop cycle spec, some drive manufacturers employ a different principle to protect the life of the heads and the media surface. So-called "head load/unload" technology, which recasts the start/stop cycle spec as a minimum number of load/unload cycles, is a technology from IBM whereby the heads are lifted up and away from the drive surface using a ramp located at the edge of the drive surface. Here the heads are forced up this ramp and parked before the drive spindle is allowed to spin down.

Obviously, drives employed in constant 24x7 operations, such as a video server platform or station automation system, will have an entirely different set of operational parameters than those of the less utilized graphics workstations or diagnostic PCs. Drives in these applications are more likely to already have many, if not all, of these parameters specifically tailored to the requirements of the systems they're deployed in. Nevertheless, knowing what the anticipated life expectancies are, and what might indicate a drive failure, should help in planning for the inevitable with the least amount of fallout.

Karl Paulsen is chief technology officer for AZCAR Technologies, a provider of digital media solutions and systems integration for the moving media industry. Karl is a SMPTE Fellow and an SBE Life Certified Professional Broadcast Engineer. Contact him at karl.paulsen@azcar.com.

Karl Paulsen is the CTO for Diversified, the global leader in media-related technologies, innovations and systems integration. Karl provides subject matter expertise and innovative visionary futures related to advanced networking and IP technologies, workflow design and assessment, media asset management, and storage technologies. Karl is a SMPTE Life Fellow, an SBE Life Member and Certified Professional Broadcast Engineer, and the author of hundreds of articles focused on industry advances in cloud, storage, workflow, and media technologies. For over 25 years he has continually featured topics in TV Tech magazine, penning the magazine's Storage and Media Technologies and its Cloudspotter's Journal columns.