Spectrum Repacking Looms for TV Broadcasters

In its National Broadband Plan made public last month, the FCC proposed to make 500 MHz available for broadband within the next decade, of which 300 MHz would be made available for mobile use within five years.

In the plan’s executive summary, the commission said it would initiate a rulemaking procedure to re-allocate 120 MHz from the broadcast television (TV) bands. As this is a policy document, it does not clarify which 120 MHz of TV spectrum should be re-allocated, or whether such re-allocations would be nationwide or on some other basis. The plan also recommended that the FCC take steps within the next 10 years to free up a new contiguous nationwide band for unlicensed use.

Before details of the plan were announced, the Consumer Electronics Association and CTIA-The Wireless Association proposed to the FCC a plan whereby broadcasters would not lose coverage or bear the costs of converting their transmission facilities. This plan would “painlessly” provide 100 MHz or more of spectrum for broadband at no net cost to broadcasters or over-the-air TV viewers.

So how would this work?

FALLBACK PLAN

The FCC Broadband Plan makes specific reference to this proposal in Chapter 5, p. 94. It is one of the commission’s possible fallback positions in case not enough broadcasters volunteer to return their channels to the FCC.

The financial part is quite basic: auctioning the reclaimed spectrum would generate billions of dollars for the U.S. Treasury. Part of the proceeds would be used to compensate broadcasters for their transition costs. Let’s leave the financial matters right there and look at the technical proposal.

It is very simple: repack the broadcast spectrum so that 120 MHz (20 channels) is surplus to the needs of incumbent broadcasters. (There is no suggestion of which channels would be “surplus.”) If the TV broadcast spectrum is compressed, many broadcasters would have to shift to another channel. This is called “repacking,” and it means reducing the distances between DTV transmitters on the same channel and on adjacent channels. Reducing the distances between co-channel transmitters would increase DTV-DTV interference if each transmitter continued to operate at its present power and antenna height. I do not suggest that repacking would be needed everywhere, but in densely populated areas it would be necessary.

The Consumer Electronics Association and CTIA-The Wireless Association do not advocate any reduction of broadcast coverage, so they propose that local networks of low-power transmitters, called Single Frequency Networks (SFNs), replace the existing topology of one high-power transmitter radiating a strong signal from an antenna at a great height. This is not to be confused with DTS (distributed transmission systems), which involves a main transmitter plus some gap-filling on-channel repeaters and is already approved by the FCC.

What is wrong with repacking and replacing the high-power transmitter and its tall tower with SFNs distributed throughout the present coverage area of stations where interference would otherwise result in the loss of coverage? Nothing, if it works. European broadcasters do use SFNs, but their modulation scheme is COFDM, which transmits the DTV signal on multiple carriers. In North America, we have a single-carrier DTV system based on 8-VSB modulation. Tens of millions of ATSC receivers have been sold to the public within the last few years, and none of these could receive a COFDM-modulated DTV signal.

DTV FIELD STRENGTH

Why would it not work with our ATSC signal? The only way an SFN can reduce co-channel interference compared to the present single high-power, high-tower scheme is to lower the DTV field strength throughout the present coverage area—but it cannot be lowered at the edge of coverage. In other words, the average DTV signal level margin (excess SNR above 15.2 dB) will decrease within the coverage area except near its perimeter.

So what is wrong with reducing signal level margins? In a paper published in the September 2001 issue of IEEE Transactions on Broadcasting, Drs. Oded Bendov and Yiyan Wu, and others, including myself, wrote that the minimum field strength the FCC used to predict DTV coverage was unrealistically low: 41 dBµV/m at 615 MHz, for example. The minimum field strength required for reliable DTV reception is about 10 dB higher than the FCC planners assumed. Our 2001 paper explained why, and it has never been refuted.
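To see where a figure like 41 dBµV/m comes from, the link budget can be sketched as below. This is only an illustration: apart from the 15.2 dB threshold SNR, the planning factors (thermal noise, noise figure, downlead loss, antenna gain and dipole factor) are not given in this column. They are assumed here to be the UHF values commonly quoted from FCC OET Bulletin 69, and the function name is hypothetical.

```python
# Sketch of a UHF DTV link budget at 615 MHz.
# All constants except REQUIRED_SNR_DB are ASSUMED planning factors
# (commonly quoted from FCC OET Bulletin 69), not values from this column.

THERMAL_NOISE_DBM = -106.2   # kTB noise power in a 6 MHz channel (assumed)
NOISE_FIGURE_DB = 7.0        # receiver noise figure, UHF (assumed)
REQUIRED_SNR_DB = 15.2       # threshold SNR cited in the column
DOWNLEAD_LOSS_DB = 4.0       # antenna downlead loss, UHF (assumed)
ANTENNA_GAIN_DB = 10.0       # receive antenna gain over a dipole (assumed)
DIPOLE_FACTOR_DB = -130.8    # dBm minus dBuV/m for a dipole at 615 MHz (assumed)


def min_field_strength_dbuv_per_m(extra_margin_db: float = 0.0) -> float:
    """Return the field strength (dBuV/m) needed to just reach threshold SNR."""
    # Noise power referred to the receiver input.
    receiver_noise_dbm = THERMAL_NOISE_DBM + NOISE_FIGURE_DB
    # Signal power needed at the receiver input.
    required_input_dbm = receiver_noise_dbm + REQUIRED_SNR_DB + extra_margin_db
    # Power a reference dipole would have to deliver, before gain and loss.
    required_dipole_dbm = required_input_dbm + DOWNLEAD_LOSS_DB - ANTENNA_GAIN_DB
    # Convert dipole power to field strength.
    return required_dipole_dbm - DIPOLE_FACTOR_DB


if __name__ == "__main__":
    planning = min_field_strength_dbuv_per_m()
    with_margin = min_field_strength_dbuv_per_m(extra_margin_db=10.0)
    print(f"Planning minimum: {planning:.1f} dBuV/m")
    print(f"With 10 dB more:  {with_margin:.1f} dBuV/m")
```

Under these assumptions the budget lands within a fraction of a dB of 41 dBµV/m, and adding the roughly 10 dB argued for above moves the requirement to about 51 dBµV/m.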

In June 2009, most broadcasters were surprised when they discovered that their DTV coverage was less than predicted. This was especially true for DTV channels in the high-VHF band, and less so in the UHF band. The difference arises because many UHF broadcasters had already maximized their DTV facilities, so their field strength at the edge of their NTSC coverage area was well above that required for the threshold SNR of 15.2 dB. VHF broadcasters had not maximized, because in many cases that would have resulted in co-channel or adjacent-channel interference.

If the signal level margin for most viewers is going to be decreased, DTV reception will suffer due to the increased interference. This interference is not due to other DTV signals. It will be self-inflicted due to multipath created by multiple transmitters operating on the same frequency throughout the same community.

In 1995, the Advisory Committee on Advanced Television Systems devised a test plan followed by the Advanced Television Test Center to test the prototype ATSC DTV system. Multipath testing was conducted with the DTV signal power at the receiver at –27.89 dBm. At this desired (D) signal level, receiver-generated noise (–99 dBm) is negligible. No sky noise was present within our laboratory, and no interference was present other than a single echo (the undesired signal, U).

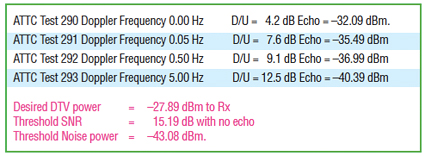

Fig. 1: Multipath Test Results at the ATTC in 1995

The data in Fig. 1 show that while an echo within the range of the receiver’s adaptive channel equalizer causes less interference than “white noise” of the same power, it still introduces a certain amount of noise. Test #290 shows that a static echo (zero Doppler frequency) is less harmful than “white noise” of the same power, although it does lower the SNR. An echo at –32.09 dBm prevents reception of a DTV signal of –27.89 dBm. White noise power of –27.89 dBm – 15.19 dB = –43.08 dBm would have done the same. So one static echo is about 11 dB less effective than white noise in jamming DTV reception (–32.09 dBm versus –43.08 dBm). This was due to the adaptive channel equalizer in the prototype receiver. Recent data suggest that receivers built in 2005 show the same sensitivity to echoes within the time range where the receiver’s adaptive channel equalizer is effective.

DYNAMIC ECHOES

Now for dynamic echoes. Here the Doppler frequency is greater than zero, and the threshold echo power is seen to decrease as the Doppler frequency increases from almost zero to 5 Hz. A 1.8 µs echo at –40.39 dBm is nearly as bad as white noise at –43.08 dBm. In other words, dynamic echoes are noise-like even when their delay is a small part of the delay range over which the adaptive channel equalizer “kills ghosts.” The equalizer doesn’t “kill” them; it only weakens them.
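The arithmetic behind these comparisons can be sketched in a few lines. The power levels are the ones quoted from the ATTC tests above; the function names are illustrative only.

```python
# Compare echo thresholds with the white-noise threshold from the ATTC tests.
# Power levels are those quoted in the column; the helper names are hypothetical.

DESIRED_DBM = -27.89          # desired (D) DTV signal power at the receiver
WHITE_NOISE_SNR_DB = 15.19    # threshold SNR against white noise
STATIC_ECHO_DBM = -32.09      # static echo power that stops reception
DYNAMIC_ECHO_DBM = -40.39     # 1.8 microsecond dynamic echo that stops reception


def white_noise_threshold_dbm(desired_dbm: float = DESIRED_DBM) -> float:
    """White-noise power that would just stop reception of the desired signal."""
    return desired_dbm - WHITE_NOISE_SNR_DB


def advantage_over_noise_db(echo_dbm: float) -> float:
    """How many dB stronger than threshold white noise the echo can be."""
    return echo_dbm - white_noise_threshold_dbm()


def desired_to_undesired_db(echo_dbm: float) -> float:
    """D/U ratio at the point where the echo stops reception."""
    return DESIRED_DBM - echo_dbm


if __name__ == "__main__":
    print(f"White-noise threshold: {white_noise_threshold_dbm():.2f} dBm")
    for label, echo in (("static", STATIC_ECHO_DBM), ("dynamic", DYNAMIC_ECHO_DBM)):
        print(
            f"{label} echo: D/U = {desired_to_undesired_db(echo):.2f} dB, "
            f"{advantage_over_noise_db(echo):.2f} dB above the noise threshold"
        )
```

The static echo can be roughly 11 dB stronger than threshold white noise before reception fails, while the dynamic echo gets less than 3 dB of help from the equalizer.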

It can be shown that at Channel 14, the dynamic echo from a vehicle moving at 70 mph towards or away from the receiver has a Doppler shift of ±98 Hz. For Channel 51 it is ±146 Hz, which is the maximum Doppler shift except for echoes from aircraft landing or departing.
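These figures can be reproduced with a short calculation. The sketch below assumes the echo is a reflection from the vehicle, so the shift is twice the one-way Doppler shift, and it assumes the figures were taken at the lower edge of Channel 14 (470 MHz) and the upper edge of Channel 51 (698 MHz). Neither assumption is stated in the column, and the function name is hypothetical.

```python
# Doppler shift of an echo reflected from a moving vehicle.
# ASSUMPTIONS (not stated in the column): the echo is a reflection, so the
# shift is doubled; band-edge frequencies are used for Channels 14 and 51.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
MPH_TO_M_PER_S = 0.44704


def echo_doppler_shift_hz(speed_mph: float, frequency_mhz: float) -> float:
    """Return the Doppler shift (Hz) of a signal reflected from a moving vehicle."""
    speed_m_per_s = speed_mph * MPH_TO_M_PER_S
    frequency_hz = frequency_mhz * 1e6
    # Factor of 2: the path length changes on both legs of the reflection.
    return 2.0 * speed_m_per_s * frequency_hz / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for channel, freq_mhz in ((14, 470.0), (51, 698.0)):
        shift = echo_doppler_shift_hz(70.0, freq_mhz)
        print(f"Channel {channel} ({freq_mhz:.0f} MHz): about {shift:.0f} Hz")
```

With those assumptions the results come out at about 98 Hz and 146 Hz, matching the figures quoted above. The sign depends on whether the vehicle is approaching or receding.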

What this means to broadcasters is that a dynamic echo from a moving vehicle can act like white noise. The adaptive channel equalizer cannot track the rapid spectrum changes caused by such dynamic echoes. In my next column, I will show more recent data comparing the static-echo and dynamic-echo rejection of DTV receivers from 2004 and 2005 (the information arrived too late for this issue). I will explain why COFDM modulation, as used in Europe and Asia, does permit SFNs, but our 8-VSB modulation does not. Stay tuned.

Charlie Rhodes is a consultant in the field of television broadcast technologies and planning. He can be reached via e-mail at cwr@bootit.com.