Examining the Evolution of Archives

Karl Paulsen

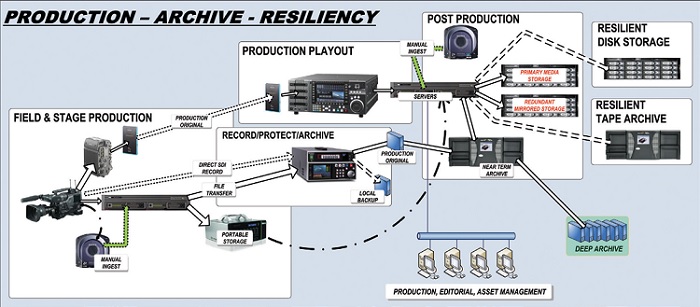

In the broadcast, media and entertainment space, the term “archive” carries a variety of meanings. Ever since media was first stored on physical carriers, what constitutes an archive, and how it is built, has continued to evolve. Once associated only with the long-term preservation of an asset, the archive is now shaped by the content, its context and usage, the environment, and even the organization with which the archive assets are associated.

Alternatively, users may capture encoded files to a server or, for RAW files, directly to an array of drives configured specifically to capture high-resolution images. These methods apply when capturing high-quality video as RGB (4:4:4) or as JPEG 2000 (J2K). Captured files are then replicated to LTO or shuttled to a storage repository, where a disk-based master and an LTO archive are created.

Each user broadens the definition, which leads to confusion over how storage technology, metadata, and even the applications that make the archive useful should be applied. For example, the Library of Congress (LoC) associates the term archive principally with the long-term preservation of assets registered into the copyright domain. The LoC’s enormous archive holds film in all its forms (nitrate, slides, 16mm, 35mm), video, audio, and even posters that promote copyrighted motion pictures.

Previously, the LoC managed assets in their original format. Each asset was catalogued and associated with metadata for search, retrieval and recovery. Today, assets are captured into a digital archive that extends their life well into the next 100 years. The LoC also attempts to keep at least one piece of the original equipment needed to recover legacy content, yet another “archive” unto itself.

LONG-TERM PRESERVATION

This physical preservation dilemma shapes how we approach the long-term preservation of both physical and electronic media. In principle, we must keep asking how to preserve the myriad formats being created and stored electronically on hard drives, optical discs, digital tape, and even that venerable catch-all, “the cloud.”

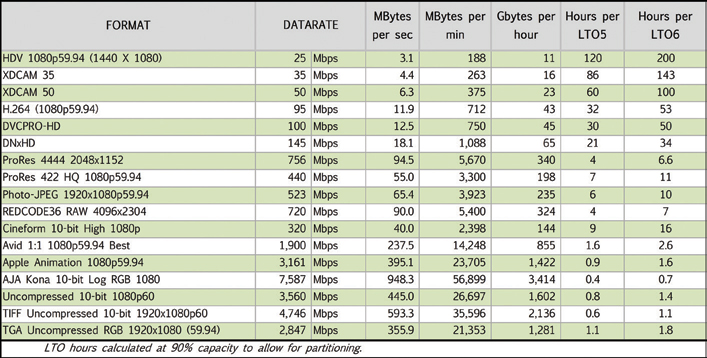

Table 1: Relative hours of archive storage capacity for LTO-5 and LTO-6 digital linear tape, based on common high-definition image formats ranging from HDV through motion picture production at 1K (1920 x 1080), 2K and 4K scanning resolutions.
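
The arithmetic behind a table like this is simple: native cartridge capacity in bits divided by the format's bit rate gives seconds of recording. The sketch below assumes LTO native (uncompressed) capacities of 1.5 TB for LTO-5 and 2.5 TB for LTO-6. The format bit rates are illustrative assumptions, not the figures used for Table 1, and the function names are hypothetical.

```python
# Sketch: recording hours that fit on one LTO cartridge at a given bit rate.
# Capacities are LTO native (uncompressed) figures. The format bit rates are
# illustrative assumptions, not the values used to build Table 1.

LTO_NATIVE_CAPACITY_BYTES = {
    "LTO-5": 1.5e12,  # 1.5 TB native
    "LTO-6": 2.5e12,  # 2.5 TB native
}


def hours_per_tape(capacity_bytes: float, bitrate_bps: float) -> float:
    """Hours of continuous recording that fit on one cartridge (capacity only)."""
    seconds = capacity_bytes * 8 / bitrate_bps
    return seconds / 3600


def uncompressed_bitrate(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Active-picture bit rate; ignores blanking, audio and container overhead."""
    return width * height * bits_per_pixel * fps


if __name__ == "__main__":
    formats = {
        "HDV 1080i (25 Mb/s)": 25e6,
        # 10-bit 4:2:2 averages 20 bits per pixel (10 luma + 10 shared chroma)
        "1080p60 10-bit 4:2:2 uncompressed": uncompressed_bitrate(1920, 1080, 20, 60),
    }
    for tape, capacity in LTO_NATIVE_CAPACITY_BYTES.items():
        for name, rate in formats.items():
            print(f"{tape} | {name}: {hours_per_tape(capacity, rate):.2f} h")
```

Under these assumptions an LTO-5 cartridge holds roughly 133 hours of HDV but only about 1.3 hours of uncompressed 1080p60. The sketch considers capacity alone; it does not check whether a drive's sustained transfer rate can keep up with the stream, and usable capacity is somewhat lower in practice.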

Today, digital media assets, including those now being received at the LoC, are preserved as files. Choosing a format, and determining how to preserve it, becomes increasingly complicated as video compression technologies multiply. Fortunately, today’s compression technologies deliver higher quality and better consistency. However, as we pack higher resolutions into lower bit rates, we must stay conscious of the other extreme: how do we retain the highest quality for selected assets and still allow them to be recovered years or decades from now?

As the sunset of videotape approaches, we turn to alternative media onto which we create low-bit-rate, highly compressed video archives. Data rates once reserved for high-bandwidth, high-capacity videotape or disk media can now be captured directly to linear digital tape (e.g., LTO), then transported and replicated with negligible bit-error issues. Accepting LTO as a medium for collecting digital assets lets the originals become “the archive” with little more than a registration process into a digital asset management system.
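
One common way to confirm that a replicated copy really is bit-exact is a fixity check: hash the source, copy it, re-read the copy, and compare digests. The sketch below assumes the destination is reachable as an ordinary file path (for example a disk target, or a tape mounted through LTFS). SHA-256 is an illustrative choice, not something the article specifies, and the function names are hypothetical.

```python
import hashlib
import shutil
from pathlib import Path

CHUNK_BYTES = 8 * 1024 * 1024  # hash in 8 MiB chunks so large media files never load whole


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read incrementally."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for block in iter(lambda: f.read(CHUNK_BYTES), b""):
            digest.update(block)
    return digest.hexdigest()


def replicate_with_fixity(src: Path, dst: Path) -> str:
    """Copy src to dst, re-read the copy, and fail if it is not bit-identical."""
    expected = sha256_of(src)
    shutil.copyfile(src, dst)
    actual = sha256_of(dst)
    if actual != expected:
        raise OSError(f"fixity mismatch copying {src} to {dst}")
    return expected  # caller can store this digest with the asset's DAM record
```

Returning the digest lets the registration step record it, so the same check can be repeated whenever the asset is migrated or restored years later.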

However, as with conventional videotape, storing original digital content on any linear tape medium raises issues. Continuous recording of high-resolution images at 1080p60 uncompressed and above is limited by the physical length of the linear media (see Table 1). Because LTO is limited in its ability to span a single file across multiple tapes, record times cannot extend beyond the physical limits of the LTO media. Users therefore return to conventional solutions, like those associated with videotape recording.

Instead of using a single linear tape, field recordings are made to an alternating series of two or more digital linear tape decks, with each new tape starting before the previous one reaches the end of its capacity so that the recordings overlap. This can double the recording hardware required and assumes someone is available to change the tapes.
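
The alternating-deck scheme can be planned ahead of a shoot. In this sketch, which assumes a fixed record time per tape and an overlap length chosen by the crew (the article prescribes neither), each new tape starts on the other deck shortly before the current tape fills. All names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Segment:
    deck: str        # recorder holding this tape
    tape: int        # sequential tape number
    start_s: float   # session time when this tape starts recording
    end_s: float     # session time when this tape stops


def plan_alternating(total_s: float, tape_s: float, overlap_s: float,
                     decks: Sequence[str] = ("A", "B")) -> List[Segment]:
    """Assign tapes to alternating decks; each new tape starts overlap_s before the previous one fills."""
    if total_s <= 0:
        raise ValueError("session length must be positive")
    if not 0 <= overlap_s < tape_s:
        raise ValueError("overlap must be non-negative and shorter than one tape")
    segments: List[Segment] = []
    start, n = 0.0, 0
    while True:
        end = min(start + tape_s, total_s)
        segments.append(Segment(decks[n % len(decks)], n + 1, start, end))
        if end >= total_s:
            return segments
        start = end - overlap_s  # next deck rolls before this tape runs out
        n += 1
```

For a 10,000-second session on tapes holding 4,800 seconds with a 60-second overlap, the plan uses three tapes (A, B, A); each deck then has a little under one tape's duration to be reloaded before it is needed again.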

Metadata (e.g., timecode or time stamps) is then used to perform a virtual splice. This is managed as an offline process, but it effectively creates a spanned set of data across two or more physical tapes.
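
A virtual splice can be expressed as an edit list built from each tape's timecode range. This sketch assumes continuous, non-drop-frame, same-day timecode shared by all decks (for example, jam-synced time of day) at an integer frame rate. It cuts to the next tape exactly where the previous one ends and flags any gap; the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def tc_to_frames(tc: str, fps: int) -> int:
    """Convert non-drop-frame 'HH:MM:SS:FF' timecode to an absolute frame count."""
    hh, mm, ss, ff = (int(p) for p in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


def virtual_splice(recordings: Sequence[Tuple[str, str, str]], fps: int) -> List[Tuple[str, int, int]]:
    """recordings: (tape_id, start_tc, end_tc) with end exclusive.

    Returns (tape_id, first_frame, end_frame_exclusive) entries that cover the
    session once, switching tapes where the previous recording ends.
    """
    spans = sorted(
        ((tape, tc_to_frames(a, fps), tc_to_frames(b, fps)) for tape, a, b in recordings),
        key=lambda r: r[1],
    )
    edl: List[Tuple[str, int, int]] = []
    cursor: Optional[int] = None  # first frame not yet covered
    for tape, start, end in spans:
        if cursor is not None and start > cursor:
            raise ValueError(f"gap before {tape}: frames {cursor}-{start} missing")
        begin = start if cursor is None else cursor
        if end > begin:  # skip a tape whose content is already fully covered
            edl.append((tape, begin, end))
            cursor = end
    return edl
```

Cutting at the end of the earlier tape is only one policy; cutting at the midpoint of the overlap, or at a scene boundary, would work equally well with the same timecode data.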

Fig. 1: Representations of multiple storage systems and media transport paths typically used in field origination and post-production that are supported through resiliency, redundancy and archive.

Non-volatile memory (NVM), such as flash memory, is making headway into video production. However, it still requires specialized hardware, sometimes configured with integral disk drives that essentially mimic other external HDD methods. These recorders can export their files on removable NVM for transport, or can use it to temporarily back up and/or extend storage beyond the recorder’s integral HDD capacity in an emergency. Nonetheless, this storage form factor still requires that content be transferred to another medium (hard drives or digital tape) for post-production and archive purposes.

THE RESILIENT ARCHIVE

Beyond the acquisition and ingest processes, the term archive takes on yet another meaning.

Organizations are often charged with protecting their assets in the near term (during production and post-production), the mid-term (as finished versions are prepared for distribution or broadcast), or the long term (as original content or program masters).

Enterprise policies establish what is retained; how and in what format it is retained (i.e., the video compression format and the physical media format); for how long (sometimes driven by legal or other corporate directives); whether encryption and watermarking are required; and where it is retained (onsite, off-site, replicated locally, placed in the cloud, etc.).
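
The policy dimensions listed above map naturally onto a small record type. The sketch below is a hypothetical data structure, not a schema from any particular asset management product; the field names and example values are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import Optional, Tuple


class Term(Enum):
    NEAR = "near"  # during production/post-production
    MID = "mid"    # finished versions prepared for distribution or broadcast
    LONG = "long"  # original content or program masters


@dataclass(frozen=True)
class RetentionPolicy:
    term: Term
    video_format: str            # compression format, e.g. "J2K"
    media_format: str            # physical media format, e.g. "LTO-6"
    retain_days: Optional[int]   # None means keep indefinitely
    encrypt: bool
    watermark: bool
    locations: Tuple[str, ...]   # e.g. ("onsite", "offsite", "cloud")

    def expires_on(self, ingested: date) -> Optional[date]:
        """Date after which the asset may be released, or None if never."""
        if self.retain_days is None:
            return None
        return ingested + timedelta(days=self.retain_days)


masters = RetentionPolicy(Term.LONG, "J2K", "LTO-6", None, True, True, ("onsite", "offsite"))
promo = RetentionPolicy(Term.MID, "J2K", "LTO-6", 365, False, True, ("onsite", "cloud"))
```

Making the policy immutable (`frozen=True`) reflects the idea that retention rules are set by enterprise directive rather than edited per asset.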

By contrast, “resiliency” is yet another form of archive, one that enables an organization to keep working should a service fail due to unforeseen circumstances or be taken offline for scheduled maintenance. Methodologies for resiliency, which is sometimes equated with disaster recovery (DR), vary as widely as archive methodologies. For archives and DR, however, recovery is assumed to be less time-sensitive than the availability of an asset stored in a resiliency structure.

Resiliency usually occurs at the tier one or tier two levels (see Fig. 1). It is often structured as a “mirror,” i.e., an identical image in the original format stored on an identical “B” system of hardware. The hardware may be a replica of a complete end-to-end post-production editing system consisting of content/production asset management, high-performance storage and an archive engine. A less intensive solution is to replicate all working content onto a redundant storage platform with the same storage performance as the primary “A” system, but without the editing capabilities. In the latter case, all content is continually written to both the A and B storage systems but is managed by only a single production asset management (PAM) system. While this methodology protects the content, it provides no resiliency for the editing process itself, regardless of how many copies of the content exist, and it is not intended to act as a long-term archive.
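
The “less intensive” A/B model can be sketched as a store that writes every asset to both storage roots while a single catalog, standing in for the PAM, records it once. This is a hypothetical illustration with local directories in place of real storage systems. Note that the catalog itself is a single point of failure, which mirrors the article's point that duplicated content does not make the editing process resilient.

```python
from pathlib import Path


class MirroredStore:
    """Hypothetical sketch: every write lands on both the A and B storage roots,
    while one catalog (standing in for the PAM) tracks each asset once."""

    def __init__(self, a_root: Path, b_root: Path):
        self.roots = (Path(a_root), Path(b_root))
        self.catalog = {}  # asset_id -> relative path; lost if this single manager fails

    def write(self, asset_id: str, rel_path: str, data: bytes) -> None:
        for root in self.roots:
            target = root / rel_path
            target.parent.mkdir(parents=True, exist_ok=True)
            target.write_bytes(data)
        self.catalog[asset_id] = rel_path  # registered only after both copies exist

    def read(self, asset_id: str) -> bytes:
        rel = self.catalog[asset_id]
        for root in self.roots:  # try A first, fall back to B if A's copy is unavailable
            try:
                return (root / rel).read_bytes()
            except OSError:
                continue
        raise FileNotFoundError(rel)
```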

One last model is the hybrid archive, in which both local and cloud-based storage may be employed. For any number of reasons, pro and con, relying entirely on the cloud for short- or long-term storage (including preservation) of high-quality, high-value moving image assets is still in flux. Thus many organizations, while looking to the cloud (as a commercial storage accessory) for a portion of their archive solution, still depend on a local archive and resiliency solution as their primary means of ensuring business continuity.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his current book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.