Embedded Audio in a High-Def Video Signal

This month we'll wind up our series on the structure of AES audio with a discussion of embedded audio in a SMPTE 292M high-definition signal.

The SMPTE standard 299M-2004 describes how 24-bit digital audio and its associated control information are formatted to fit into the ancillary data space of a SMPTE 292M serial digital video bitstream.

AUDIO DATA PACKET

With HD video, the audio data packets are multiplexed into the horizontal ancillary (HANC) data space of the color-difference (Cb/Cr) data stream, in the first or second line following the line in which the audio sample occurs. After a switching point, the standard requires the audio data packet to be delayed by one line to prevent possible data corruption.

When there are multiple audio data packets in a single HANC block, these packets must be contiguous with each other.

Unlike SD embedded audio, where the AES aux bits had to be stripped from the AES signal and placed in their own HANC packet and the AES parity bit wasn't used at all, HD embedded audio carries the complete AES subframe content (aux bits, audio sample word bits, and the V, U, C, and P bits) in a single HANC packet, with a Z flag standing in for the block-start preamble. (Recall that V, U, C, and P stand for the validity, user data, audio channel status, and parity bits, respectively.)

Formatting for embedded audio in the HD HANC space follows the SMPTE 291M standard for television, "Ancillary Data Packet and Space Formatting."
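SMPTE 291M formatting comes down to a few bit-level rules. The Python sketch below shows two of them as we understand the standard: each 8-bit DID, DBN, DC, or user data value travels in a 10-bit word whose bit 8 is even parity over bits 0-7 and whose bit 9 is the inverse of bit 8, and the checksum is the 9-bit sum of bits 0-8 of the words from DID through the last user data word, again with bit 9 set to the inverse of bit 8. The function names are hypothetical; check SMPTE 291M before relying on the details.

```python
# Ancillary data flag for component digital video (three words).
ADF = (0x000, 0x3FF, 0x3FF)


def format_word(value: int) -> int:
    """Put an 8-bit value in a 10-bit ANC word: b8 = even parity of b0-b7, b9 = NOT b8."""
    if not 0 <= value <= 0xFF:
        raise ValueError("value must fit in 8 bits")
    b8 = bin(value).count("1") & 1  # 1 when b0-b7 hold an odd number of ones
    return ((b8 ^ 1) << 9) | (b8 << 8) | value


def checksum(words: list[int]) -> int:
    """CS word: 9-bit sum of b0-b8 over DID through the last UDW, with b9 = NOT b8."""
    total = sum(w & 0x1FF for w in words) & 0x1FF
    b8 = (total >> 8) & 1
    return ((b8 ^ 1) << 9) | total


print(hex(format_word(0xE7)))  # 0x2e7
```

The parity and inverted bit 9 keep the reserved values 000h-003h and 3FCh-3FFh (used by timing reference signals and the ADF) out of the data words.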

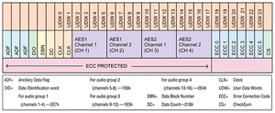

Fig. 1 shows the audio data packet structure. The ancillary data flag (ADF), data identification (DID), data block number (DBN), data count (DC), user data words (UDW), and checksum (CS) are required per SMPTE 291M.


Fig. 1: Structure of an audio data packet. Reference: SMPTE 299M

The AES audio data for one AES channel (one-half of an AES pair) fits into four user data words. Four AES channels (two AES pairs) fit into one HANC audio data packet. These four audio channels comprise one group. Up to four groups can be accommodated for a total of 16 digital audio channels (eight AES pairs). Each group is identified by its unique data identification word (DID).
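The grouping arithmetic is easy to get wrong when patching channels. This sketch maps an embedded channel number (1 to 16) to its group, its position within the group's packet, its AES pair, and the user data words that carry it, assuming each channel occupies four consecutive user data words starting at UDW2 (so channel 3 of a group starts at UDW10). The DID values in `AUDIO_DATA_DID` are the HD audio data packet DIDs as we recall them from SMPTE 299M, including parity bits; treat them as assumptions to verify. `locate_channel` is a hypothetical helper.

```python
# HD audio data packet DIDs for groups 1-4 as we recall them from SMPTE 299M
# (10-bit values including parity bits). Assumption: verify against the standard.
AUDIO_DATA_DID = {1: 0x2E7, 2: 0x1E6, 3: 0x1E5, 4: 0x2E4}


def locate_channel(channel: int) -> dict:
    """Map an embedded channel number (1-16) to group, slot, AES pair, DID, and UDWs."""
    if not 1 <= channel <= 16:
        raise ValueError("HD embedded audio carries channels 1 to 16")
    group = (channel - 1) // 4 + 1       # four channels per group
    position = (channel - 1) % 4 + 1     # CH1..CH4 within the group's packet
    aes_pair = (channel - 1) // 2 + 1    # two channels per AES pair
    first_udw = 2 + 4 * (position - 1)   # CH1 starts at UDW2, CH3 at UDW10
    return {
        "group": group,
        "position": position,
        "aes_pair": aes_pair,
        "did": AUDIO_DATA_DID[group],
        "udw": list(range(first_udw, first_udw + 4)),
    }


print(locate_channel(11))
```

Channel 11, for example, comes out as the third channel of group 3, in AES pair 6, carried in UDW10 through UDW13.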

The SMPTE standard stipulates that all audio channels in a given group must have the same sample rate and sampling phase, and must be either all synchronous or all asynchronous. Also, audio data for every channel in a group is always transmitted, even if fewer than four channels are active. For inactive channels, the standard states that the audio data, V, U, C, and P bits are set to zero.
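The group rules above can be expressed as a check performed when a packet is assembled. The `ChannelSample` model and `build_group` function are hypothetical simplifications (sampling phase is not modeled): the sketch refuses to mix sample rates or sync modes within a group, and it fills any inactive channel with zeroed audio, V, U, C, and P bits so that all four channel slots are always sent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelSample:
    """Simplified, hypothetical model of one channel's slot in a group."""
    sample: int = 0          # 24-bit audio sample word (aux + audio bits)
    v: int = 0               # validity
    u: int = 0               # user data
    c: int = 0               # channel status
    p: int = 0               # parity
    rate_hz: int = 48000
    synchronous: bool = True


def build_group(active: dict[int, ChannelSample]) -> list[ChannelSample]:
    """Return CH1-CH4 for one group, zero-filling channels that are not active."""
    if not active:
        raise ValueError("a group with no active channels is not modeled here")
    if any(ch not in range(1, 5) for ch in active):
        raise ValueError("channel positions within a group are 1 to 4")
    if len({s.rate_hz for s in active.values()}) > 1:
        raise ValueError("all channels in a group must share one sample rate")
    if len({s.synchronous for s in active.values()}) > 1:
        raise ValueError("a group must be all synchronous or all asynchronous")
    ref = next(iter(active.values()))
    # Inactive slots: audio, V, U, C, and P all zero (the dataclass defaults).
    silent = ChannelSample(rate_hz=ref.rate_hz, synchronous=ref.synchronous)
    return [active.get(ch, silent) for ch in range(1, 5)]


group = build_group({1: ChannelSample(sample=0x123456, c=1),
                     2: ChannelSample(sample=0x0ABCDE)})
print([hex(s.sample) for s in group])  # ['0x123456', '0xabcde', '0x0', '0x0']
```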

Refer to SMPTE 299M for the detailed breakout of the UDW bits used for AES audio. We'll just note here that bit 3 of UDW2 (audio channels 1 and 2 of a group) and UDW10 (audio channels 3 and 4 of a group) is used for the status of the Z flag, which corresponds to the start of an AES block.
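An AES3 channel status block spans 192 frames, and the Z flag marks the frame that begins each block. The minimal sketch below derives the Z value for a run of frames, assuming the embedder already knows the index of one block-start frame from the incoming AES preambles; in a real packet, that value sits in bit 3 of UDW2 or UDW10 as noted above. `z_flags` is a hypothetical helper.

```python
AES_BLOCK_FRAMES = 192  # one AES3 channel status block spans 192 frames


def z_flags(first_frame: int, count: int, block_start: int = 0) -> list[int]:
    """Z flag (1 or 0) for frames first_frame .. first_frame + count - 1.

    block_start is the index of any frame already known to begin an AES block.
    """
    return [
        1 if (first_frame + i - block_start) % AES_BLOCK_FRAMES == 0 else 0
        for i in range(count)
    ]


print(z_flags(190, 5))  # [0, 0, 1, 0, 0]
```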

Error correction code words ECC0 through ECC5 are computed over, and used to correct or detect errors in, the preceding 24 words (from the first ADF word through UDW17).
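To see where the 24-word figure comes from, the sketch lists the words of an audio data packet in order: three ADF words, DID, DBN, DC, UDW0 through UDW17, then ECC0 through ECC5 and the checksum. UDW0 and UDW1 precede the channel data (UDW2 through UDW17); per our reading of SMPTE 299M they carry audio clock phase information, but that detail isn't needed here. The list is a simplified illustration, not an encoder, and `audio_data_packet_layout` is a hypothetical name.

```python
def audio_data_packet_layout() -> list[str]:
    """Word order of an HD audio data packet (simplified, names only)."""
    words = ["ADF0", "ADF1", "ADF2", "DID", "DBN", "DC"]
    words += [f"UDW{i}" for i in range(18)]   # UDW0-UDW17
    words += [f"ECC{i}" for i in range(6)]    # ECC0-ECC5
    words.append("CS")                        # checksum closes the packet
    return words


layout = audio_data_packet_layout()
ecc_span = layout[: layout.index("ECC0")]     # words protected by the ECC
print(len(ecc_span), len(layout))             # 24 31
```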

SMPTE 299M doesn't restrict the AES-formatted data to PCM digital audio. The standard provides an appendix with guidelines for handling non-PCM audio formatted per SMPTE 337-2008, "Format for Non-PCM Audio and Data in AES3 Serial Digital Audio Interface." The AES channel status indicates whether PCM audio or SMPTE 337 data is carried in an AES bitstream.
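The PCM/non-PCM indication lives in byte 0 of the AES3 channel status. A minimal sketch, assuming byte 0 is stored with channel status bit 0 as the least significant bit: bit 0 flags professional use, and bit 1 is 0 for linear PCM audio and 1 for other content such as SMPTE 337 data. The function names are hypothetical.

```python
def is_professional(cs_byte0: int) -> bool:
    """Channel status bit 0: 1 = professional use, 0 = consumer."""
    return bool(cs_byte0 & 0b01)


def is_non_pcm(cs_byte0: int) -> bool:
    """Channel status bit 1: 0 = linear PCM audio, 1 = other data (e.g. SMPTE 337)."""
    return bool(cs_byte0 & 0b10)


byte0 = 0b0000_0011  # example value: professional, non-PCM
print(is_professional(byte0), is_non_pcm(byte0))  # True True
```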

The guidelines recommend that SMPTE 337M data be sampled synchronously at 48 kHz and that no sample rate conversion be applied. Sample rate conversion, like other types of PCM digital audio processing (gain changes, equalization, cross-fading, sample drops and repeats), alters the data so that it can no longer be recovered properly.
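Why processing breaks non-PCM data is easy to demonstrate. A SMPTE 337 burst begins with the sync words Pa and Pb (0xF872 and 0x4E1F in 16-bit mode), and a decoder looks for that exact bit pattern. The sketch below is a simplified model that considers only a 16-bit stream and ignores the Pc/Pd burst-info and length words: it treats the words as PCM, applies a 10 percent gain reduction, and shows that the sync words can no longer be found. All helper names are hypothetical.

```python
# SMPTE 337 burst preamble sync words, 16-bit mode.
PA_16, PB_16 = 0xF872, 0x4E1F


def to_signed16(word: int) -> int:
    """Interpret a 16-bit word as a two's-complement PCM sample."""
    return word - 0x10000 if word & 0x8000 else word


def apply_gain(words: list[int], gain: float) -> list[int]:
    """Treat 16-bit words as PCM and scale them, as a gain stage would (with clipping)."""
    out = []
    for w in words:
        scaled = round(to_signed16(w) * gain)
        scaled = max(-0x8000, min(0x7FFF, scaled))
        out.append(scaled & 0xFFFF)
    return out


def find_sync(words: list[int]) -> int:
    """Index of the first Pa, Pb pair, or -1 if the burst sync is not found."""
    for i in range(len(words) - 1):
        if words[i] == PA_16 and words[i + 1] == PB_16:
            return i
    return -1


stream = [0x0000, PA_16, PB_16, 0x0000]   # sync words plus dummy filler
print(find_sync(stream))                    # 1
print(find_sync(apply_gain(stream, 0.9)))   # -1: sync pattern destroyed
```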

AUDIO CONTROL PACKET

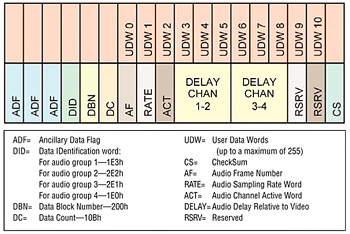

As in SD embedded audio, the audio control packets provide information about audio frame numbers, sample rate, the amount of audio processing delay, and active audio channels.

According to SMPTE 299M, "an audio control packet shall be transmitted once per field in an interlaced system and once per frame in a progressive system in the horizontal ancillary data space of the second line after the switching point of the Y data stream." Like the audio data packets, the audio control packets are formatted per SMPTE 291M.
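The timing rule quoted above reduces to simple arithmetic. This sketch computes the control packet rate for a given frame rate and scan type, and the lines that carry the packet given the switching-point lines for the format. The switching lines are an input: the example values for 1080-line interlaced video (lines 7 and 569) are our understanding of SMPTE RP 168 and should be checked against that document. Function names are hypothetical.

```python
from fractions import Fraction


def control_packets_per_second(frame_rate: Fraction, interlaced: bool) -> Fraction:
    """One control packet per field (interlaced) or per frame (progressive)."""
    return frame_rate * 2 if interlaced else frame_rate


def control_packet_lines(switching_lines: list[int]) -> list[int]:
    """The control packet rides in the second line after each switching point."""
    return [line + 2 for line in switching_lines]


# Example: 1080i at 30000/1001 frames/s; switching lines 7 and 569 are assumed.
rate = control_packets_per_second(Fraction(30000, 1001), interlaced=True)
lines = control_packet_lines([7, 569])
print(rate, lines)  # 60000/1001 [9, 571]
```

The 60000/1001 result is the familiar 59.94 packets per second for 1080i in 59.94 Hz-family plants.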

Fig. 2 illustrates the structure of an audio control packet. The functions of these parameters are similar to those for SD, and were discussed previously. Details on bit-level functions and parameter assignments are found in SMPTE 299M.

Fig. 2: Structure of an audio control packet. Reference: SMPTE 299M

EMBEDDED AUDIO HINTS AND TIPS

Embedded audio has in many ways made facility design and installation easier and less costly, especially for network transmission or station master control.

One coax cable carries one video channel with all of its associated audio channels. Where the audio doesn't need to be broken out, this means one set of jackfields, one routing switcher level, far less cable than if each audio channel were wired separately, and lower installation labor and cable costs.

Many routing and master control switchers, video servers and tape machines can accept embedded audio as is. On some equipment, embedded audio support may be available only as an option.

For video processing equipment that accepts embedded audio, what does it do with the audio before, during, and after processing? Are video processing delays considered when audio is re-embedded?
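When a video processor adds delay, the audio path needs a matching delay. A quick way to size it is to convert the video delay in frames into 48 kHz audio samples. The sketch uses exact fractions because 59.94 Hz-family frame rates don't divide 48 kHz evenly; the function name is hypothetical.

```python
from fractions import Fraction

AUDIO_RATE_HZ = 48000  # embedded audio sample rate assumed here


def video_delay_in_samples(frames: int, frame_rate: Fraction) -> Fraction:
    """Audio samples spanned by a video delay of `frames` frames."""
    return Fraction(frames) * AUDIO_RATE_HZ / frame_rate


delay = video_delay_in_samples(2, Fraction(30000, 1001))
print(delay, round(delay))  # 16016/5 3203
```

A two-frame delay at 30000/1001 frames per second works out to 3203.2 samples, so 3203 samples is the nearest whole-sample match. Five frames come to exactly 8008 samples, which is why 48 kHz audio in these systems repeats its sample pattern over a five-frame sequence.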

For equipment that doesn't accept embedded audio, check what it does with the HANC data. Does it strip the HANC data when processing the video? If so, the embedded audio is lost.

Video and audio control rooms typically have to handle both embedded audio (routing switcher feeds) and audio-only sources (microphone feeds, local audio servers). It's a good idea to analyze all inputs and outputs to see whether embedded audio makes sense within a studio environment.

The cabling and installation advantages of embedded audio fade away when racks of de-embedders and embedders with their associated jackfields need to be installed. Watch out for audio-to-video delays when any kind of processing is inserted into either path.

Mary C. Gruszka is a systems design engineer, project manager, consultant and writer based in the New York metro area. She can be reached via TV Technology.
