Breaking Down the File System, Pt. 1



Karl Paulsen

File systems, viewed broadly, have evolved continually in both function and scale over the past several years. Once confined to disk drives and the software applications that stored and retrieved data on those drives, file-system architectures now come in many variations of type, use and function.

Enormous growth in unstructured data has driven file system solution providers to up the ante on what a file system must do and how it does it. The shift to an all-file-based medium has produced new and useful adaptations, with growth both horizontally, as in “scale out” (bandwidth), and vertically, as in “scale up” (capacity).

A decade ago, an entire file system might hold 20,000 to 50,000 files occupying a few hundred megabytes to a few gigabytes. Today, modern file systems must handle many millions to billions of files and may scale from less than a petabyte to hundreds of petabytes of storage capacity.

Accessing these files and manipulating the file sets continue to be daunting tasks regardless of the type of file system. So-called “enterprise-class file systems” have become hugely dependent on tracking and processing the metadata associated with the file system, which describes the data structures on the disk arrays and across the network.

LOCAL, SHARED AND NETWORK

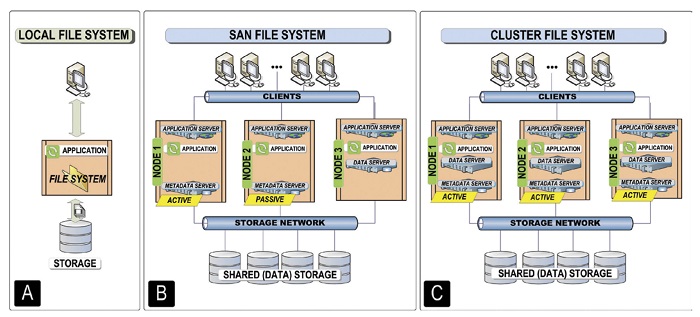

Fig. 1: Typical file system categories

File systems, in general, fall into three categories: the local file system, the shared file system and the network file system (Fig. 1). The local file system is the simplest and the most familiar to most users. The shared file system has consistently been subcategorized as either the “SAN File System” or the “Cluster File System.”


Local file systems are usually individual (and typically separate) file sets that are colocated on the server, each independently associated with an application that attaches to a single storage entity (Fig. 2A). A drawback of isolated local file systems is that data cannot be shared between one file system and its application and another, essentially making each local file system an island.

With that said, there are two fundamental methods available when considering data sharing: Scaling up, a capacity increase in the application server and the storage system; and scaling out, the incremental increases in individual file servers or applications that share data on a common pool addressed through a “storage network.”
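The difference between the two methods can be sketched with a toy model. This is only an illustration under simplifying assumptions: the `Deployment` class, its field names and the numbers in the usage note are hypothetical, and real systems do not scale this linearly.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    """Toy model: one common storage pool fronted by one or more file servers."""
    pool_capacity_tb: float
    server_bandwidth_gbps: list = field(default_factory=list)

    def scale_up(self, extra_capacity_tb: float) -> None:
        # Scaling up: add capacity; the number of servers stays the same.
        self.pool_capacity_tb += extra_capacity_tb

    def scale_out(self, new_server_gbps: float) -> None:
        # Scaling out: add another server that shares the common pool.
        self.server_bandwidth_gbps.append(new_server_gbps)

    @property
    def total_bandwidth_gbps(self) -> float:
        # Assumption: aggregate bandwidth is the simple sum of the servers.
        return sum(self.server_bandwidth_gbps)


site = Deployment(pool_capacity_tb=100.0, server_bandwidth_gbps=[10.0])
site.scale_up(50.0)    # more capacity, same bandwidth
site.scale_out(10.0)   # more bandwidth, same capacity
```

After both calls, the hypothetical `site` has grown in capacity from the scale-up step and in aggregate bandwidth from the scale-out step, and neither step affected the other dimension.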

GLOBAL BY DESIGN

Shared file systems are considered global file systems by design. Data access is logically separate and physically separated. Shared file systems utilize a metadata server that intervenes between the client (the workstation, PC or server) and the access requests (for data storage, for metadata delivery and for data access). A shared file system may be either asymmetric (as in a SAN file system) or symmetric (as in a cluster file system).

The SAN file systems’ asymmetric approach will use a collection of application and metadata/data server sets—arranged into nodes (Fig. 2B). Nodes consist of application servers, the application itself (it may be a Web server) and various flavors of data and metadata servers. Clients connect directly to the nodes via a sub-network. Each node collects and distributes computational data through a storage network that shares a common pool of storage, i.e., the “shared data.”

In the SAN file system, typically one node will have a single metadata server that is active. Another node may have a secondary metadata server that is passive. The remaining nodes include data servers that attach to the storage network, which is made up of the fabric (e.g., a Fibre Channel or Ethernet switch) and the shared data storage arrays (the “SAN”).
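The asymmetric arrangement can be sketched as follows. This is a minimal in-memory model, not any vendor's implementation: the class names, the block size and the choice to mirror every metadata update to the passive server are all assumptions made for illustration.

```python
class MetadataServer:
    def __init__(self, name):
        self.name = name
        self.alive = True
        self.table = {}  # path -> list of block ids in the shared pool


class SanFileSystem:
    """Toy asymmetric file system: one active and one passive metadata server."""

    def __init__(self):
        self.pool = {}  # block id -> bytes; stands in for the shared arrays
        self.active = MetadataServer("mds-active")
        self.passive = MetadataServer("mds-passive")
        self._next_block = 0

    def _metadata_server(self):
        # If the active server is down, promote the passive one.
        if not self.active.alive:
            self.active, self.passive = self.passive, self.active
        if not self.active.alive:
            raise RuntimeError("no metadata server available")
        return self.active

    def write(self, path, data, block_size=4):
        mds = self._metadata_server()
        blocks = []
        for start in range(0, len(data), block_size):
            block_id = self._next_block
            self._next_block += 1
            self.pool[block_id] = data[start:start + block_size]
            blocks.append(block_id)
        mds.table[path] = blocks
        # Assumption: the standby is kept in sync on every write.
        self.passive.table[path] = list(blocks)

    def read(self, path):
        # Metadata comes from the metadata server; data comes from the pool.
        blocks = self._metadata_server().table[path]
        return b"".join(self.pool[b] for b in blocks)


san = SanFileSystem()
san.write("/media/clip.mxf", b"frame-data")
san.active.alive = False                 # simulate losing the active server
recovered = san.read("/media/clip.mxf")  # served via the promoted standby
```

The sketch also shows why the metadata server is the scaling bottleneck in this design: every read and write passes through the single active server before touching the shared pool.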

Nodes can be geographically distributed, with the internodal separation limited by the capacity and structure of the SAN itself. A SAN file system is considered homogeneous; that is, the network is made up of computers using similar configurations and protocols (e.g., Windows or Mac OS, but not necessarily both). The SAN file system’s scaling ability is limited by its metadata server capacity.

The cluster, another form of a shared/global file system, is symmetric and appears similar to the SAN file system except that each node is a complete, nearly identical structure, which includes the application server, the application(s), an active metadata server and a data server (Fig. 2C).

Fig. 2: Peripheral system components and their respective file system architectures

Each node is connected, as in the SAN file system, to a storage network fabric and a shared data pool or array. By including a metadata server per node, performance efficiencies are obtained as each node can work independently or groups of nodes can communicate in parallel for faster throughput or load balancing. Cluster file systems are typically homogeneous, with their scaling constrained by their internal communications.
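One way to picture the symmetric layout is a toy cluster in which every node runs its own metadata server and the metadata load is spread across nodes. The hash-based partitioning used here is an assumption chosen for simplicity, not something the cluster file systems described above are known to use, and all class and node names are hypothetical.

```python
import hashlib


class ClusterNode:
    def __init__(self, name):
        self.name = name
        self.metadata = {}  # path -> block ids, held by this node's metadata server


class Cluster:
    """Toy symmetric file system: every node has an active metadata server."""

    def __init__(self, node_names):
        self.nodes = [ClusterNode(n) for n in node_names]
        self.pool = {}  # block id -> bytes; the shared data pool

    def owner(self, path):
        # A stable hash spreads paths across nodes (illustrative assumption).
        digest = hashlib.sha256(path.encode("utf-8")).digest()
        return self.nodes[int.from_bytes(digest[:8], "big") % len(self.nodes)]

    def write(self, path, data):
        block_id = len(self.pool)
        self.pool[block_id] = data
        self.owner(path).metadata[path] = [block_id]

    def read(self, path):
        node = self.owner(path)
        return b"".join(self.pool[b] for b in node.metadata[path])


cluster = Cluster(["node-a", "node-b", "node-c"])
for i in range(30):
    cluster.write(f"/media/clip{i}.mxf", f"data{i}".encode())
files_per_node = {n.name: len(n.metadata) for n in cluster.nodes}
```

Because no single node holds all of the metadata, no single metadata server limits scaling; the cost moves to the coordination between nodes, which matches the constraint on internal communications noted above.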

NETWORK AND DISTRIBUTED FILE SYSTEMS

The network file system can be narrow or wide in perspective, operating as a proxy file system over a LAN or WAN. This file system enables the sharing of files located on a file server, which uses a network protocol (e.g., NFS, FTP, CIFS) to support one to many clients (i.e., workstations, PCs, laptops).

The network file system consists of two or more sets of application and file system clients that connect to the file system service through the network protocol components.

There are numerous renditions of network file systems, spanning wide areas, utilizing LANs and employing protocol-specific optimization based around the file system type or protocol. Such wide area file systems can be tuned for application-specific purposes using storage and network algorithms, file-awareness differencing and many more data compression methodologies.

Distributed file systems (DFS) are yet another form of the network file system. DFS methodologies are used for managing the file infrastructure of a computing, network server or storage platform. Such network file systems can be distributed file systems or distributed parallel file systems.

In our next installment, we will look further into DFS as well as other aggregation approaches that can be used in media-centric computer/servers and storage architectures.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about storage topics in his most recent book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.