Transforming Live Streaming With a New Generation of Formats and Encoders

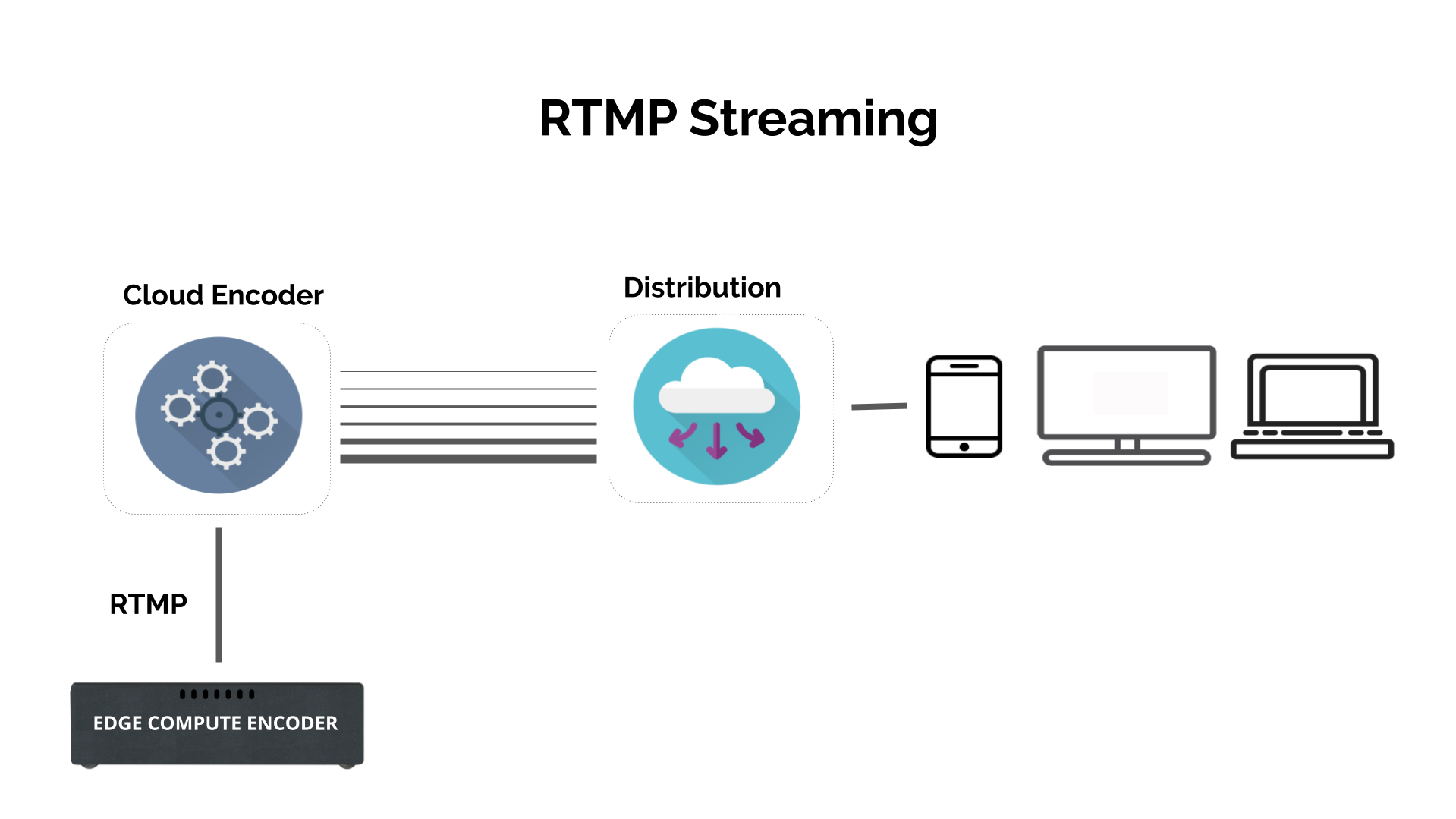

Go back a couple of decades in a time machine, and the Real-Time Messaging Protocol (RTMP) was the protocol for streaming media. Video encoders pushed out RTMP for delivery to the cloud and, in turn, to a player. Everyone relied on RTMP, and the workflow was simple, without complications such as cloud transcoding or repackaging.

Fast-forward to 2019 and RTMP is still widely used, although it doesn’t support High Efficiency Video Coding (HEVC, or H.265) and no longer has native support within mainstream player applications and operating systems. The industry continues to rely on RTMP-based workflows with streams pushed up to the cloud and then transcoded, transrated and repackaged before they are ready for distribution in the formats that today’s viewing devices support.

BEST OF BOTH WORLDS

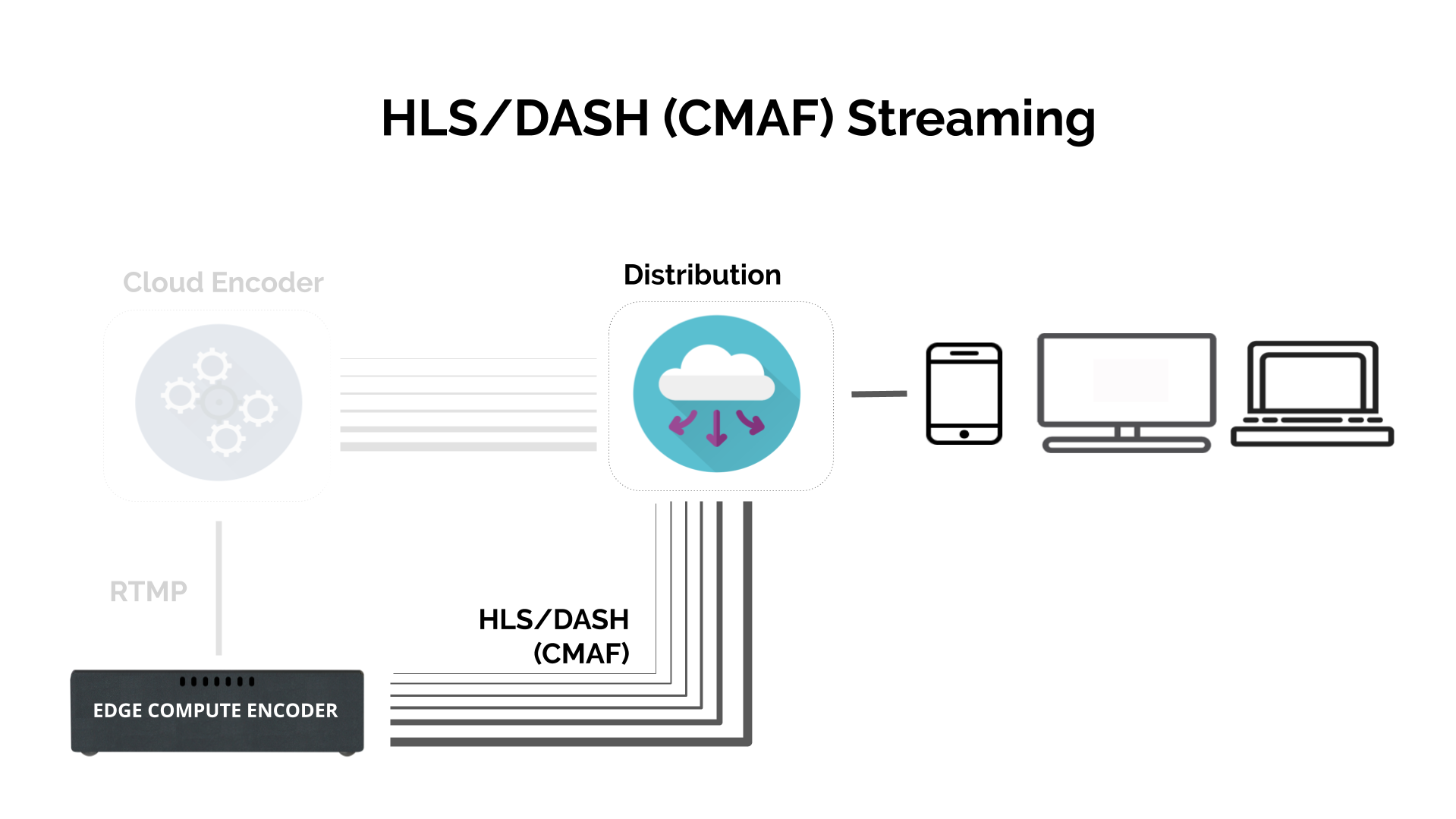

However, a new encoding and streaming model is gaining ground and earning support as a smarter, more cost-effective means of modern media delivery. The combination of HTTP Live Streaming (HLS) and Dynamic Adaptive Streaming over HTTP (DASH) with new chip-level system hardware, along with the concept of edge compute encoding, is making the entire media delivery process faster and more efficient. In addition, combining HLS and DASH with the Common Media Application Format (CMAF) enables low latency.

As a result, video delivery over the public internet is being achieved with latency levels low enough to enable a new set of applications. One example is greater interactivity, such as fast channel changing in emerging countries where cable is not feasible. Other applications include live auctions and sports gamification, as well as making the streaming of “live broadcasts” actually live.

While neither HLS nor DASH is new, what is new is CMAF and the development of cost-effective live streaming video encoders that natively support HLS, DASH and CMAF. This advance stems from new system-on-chip (SoC) semiconductor technology, which lets video encoders use more modern, purpose-built hardware rather than general-purpose FPGAs and CPUs.

Built on the Qualcomm Snapdragon SoC, new edge compute encoding devices boast significantly greater processing power than their legacy counterparts. With this additional horsepower, edge compute encoding devices can address two critical challenges in streaming: adapting smoothly to changing network conditions and delivering high-quality video over relatively poor internet connections.


Content providers use adaptive bitrate (ABR) streaming to provide every viewer with the best possible image quality. With anywhere from four to eight versions of content—all with different resolutions and bit rates—available in the cloud, it’s possible to deliver the version that takes best advantage of available bandwidth for the target device. One version might be ideal for a small cellphone picture; one might be intended for an iPad, another for a PC or a set-top box.
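To make the ABR idea concrete, here is a minimal Python sketch of how a player might choose among the available versions. The ladder values, the `pick_rendition` name and the 0.8 safety margin are all assumptions made for illustration; they do not come from any particular player or from the workflow described in this article.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Rendition:
    name: str
    width: int
    height: int
    bitrate_kbps: int


# Hypothetical six-step ladder; every number here is illustrative only.
LADDER = [
    Rendition("240p", 426, 240, 400),
    Rendition("360p", 640, 360, 800),
    Rendition("480p", 854, 480, 1400),
    Rendition("720p", 1280, 720, 2800),
    Rendition("1080p", 1920, 1080, 5000),
    Rendition("2160p", 3840, 2160, 12000),
]


def pick_rendition(
    ladder: Sequence[Rendition],
    measured_kbps: float,
    max_height: Optional[int] = None,
    safety: float = 0.8,
) -> Rendition:
    """Return the highest-bitrate rendition that fits the bandwidth budget.

    max_height caps the choice for small screens; safety leaves headroom
    so a brief dip in throughput does not immediately stall playback.
    """
    candidates = [r for r in ladder if max_height is None or r.height <= max_height]
    if not candidates:
        raise ValueError("no rendition satisfies the height cap")
    budget = measured_kbps * safety
    fitting = [r for r in candidates if r.bitrate_kbps <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_kbps)
    # Nothing fits: fall back to the lowest bitrate rather than fail.
    return min(candidates, key=lambda r: r.bitrate_kbps)
```

A phone on a fast connection could call `pick_rendition(LADDER, 50000, max_height=720)` and still receive the 720p version, while a viewer measuring only 100 kbps falls back to the lowest step instead of getting no video at all.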

BRINGING LIVE STREAMING TO INTERNET-CHALLENGED AREAS

The limitations of previous-generation encoding devices made it necessary to create all bit-rate variants of that initial piece of content in the cloud. Why? Because to take on the processing required to create multiple versions at the point of production, conventional encoders would need to be equipped with a bigger CPU or with a lot more FPGAs, and the costs for those add up. As mentioned, in a traditional streaming scenario, higher-resolution content is pushed up to the cloud at a particular data rate (often about 10 Mbps), and then cloud processing outputs versions with different resolutions and bit rates.

In the new edge compute encoding model, the SoC-based device creates multiple versions of content and delivers them to the cloud for distribution. The latest generation of SoC-powered edge compute encoders can create six instances of video at different bit rates and send them all to the cloud, reducing both latency and the cost of cloud processing while maintaining image quality. And new SoC-based devices can create both HLS and DASH outputs, operating within CMAF, carrying HEVC to the cloud in a direct workflow that allows users to enjoy the bandwidth savings—upwards of 40% depending on the content—that HEVC affords.
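As a rough illustration of what "six instances delivered as HLS" can look like on the wire, the Python sketch below builds an HLS master playlist that lists six variants. The file names, resolutions, bit rates and the `hvc1.1.6.L93.B0` codec string are placeholders chosen for the example, not the output of any specific encoder; a real device would advertise the profile and level it actually produces for each rendition.

```python
from dataclasses import dataclass
from typing import Iterable

# Placeholder HEVC codec string (assumption); real streams must report
# the actual profile/level of each rendition.
HEVC_CODECS = "hvc1.1.6.L93.B0"


@dataclass(frozen=True)
class Variant:
    uri: str        # media playlist for this rendition (hypothetical name)
    width: int
    height: int
    peak_bps: int   # peak bit rate in bits per second


def master_playlist(variants: Iterable[Variant], codecs: str = HEVC_CODECS) -> str:
    """Build an HLS master playlist listing one entry per variant."""
    lines = ["#EXTM3U", "#EXT-X-VERSION:7", "#EXT-X-INDEPENDENT-SEGMENTS"]
    # List variants from lowest to highest bit rate for readability.
    for v in sorted(variants, key=lambda v: v.peak_bps):
        lines.append(
            f"#EXT-X-STREAM-INF:BANDWIDTH={v.peak_bps},"
            f'RESOLUTION={v.width}x{v.height},CODECS="{codecs}"'
        )
        lines.append(v.uri)
    return "\n".join(lines) + "\n"


# Illustrative six-rendition ladder created at the edge (values are assumptions).
SIX_RENDITIONS = [
    Variant("v240/index.m3u8", 426, 240, 400_000),
    Variant("v360/index.m3u8", 640, 360, 800_000),
    Variant("v480/index.m3u8", 854, 480, 1_200_000),
    Variant("v540/index.m3u8", 960, 540, 1_800_000),
    Variant("v720/index.m3u8", 1280, 720, 2_500_000),
    Variant("v1080/index.m3u8", 1920, 1080, 4_500_000),
]

if __name__ == "__main__":
    print(master_playlist(SIX_RENDITIONS), end="")
```

In this model the cloud only has to store and serve the playlist and the segments it points to; the per-rendition encoding work has already been done on the device.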

Thanks to the high compression and high quality of HEVC, 4K video can be encoded and sent up to the cloud, thereby boosting the video quality offered to customers. While HEVC enables 4K video over the public internet, consider the advantages of HEVC for a 720p signal. Maintaining outstanding quality of the 720p signal at less than 1 Mbps is now possible, opening internet-challenged areas for live streaming. Even commodity internet routing and switching gear available at consumer retail stores can be used to produce best-in-class video. This is the democratization of technology through the use of today’s standards.
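The sub-1 Mbps figure can be sanity-checked with simple arithmetic. The Python sketch below applies the roughly 40% HEVC saving cited earlier to a hypothetical H.264 bit rate; the 1,500 kbps starting point is an assumption chosen for illustration, and actual savings depend on the content.

```python
def hevc_bitrate_kbps(h264_kbps: int, savings_pct: int = 40) -> int:
    """Estimate the HEVC bit rate for comparable quality.

    savings_pct is the percentage saved relative to H.264; the 40%
    default mirrors the article's figure and is content dependent.
    Integer math avoids floating-point rounding surprises.
    """
    if not 0 <= savings_pct < 100:
        raise ValueError("savings_pct must be in [0, 100)")
    return h264_kbps * (100 - savings_pct) // 100


# Hypothetical 720p H.264 stream at 1,500 kbps (assumed, not measured).
estimate = hevc_bitrate_kbps(1500)
print(f"Estimated HEVC bit rate: {estimate} kbps")
```

Under those assumptions the estimate lands at 900 kbps, which is consistent with a 720p signal fitting into less than 1 Mbps.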

The application of SoC-based edge compute encoding to streaming is transforming media-delivery workflows and the cost structures associated with them. Even more exciting is the prospect of the new applications that edge compute encoding can enable and engender. If anyone can take a camera, plug it into an encoding device and stream video worldwide in 3 seconds or less, what happens then? The possibilities are limitless, and the results will be amazing.

Todd Erdley is the CEO of Videon.