Three sensors or one

The DP, cameraman and videographer have never had a wider choice of cameras than in the last few years. Cameras essentially fall into two groups: the television camera, with lineage back to the tube camera, and the digital cinematography camera, with its roots in film, stills and motion picture photography.

When color television was first developed, one proposal was to use sequential RGB frames and a spinning filter wheel. Color film pioneers, including Technicolor, had used beam splitters to separate light into color components for capture on monochrome film stock. Sequential capture had unacceptable color-related motion artifacts, and the beam-splitter concept became the core of future television cameras. As tubes gave way to solid-state sensors — CCD and now CMOS — the beam-splitting optical block has remained a key component in the camera.

Gearhouse Broadcast used Hitachi SK-HD1200 and SK-HD1500 supermotion cameras to cover ATP World Tour tennis.

When Kodak scientists developed solid-state color imaging, the approach they took was to use a single sensor with a superimposed color filter array (CFA) to derive the color information. The single sensor could be placed in the film plane, allowing existing film lenses to be used with a digital capture device.

Although early digital cinema production used modified television cameras, the single sensor with a CFA was soon adopted, allowing DPs to use their much-loved 35mm film lenses. The Super 35-sized sensors also delivered the shallow depth of field, an essential part of the “film look.”

The single-sensor camera has become popular for shooting all manner of program genres and for the production of commercials. However, the traditional television camera, typified by a beam splitter with three 2/3in sensors, remains the mainstay of studio and remote production, with smaller 1/2in and 1/3in sensors being used where a smaller, lighter camera is needed.

For everything from newsgathering to entertainment production, the conventional camera has many advantages over the single sensor with a CFA.

Three-sensor cameras

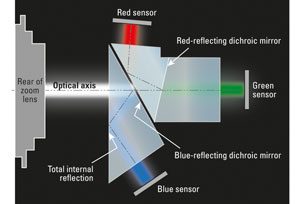

At the heart of the camera is the beam splitter. The typical design uses dichroic filters to split light into short (blue), mid (green) and long (red) wavelengths. Dichroic filters use thin-film coatings on glass (the prism) and, through optical interference, reflect or pass light depending on the wavelength. The coatings use many layers of different refractive indices to tune the bandpass characteristics of the filter. (See Figure 1.)

Figure 1. The main components of a beam-splitting prism in a three-sensor camera

The individual sensors are bonded to the prism after careful positioning to ensure the sensors are centered at the optical axes and that the photo-sites align.

The beam splitter has low absorption of light, so the majority of the incident light reaches the sensors. For the CFA sensor, half the green light and three-quarters of the blue and red light are lost in the absorption filters in the Bayer pattern. The result is that, for a given sensor size, the beam-splitter design is inherently more sensitive.
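The loss figures quoted above follow from the share of photosites that carry each filter color. A minimal sketch of that arithmetic, assuming an ideal 2x2 Bayer tile (one red, two green, one blue photosite) and a lossless prism; the names `BAYER_SHARE`, `cfa_loss` and `prism_loss` are illustrative and not taken from any camera specification:

```python
from fractions import Fraction

# Assumed share of photosites under each filter color in one 2x2 Bayer tile.
BAYER_SHARE = {
    "red": Fraction(1, 4),
    "green": Fraction(2, 4),
    "blue": Fraction(1, 4),
}


def cfa_loss(share):
    """Fraction of each color band absorbed before it reaches a matching photosite."""
    return {color: 1 - portion for color, portion in share.items()}


def prism_loss(transmission=Fraction(1)):
    """Idealized beam splitter: each band is routed to its own full-area sensor.

    The default transmission of 1 is an assumption; real prisms lose a little light.
    """
    return {color: 1 - transmission for color in BAYER_SHARE}


for color, lost in cfa_loss(BAYER_SHARE).items():
    print(f"Bayer CFA, {color}: {lost} of the light is lost")

for color, lost in prism_loss().items():
    print(f"Ideal prism, {color}: {lost} of the light is lost")
```

Real filters and prisms are not ideal, so these figures are upper-level approximations rather than measured sensitivities.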

Single-sensor cameras

Early single-tube consumer cameras used methods such as colored stripes to derive color information but suffered from a large difference between horizontal and vertical spatial resolution, and lacked the necessary performance for professional applications.

The signature patent in the development of single-sensor imaging was from Bryce E. Bayer, and assigned to Kodak. This detailed an RGBG CFA using transmissive filters superimposed in registration on the sensor matrix. The patent describes luminance sensor elements (green) alternating with chrominance sensor elements, such that every other photosite senses luminance. This suits the sensitivity of the retina, and is much like the NTSC and PAL color systems, where the color information is subsampled. Bayer’s patent details two chrominance channels: red and blue.

The output from the sensor is separated into its R, G and B samples, and then a demosaicing algorithm is applied to reconstruct an R, G and B value for each pixel. This process does lead to artifacts, especially in the more sparsely sampled red and blue channels, that are not found with the three-sensor cameras. Typical artifacts include aliasing in fine red and blue detail.
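The reconstruction step can be sketched with simple bilinear interpolation. This is a deliberately basic illustration, not the algorithm used in any particular camera; it assumes a tile laid out as R G over G B, an image of at least 2x2 pixels, and the hypothetical helper names `bayer_color`, `mosaic` and `demosaic`:

```python
def bayer_color(y, x):
    """Filter color over photosite (y, x), assuming an R G / G B tile."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def mosaic(rgb):
    """Sample a full RGB image (rows of (r, g, b) tuples) through the CFA."""
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_color(y, x)]] for x, pixel in enumerate(row)]
        for y, row in enumerate(rgb)
    ]


def demosaic(raw):
    """Rebuild (r, g, b) per pixel from the single-value-per-site raw image.

    The measured color is kept as is; each missing color is the average of
    the same-color photosites in the surrounding 3x3 window (bilinear).
    """
    height, width = len(raw), len(raw[0])
    out = []
    for y in range(height):
        row = []
        for x in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for ny in range(max(0, y - 1), min(height, y + 2)):
                for nx in range(max(0, x - 1), min(width, x + 2)):
                    color = bayer_color(ny, nx)
                    sums[color] += raw[ny][nx]
                    counts[color] += 1
            own = bayer_color(y, x)
            sums[own], counts[own] = raw[y][x], 1  # keep the measured sample
            row.append(tuple(sums[c] / counts[c] for c in "RGB"))
        out.append(row)
    return out


# One-pixel-wide red stripes: red is 255 in even columns and 0 in odd columns.
stripes = [[(255 if x % 2 == 0 else 0, 0, 0) for x in range(4)] for y in range(4)]
rebuilt = demosaic(mosaic(stripes))
print([round(pixel[0]) for pixel in rebuilt[0]])  # [255, 255, 255, 255]
```

With this layout the red photosites sit only on even columns, so the stripes are sampled as solid red and the fine detail disappears. That is the kind of red and blue aliasing described above; a three-sensor camera samples red at every photosite and would not lose the pattern in this way.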

The regular pattern of the sensor inevitably leads to spatial aliasing, but this can be reduced with the use of an optical low-pass filter (OLPF) in front of the sensor. With a three-sensor camera, the design of the OLPF is matched to the resolution of the sensor. However, with a Bayer sensor, the photosite density for red and blue is half that of the green photosites. The effective fill factor is also different, so the design of the OLPF is more of a compromise with the single-sensor camera.

The Bayer RGBG layout is just one of several alternative CFA schemes that are used in cameras. One RED Digital Cinema camera even offers a single sensor without a CFA for the cinematographer who wants to shoot in black and white.

Pros and cons

The 2/3in format is a mature, stable design, with the main development being the shift from CCD to CMOS technology and the addition of 3Gb/s 1080p50 outputs. The format, along with the 1/2in and 1/3in versions, provides broadcasters with cameras with the performance demanded for HD and the versatility of ENG through to studio cameras. There is a wide range of field and box lenses, with the apertures and zoom ranges needed for sport, through to the lightweight lenses for shoulder cameras.

For some genres, especially drama and episodic production, the Super 35 PL-mount lenses provide a filmic look, partially stemming from the shallower depth of field with the larger sensor. The larger image format is more suited to fixed-focal-length prime lenses, and in this format, zooms are limited in their ranges, typically 3:1.

Sensors

The CCD was the original technology for solid-state sensors in television cameras. For some years now, DSLRs and digital cinema cameras have used CMOS technology for their single sensors. Continuing research and development has raised the performance of CMOS to the point where it is starting to be used in regular 2/3in broadcast cameras.

Facilities company DutchView uses the Grass Valley LDX camera on a multicamera studio production.

CMOS has advantages, lower power consumption being one. In addition, CMOS does not suffer from the vertical smearing of CCDs.

Proponents of the CCD imager point to the “Jello” effect exhibited by the rolling shutter of most CMOS sensors. However, it is perfectly possible to design CMOS sensors with a global shutter, which eliminates the effect. Global-shutter designs are more complex, with extra transistors per photosite. The real estate taken by the additional transistors lowers the sensitivity of the sensor, but advances in semiconductor manufacturing allow the transistors to be made smaller, raising the fill factor of the active sensor to that of earlier rolling-shutter CMOS designs. With Super 35 sensors, this is even less of an issue than with 2/3in sensors.

With any solid-state sensor, the designers try to maximize the active light gathering area (the fill factor) to maximize signal-to-noise ratio. They will also endeavor to improve highlight handling and maximize dynamic range. The larger Super 35 sensors inherently gather more light, so they will have less noise.

Choosing a camera

Broadcasters have many aspects to consider when choosing a camera to purchase. Cost is obviously important and must be balanced against features, performance and other issues. Many broadcasters have favored suppliers with long-standing relationships based on support and service from the vendor.

The camera’s features are important. Does it have all the facilities that the camera operator needs? These include aspects such as the viewfinder, talkback, menu structures and position of controls, and many other facilities that make the operation of the camera suited to fast-paced live production.

For the director, performance matters. Is there noise in the blacks? How good is the highlight handling? What is the dynamic range? These factors are especially important for field production, where the DP does not have ultimate control over light and contrast ratios. Performance improves year on year, and what was acceptable in a camera five years ago may not cut it today. For premier productions, directors will expect the latest camera to deliver the best possible pictures. Much of the performance is ultimately limited by the sensor, and with constant improvements in sensor design, the useful life of cameras is becoming more limited.

A glance at a camera brochure yields only a brief specification of the performance of the camera. There are headline parameters, like limiting resolution, but the only sensible way to establish performance and compare cameras is through thorough testing. Paper specifications can be misleading, or even meaningless.

To compare cameras, test them with resolution charts to establish the modulation transfer function of the camera along with its lens. For dynamic range and noise, practical shooting under typical lighting conditions will show the differences between cameras, and hopefully the improvements in the latest models.

Summary

As camera manufacturers move to higher resolutions, questions arise. Will 4K cameras be developed in the 2/3in format? Will they be sensitive enough when the pixels are one-quarter the size of HD pixels? Ultimately, resolution is limited by diffraction in the lens, as seen with the 1/4in sensors in some consumer HD cameras. Many manufacturers are now making 1080p50/60 cameras, dubbed 3G, but there are few current examples of production in 1080p50. 1080i and 720p50 are favored for OB and studio production, and 1080p25 is favored for the filmic look. There may be 3G productions made with the future in mind, as 1080p50 upsamples to 4K with fewer artifacts than 1080i. However, it is already possible to shoot in 4K with digital cinema cameras if future-proofing is an aim, albeit at higher costs for storage and processing.
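The pixel-size and diffraction questions can be put into rough numbers. The sketch below assumes an active width of about 9.59mm for a 16:9 2/3in imager, green light at 550nm and an aperture of f/4; these figures and the function names are illustrative assumptions rather than values from the article, and the Airy disk diameter uses the standard 2.44 x wavelength x f-number approximation:

```python
SENSOR_WIDTH_MM = 9.59  # assumed active width of a 16:9 2/3in imager


def pixel_pitch_um(h_pixels, width_mm=SENSOR_WIDTH_MM):
    """Center-to-center photosite spacing in micrometers."""
    return width_mm * 1000.0 / h_pixels


def airy_disk_diameter_um(f_number, wavelength_nm=550.0):
    """Diameter of the Airy disk out to its first dark ring, in micrometers."""
    return 2.44 * (wavelength_nm / 1000.0) * f_number


hd_pitch = pixel_pitch_um(1920)
uhd_pitch = pixel_pitch_um(3840)
area_ratio = (uhd_pitch / hd_pitch) ** 2
spot = airy_disk_diameter_um(4.0)

print(f"HD pitch:  {hd_pitch:.2f} um")
print(f"4K pitch:  {uhd_pitch:.2f} um")
print(f"4K photosite area relative to HD: {area_ratio:.2f}")
print(f"Airy disk at f/4: {spot:.2f} um")
```

Under these assumptions the 4K photosite has one-quarter the area of the HD photosite, and the diffraction spot at f/4 is already wider than two 4K photosites, which is why lens diffraction becomes the limiting factor as the pitch shrinks.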

The next round of cameras for studio and field production in UHD format (4K at 50fps or 60fps progressive) will need camera cables running at 12Gb/s, along with switchers and all the accompanying equipment to support live production. Early 4K equipment is using dual and quad 3Gb/s links as well as 6Gb/s SDI, which is already supported in silicon. 12Gb/s camera connections will be fiber, with copper only suitable for very short cable runs.
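The 3Gb/s and 12Gb/s figures can be checked with a short calculation. This sketch assumes 10-bit 4:2:2 sampling (20 bits per pixel on average) and total rasters, including blanking, of 2200 x 1125 for 1080p60 and 4400 x 2250 for 2160p60; the function name `serial_rate_gbps` is hypothetical:

```python
BITS_PER_PIXEL = 20  # assumed 10-bit 4:2:2: one luma word plus one shared chroma word


def serial_rate_gbps(samples_per_line, lines, fps, bits=BITS_PER_PIXEL):
    """Serial interface rate in Gb/s for a total raster that includes blanking."""
    return samples_per_line * lines * fps * bits / 1e9


hd_rate = serial_rate_gbps(2200, 1125, 60)   # 1080p60
uhd_rate = serial_rate_gbps(4400, 2250, 60)  # 2160p60

print(f"1080p60: {hd_rate:.2f} Gb/s")
print(f"2160p60: {uhd_rate:.2f} Gb/s")
print(f"3Gb/s links needed for 2160p60: {uhd_rate / hd_rate:.0f}")
```

The UHD raster carries four times the data of 1080p60, which is why early equipment can carry it over quad 3Gb/s links, and why a single-cable connection needs to run at roughly 12Gb/s.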

We are at a pause in camera development: HD has become very good, but it is early days for 4K television (as opposed to film) cameras. By NAB 2014, the way forward will be much clearer.

— David Austerberry, editor