Engineering trends in cameras and lenses

The camera industry is pursuing advanced — beyond HD — camera technology. These advances include both higher temporal resolution and higher spatial resolution. The latter, in turn, forces changes in the choice of a lens for use with an advanced camera.

Sensors and processing

The earliest of the recent advances in camera technology is the switch from CCD to CMOS sensors. One problem that CMOS banishes is vertical smear. Unfortunately, this step forward has come with a step backward. Rolling shutter artifacts appear whenever the camera moves or objects move within the frame. Slight random movements by a handheld camera can create a wobbly gelatin look that is particularly disturbing.

Because a CMOS sensor’s rows are processed (reset, integrated and output) in a sequence that occurs over time, a CMOS sensor exposes each frame in a top-to-bottom pattern. The row-exposure offset creates a rolling-shutter skew. (See Figure 1.) To date, the most common solution has been to read out multiple vertical slices of the image simultaneously. The more slices a chip can output, the faster a whole image is captured, and the fewer the rolling shutter artifacts.

Figure 1. Rolling shutter artifacts present with motion
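
To get a feel for the size of the row-exposure offset, the following Python sketch estimates how far a moving vertical edge is displaced between the first and last rows of a frame. It is a simplified model, not a description of any particular sensor: the function names, the 15-microsecond line time and the 2000-pixel-per-second subject speed are illustrative assumptions, and parallel slice readout is modeled simply as dividing the rows to be read by the number of slices.

```python
def readout_time_s(rows, line_time_s, slices=1):
    """Time to read a whole frame when `slices` groups of rows are read in parallel.

    Assumption: each slice holds an equal share of the rows and all slices
    are read at the same per-row rate.
    """
    rows_per_slice = -(-rows // slices)  # ceiling division
    return rows_per_slice * line_time_s


def skew_px(rows, line_time_s, speed_px_per_s, slices=1):
    """Horizontal offset of a moving vertical edge between first and last row read."""
    return speed_px_per_s * readout_time_s(rows, line_time_s, slices)


if __name__ == "__main__":
    # Hypothetical 1080-row sensor, 15 microseconds per row, subject moving 2000 px/s.
    for n in (1, 2, 4):
        print(f"{n} slice(s): skew of about {skew_px(1080, 15e-6, 2000, n):.1f} px")
```

With these assumed numbers, going from one slice to four cuts the skew to a quarter, which is the sense in which faster readout reduces the artifact without eliminating it.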

Most CMOS imagers today use active-pixel sensor (APS) technology that is implemented by three transistors. Each pixel has a reset transistor, an amplifier transistor and a row select transistor.

By adding a fourth transistor to each pixel’s circuitry, it is possible to capture an image with all sensor pixels simultaneously. All sensor photodiodes are reset at the same instant. By beginning image capture at the same moment in time, there is no row-exposure offset to cause rolling shutter. The fourth transistor holds a photodiode’s integration value until it can be read out. This global shutter design eliminates rolling shutter.

Frame rates and resolution

The multislice technology that enabled rapid sensor output, although not needed to reduce rolling shutter artifacts in a global shutter design, still serves a purpose in today’s cameras. Camera manufacturers can use fast sensor output to support higher frame rates. The obvious use is to provide the temporal resolution of 720p60 at a 1920 x 1080 or 3840 x 2160 spatial resolution.

Many consumer HD cameras already support 1080p60. As with 4K, there are no distribution systems that support 1080p60. However, when editing projects that employ motion effects, having twice as many full-resolution frames enables smoother effects. And 4K enables static and dynamic video crops.

Figure 2. JVC’s UHD GY-HMQ30 camcorder

Increasing camera resolution supports two options. First, many new cameras use only a single sensor. Thus, some form of demosaicing is required to obtain RGB or Y’CbCr signals. A typical de-Bayer process yields a horizontal luminance resolution of approximately 78 percent of the sensor’s horizontal resolution. However, by employing a sensor with 2400 (or more) horizontal pixels, the potential horizontal luminance resolution after a de-Bayer will be 960TVL or 1080LW/ph.

These cameras are referred to as “2.5K” cameras. Potentially, they can provide the same horizontal luma resolution as a 2K-pixel three-chip camera. A single-chip camera can also provide equal chrominance resolution when recorded to a 4:2:2 format. However, the competence of the demosaicing process determines the amount of chroma artifacts captured. Chroma artifacts are not an issue with three-chip cameras.
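
The “2.5K” label follows from the 78 percent figure with a little arithmetic. The Python sketch below works it through; the function names are hypothetical, and the 0.78 factor is the approximate value quoted above, not a property of any specific demosaicing algorithm.

```python
import math

DEBAYER_LUMA_FACTOR = 0.78  # approximate figure quoted in the text


def luma_columns(sensor_columns, factor=DEBAYER_LUMA_FACTOR):
    """Estimated horizontal luminance resolution after a de-Bayer."""
    return sensor_columns * factor


def sensor_columns_needed(target_luma_columns, factor=DEBAYER_LUMA_FACTOR):
    """Smallest sensor width (in columns) whose de-Bayered luma reaches the target."""
    return math.ceil(target_luma_columns / factor)


if __name__ == "__main__":
    print(f"2400-column sensor: about {luma_columns(2400):.0f} luma columns")
    print(f"Columns needed for 1920: {sensor_columns_needed(1920)}")
```

Under this assumption, a 2400-column sensor lands slightly under the 1920 columns of an HD frame, and a little under 2500 columns reaches it, which is roughly 2.5K.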

When recording to a compressed HD format, horizontal resolution is real-time downsampled within a camera to 1920 columns. Cameras that capture RAW information record all pixels with the downsample, if desired, performed in post.

Second, 4K, and even 8K, camera resolution is possible. UHD (3840 x 2160) and 4K (4096 x 2160) cameras are coming to market. These cameras include JVC’s newly announced GY-HMQ30 (shown in Figure 2), which has a Nikon F-mount and records using 144Mb/s H.264, as well as RED Digital Cinema’s 6K EPIC DRAGON (RAW-only recording) and Blackmagic Design’s Production Camera 4K (RAW and log recording).

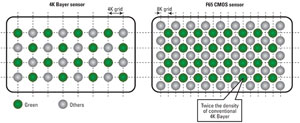

Figure 3. Sony F65 “True 4K” and 8K sensor

Sony’s F65 is capable of what it calls “True 4K” plus 8K capture using its non-Bayer 20-megapixel Super 35 chip. (See Figure 3.)

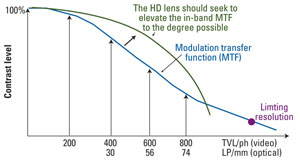

Higher camera resolution demands higher lens modulation transfer function (MTF). A lens’ MTF describes the relation between image contrast and spatial resolution. The higher the frequency, as represented by the X axis of Figure 4, at which roll-off begins, the more visible the fine detail that passes through a lens. Visibility is a function of contrast, which is represented by the Y axis. Figure 4 presents a sample MTF curve for an HD camera. A 4K camera requires at least twice the performance.

Figure 4. Example MTF curve for an HD camera
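
One way to read the “twice the performance” requirement is through the sensor’s Nyquist limit: resolving one line pair takes two pixel columns, so doubling the column count on the same sensor width doubles the spatial frequency the lens must deliver with usable contrast. The Python sketch below illustrates this; the function name and the 24mm sensor width are assumptions chosen for round numbers, not the specification of any camera mentioned here.

```python
def nyquist_lp_per_mm(columns, sensor_width_mm):
    """Highest spatial frequency (line pairs per mm) the pixel grid can sample.

    One line pair (a dark line plus a light line) needs at least two columns.
    """
    return columns / (2.0 * sensor_width_mm)


if __name__ == "__main__":
    width_mm = 24.0  # hypothetical sensor width, for illustration only
    hd = nyquist_lp_per_mm(1920, width_mm)
    uhd = nyquist_lp_per_mm(3840, width_mm)
    print(f"HD:  {hd:.0f} lp/mm")
    print(f"UHD: {uhd:.0f} lp/mm ({uhd / hd:.0f}x HD)")
```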

Lenses

The quest for high-resolution lenses has led to equipping these new cameras with cinema and photo lenses. The F65 can use Sony’s electrically coupled versions of Zeiss lenses. Other 4K cameras provide a PL-mount for cinema lenses. Other cameras support Canon EOS lenses or Micro Four Thirds (M43) lenses. EOS and M43 mounts can employ adaptors that enable use of F-, A- and E-mount lenses. (See the Crop factor sidebar at the bottom of this page.)

Modern lenses developed for still cameras do not have an aperture ring. Aperture control is via an electronic coupling. Thus, an adaptor must, itself, provide aperture control. This control will drive the aperture within the lens via a coupling pin. Obviously, the adaptor ring cannot have marked f-stops. Aperture is set by monitoring exposure on the camera’s LCD.

Photo lenses typically alter aperture size in steps rather than continuously. That means you will not be able to smoothly adjust the aperture while shooting.

The lens and camera engineering trends we are currently experiencing are likely to continue because the next technology target is 8K video capture and recording resolution.

SIDEBAR:

Crop factor

A photographic lens’ focal length (e.g., 50mm) is specified relative to the frame of 35mm still film, which measures 36mm x 24mm with a diagonal of 43.3mm. In the digital world, this is called a full frame. When a 50mm lens is mounted on a full-frame camera, it provides a field of view that is similar to that provided by our eyes.

When a 50mm lens is used with a camera that has a sensor smaller than 36mm x 24mm (for example, a 22.2mm x 14.8mm APS-C sensor, which has a diagonal of 26.7mm), only the central portion of a scene is captured. The resulting capture has an angle of view that is necessarily smaller. The scene looks as though it were shot with a longer lens on a 35mm camera. The ratio of the 43.3mm diagonal to the 26.7mm diagonal is called the crop factor. In this case, the crop factor is approximately 1.6. Therefore, a 50mm lens behaves like an 80mm lens when mounted on the smaller sensor.
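
The crop-factor arithmetic can be checked with a few lines of Python. This is a minimal sketch with hypothetical function names; it assumes the standard 36mm x 24mm full-frame dimensions, whose diagonal is the 43.3mm quoted above.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)  # assumed full-frame dimensions (diagonal about 43.3mm)


def diagonal_mm(width_mm, height_mm):
    """Length of the sensor diagonal."""
    return math.hypot(width_mm, height_mm)


def crop_factor(width_mm, height_mm):
    """Ratio of the full-frame diagonal to the given sensor's diagonal."""
    return diagonal_mm(*FULL_FRAME_MM) / diagonal_mm(width_mm, height_mm)


def equivalent_focal_length_mm(focal_length_mm, width_mm, height_mm):
    """Full-frame focal length that gives a similar angle of view."""
    return focal_length_mm * crop_factor(width_mm, height_mm)


if __name__ == "__main__":
    cf = crop_factor(22.2, 14.8)  # the APS-C example from the sidebar
    eq = equivalent_focal_length_mm(50, 22.2, 14.8)
    print(f"Crop factor: {cf:.2f}")
    print(f"50mm behaves like about {eq:.0f}mm")
```

The unrounded factor is slightly above 1.6, so the equivalent focal length comes out a millimeter or so above the rounded 80mm figure.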

—Steve Mullen is the owner of DVC. He can be reached via his website at http://home.mindspring.com/~d-v-c.