Anticipating Change: Automating Storage Tiering

Karl Paulsen

Your organization has just told you that a building renovation project starting in six months will require you to move all of your editing, storage and production systems to a temporary location during construction. Then you must move everything back when the rooms are ready.

Six weeks before this notice, you had started an upgrade that would add new capabilities to your current operations. Now you face a new problem: getting the new system up and running, only to tear it apart and move it to a temporary location.

Fortunately, you had anticipated that change might come, and in the planning phases of the upgrade you made some very important decisions, perhaps without realizing how important they would become. First, you decided to build out a tiered storage architecture for the future; second, you included an investment in a media asset management component that added an archive solution coupled to digital tape.

AN AUTOMATED PROCESS

Storage tiering is an important and evolving part of any storage architecture for today’s digital media environments. Yet storage tiering is nothing new; this column has discussed the concepts and methodologies of tiered storage for several years.

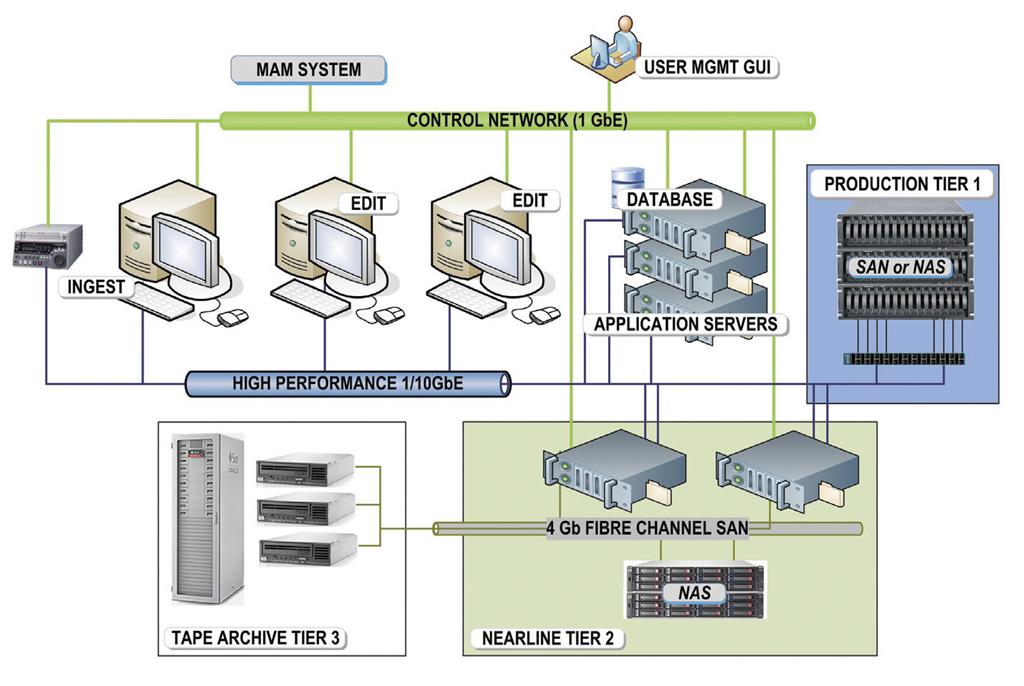

Fig 1: Typical three-tier, enterprise-level storage architecture with high-performance storage (tier 1), nearline (tier 2) and long-term LTO tape archive (tier 3). Some may place their tier 3 storage in the cloud if the need for accessibility is not great enough to warrant an in-house, highly accessible tape archive.

However, what is now changing in today’s digital environment is a shift from a largely semiautomatic, or even all-manual, process to one that, depending upon your current storage deployment, is automated and driven by advancing technologies: sub-LUN, block-based storage systems coupled to new drive technologies.

Out of necessity, storage systems are now required to ebb and flow based upon business needs and peripheral system demands. Understandably, we find that storage systems never seem to diminish in total capacity. They can, however, shrink in their local technical footprints based upon storage density increases, distribution of content to alternative sites or locations, or other physical parameters.


One of the factors affecting these physical requirements, the “technical footprint” if you will, is the growing use of solid-state drives (SSDs). SSDs are now deployed in many areas associated with the management of digital media, metadata and applications.

SSDs consume less space and power than spinning disks. They are faster, more reliable, last longer, require less cooling and can be used in physical environments where magnetic rotating media might not easily survive.

One application for SSDs that has grown out of both need and capability is the automated migration of data from one tier of a storage system to another. Once an almost entirely manual task, moving data from primary disk media (e.g., “the C drive”) to secondary disk (e.g., a portable backup drive) can now be accomplished entirely in software, and at the block level rather than the LUN level.

In a major digital media production environment, asset management systems are expected to sense, manage and move data from primary high-performance storage to less expensive secondary or tertiary storage, such as nearline (SATA disk) or tape media. This practice often fell under the term “hierarchical storage management” (HSM), which grew out of the world of transactional data processing: cash registers, ATMs, online purchasing and bank accounts.

In an HSM system, data that was “in process” would be held on high-availability storage. Once the transaction was completed and all the necessary links to the databases verified, the data would sit idle for minutes to hours. HSM would watch that data and then automatically move it to another storage tier for days to weeks, depending upon usage algorithms or policies.
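As a rough illustration of the kind of usage policy described above, the following Python sketch assigns each file to a tier based on how long it has sat idle. The tier names and the one-day and thirty-day thresholds are hypothetical values chosen for the example, not figures from any particular HSM product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical idle-time thresholds for this sketch only; real HSM
# products expose their own, usually configurable, policies.
NEARLINE_AFTER = timedelta(days=1)
ARCHIVE_AFTER = timedelta(days=30)


@dataclass
class FileRecord:
    name: str
    last_access: datetime


def choose_tier(record: FileRecord, now: datetime) -> str:
    """Pick a target tier from the time since the file was last touched."""
    idle = now - record.last_access
    if idle >= ARCHIVE_AFTER:
        return "tier3-tape"
    if idle >= NEARLINE_AFTER:
        return "tier2-nearline"
    return "tier1-primary"


def plan_migrations(records, now):
    """Return (name, tier) pairs for every file the policy would place."""
    return [(r.name, choose_tier(r, now)) for r in records]


if __name__ == "__main__":
    now = datetime(2024, 1, 31, 12, 0)
    files = [
        FileRecord("promo_edit.mxf", now - timedelta(hours=2)),
        FileRecord("raw_ingest.mxf", now - timedelta(days=5)),
        FileRecord("finished_show.mxf", now - timedelta(days=90)),
    ]
    for name, tier in plan_migrations(files, now):
        print(f"{name} -> {tier}")
```

Here the two-hour-old edit stays on primary storage, the five-day-old ingest moves to nearline and the 90-day-old show goes to tape. A production HSM would likely also weigh access frequency, file size and project status, and would run the planner on a schedule rather than once.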

Eventually, that data would be archived to a tape system for long-term preservation and purged from spinning disk. Only the summary information, plus a pointer in a metadata database, would remain, so that if called upon, the detailed data could be retrieved (restored) to high-availability storage for other activities.
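The archive-and-stub step can be sketched in a few lines. In this hypothetical Python model, dictionaries stand in for disk, tape and the metadata database: archiving copies the payload to tape, purges it from disk and leaves only a summary and a pointer, and a recall follows that pointer to restore the data to disk. The function names and the `tape://` pointer format are illustrative assumptions, not any vendor's interface.

```python
# In-memory stand-ins for the storage tiers and the metadata database.
disk: dict[str, bytes] = {}
tape: dict[str, bytes] = {}
metadata: dict[str, dict] = {}


def archive(asset_id: str, summary: str) -> None:
    """Copy an asset to tape, purge it from disk and keep a stub record."""
    payload = disk[asset_id]
    tape_location = f"tape://vol001/{asset_id}"  # hypothetical pointer format
    tape[tape_location] = payload
    # Purge from disk only after the tape copy exists.
    del disk[asset_id]
    metadata[asset_id] = {"summary": summary, "pointer": tape_location, "online": False}


def recall(asset_id: str) -> bytes:
    """Restore an archived asset to disk by following its stored pointer."""
    record = metadata[asset_id]
    if not record["online"]:
        disk[asset_id] = tape[record["pointer"]]
        record["online"] = True
    return disk[asset_id]


if __name__ == "__main__":
    disk["txn-1001"] = b"full transaction detail"
    archive("txn-1001", "ATM withdrawal, settled")
    print("txn-1001" in disk)  # False: purged from disk
    print(metadata["txn-1001"]["pointer"])
    print(recall("txn-1001"))  # detail restored from the tape copy
```

Keeping the purge after the tape write mirrors the ordering a real archive would need, so that a failed copy never leaves an asset with no surviving instance.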

These processes were time-consuming and drive-intensive, and they drew resources away from other system activities to the point that an entirely separate storage migration server had to be deployed just to manage this continual data (and metadata) migration.

Most users, especially in the earlier days of digital media systems, didn’t have a media asset management (MAM) or HSM system to “watchdog” their assets. Thus, all the data would accumulate on the same storage system until its capacity was reached. Then someone would manually move the more important files to another storage medium.

THERE ARE RISKS

The risk of data loss could be enormous, and adding mirrored storage devices was costly or simply unaffordable. The advent of SSDs and automated processes has helped change that practice.

SSDs allow data to be managed at a much finer-grained level than array- or LUN-based storage tiers. They significantly reduce the time required for data migration, especially when operating at the block level rather than at the LUN (logical unit number) level.
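To see why block-level (sub-LUN) tiering shortens migration, compare how much data has to move in each case. The Python sketch below assumes a hypothetical 10 TiB LUN divided into 1 GiB extents, of which only some are hot. LUN-level tiering must relocate the whole volume to promote it, while sub-LUN tiering moves only the hot extents. The volume size, extent size and hot-extent count are made-up illustration values, not vendor figures.

```python
GIB = 1024 ** 3

# Hypothetical example volume: a 10 TiB LUN split into 1 GiB extents.
LUN_SIZE = 10 * 1024 * GIB
EXTENT_SIZE = 1 * GIB


def bytes_moved_lun_level(lun_size: int) -> int:
    """LUN-level tiering promotes or demotes the entire volume at once."""
    return lun_size


def bytes_moved_sub_lun(hot_extents: int, extent_size: int) -> int:
    """Sub-LUN tiering moves only the extents flagged as hot."""
    return hot_extents * extent_size


if __name__ == "__main__":
    hot = 200  # assumed number of actively used extents
    lun = bytes_moved_lun_level(LUN_SIZE)
    sub = bytes_moved_sub_lun(hot, EXTENT_SIZE)
    print(f"LUN-level move: {lun // GIB} GiB")
    print(f"Sub-LUN move:   {sub // GIB} GiB ({sub / lun:.1%} of the LUN)")
```

With these assumed numbers, sub-LUN tiering moves 200 GiB instead of 10,240 GiB, which is the kind of reduction that makes frequent, automated rebalancing practical.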

Previously system-taxing operations, which were dedicated to specialized servers, can now be deployed in software. Some vendors now claim data migration between tiers is achievable in real time, vastly improving performance and making much better use of the SSD data caches.

Enterprise-level storage systems depend upon consistent and predictable storage tier migration. These classes of systems often must not only continually replicate entire data sets among main, backup and even third-level (remote or geographically separated) storage platforms; they must also keep data close to the users who need it on the network, or calculate the fastest path through a fabric to achieve optimal performance for editors, ingest systems or delivery services.

Tiering is just one of the methods used by enterprise-level data systems to keep operations flowing and users happy.

Combining SSDs with spinning disk increases performance by balancing less active content, kept on disk, against highly active content, kept on SSD. When read/write production activity is low, software switches the SSDs’ function to storage tier management, and vice versa.
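A simplified model of this balancing act: count recent I/O per extent, keep the hottest extents on SSD up to its capacity, and run tier migrations only when production load is below a threshold. This Python sketch is a hypothetical illustration; the extent IDs, the three-extent SSD capacity and the 30% load threshold are assumptions, and real tiering engines use their own, typically proprietary, heuristics.

```python
from collections import Counter

SSD_CAPACITY_EXTENTS = 3   # assumed: SSD tier holds three extents
LOW_LOAD_THRESHOLD = 0.30  # assumed: migrate only below 30% utilization


def hottest_extents(io_log, capacity):
    """Rank extents by access count and keep the top `capacity` on SSD."""
    heat = Counter(io_log)
    return {extent for extent, _ in heat.most_common(capacity)}


def plan_cycle(io_log, on_ssd, load):
    """Return (mode, promote, demote) for one scheduling interval.

    When production load is high, the SSD keeps serving reads and writes
    and no data moves; when load is low, SSD time goes to tiering.
    """
    if load >= LOW_LOAD_THRESHOLD:
        return "serve-production", set(), set()
    target = hottest_extents(io_log, SSD_CAPACITY_EXTENTS)
    promote = target - on_ssd  # hot extents not yet on SSD
    demote = on_ssd - target   # SSD extents that have cooled off
    return "tier-management", promote, demote


if __name__ == "__main__":
    log = ["e1", "e2", "e1", "e5", "e5", "e5", "e7", "e2", "e9"]
    current = {"e1", "e3", "e4"}
    print(plan_cycle(log, current, load=0.85))
    print(plan_cycle(log, current, load=0.10))
```

Gating migration on load is one simple way to express the "and vice versa" behavior: the same SSD capacity serves production I/O when editors are busy and rebalances tiers when they are not.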

The results are transparent to users: productivity increases, while the need to add spindles or storage capacity to achieve similar results is reduced.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his current book “Moving Media Storage Technologies”. Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.