Advancing Lighting Techniques for Virtual Production

SMPTE2020 keynote explores ways to “join the real and virtual worlds through illumination”

In his keynote presentation on the second day of the SMPTE2020 conference, Paul Debevec outlined how he has been bridging what he called the gap between the real and virtual worlds. This is something Debevec, senior staff scientist with Google Research and a research professor at the Institute for Creative Technologies (ICT) at the University of Southern California, has been working on for much of his career.

Debevec opened his keynote, “Light Fields, Light Stages and the Future of Virtual Production,” by outlining the techniques that have been developed to "join the real and virtual worlds through illumination." This work goes back to research into image-based lighting (IBL) at the University of California, Berkeley, in the late 1990s. That research led to Light Stage 3, which comprises 156 RGB color LEDs. First shown at SIGGRAPH 2002, Light Stage 3 was used on David Fincher's 2010 film “The Social Network” to match the real and digital characters: the face of Armie Hammer, who played both Winklevoss twins, was composited onto the face of his stand-in.
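The keynote, as reported here, does not describe how Light Stage 3 decides what each LED should show. A simple way to picture lighting reproduction from an image-based lighting environment is to set each LED to the light arriving from its direction. The Python sketch below assumes a lat-long (equirectangular) environment map stored as nested lists of RGB tuples, a hypothetical Fibonacci-sphere LED layout (the real Light Stage 3 geometry differs), and plain nearest-LED binning. `fibonacci_sphere`, `latlong_direction` and `led_colors` are illustrative names, not part of any published system.

```python
import math

def fibonacci_sphere(n):
    """Return n roughly uniform unit vectors.
    Hypothetical stand-in for the real LED layout of a light stage."""
    golden = math.pi * (3.0 - math.sqrt(5.0))
    dirs = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - z * z)
        theta = golden * i
        dirs.append((r * math.cos(theta), r * math.sin(theta), z))
    return dirs

def latlong_direction(row, col, height, width):
    """Unit direction and solid angle of one pixel in a lat-long map."""
    theta = math.pi * (row + 0.5) / height      # polar angle measured from +z
    phi = 2.0 * math.pi * (col + 0.5) / width   # azimuth
    d = (math.sin(theta) * math.cos(phi),
         math.sin(theta) * math.sin(phi),
         math.cos(theta))
    solid_angle = (math.pi / height) * (2.0 * math.pi / width) * math.sin(theta)
    return d, solid_angle

def led_colors(env, led_dirs):
    """Accumulate each pixel's radiance times its solid angle into the LED
    whose direction is closest (largest dot product). Assumed scheme."""
    height, width = len(env), len(env[0])
    totals = [[0.0, 0.0, 0.0] for _ in led_dirs]
    for row in range(height):
        for col in range(width):
            d, w = latlong_direction(row, col, height, width)
            nearest = max(range(len(led_dirs)),
                          key=lambda i: sum(a * b for a, b in zip(d, led_dirs[i])))
            for c in range(3):
                totals[nearest][c] += env[row][col][c] * w
    return totals
```

For a uniform environment of radiance 1, the LED totals should sum to roughly 4π, the solid angle of the full sphere, whichever LED each pixel lands on. A production system would also filter the map and calibrate each LED's output, which this sketch leaves out.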

REALISTIC & NATURAL

The key to making such work convincing, Debevec said, was to make the lighting on the objects or people placed into a scene look realistic and natural. Even when this had been achieved, he continued, a limitation remained: the composited subject showed no reflections of the lighting, or of other elements such as scenery, present in the original shoot.

Fortunately, the technology for achieving this has advanced. On the 2013 Sandra Bullock film “Gravity,” lighting crews used 9mm LED panels to create realistic reflections in the actress’s helmet visor. The approach was taken further still on the Chinese production “Asura” (2018), for which an enormous LED set measuring 85 x 68 x 27 feet was built so that an actor could be composited into a virtual mountain scene with fully matched lighting.

DEEP VIEW VIDEO SYSTEM

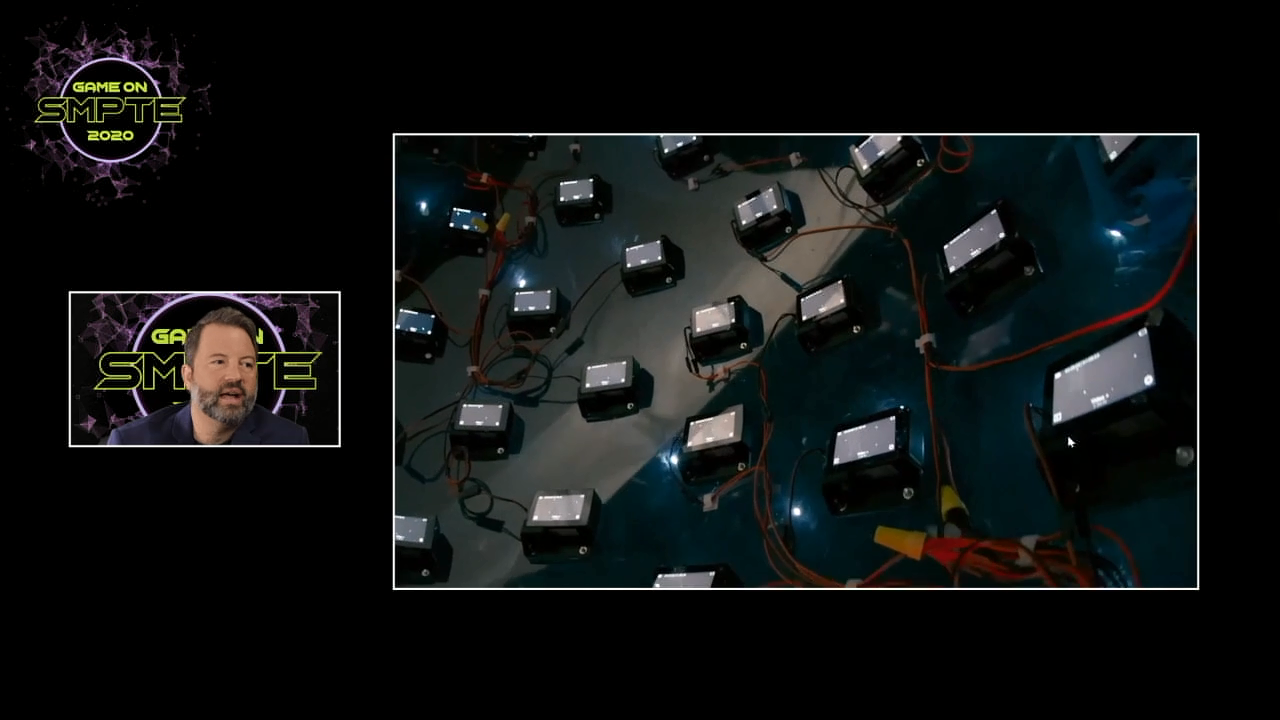

The latest development Debevec has been working on at Google is the “Deep View Video System,” which grew out of his idea of using a sphere of small cameras in a fisheye/cylindrical configuration to produce a more immersive effect. Built from $6,000 worth of sports action cameras mounted with 3D-printed fixtures on a $200 acrylic hemisphere and linked by synchronization cables, the rig records 46 videos of a scene, each looking in a different direction.

A neural network is trained to transform the 46 videos into about 100 spherical layers of RGBA (color plus alpha) images, which can reproduce both the views that were shot and the views in between them. Through compression and rendering, the 100 layers are reduced to approximately 16 layers of depth-mapped meshes (low-resolution geometry) with high-resolution alpha textures. These can be compressed with temporal stability, delivered as a 4K or 8K video stream and played back in real time on mobile VR hardware.
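The article does not spell out how the layers are combined at display time. A standard way to render a stack of RGBA layers is back-to-front "over" compositing along each viewing ray. The Python sketch below assumes premultiplied-alpha RGBA tuples for a single ray, ordered nearest-first, and adds a hypothetical `reduce_layers` helper that merges 100 layers into 16 by pre-compositing consecutive groups. This is a deliberate simplification for illustration, not the learned reduction to layered meshes described in the keynote.

```python
from typing import List, Tuple

# Premultiplied color plus alpha: (r*a, g*a, b*a, a)
RGBA = Tuple[float, float, float, float]

def over(front: RGBA, back: RGBA) -> RGBA:
    """Porter-Duff 'over' for premultiplied RGBA: front + (1 - alpha_front) * back."""
    k = 1.0 - front[3]
    return tuple(f + k * b for f, b in zip(front, back))

def composite(layers: List[RGBA]) -> RGBA:
    """Composite layers ordered nearest-first, working from the back forward."""
    result: RGBA = (0.0, 0.0, 0.0, 0.0)  # fully transparent starting value
    for layer in reversed(layers):
        result = over(layer, result)
    return result

def reduce_layers(layers: List[RGBA], groups: int) -> List[RGBA]:
    """Hypothetical helper: merge consecutive layers into `groups` pre-composited layers."""
    n = len(layers)
    bounds = [round(i * n / groups) for i in range(groups + 1)]
    return [composite(layers[bounds[i]:bounds[i + 1]]) for i in range(groups)]

# Usage: 100 semi-transparent layers for one ray, reduced to 16.
layers = [(0.6 * a, 0.3 * a, 0.1 * a, a)
          for a in (0.05 + 0.01 * (i % 7) for i in range(100))]
reduced = reduce_layers(layers, 16)
print(composite(layers), composite(reduced))
```

Because "over" is associative, merging consecutive layers leaves the result unchanged for the ray used to merge them. That only holds for that one ray, however: from other viewpoints the layers shift relative to one another (parallax), so a naive merge like this would not survive head movement.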

The result, Debevec said, is that video can be recorded and watched in VR with a greater feeling of really being there, and with the ability to look around and move comfortably within a scene. The system can be experienced by going to http://augmentedperception.github.io/deepviewvideo.

Debevec invites colleagues to contact him (www.debevec.org) with suggestions for other applications of the technology.

Kevin Hilton has been writing about broadcast and new media technology for nearly 40 years. He began his career as a radio journalist but moved into magazine writing during the late 1980s, working on the staff of Pro Sound News Europe and Broadcast Systems International. Since going freelance in 1993 he has contributed interviews, reviews and features about television, film, radio and new technology to a wide range of publications.