Internet Archive houses all TV news since 2009

The professional video industry's #1 source for news, trends and product and tech information. Sign up below.

You are now subscribed

Your newsletter sign-up was successful

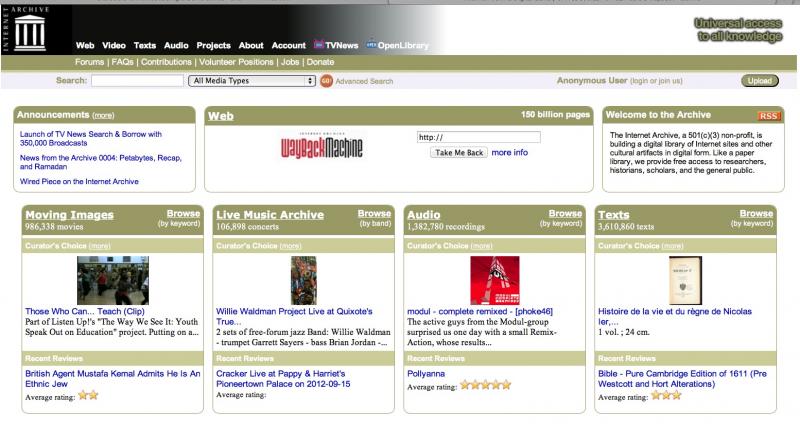

The Internet Archive, a giant aggregator and digitizer of data, now includes all of the television news broadcast over the past three years by 20 channels. The collection spans more than 1,000 news series, which together account for more than 350,000 individual programs.

Brewster Kahle, founder of the Internet Archive, told The New York Times that his organization, based in Richmond, CA, “wants to collect all the books, music and video that has ever been produced by humans.”

The massive online resource has already digitized millions of books and has attempted to archive every page published on the web over the last 15 years, more than 150 billion pages in all. The material is intended for both researchers and the general public, and the site now draws 2 million visitors per day.

“The focus is to help the American voter to better be able to examine candidates and issues,” Kahle told The New York Times. “If you want to know exactly what Mitt Romney said about health care in 2009, you’ll be able to find it.”

Kahle said the news outlets being catalogued include CNN, Fox News, NBC News, PBS and “every purveyor of eyewitness news on local television stations.” Jon Stewart’s “The Daily Show” is also included. “We think of it as news,” Kahle said.

The Internet Archive has been quietly recording programming from all of these outlets, including every edition of “60 Minutes” on CBS and every minute of every day on CNN. All of the material is available to users free of charge.

Search currently relies on the closed captions that accompany the news programs, Kahle told the newspaper. The user enters search terms along with a rough time frame for when the story was reported, and matching news clips are returned.


Although a search may return hundreds of matches, he said the system's interface makes it easy to browse quickly through 30-second clips to find the right one. Researchers who want a copy of an entire program can have a DVD sent to them on loan.

The inspiration for the massive databank is the Library of Alexandria, the great repository of knowledge of the ancient world, in Egypt. Kahle told the newspaper that early efforts to assemble the collected works of civilization were on his mind when he conceived the idea of using the almost limitless capacity of the web to build a modern equivalent.

“You could turn all the books in the Library of Congress into a stack of disks that would fit in one shopping cart in Best Buy,” Kahle said. He estimated that the archive now contains about 9000TB of data. By contrast, The New York Times reported that the digital collection of the Library of Congress is a little more than 300TB.

Kahle said he turned to the archive project after founding and selling two data-mining companies, one to AOL and the other to Amazon. The television news project, he told the newspaper, is financed mainly through outside grants, though he put up some of his own money to get it started.

Grants from the National Archives, the Library of Congress, and other government agencies and foundations make up the bulk of the project's financing. Kahle put the annual budget at $12 million and said the project employs about 150 people.

The New York Times reported that copying all this news material is protected under a provision of the 1976 federal copyright law. That provision was a response to a legal challenge to a news-archiving project that Vanderbilt University started in 1968.

The archive, Kahle said, has no intention of replacing or competing with the web outlets owned by the news organizations. He said new material will not be added until 24 hours after it is first broadcast.

He plans to work backward year by year, gradually adding news video that reaches back to the start of the television era. That will require new search technology, because closed captioning came into common use only around 2002.