Equipping Apollo for Color Television



(The first installment in this three-part series examined the very special camera used to capture images during mankind's first visit to the moon's surface 40 years ago. This installment looks at the technology used to produce color video during that same mission.)

Alexandria, VA: When Neil Armstrong set foot on the moon in the evening hours of July 20, 1969, only a very small percentage of the roughly half-billion-person global viewing audience was even mildly annoyed that the images were being transmitted in black and white. On the one hand, the feat was so dramatic and remarkable that most marveled it could be done at all. On the other, color television receivers were only then beginning to appear in significant numbers, so the majority of viewers didn't notice the lack of color.

While there was a color camera on the Apollo 11 mission, it was not part of the equipment package contained in the Lunar Excursion Module, or LEM. The very specialized Westinghouse camera that Armstrong carried outside the LEM produced only black and white images.

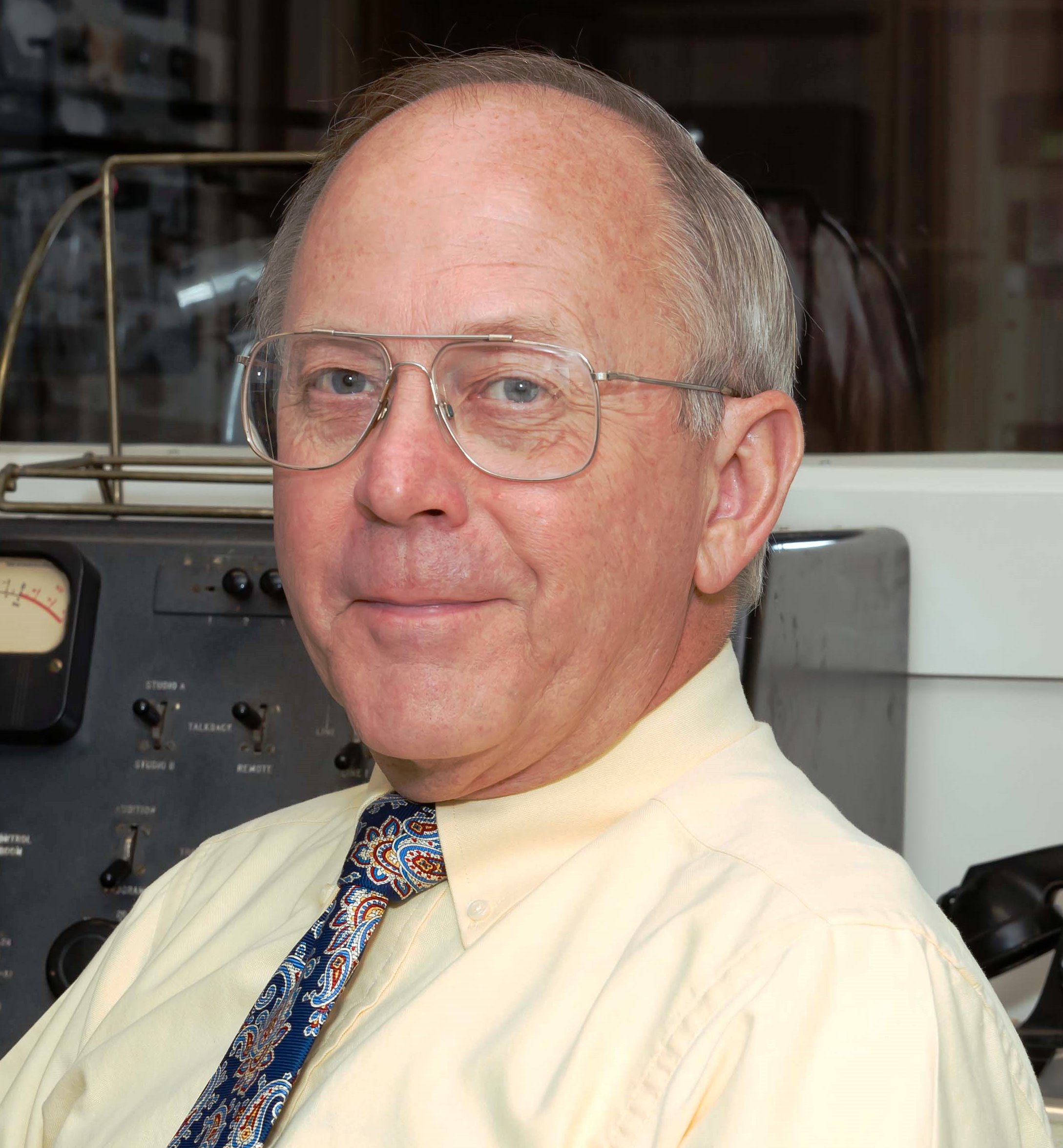

A Westinghouse employee appears with the custom-assembled video monitor and the Snoopy "spacedog" doll used to show off the capabilities of the single-tube color camera built for Apollo space missions.

Dick Nafzger, a NASA employee involved in video for more than four decades, explained the decision to restrict the feed from the lunar surface to black and white.

"We had narrowed the bandwidth as much as possible to try and get a good S/N," he said. "Getting TV from the moon was a big unknown. We figured that slow scan video would give us the best chance for locking onto [the signal] with a 210-foot dish."

Nafzger observed that at the time, Apollo missions had a fairly wide bandwidth FM link to Earth for telemetry and voice communications, so wide, in fact, that it was possible to send nearly full bandwidth 525/60 NTSC video back from both the command module and the lunar lander. However, a design decision was made to limit the LEM video transmissions to 500 kHz to assure a good signal-to-noise ratio from the moon's surface, as there were many unknowns, including a small transmitting antenna and a low power transmitter. This necessitated a reduced resolution/slow scan television system.

"It was obvious that we couldn't do color," he said. "The first [lunar landing] mission wasn't going to change to include a color camera. It was only after this that we began to get confident that we could handle three MHz from the moon's surface."

The color images that did originate during the July 1969 moon mission came from the Apollo command module, the ship that orbited the moon with Michael Collins remaining on board as Neil Armstrong and Edwin "Buzz" Aldrin Jr. trod the lunar surface below. The camera there had been used during the three-day journey from the Kennedy Space Center to the initial lunar orbit.
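The bandwidth trade-off Nafzger describes can be put in rough numbers. The sketch below assumes flat (white) noise and an unchanged received signal power, so that noise power scales directly with bandwidth; both are simplifying assumptions made here, not figures from NASA, and the behavior of the real FM link would have been more involved. The function name `snr_gain_db` is hypothetical.

```python
import math


def snr_gain_db(wide_hz: float, narrow_hz: float) -> float:
    """Noise-power reduction, in dB, from narrowing the channel.

    Assumes white noise (power proportional to bandwidth) and the
    same received signal power in both cases.
    """
    return 10.0 * math.log10(wide_hz / narrow_hz)


# 500 kHz is the LEM video limit cited above; three MHz is the figure
# Nafzger mentions for later transmissions from the surface.
gain = snr_gain_db(3.0e6, 500e3)
print(f"{gain:.1f} dB")  # prints "7.8 dB"
```

Under those assumptions, the narrower slow-scan channel buys a little under 8 dB of signal-to-noise margin, which gives a feel for why the first landing stayed with monochrome.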

HOW THE COLOR VIDEO PROGRAM ORIGINATED

Westinghouse beat out two television industry giants, GE and RCA, in supplying the special camera used for the first moonwalk and, in what was considered a real coup, also kayoed RCA, the number one color promoter, in supplying the first color camera used by Apollo astronauts. And Apollo 11 wasn't even the first mission to have color capability. The Apollo 10 "dress rehearsal" mission took that honor in May of 1969.

Early on in NASA's project timetable, no real thought had been given to color transmissions. In fact, during the early and even mid-1960s, color television was pretty much ignored by everyone. Few people had color receivers, and the networks and local broadcasters transmitted so little color then that ownership of a color set was hard to justify. That finally began to change in 1966 when NBC launched a full color schedule.

Stanley Lebar, the Westinghouse project manager in charge of the Apollo camera development program, recalled that some people in the project were mindful of this sea change and began to consider adding color to the imaging gear they had been contracted to build.

However, just as the television camera carried by Armstrong was no ordinary monochrome model, a strictly off-the-shelf approach wouldn't work for color pickup from space either.

Weight, bulk and power consumption were big driving factors in any equipment carried on NASA missions. The only commercially available color cameras were constructed around three pickup tubes and color-splitting optic systems, and in addition to being physically large, had equally large appetites for electricity.

AN OLD SOLUTION TO A NEW PROBLEM

More than a decade earlier, CBS's Peter Goldmark had perfected a color television system based on a single pickup tube with a tri-color filter spinning in front of it, creating samples of red, blue, and green scene information to be sent in sequence to a similarly equipped viewing device, an approach known as field sequential color. While it looked good (no registration problems), was relatively simple to implement, and was fairly cost effective, it had one very large drawback. The system produced video (405 lines/144 fields) that was not backwardly compatible with the millions of television receivers already in use. Whenever CBS turned on the color, it lost virtually all of its viewing audience, consigning the Goldmark system to an early grave.

Field sequential color had a lot to offer NASA, though. For starters, there would be no compatibility problem, as video transmitted to earth was not exactly "standard" anyway and had to undergo a conversion to feed the networks. The single-tube approach to color made for a small camera with low power demands and few operational controls, a natural for extraterrestrial applications.

Some time before either 1969 Apollo mission, Lebar's group schemed up an outboard color adapter for monochrome cameras.

"The team came up with the idea of using a self-contained color wheel unit that would slip over the lens for field sequential color," Lebar said. "NASA was quite excited about this."

To maintain color synchronism, this outboard converter had to keep its color wheel spinning very accurately. Lebar's group turned to a watchmaker for this.

"A contract was awarded to Bulova for the timing mechanism," Lebar said. "Their Accutron watch division worked on it. It was just about finished when we got word that it wouldn't be used on Apollo 11. The contract was cancelled and the project shelved after about $100,000 was spent on it."

A more solid approach to color was realized by adapting an existing Westinghouse military spec camera.

"We added the color wheel and other elements and made sure that it satisfied NASA," said Lebar. "We turned it around rather quickly, as it was already built to high military specifications. The first engineering model was finished in four or five weeks."

Shortly after the final touches had been placed on the modified camera and Lebar observed its color output, he called his counterpart in Houston with the news.

NO PEACOCK AVAILABLE FOR DEMO

"I alerted him to the fact that we had built and tested this camera," Lebar said. "His response was 'we'll get back to you.' They did so within the hour, asking that I take the camera to the Goddard facility [in Greenbelt, Md.] and operate it. They would set up a line between Goddard and Houston and observe it there. Since this was such short notice, the best thing we could come up with to show off the color was toy balloons."

The demonstration proved to be an instant hit, with Lebar being requested to take the camera to Houston for astronaut Tom Stafford's evaluation.

"It was a complete package now," said Lebar. "We'd added a small monitor for viewing the images in the command module, and also a zoom lens. After Stafford looked it over, he was satisfied that it would work. I was then asked if we could get it tested in time for the Apollo 10 launch. They needed it at least a week before the launch date."

The Westinghouse team worked around the clock to transform their engineering model into a space-worthy camera. It was ready by the appointed time, or almost. The lack of a suitable motor to drive the color wheel resulted in it being driven at a slightly different speed than that needed for a solid color lock.

"We were told that it would take months to get the special motor we needed," Lebar said. "The one we used resulted in a small interference band that was visible in the video. This was corrected in time for the Apollo 11 mission."

Getting the red/blue/green sequential fields back to earth and ready for viewing on the NTSC color sets that existed then took a little effort too. A special standards converter was constructed and installed at NASA's Houston facility.
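The basic job of such a converter can be sketched in a few lines. This is only an illustration of the field sequential principle, not a description of the Houston hardware: the wheel order in `COLOR_ORDER`, the function name `sequential_to_simultaneous`, and the use of a simple dictionary as field storage are all assumptions made for the example.

```python
COLOR_ORDER = ("R", "B", "G")  # assumed filter order, for illustration only


def sequential_to_simultaneous(fields):
    """Turn a stream of single-color fields into full-color pictures.

    `fields` is any iterable of monochrome fields arriving in the
    repeating COLOR_ORDER sequence. The most recent field of each color
    is held in storage, and once all three are available a combined
    (red, green, blue) picture is emitted for every new field.
    """
    latest = {}
    for index, field in enumerate(fields):
        color = COLOR_ORDER[index % len(COLOR_ORDER)]
        latest[color] = field
        if len(latest) == len(COLOR_ORDER):
            yield (latest["R"], latest["G"], latest["B"])


# Five incoming fields, labeled by color and capture order.
incoming = ["r0", "b0", "g0", "r1", "b1"]
for picture in sequential_to_simultaneous(incoming):
    print(picture)
```

Because the three colors in any one output picture were captured at slightly different moments, fast motion in the scene can show up as color fringing, a known trait of field sequential systems.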

"There was only one converter," said Nafzger. "Signals were sent from the tracking stations to Houston as black and white NTSC (525/60) video. The converter pulled out the red, blue and green and sent it out as NTSC color."

Nafzger admitted that relaying color images from space really wasn't quite as simple as it sounds 40 years later.

"We had three megahertz or so of bandwidth," he said. "But there was an audio carrier at 1.25 MHz and another for telemetry at 1.025 MHz. We were hitching a ride on a spacecraft system that had already been designed. The data was where it was at, and it was not going to be changed just to accommodate TV."

Nafzger said that initially, notch filters were used to pull as much of the undesired carriers out of the video bandpass as possible. Later, special phase cancellation circuitry was developed.

Stan Lebar in 1969 with the Westinghouse color and monochrome cameras used in the Apollo lunar mission

"The trick was to get rid of the audio and telemetry, not the video," Nafzger said. "Notch filters worked, but removed a lot of the video as well. The 180 degree phase cancellation was much better."
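The idea behind 180 degree phase cancellation can be illustrated with a small digital sketch, although the 1969 circuitry was analog and its details are not given here. The code estimates the amplitude and phase of a steady carrier by correlating the received signal against a sine and cosine at the carrier frequency, then subtracts a matching copy, which is the same as adding one 180 degrees out of phase. The 1.25 MHz carrier comes from Nafzger's description; the sample rate, the amplitudes, the 50 kHz stand-in for video, and the function name `cancel_tone` are assumptions chosen for the example.

```python
import math


def cancel_tone(samples, tone_hz, sample_rate_hz):
    """Remove a steady sine-wave carrier from `samples`.

    The carrier's in-phase and quadrature amplitudes are estimated by
    correlation over the whole block, and a copy of the carrier is then
    subtracted (equivalent to adding it 180 degrees out of phase).
    """
    n = len(samples)
    w = 2.0 * math.pi * tone_hz / sample_rate_hz
    i_amp = 2.0 / n * sum(s * math.cos(w * k) for k, s in enumerate(samples))
    q_amp = 2.0 / n * sum(s * math.sin(w * k) for k, s in enumerate(samples))
    return [
        s - (i_amp * math.cos(w * k) + q_amp * math.sin(w * k))
        for k, s in enumerate(samples)
    ]


# Illustrative values only: a 10 MHz sample rate and a block length that
# holds a whole number of cycles of both test signals.
SAMPLE_RATE_HZ = 10.0e6
N = 4000
video = [0.3 * math.sin(2.0 * math.pi * 50.0e3 * k / SAMPLE_RATE_HZ)
         for k in range(N)]
carrier = [0.5 * math.sin(2.0 * math.pi * 1.25e6 * k / SAMPLE_RATE_HZ + 0.7)
           for k in range(N)]
received = [v + c for v, c in zip(video, carrier)]

cleaned = cancel_tone(received, 1.25e6, SAMPLE_RATE_HZ)
worst_error = max(abs(c - v) for c, v in zip(cleaned, video))
print(f"largest leftover error: {worst_error:.2e}")
```

A notch filter removes everything near the carrier frequency, wanted picture detail included. Cancellation removes only the steady component that matches the carrier, so any video content that does not correlate with it over the block is left in place.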

THE POLITICS OF COLOR

Lebar recalled that some of the problems Westinghouse had with their color camera came not from engineering constraints, but rather from sources completely outside the company.

"Peter Goldmark was trying to claim credit for the color system," Lebar said. "We had to fight him off continually. He said that anything that anyone did was infringing on his work. The color wheel was really all that we used. The frame rates he had used were totally incompatible with NTSC. It wasn't the same animal at all. He didn't have the technology available in the early 1950s that we were using."

Lebar said that CBS Labs took credit for both the camera and the color scan converter.

"Both were Westinghouse products," he said.

The other force was RCA. The company's president, David Sarnoff, had locked horns with Goldmark and CBS in the early 1950s over a color standard for U.S. television. RCA invested a tremendous amount of manpower and money on a backwardly compatible color system and Sarnoff was obviously upset that it was Westinghouse, and not his company, that got the NASA nod for bringing back color images from space.

"Sarnoff was fighting desperately to get color television presence in connection with the Apollo missions," Lebar said. "But he just would not allow his people to go with sequential color and this kept him out of the competition."

RCA was not to have color involvement until much later. According to Lebar, this was as the supplier of a field sequential camera used on the lunar "rover" vehicle on the Apollo 15 mission in 1971.

"Sarnoff really had to eat crow to go with the sequential approach," said Lebar. "He finally went to NASA and agreed to build a special field sequential camera and to provide extra [NBC] network coverage for the space missions."

Lebar added that as a bit of final insult, the deployment of the RCA rover camera was viewed back on earth with the Westinghouse camera. That mission did spell the end of Westinghouse cameras in space, though.

[Editor's note: Following the publication of the first installment of this series, another player in the Apollo video project has emerged and contacted the author with his recollections about the addition of color television to the mission.]

Renville McMann, Jr., was vice president and the director of engineering for CBS Laboratories and was working with Dr. Goldmark during the Apollo years. He recalls the field sequential color camera portion of the project quite well, and offers another perspective.

"We had been working with the Westinghouse Elmira, N.Y. tube plant in connection with a field sequential color camera for news broadcasting, medical programs and military reconnaissance purposes, using their SEC (secondary electron conduction) camera tube," McMann said. "There were no good quality portable color cameras then. When we learned that the Apollo 11 video would be in black and white, we questioned why it couldn't be in color and sent two of our representatives, Art Kaiser and Henry Mahler, to Houston to ask about this. NASA said that it was just too late at that point. We then sent a working camera down there and became a consultant to both Westinghouse and NASA. We fully cooperated with Westinghouse and NASA and explained the intricacies of doing field sequential color."

McMann says that it was CBS Labs that provided Westinghouse with a sample color wheel and perhaps the motor used to drive it.

"A color wheel with pie-shaped filter cutouts doesn't work," McMann said. "These have to be specially shaped to provide an equal amount of light exposure for each color. We gave them a wheel to show what the proper shape had to be, saving them time in designing their own."

McMann also remembers that it was CBS Labs who invented the magnetic disk drive-based video converter technology for transforming field sequential images into "standard" NTSC color television signals. (McMann filed for a patent on the disk drive video TV field storage principle in 1966. It was awarded four years later.)

"We described how to build the converter to both Westinghouse and another outfit," he said. "The NASA converter was built in accordance with our teachings."

McMann recalled that the CBS Labs color camera made a major impression on NASA.

"They saw it and said 'that's what we want,'" said McMann. "Later, when live images were sent from Apollo it was a glorious day for Peter Goldmark. Dr. Goldmark was delighted that a color system whose principles he had pioneered in the 1940s was used in a modern version to bring back color video from a spacecraft."

[Part three of this series explores recording technology for capturing the non-standard lunar video and also the long quest to find a group of NASA recordings covering the historic July 20, 1969 manned lunar landing.]


James E. O’Neal has more than 50 years of experience in the broadcast arena, serving for nearly 37 years as a television broadcast engineer and, following his retirement from that field in 2005, moving into journalism as technology editor for TV Technology for almost the next decade. He continues to provide content for this publication, as well as sister publication Radio World, and others. He authored the chapter on HF shortwave radio for the 11th Edition of the NAB Engineering Handbook, and serves as contributing editor of the IEEE’s Broadcast Technology publication, and as associate editor of the SMPTE Motion Imaging Journal. He is a SMPTE Life Fellow, and a member of the SBE and Life Senior Member of the IEEE.