Storage System Limitations Impact Workflows and Growth

Integrating high-performance storage solutions into rich-media, file-based workflows brings a range of topics to the surface, one of which is why and how to select a storage system.

With the proliferation of disk-storage systems throughout the marketplace, prescribing the correct storage platform is becoming more important than ever before.

For small-scale systems, selecting a storage solution may be relatively straightforward. However, for systems that are expected to grow significantly, must support many different kinds of clients and workflows, or bridge into other platforms, configuring a proper storage solution with few limitations becomes a much broader task.

We are beginning to see the impacts of improperly sizing performance requirements for both the storage and the server subsystems. Poor storage-system performance will slow workflows significantly. Bottlenecks may not show up at first, while users are still growing accustomed to the advantages of file-based workflows, but deficiencies in the sizing and scaling of the storage system will certainly lead to reduced overall system performance.

The obvious cause of a storage-performance deficiency might at first seem to be insufficient storage capacity. However, other hidden issues become performance killers once a large-scale storage solution is required.

Small-scale, dedicated storage platforms are usually tailored for the specific components and workflows that are initially attached to the storage system. Some systems will combine editing interfaces, simplified media asset management and storage into a single “package.” These solutions are often groomed so that the subcomponents are matched for specific throughput, access and collaboration. Such systems are usually optimized mainly for the initial requirements of a specifically defined workflow. Often these systems are configured into a compact, economical system that meets the objectives of the users in their current working environment.

When a system is configured for only a few editing workstations, optimal storage performance parameters are relatively easy to achieve. Smaller systems generally do not require a significant number of spindles (hard drives) to achieve what are usually modest bandwidth requirements aimed at general editing or file-migration activities.
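To make the spindle argument concrete, here is a minimal Python sketch that estimates drive count from a bandwidth target. The function name, the per-drive throughput and the derating factor for multi-stream access are all assumptions chosen for illustration, not vendor figures; substitute measured numbers for real drives.

```python
import math


def spindles_for_bandwidth(required_mbytes_per_s: float,
                           per_drive_mbytes_per_s: float,
                           efficiency: float = 0.5) -> int:
    """Estimate how many drives are needed to sustain a bandwidth target.

    per_drive_mbytes_per_s: assumed sustained throughput of a single drive.
    efficiency: hypothetical derating factor for the throughput lost when
    many clients access the same disks concurrently.
    """
    if required_mbytes_per_s <= 0:
        return 0
    usable_per_drive = per_drive_mbytes_per_s * efficiency
    # Round up: a fraction of a drive still means buying a whole drive.
    return math.ceil(required_mbytes_per_s / usable_per_drive)


# Hypothetical small edit system: 8 editors, each pulling about 25 MB/s.
small = spindles_for_bandwidth(8 * 25, per_drive_mbytes_per_s=100)
# Hypothetical enterprise load of about 1,200 MB/s aggregate.
large = spindles_for_bandwidth(1200, per_drive_mbytes_per_s=100)
print(small, large)
```

Under these assumptions the small system needs only a handful of drives while the enterprise load needs six times as many, which is why spindle count rarely limits small systems but becomes a design driver at scale.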

When a facility’s needs reach enterprise levels, the storage and server systems may have to handle dozens of craft editors, graphics systems, closed-caption processing, audio sweetening, external MOS interfaces and more. The facility may also employ multiple ingest and play-out servers and transcoders. Once a system approaches this size and scale, the storage-system architecture becomes a critical factor in end-to-end performance.

ENTERPRISE CLASS

In larger, “enterprise class” systems, those with a high degree of media asset management (MAM) services, the requirements to handle and process significantly more activities may be orders of magnitude greater than when just 5 or 10 editing stations are attached to a small storage area network (SAN) or network-attached storage (NAS).

Many of these activities may run in the background, consuming system bandwidth even though they may not directly affect storage access or capacity on a significant scale. As a facility’s overall system requirements increase, some dedicated “purpose-built” storage systems (those composed of spinning disks in a straight RAID configuration) may be unable to keep up with the ever-expanding need to migrate data (content) between the various subsystems and workflows.

For systems incorporating a MAM, an enormous number of content, data and metadata files will be generated continuously. The system data and metadata files are typically handled by IT-centric server hardware sized to manage the processes that occur throughout the various workflows.

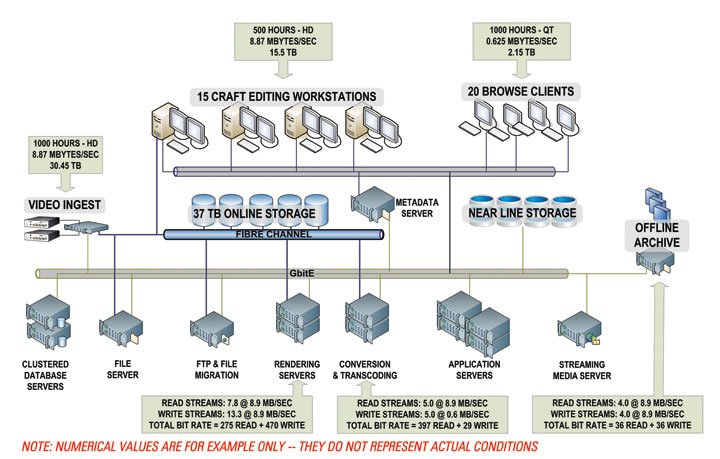

Fig. 1: A modest-scale “enterprise class” digital media file-based production system, showing the common components, servers, clients and storage systems. Inserted text boxes describe some of the typical data rates and stream counts for a system of this size. The total bit rate (including some additional items not shown on this diagram) for a system of this dimension might easily approach 2.3 Gbps of system bandwidth, plus additional services for near-line NAS or DLC gateways in the 140–150 Mbps range. Additional IT servers can be deployed to manage the content (raw files, proxies and renderings) that shuffles between devices (encoders, decoders, transcoders) and storage systems (SAN, NAS or local direct-attached storage (DAS) caches on the devices).

In larger systems involving numerous workflows occurring simultaneously, all of the various services involving file exchanges must be thoroughly accounted for. Examples (see Fig. 1) include ingest operations with proxy generation and transcode functions; migration of files from local storage to central storage and archive; edit-in-place and/or file exchanges between central storage and editing workstations; layering and rendering; and video servers in support of QC, baseband play-out, video-on-demand or streaming.
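Accounting for every file-exchange service can start as a simple tally. The Python sketch below sums per-service stream counts and bit rates and keeps reads and writes separate, since each direction loads the storage differently. The `Service` class, the service list and every number in it are hypothetical; they are not read from Fig. 1.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    streams: int
    mbps_per_stream: float  # megabits per second, per stream
    direction: str          # "read" or "write", relative to central storage

    @property
    def total_mbps(self) -> float:
        return self.streams * self.mbps_per_stream


def tally(services):
    """Sum bandwidth per direction so both reads and writes are accounted for."""
    totals = {"read": 0.0, "write": 0.0}
    for s in services:
        totals[s.direction] += s.total_mbps
    return totals


# Hypothetical stream counts and bit rates for illustration only.
services = [
    Service("ingest, high-res", 4, 50.0, "write"),
    Service("proxy generation", 4, 2.0, "write"),
    Service("edit-in-place", 12, 100.0, "read"),
    Service("render write-back", 2, 100.0, "write"),
    Service("play-out / QC", 4, 50.0, "read"),
]

totals = tally(services)
aggregate_gbps = (totals["read"] + totals["write"]) / 1000
print(totals, f"{aggregate_gbps:.2f} Gbps")
```

Adding a row per service, including background jobs such as archive migration, keeps the hidden loads described above from dropping out of the estimate.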

MAKING PREDICTIONS

Fundamental to specifying overall system performance, especially as it relates to the bandwidth of the storage system and the network interfaces, is the task of accurately predicting the total number of data reads and writes expected from the system.

System sizing now involves not only storage capacity, accessibility and bandwidth but also the network storage interfaces, accelerators and the ability to correct ambiguous data, which can occur during writes to the disk drive itself.

The overall system design needs to manage and mitigate all potential performance limitations. Design parameters must include the number of spindles supporting the storage solution; the number of read and write cycles per group of disks; the type of interface to the storage (e.g., Fibre Channel vs. Gigabit Ethernet vs. InfiniBand); file sizes and formats; and the number of input/output operations per second (IOPS).
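Several of these parameters interact. A minimal Python sketch, assuming that every front-end write pays the commonly quoted RAID write penalty (2 for RAID 10, 4 for RAID 5, 6 for RAID 6), converts read and write bandwidth into IOPS for a given I/O size, applies the penalty, and estimates spindles from an assumed per-drive IOPS figure. The function name and all input values are hypothetical.

```python
import math

# Rule-of-thumb back-end I/Os generated per front-end write (assumed values).
RAID_WRITE_PENALTY = {"raid0": 1, "raid10": 2, "raid5": 4, "raid6": 6}


def spindles_for_iops(read_mib_s: float, write_mib_s: float,
                      io_size_kib: float, raid: str,
                      per_drive_iops: float) -> int:
    """Estimate drive count from bandwidth, I/O size and RAID level."""
    # Front-end operations per second for each direction.
    read_iops = read_mib_s * 1024 / io_size_kib
    write_iops = write_mib_s * 1024 / io_size_kib
    # Each write fans out into several back-end I/Os on a parity/mirror set.
    backend_iops = read_iops + write_iops * RAID_WRITE_PENALTY[raid]
    return math.ceil(backend_iops / per_drive_iops)


# Hypothetical load: 600 MiB/s of reads, 200 MiB/s of writes, 1 MiB I/Os,
# on drives assumed to deliver about 150 IOPS each.
for level in ("raid10", "raid5", "raid6"):
    print(level, spindles_for_iops(600, 200, 1024, level, per_drive_iops=150))
```

The penalty model overstates the cost of large sequential, full-stripe writes, which are common for media essence, so treat the result as a conservative bound rather than a design figure.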

As a benchmark, once the aggregate read+write bandwidth reaches 1–1.5 GBps (gigabytes per second), conventional commodity storage components probably become insufficient, and an enterprise-class, media-centric storage solution needs to be considered.
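Because stream and link rates are usually quoted in gigabits per second (Gbps) while this benchmark is in gigabytes per second (GBps), a factor-of-eight conversion is easy to miss. The Python sketch below converts an aggregate rate and classifies it against the 1–1.5 GBps band. The function names are hypothetical, and treating the band as a "transition zone" is one interpretation of the benchmark, not a hard rule.

```python
def gbps_to_gbytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def classify(aggregate_gbytes_per_s: float,
             low: float = 1.0, high: float = 1.5) -> str:
    """Place an aggregate read+write rate relative to the 1-1.5 GBps benchmark."""
    if aggregate_gbytes_per_s < low:
        return "commodity storage likely sufficient"
    if aggregate_gbytes_per_s < high:
        return "transition zone: evaluate enterprise-class storage"
    return "enterprise-class, media-centric storage indicated"


# The roughly 2.3 Gbps system described with Fig. 1.
fig1 = gbps_to_gbytes_per_s(2.3)
print(f"{fig1:.4f} GBps ->", classify(fig1))
# A hypothetical facility moving 10 Gbps in aggregate.
print(classify(gbps_to_gbytes_per_s(10.0)))
```

By this measure the system in Fig. 1 works out to about 0.29 GBps before near-line services are added, well below the benchmark.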

Getting these parameters right improves the ability to share access to all content, at both high resolution and proxy levels, at all times.

Balancing all these demands among all the clients and users, whether attached directly as “thick-client” workstations or when in a Web-based interface, is essential to maintaining system stability, achieving high performance and providing sufficient availability.

Karl Paulsen (CPBE) is a SMPTE Fellow and chief technologist at Diversified Systems. Read more about other storage topics in his latest book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.