Improving WAN Throughput

Wes Simpson

In this column a few years ago, we discussed how the end-to-end delay of a network can have a serious impact on the amount of data that can be transferred across the link. In a nutshell, longer delays mean lower data-throughput rates, due primarily to the amount of time it takes to acknowledge good or bad data transfers.

To understand this, it is important to remember that Transmission Control Protocol (TCP) was designed to provide perfect accuracy for data transfer, which is crucial for all sorts of applications, such as banking data, executable files or even highly compressed video content files. To accomplish this, all TCP transfers must be acknowledged by the receiver, to indicate that the correct number of bytes has been received without error. Acknowledgment packets must travel back to the sender, and any in-transit delays will add to the amount of time that the sender must wait before transmitting new data.

CALCULATING TCP THROUGHPUT

The total data throughput rate on a TCP connection depends on several variables, including:

• The round-trip network delay (latency)

• The size of the TCP receive window

• The speed of the data connection between the two ends of the network

Of these three variables, the speed of the data connection often has the least impact on the overall data throughput rate.

To understand this, consider an example with a round-trip delay of 20 milliseconds (such as between New York and Chicago) and a 64 kB receive window, the maximum size allowed by the basic IETF standard (RFC 793). With a 100 Mbps Fast Ethernet connection, the maximum possible throughput would be 20,542 kbps.

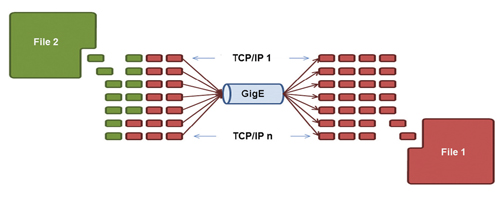

Fig. 1: Using multiple simultaneous TCP/IP connections over a single network

With a 1,000 Mbps Gigabit Ethernet connection, the maximum throughput increases to only 25,510 kbps. This slow performance (relative to the link speed) is due to the amount of time that the sender must wait to receive acknowledgements before sending new data.
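The arithmetic behind figures like these can be approximated with a short sketch. The model below is an assumption made for illustration, not necessarily the method used to produce the numbers above: the sender puts one full window on the wire, then waits one round trip for the acknowledgement. The function name and the choice of 65,536 bytes for "64 kB" are also assumptions. Because the model ignores protocol overhead, its results land close to, but not exactly on, the quoted values.

```python
def window_limited_throughput_bps(window_bytes, rtt_s, link_bps):
    """Estimate throughput when the sender sends one window, then waits for an ACK.

    Simplified model (an assumption): time per window equals the time needed
    to serialize the window onto the link plus one network round trip.
    """
    window_bits = window_bytes * 8
    serialization_s = window_bits / link_bps
    return window_bits / (serialization_s + rtt_s)


RTT_S = 0.020              # 20 ms round trip, as in the New York to Chicago example
WINDOW_BYTES = 64 * 1024   # 64 kB receive window, taken here as 65,536 bytes

for name, link_bps in [("Fast Ethernet", 100e6), ("Gigabit Ethernet", 1000e6)]:
    rate = window_limited_throughput_bps(WINDOW_BYTES, RTT_S, link_bps)
    print(f"{name}: roughly {rate / 1e6:.1f} Mbps")
```

The point the sketch makes is the same as the text: multiplying the link speed by ten changes the result only slightly, because the 20 ms wait dominates the time spent on each window.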

RFC 1323 AND LARGER TCP WINDOWS

In 1992, the IETF published RFC 1323 as a revision to the TCP standard that permits larger receive windows (up to almost a gigabyte) to be used for data transfer. This change allows greatly increased throughput on high-bandwidth networks and on those with long delays. With a larger receive window, the sender can transmit more data before it has to wait for an acknowledgement.

In the above New York to Chicago example, if the receive window is increased to 1,024 kB, the Fast Ethernet throughput increases to 77 Mbps and the GigE throughput increases to 290 Mbps.
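A common rule of thumb for sizing the receive window is the bandwidth-delay product: the link rate multiplied by the round-trip delay, which is the amount of data that can be in flight during one round trip. The article does not use this term, so the sketch below is offered as a supplementary calculation under that standard definition, with a hypothetical function name.

```python
def bandwidth_delay_product_bytes(link_bps, rtt_s):
    """Bytes in flight needed to keep the link busy for one full round trip."""
    return link_bps * rtt_s / 8


RTT_S = 0.020  # 20 ms round trip, as in the New York to Chicago example

for name, link_bps in [("Fast Ethernet", 100e6), ("Gigabit Ethernet", 1000e6)]:
    bdp = bandwidth_delay_product_bytes(link_bps, RTT_S)
    print(f"{name}: about {bdp / 1000:.0f} kB in flight to fill the link")
```

For the 20 ms path this works out to about 250 kB at 100 Mbps and about 2,500 kB at 1,000 Mbps. A 1,024 kB window is therefore still well short of what the Gigabit Ethernet link can absorb, which is consistent with the GigE throughput staying far below line rate.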

However, this comes with a potential risk: if even one packet is lost out of the total receive window, the entire window full of data might need to be retransmitted.

Another way to improve the utilization of high-speed data connections is to deploy multiple simultaneous TCP/IP connections over a single link. This approach requires greater intelligence on each end of the link, but it can deliver throughput exceeding 95 percent of the total link capacity. Fig. 1 shows how this works, with several blocks of data being delivered in parallel over a single physical connection.
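The "greater intelligence on each end" can be pictured as a striping step on the sending side and a reassembly step on the receiving side. The sketch below is a minimal illustration of that idea only: it uses in-memory lists in place of real TCP connections, and the function names, block size and connection count are all assumptions rather than details of any particular product.

```python
def stripe(data: bytes, block_size: int, n_connections: int):
    """Split data into numbered blocks and deal them round-robin to the connections."""
    queues = [[] for _ in range(n_connections)]
    for seq, offset in enumerate(range(0, len(data), block_size)):
        block = data[offset:offset + block_size]
        queues[seq % n_connections].append((seq, block))
    return queues


def reassemble(queues):
    """Merge blocks from every connection and restore order by sequence number."""
    blocks = [item for queue in queues for item in queue]
    blocks.sort(key=lambda item: item[0])
    return b"".join(block for _, block in blocks)


payload = bytes(range(256)) * 40           # 10,240 bytes of sample data
queues = stripe(payload, block_size=1024, n_connections=4)
restored = reassemble(queues)
print(len(queues), "connections,", "match" if restored == payload else "mismatch")
```

Each connection still waits for its own acknowledgements, but while one is waiting the others can be sending, so the link spends far less time idle.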

BLOCK VS. FILE TRANSFER

Two principal data models are used in most file storage systems today: block-level storage and file-level storage. Block-level storage is used in Storage Area Networks (SANs) and relies on the requesting device (typically a server) to know the individual addresses of each block of data (typically 512 bytes to 8 kB, but other sizes are permitted by the standard).

File level storage is used in Network Attached Storage (NAS) and allows the requesting device to simply ask for files by name, which causes the file storage system to transfer the entire data file to the requesting device. Block-level storage is usually implemented using SCSI protocol links over Fibre Channel or Ethernet physical layers. File-level storage can be implemented using a variety of file systems, such as CIFS or NFS via IP connections.

This same data-transfer interface dichotomy applies to data transport systems; they can connect to data sources and destinations using either SCSI connections or file-level connections. When file-level connections are used, the transport system copies entire files across the wide-area connection. When block-level interfaces are used, the transport system can copy only the new or recently modified data blocks.

This approach is ideal for large data sets such as video content stored on tape or disk, where only small portions of a file might be modified at any time (such as when footage is edited into a ready-to-air program).

Direct SCSI interfaces are particularly useful for providing mirrored backup of SAN arrays over a wide-area network because any changes in the source system can be rapidly propagated to the remote system simply by transferring the blocks that have changed.
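The idea of transferring only the changed blocks can be sketched as follows. This is an illustration under stated assumptions, not a description of how any SAN replication product works: it detects changes by comparing SHA-256 digests of fixed-size blocks, whereas real systems may track writes directly. The helper names and the 512-byte block size are hypothetical, and the source and mirror are assumed to be the same size.

```python
import hashlib

BLOCK_SIZE = 512  # bytes; illustrative only


def block_digests(volume: bytes, block_size: int = BLOCK_SIZE):
    """Return one SHA-256 digest per fixed-size block of the volume."""
    return [hashlib.sha256(volume[i:i + block_size]).digest()
            for i in range(0, len(volume), block_size)]


def changed_blocks(source: bytes, mirror_digests, block_size: int = BLOCK_SIZE):
    """List (block_index, data) for source blocks whose digest differs from the mirror's."""
    delta = []
    for index, digest in enumerate(block_digests(source, block_size)):
        if digest != mirror_digests[index]:
            start = index * block_size
            delta.append((index, source[start:start + block_size]))
    return delta


def apply_blocks(mirror: bytearray, delta, block_size: int = BLOCK_SIZE):
    """Write the transferred blocks into the mirror at their original offsets."""
    for index, data in delta:
        start = index * block_size
        mirror[start:start + len(data)] = data


source = bytearray(8 * BLOCK_SIZE)          # eight empty blocks
mirror = bytearray(source)                  # remote copy starts identical
source[3 * BLOCK_SIZE + 10] = 0xFF          # an edit that touches block 3 only

delta = changed_blocks(bytes(source), block_digests(bytes(mirror)))
apply_blocks(mirror, delta)
print("blocks sent:", [index for index, _ in delta])
```

In this example only one of the eight blocks crosses the wide-area link, where a file-level transfer would have copied all eight.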

One design limitation of a SCSI-based system is the tight set of timing tolerances used in standard implementations. This constraint makes it even more important that the acceleration system makes full use of the bandwidth of the WAN to transport data blocks as quickly as possible.

A properly engineered system that includes the capability of adapting to changes in the network environment can maximize total data throughput across a wide variety of network configurations.

Note: Thanks to Pete Gilchriest of ARG ElectroDesign and David Trossell for their helpful comments on this article.

Wes Simpson is an industry consultant and author of “Video Over IP, Second Edition,” from Focal Press. Your comments are welcome to wes.simpson@gmail.com.

Wes Simpson is President of Telecom Product Consulting, an independent consulting firm that focuses on video and telecommunications products. He has 30 years experience in the design, development and marketing of products for telecommunication applications. He is a frequent speaker at industry events such as IBC, NAB and VidTrans and is author of the book Video Over IP and a frequent contributor to TV Tech. Wes is a founding member of the Video Services Forum.