Building a scalable MAM system

For a media asset management (MAM) system to be truly valuable, it must offer much more than a repository for content with associated metadata and storage services. In today’s file-based, software-orientated world, a MAM system must offer services for content processing and manipulation. In doing so, it orchestrates people and wider enterprise resources. Media processing is key to putting content to work in the world of post production, broadcast and distribution. Such processing can happen outside a MAM system or inside it as an integrated system. Either way, processing high volumes of large media files requires careful thought.

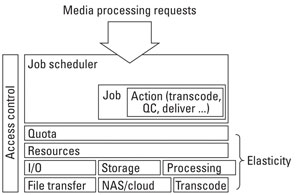

Figure 1. The key to scalability, extensibility and powerful media management is the interaction of actions, jobs, resources and quotas. These concepts and components make up an enterprise media processing system.

This article covers some useful software engineering approaches for building scalable MAM systems. The main focus is on the key concepts and components that must come together in an enterprise media processing system. (See Figure 1.)

Multi-tenancy

For a MAM system to be truly useful, every object and component must work within a multi-tenanted environment and, therefore, support access control and ownership. This is a critical requirement, and its importance will become clear throughout the article.

Jobs and actions

An action is a unit of work or software plug-in that is executed against an asset or group of assets. It is the fundamental building block for media processing. An action has a type that defines the kind of work it carries out. It can be a general-purpose file action, such as copy and move, or a media-centric action, such as transcode, QC, package or deliver.


The key to the extensibility of this paradigm is that new action types, or software interfaces, can be created. To create new types of transcodes or QCs, for example, one simply implements the related action type interface to change the underlying behavior. For a Deliver Action, one might develop concrete action adaptors for delivering to Dailymotion and YouTube, or to a broadcast system.
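As an illustration, the action-type idea might be sketched in Python as an abstract interface with concrete adaptors. The class names (`Asset`, `Action`, `DeliverAction`, `YouTubeDeliverAction` and so on) are hypothetical, and the adaptors only return a log line rather than calling any real platform API.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import List


@dataclass
class Asset:
    """Hypothetical minimal asset record: an identifier and a file location."""
    asset_id: str
    path: str


class Action(ABC):
    """A unit of work executed against an asset or group of assets."""

    action_type = "generic"

    @abstractmethod
    def execute(self, assets: List[Asset]) -> List[str]:
        """Run the action and return one log line per asset."""


class DeliverAction(Action):
    """Deliver action type; concrete adaptors choose the destination."""

    action_type = "deliver"
    destination = "unspecified"

    def execute(self, assets: List[Asset]) -> List[str]:
        # A real adaptor would call the destination platform's upload API here;
        # this sketch only records what it would have delivered.
        return [f"delivered {a.asset_id} to {self.destination}" for a in assets]


class YouTubeDeliverAction(DeliverAction):
    destination = "YouTube"


class DailymotionDeliverAction(DeliverAction):
    destination = "Dailymotion"


class BroadcastDeliverAction(DeliverAction):
    destination = "broadcast playout"
```

Adding a new destination means adding one small subclass; the job and scheduling layers only ever deal with the `Action` interface.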

As a unit of work, the action must run within a runtime environment. In the case of media processing, this requires careful consideration as actions are often expected to run for an extended period. With this in mind, any runtime environment must be asynchronous. It must also be transactional to enable rollback from failed media processing actions such as transcodes, file moves and copies.
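A minimal sketch of an asynchronous, transactional runtime, assuming Python 3.9+ for `asyncio.to_thread`. Each step records whether it completed and knows how to undo itself; if a later step fails, the completed steps are rolled back in reverse order. `MoveStep`, `run_transaction` and `demo` are hypothetical names, and a production system would also need to persist its transaction log so that rollback survives a crash.

```python
import asyncio
import os
import shutil
import tempfile
from typing import List


class MoveStep:
    """Hypothetical file-move step that knows how to undo itself."""

    def __init__(self, src: str, dst: str) -> None:
        self.src, self.dst = src, dst
        self.done = False

    def run(self) -> None:
        shutil.move(self.src, self.dst)
        self.done = True

    def rollback(self) -> None:
        if self.done:  # only undo a move that actually completed
            shutil.move(self.dst, self.src)
            self.done = False


async def run_transaction(steps: List[MoveStep]) -> bool:
    """Run blocking steps off the event loop; roll back completed steps on failure."""
    completed: List[MoveStep] = []
    try:
        for step in steps:
            await asyncio.to_thread(step.run)  # long file I/O does not block the loop
            completed.append(step)
        return True
    except OSError:
        for step in reversed(completed):
            step.rollback()
        return False


def demo() -> bool:
    """Move a file, then fail on a missing source; the first move is undone."""
    with tempfile.TemporaryDirectory() as d:
        original = os.path.join(d, "clip.mxf")
        open(original, "w").close()
        steps = [MoveStep(original, os.path.join(d, "moved.mxf")),
                 MoveStep(os.path.join(d, "missing.mxf"), os.path.join(d, "x.mxf"))]
        ok = asyncio.run(run_transaction(steps))
        return (not ok) and os.path.exists(original)
```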

The job is the fundamental wrapper for the action, bringing wider services such as state, transactional integrity, priority and timing. A job incorporates a requirement for access to resources of a given type. In addition, a job points to a type of action and is configured to run at a certain time and with a certain priority. Jobs can be persisted in a database so that the state of each job can be retained in perpetuity for auditing and reporting purposes. Retaining its state also means a failed job can be retried, rescheduled and reprioritized if required.
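One way to model a persisted job is sketched below, with Python's built-in `sqlite3` module standing in for the job store. The column names, the plain-string states and the rule that a higher `priority` number runs first are assumptions for illustration only.

```python
import sqlite3
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

SCHEMA = """CREATE TABLE IF NOT EXISTS jobs (
    job_id INTEGER PRIMARY KEY, action_type TEXT, resource_type TEXT,
    priority INTEGER, run_at TEXT, state TEXT, attempts INTEGER)"""


@dataclass
class Job:
    job_id: int
    action_type: str    # the action this job wraps, e.g. "transcode"
    resource_type: str  # resource type the job needs, e.g. "processing/transcode"
    priority: int       # assumption: higher value runs first
    run_at: datetime    # earliest time the job may start
    state: str = "pending"
    attempts: int = 0

    def retry(self, now: datetime, delay: timedelta,
              priority: Optional[int] = None) -> None:
        """Reschedule a failed job, optionally reprioritizing it."""
        if self.state != "failed":
            raise ValueError("only failed jobs can be retried")
        self.state = "pending"
        self.run_at = now + delay
        if priority is not None:
            self.priority = priority


def save(conn: sqlite3.Connection, job: Job) -> None:
    """Persist the job's current state, overwriting any earlier row."""
    conn.execute(
        "INSERT OR REPLACE INTO jobs VALUES (?, ?, ?, ?, ?, ?, ?)",
        (job.job_id, job.action_type, job.resource_type, job.priority,
         job.run_at.isoformat(), job.state, job.attempts))


def load(conn: sqlite3.Connection, job_id: int) -> Job:
    """Rebuild a job from its persisted row."""
    row = conn.execute("SELECT * FROM jobs WHERE job_id = ?", (job_id,)).fetchone()
    return Job(row[0], row[1], row[2], row[3],
               datetime.fromisoformat(row[4]), row[5], row[6])
```

Because every state change is written back through `save`, the table doubles as the audit trail the text describes.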

Given that media processing is resource-intensive and that such jobs can last for an extended period, a job is run in its own execution context by a job scheduler. A scheduler is responsible for preventing jobs from interfering with each other. If jobs are allowed to contend for resources, they will generally decrease the performance of the cluster, delay the execution of these jobs and possibly cause one or more of the jobs to fail. The scheduler is responsible for internally tracking and dedicating requested resources to a job, thus preventing use of these resources by other jobs. When clusters or other high-performance computing (HPC) platforms are created, they are typically created for one or more specific purposes.
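The scheduler’s bookkeeping for dedicated resources could look like the following minimal sketch. `ResourcePool` is a hypothetical name; it hands each free resource to at most one job and makes a job wait, rather than contend, when no resource of the requested type is free.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ResourcePool:
    """Tracks which resources of each type are free or dedicated to a job."""
    free: Dict[str, List[str]]  # resource type -> ids of free resources
    allocated: Dict[str, str] = field(default_factory=dict)  # resource id -> job id

    def allocate(self, job_id: str, resource_type: str) -> Optional[str]:
        """Dedicate one free resource to the job, or return None if none is free."""
        pool = self.free.get(resource_type, [])
        if not pool:
            return None  # the job waits instead of sharing a busy resource
        resource_id = pool.pop()
        self.allocated[resource_id] = job_id
        return resource_id

    def release(self, resource_id: str, resource_type: str) -> None:
        """Return a resource to the free pool when its job finishes."""
        del self.allocated[resource_id]
        self.free.setdefault(resource_type, []).append(resource_id)
```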

The job scheduler polls the jobs residing in the underlying job store (database) and executes them in an execution context. This context is injected into the job, thus allowing a running job to have access to systemwide services such as logging and system state.
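A single polling pass with an injected execution context might be sketched as follows. `ExecutionContext`, `StoredJob` and `poll_once` are hypothetical; for brevity the sketch runs due jobs sequentially in-process, whereas a real scheduler would give each job its own thread or process.

```python
import logging
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, Dict, List


@dataclass
class ExecutionContext:
    """Systemwide services injected into each running job (hypothetical)."""
    logger: logging.Logger
    system_state: Dict[str, str] = field(default_factory=dict)


@dataclass
class StoredJob:
    job_id: str
    run_at: datetime
    priority: int
    work: Callable[[ExecutionContext], None]
    state: str = "pending"


def poll_once(store: List[StoredJob], context: ExecutionContext,
              now: datetime) -> List[str]:
    """Run every due pending job, highest priority first; return the ids run."""
    due = [j for j in store if j.state == "pending" and j.run_at <= now]
    ran: List[str] = []
    for job in sorted(due, key=lambda j: -j.priority):
        job.state = "running"
        try:
            job.work(context)  # the job reaches logging and state via the context
            job.state = "succeeded"
        except Exception:
            context.logger.exception("job %s failed", job.job_id)
            job.state = "failed"
        ran.append(job.job_id)
    return ran
```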

In any given scheduling iteration, many activities take place. These are broken into the following categories:

- Update state information. During each iteration, the scheduler contacts the resource manager(s) and requests up-to-date information on compute resources, workload and policy configuration.

- Refresh reservations.

- Schedule reserved jobs.

- Schedule priority jobs. In scheduling jobs, multiple steps occur.

- Backfill jobs.

- Update statistics.

- Handle user requests. User requests include any call requesting state information, configuration changes, or job or resource manipulation commands. These requests may come in the form of user client calls, peer daemon calls or process signals.
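The iteration above might be sketched as follows. This is a deliberately simplified, hypothetical single-resource model: capacity is counted in abstract slots, refreshing reservations and handling user requests are omitted, and the backfill step simply lets lower-priority jobs use slots that the blocked higher-priority job cannot use. Production backfill schedulers also use runtime estimates so that backfilled jobs never delay the blocked job’s start.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class QueuedJob:
    job_id: str
    priority: int
    slots: int              # units of resource the job needs (assumption)
    reserved: bool = False  # holds an advance reservation


def scheduling_iteration(queue: List[QueuedJob],
                         get_free_slots: Callable[[], int],
                         stats: Dict[str, int]) -> List[str]:
    """One simplified scheduling pass; returns the ids of jobs started."""
    free = get_free_slots()  # update state information from the resource manager
    started: List[str] = []

    def start(job: QueuedJob) -> None:
        nonlocal free
        free -= job.slots
        started.append(job.job_id)

    # Schedule reserved jobs first.
    for job in queue:
        if job.reserved and job.slots <= free:
            start(job)

    # Schedule priority jobs in strict order; stop at the first that does not fit.
    waiting = sorted((j for j in queue if not j.reserved), key=lambda j: -j.priority)
    blocked_at = len(waiting)
    for i, job in enumerate(waiting):
        if job.slots > free:
            blocked_at = i
            break
        start(job)

    # Backfill: lower-priority jobs that fit may use otherwise idle slots.
    for job in waiting[blocked_at + 1:]:
        if job.slots <= free:
            start(job)

    stats["started"] = stats.get("started", 0) + len(started)  # update statistics
    return started
```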

Resources and quotas

Most media processing jobs require access to other systems and software to carry out meaningful work. For example, moving a file requires access to a storage resource, and transcoding a file requires access to transcode hardware and software. So the key to enabling scale is to understand the availability of resources.

The resource object, like an action, has a type, such as processing, storage or I/O. Each type has subtypes, such as transcode. For example, processing resources could include transcoding and QC, and I/O resources could include network services for file transfer. Each subtype can then be implemented for various specific resources. For transcoding, the resource implementation would be a specialized software adaptor that delegates transcode requests to a transcode engine.
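The type/subtype/adaptor layering could be sketched like this. `Resource`, `TranscodeResource` and the `engine_name` parameter are hypothetical, and `submit` merely queues the request locally where a real adaptor would translate it into a specific transcode engine’s own job API.

```python
from abc import ABC, abstractmethod
from enum import Enum
from typing import Dict, List


class ResourceType(Enum):
    PROCESSING = "processing"
    STORAGE = "storage"
    IO = "io"


class Resource(ABC):
    """A resource has a type and a subtype; concrete classes are adaptors."""
    resource_type: ResourceType
    subtype: str

    @abstractmethod
    def submit(self, request: Dict[str, str]) -> str:
        """Delegate a request to the underlying system and return a handle."""


class TranscodeResource(Resource):
    resource_type = ResourceType.PROCESSING
    subtype = "transcode"

    def __init__(self, engine_name: str) -> None:
        self.engine_name = engine_name
        self.submitted: List[Dict[str, str]] = []

    def submit(self, request: Dict[str, str]) -> str:
        # Placeholder for a call into the engine's API; here we only record it.
        self.submitted.append(request)
        return f"{self.engine_name}-job-{len(self.submitted)}"
```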

The benefit of the resource adaptor model is that it provides an elegant interface to the job scheduling layer, where all available resources and resource types are made available for consumption. This is where multi-tenancy comes in, as one needs to control access to resources to ensure that files, folders, transcodes, etc., can only be used by named users or roles.
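A minimal sketch of per-tenant access control over the resource registry, assuming each resource belongs to one tenant and lists the named users and roles allowed to use it. `ResourceAcl` and `visible_resources` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Set


@dataclass
class ResourceAcl:
    owner_tenant: str
    allowed_users: Set[str] = field(default_factory=set)
    allowed_roles: Set[str] = field(default_factory=set)

    def permits(self, tenant: str, user: str, roles: FrozenSet[str]) -> bool:
        if tenant != self.owner_tenant:
            return False  # tenants never see each other's resources
        return user in self.allowed_users or bool(self.allowed_roles & roles)


def visible_resources(registry: Dict[str, ResourceAcl], tenant: str,
                      user: str, roles: FrozenSet[str]) -> List[str]:
    """Resources the scheduler may offer to this user's jobs."""
    return sorted(name for name, acl in registry.items()
                  if acl.permits(tenant, user, roles))
```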

So with the use of actions, jobs and resources, you have a layered and extensible approach to managing media assets. You can create new action types and use a whole range of networked resources for supporting all kinds of media management tasks.

However, with a resource-hungry job scheduler executing potentially thousands of jobs, how do we make the most of our expensive resources? How do we ensure that jobs aren’t starved of resources and that resources don’t sit idle? The compute power of a resource is limited, and over time, demand will inevitably exceed supply. Intelligent scheduling decisions can significantly improve the effectiveness of media processing, with more jobs run and quicker turnaround. Subject to the constraints of the traffic control and mission policies, it is the scheduler’s job to use whatever freedom is available to schedule jobs in a way that maximizes system performance.

The missing piece in controlling job access to resources and, therefore, making the most of the resources available, is the quota. One might assume that the only parameters that are useful for orchestrating access to a resource are named user, priority, start time and end time. All are useful, but they are far too coarse-grained for advanced scheduling.

A quota encapsulates a user’s access to a resource. The rules governing that access are retained within the quota, making the architecture pluggable and configurable. Remember the importance of multi-tenancy?

A quota is abstract in the sense that it encapsulates different rules for different resource types.

For example, in the case of storage or folder resources, a quota represents the total storage allowance (in gigabytes) for a given user. It may contain a threshold for raising alerts when the user is close to reaching their limit.
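An abstract quota with a storage implementation might be sketched as follows. The `Quota` base class, the `check` method returning an (allowed, message) pair, and the 90% alert threshold are all assumptions chosen for illustration.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Tuple


class Quota(ABC):
    """A user's access to one resource; each resource type supplies its own rules."""

    @abstractmethod
    def check(self, request_amount: float) -> Tuple[bool, str]:
        """Return (allowed, message) for a proposed use of the resource."""


@dataclass
class StorageQuota(Quota):
    user: str
    limit_gb: float
    used_gb: float = 0.0
    alert_fraction: float = 0.9  # assumption: alert at 90% of the allowance

    def check(self, request_amount: float) -> Tuple[bool, str]:
        projected = self.used_gb + request_amount
        if projected > self.limit_gb:
            return False, f"{self.user}: request exceeds {self.limit_gb} GB allowance"
        if projected >= self.alert_fraction * self.limit_gb:
            return True, f"{self.user}: nearing storage limit"
        return True, "ok"
```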

In the case of network resources, quotas can be allocated in terms of bandwidth. This might be a consistent rate, contended per session or throttled at times of the day.
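A bandwidth quota combining a time-of-day cap with per-session contention could be sketched like this. `BandwidthQuota`, its rates and the 09:00 to 18:00 peak window are hypothetical, and the window is assumed not to wrap past midnight.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class BandwidthQuota:
    base_mbps: float            # the user's consistent allocation
    peak_mbps: float            # reduced allocation during peak hours
    peak_start: time = time(9)  # assumption: peak runs 09:00-18:00
    peak_end: time = time(18)

    def allowed_mbps(self, at: time, active_sessions: int = 1) -> float:
        """Rate for one session: time-of-day cap shared among concurrent sessions."""
        in_peak = self.peak_start <= at < self.peak_end
        cap = self.peak_mbps if in_peak else self.base_mbps
        return cap / max(active_sessions, 1)
```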

In the case of processing jobs such as transcoding, this becomes much harder to implement as the time taken for a transcode job can depend on the input format, the output format and a huge range of transcode parameters.

In the case of processing a transcode resource request, the quota may throttle total jobs per user or total concurrent jobs per user. Again, the quota may be configured or implemented to allow access at certain times of day.
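Throttling by total jobs, concurrent jobs and an access window might be sketched as below. `TranscodeQuota` and its parameters are hypothetical, and the window is again assumed not to cross midnight.

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class TranscodeQuota:
    max_total_jobs: int
    max_concurrent_jobs: int
    window_start: time = time(0)
    window_end: time = time(23, 59, 59)
    submitted: int = 0
    running: int = 0

    def try_start(self, at: time) -> bool:
        """Admit a transcode job if the window, total and concurrency rules allow it."""
        if not (self.window_start <= at <= self.window_end):
            return False
        if self.submitted >= self.max_total_jobs:
            return False
        if self.running >= self.max_concurrent_jobs:
            return False
        self.submitted += 1
        self.running += 1
        return True

    def finish(self) -> None:
        """Free a concurrency slot when a job completes."""
        self.running = max(0, self.running - 1)
```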

The beauty of a pluggable model is that access to precious processing resources can be throttled in an unlimited number of ways. A quota could look up access to another system to see if a user has paid his or her bill or whether he or she is a bronze, gold or platinum user. Bringing cloud technologies into the quota and resource layer enables the concept of elastic computing. This makes resource scheduling much easier, as the job scheduler can instantiate new resources and tear them down as and when required.
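Both ideas can be sketched briefly. `TierQuota` takes two injected lookups standing in for an external billing or account system, and `instances_to_add` is a simple elastic-scaling rule. The tier limits, the function names and the jobs-per-instance model are hypothetical.

```python
import math
from typing import Callable, Dict

# Assumed per-tier concurrency limits; real values would be business policy.
TIER_LIMITS: Dict[str, int] = {"bronze": 1, "gold": 4, "platinum": 16}


class TierQuota:
    """Quota that consults an external system before granting access."""

    def __init__(self, lookup_tier: Callable[[str], str],
                 is_paid_up: Callable[[str], bool]) -> None:
        self.lookup_tier = lookup_tier
        self.is_paid_up = is_paid_up

    def concurrent_limit(self, user: str) -> int:
        if not self.is_paid_up(user):
            return 0  # unpaid accounts get no processing slots
        return TIER_LIMITS.get(self.lookup_tier(user), 0)


def instances_to_add(queued_jobs: int, running_instances: int,
                     jobs_per_instance: int, max_instances: int) -> int:
    """Positive: launch that many cloud instances; negative: tear some down."""
    wanted = min(math.ceil(queued_jobs / jobs_per_instance), max_instances)
    return wanted - running_instances
```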

In summary, the key to scalability, extensibility and powerful media management is the interaction of actions, jobs, resources and quotas. With the work being carried out by the Advanced Media Workflow Association (AMWA), this is a great opportunity for more standardized interfaces in the area of media processing, which can only be good in a large and rich media technology ecosystem.

—Jon Folland is CEO of Nativ.