Non-Volatile Memory Grows in Popularity

Whether baked from silicon hardware or made fluidly agile with software codecs, video servers have become stable, mature and mainstream necessities for media capture, distribution and play-to-air for the broadcast industry. Even though professional broadcast video server manufacturers continue to refine and retune their mission-critical playout applications, we’ve seen only minor changes in the fundamentals of their platforms or architectures over the past three to five years.

The streaming media world offers a different perspective. Streaming media models continually strive to “pump more out of the servers and to feed more connections,” using codec and server advances that outpace earlier developments in professional video servers for linear playout by orders of magnitude.

To put this into perspective, and to be fair, these are really two different playing fields, at least in today’s environments. Yet audiences are shifting, and the two fields are drawing closer as content delivery facilities and end users converge on how video is delivered to any device at any time. Expect traditional video server users to migrate to a multistream, multi-user delivery mode going forward, and expect VOD to become part of the traditional broadcaster’s workflow regardless of the distribution or delivery platform.

The name of the game for Internet-based content is getting massive amounts of varied content to multiple sets of stream engines. The technology premise rests on the need for efficient, low-latency and very fast storage data flow. Internally, the way content (i.e., data files) moves from the storage medium to the server is key to delivering the performance users now expect when clicking on a clip or movie. This means higher bandwidth and lower latency, within a data management architecture that can be replicated consistently as the number of servers needed for delivery grows.
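To make the bandwidth point concrete, here is a short Python sketch that estimates the sustained read rate a storage tier must deliver for a given number of concurrent streams. The stream count and bitrate are hypothetical, and the arithmetic ignores protocol and container overhead, so treat the result as a floor rather than a sizing figure.

```python
def aggregate_bandwidth(streams: int, bitrate_mbps: float) -> tuple:
    """Return (Gbps, MB/s) the storage layer must sustain for `streams`
    concurrent streams, each at `bitrate_mbps` megabits per second.
    Ignores protocol overhead, so this is a lower bound."""
    total_mbps = streams * bitrate_mbps
    gbps = total_mbps / 1000        # decimal units: 1 Gbps = 1,000 Mbps
    mbytes_per_s = total_mbps / 8   # 8 bits per byte
    return gbps, mbytes_per_s

# Hypothetical example: 1,000 concurrent viewers at 5 Mbps each
gbps, mbps = aggregate_bandwidth(1000, 5.0)
print(f"{gbps:.1f} Gbps ~ {mbps:.0f} MB/s")  # 5.0 Gbps ~ 625 MB/s
```

Doubling either the audience or the bitrate doubles the required storage throughput, which is why the storage path, not the CPU, tends to set the limit.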

WHERE THE BOTTLENECKS ARE

In general, enterprise-class commercial off-the-shelf (COTS) servers seem to manage data flow satisfactorily across their internal bus structures. Instead, data access bottlenecks have arisen because the storage medium, and the interface to the storage itself, could not keep up with CPU or GPU demands. That is all changing.

Advances in storage technologies are now reducing this data access bottleneck, and we’re about to see yet another significant change in how the storage medium industry addresses data accessibility, system scalability and overall performance. As in the past, this improvement will be driven in part by the PC market and by high-volume users of laptops, tablets and mobile notebook devices.

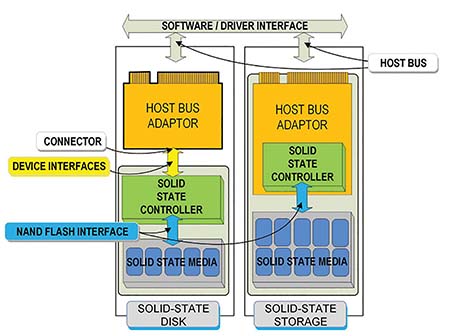

Broadly speaking, “storage technology” comprises three primary element groups: the physical medium, the controller(s), and the interfaces, including the network. In the next generation, we expect fundamental change that will move portions of conventional storage systems away from exclusively magnetic spinning disks and heads toward non-volatile memory (i.e., solid-state drives), either as standalone devices or through a hybrid approach combining SSD and HDD technologies.

THREE FAMILIAR FORMATS

Looking to the future, today’s solid-state drive architectures span at least three familiar physical formats: the all-flash array, the flash cache and the mSATA SSD.

An all-flash array is a solid-state storage system that contains multiple flash memory drives instead of spinning hard disks. Some video server manufacturers offer this concept in their “solid state” server platforms, which bode well when rapid recall and short clip segments make up the majority of your uses. While SSD is very effective performance-wise, it still carries a significant cost penalty. According to a recent publication, SSD pricing is about $0.80 per gigabyte, while spinning disks (HDD) cost about $0.05 per gigabyte, roughly a 16-fold difference.
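The price gap compounds quickly at library scale. Below is a minimal Python sketch using the per-gigabyte figures quoted above. The 100 TB library size is a hypothetical example, and the calculation covers raw media only, not controllers, enclosures, power or redundancy.

```python
SSD_PER_GB = 0.80  # USD per GB, figure quoted in the article
HDD_PER_GB = 0.05  # USD per GB, figure quoted in the article

def media_cost(capacity_tb: float, price_per_gb: float) -> float:
    """Raw media cost in USD, using decimal units (1 TB = 1,000 GB)."""
    return capacity_tb * 1000 * price_per_gb

capacity_tb = 100  # hypothetical clip library size
ssd = media_cost(capacity_tb, SSD_PER_GB)
hdd = media_cost(capacity_tb, HDD_PER_GB)
print(f"SSD ${ssd:,.0f} vs HDD ${hdd:,.0f} ({ssd / hdd:.0f}x)")
# SSD $80,000 vs HDD $5,000 (16x)
```

The ratio stays at 16x regardless of capacity, which is why all-flash designs tend to be reserved for workloads where recall speed justifies the premium.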

Flash cache is the temporary storage of data on NAND flash memory chips so that requests for that data can be fulfilled faster. It accelerates the recall of commonly called routines or applications that, heretofore, had to reside solely on spinning disks. Flash caches can be built into metadata controllers and database caches, as well as into some storage disk arrays offered by high-performance storage system providers.
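The basic idea of a flash cache can be sketched as a least-recently-used (LRU) read cache sitting in front of slower disk. This Python example is illustrative only. The `FlashCache` class and its `backing_read` callback are hypothetical names, capacity is counted in items rather than bytes, and commercial products use their own, often more elaborate, admission and eviction policies.

```python
from collections import OrderedDict
from typing import Callable

class FlashCache:
    """Hypothetical LRU read cache standing in front of a slower store.
    `backing_read` stands in for a read from spinning disk."""

    def __init__(self, capacity: int, backing_read: Callable[[str], bytes]):
        self.capacity = capacity
        self.backing_read = backing_read
        self._entries = OrderedDict()  # key -> cached bytes, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, key: str) -> bytes:
        if key in self._entries:
            self._entries.move_to_end(key)     # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        data = self.backing_read(key)          # slow path: go to disk
        self._entries[key] = data
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used
        return data

# Repeated requests for a popular clip are served from flash.
cache = FlashCache(capacity=2, backing_read=lambda k: f"<{k} from HDD>".encode())
for clip in ["promo", "news", "promo", "promo", "weather", "news"]:
    cache.read(clip)
print(cache.hits, cache.misses)  # 2 4
```

The benefit depends entirely on access patterns: frequently recalled items stay in flash, while a stream of one-off requests simply churns the cache.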

At a smaller, discrete scale is the mSATA (mini-connector serial ATA) SSD, a trademarked solid-state drive used in portable, space-constrained devices such as laptops and netbooks. mSATA SSDs come in capacities ranging from 128 GB to 256 GB, in a flat, card-like form factor (a full-size module measures roughly 30 mm × 51 mm) that is typically mounted to the PC board through the mini-connector. The mSATA SSD provides good performance, high capacity and a relatively low price.

The mSATA specifications, which began development in 2009 under the guidance of the Serial ATA International Organization (SATA-IO), support transfer rates of 1.5 Gbps (SATA 1.0) and 3.0 Gbps (SATA 2.0), while conventional SATA specifications from 2010–2011 reached SATA 3.0 and 6 Gbps transfer rates. Like USB 3.0, the 6 Gbps SATA technology was all new and required new motherboard interfaces to take full advantage of its benefits.
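A note on those rates: SATA uses 8b/10b line encoding, so every data byte occupies 10 bits on the wire and the payload ceiling is the line rate divided by 10 rather than by 8. The Python sketch below applies that rule. The 50 GB file size is a hypothetical example, and real drives land somewhat below these ceilings once command overhead is included.

```python
SATA_LINE_RATES_GBPS = {"SATA 1.0": 1.5, "SATA 2.0": 3.0, "SATA 3.0": 6.0}

def payload_mb_per_s(line_rate_gbps: float) -> float:
    """Peak payload rate in MB/s. 8b/10b encoding sends 10 line bits per
    data byte, so divide the line rate (in Mbps) by 10, not by 8."""
    return line_rate_gbps * 1000 / 10

def transfer_seconds(size_gb: float, line_rate_gbps: float) -> float:
    """Best-case time to move `size_gb` (decimal GB) at the interface ceiling."""
    return size_gb * 1000 / payload_mb_per_s(line_rate_gbps)

for name, rate in SATA_LINE_RATES_GBPS.items():
    print(f"{name}: {payload_mb_per_s(rate):.0f} MB/s, "
          f"50 GB in {transfer_seconds(50, rate):.0f} s")
```

Each generation halves the best-case transfer time, which matters when moving camera files from field capture to an editorial system.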

Solid-state hybrid drives (SSHD) entered the market a few years ago when Seagate introduced a 2.5-inch form factor for laptops. An SSHD pairs a small amount of NAND flash with a conventional spinning drive and keeps frequently accessed data in the flash. Although primarily focused on the PC market, SSHDs are gradually making their way into the workstation and server domains. The SSHD product line provides a conduit for field or stage capture that permits quick, secure transfer to larger editorial systems.
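Whether a hybrid drive behaves more like flash or more like disk depends on how often requests hit the NAND portion. The expected access time is a simple weighted average, sketched below in Python. The 0.1 ms and 10 ms latencies are hypothetical round numbers, not measurements of any Seagate product, and the drive firmware's choice of which blocks to keep in flash is not modeled.

```python
def effective_access_ms(hit_rate: float, flash_ms: float, hdd_ms: float) -> float:
    """Expected access time for a hybrid drive: a fraction `hit_rate` of
    requests is served from NAND, the rest from the spinning platter."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * flash_ms + (1.0 - hit_rate) * hdd_ms

# Hypothetical latencies: 0.1 ms from NAND, 10 ms from the platter.
for h in (0.0, 0.5, 0.9, 0.99):
    print(f"hit rate {h:.0%}: {effective_access_ms(h, 0.1, 10.0):.2f} ms")
```

Because the disk term dominates until the hit rate is very high, hybrids shine on repetitive workloads (boot files, frequently opened projects) and help less with large, one-pass reads.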

NON-VOLATILE MEMORY EXPRESS

Coupled with the physical storage medium will be the Non-Volatile Memory Express interface specification, otherwise known as NVMe or “NVM Express.”

NVMe aims to let SSDs make the most effective use of a computer’s high-speed Peripheral Component Interconnect Express (PCIe) bus, an interface that continues to gain popularity. Compared with SATA-based or SAS-based SSDs, the primary benefits of NVMe on PCIe-based SSDs are increased input/output operations per second (IOPS), lower power consumption, and reduced latency through a streamlined I/O stack.
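One reason NVMe raises IOPS is queueing. AHCI, the host interface used by SATA drives, offers a single command queue 32 commands deep, while NVMe allows roughly 64K queues with roughly 64K commands each. Little's law (throughput equals work in flight divided by latency) gives a rough ceiling, sketched in Python below. The 100-microsecond latency and the 256 commands in flight are hypothetical, and real devices see latency rise as queue depth grows, so these are upper bounds, not predictions.

```python
def iops_ceiling(outstanding: int, latency_us: float) -> float:
    """Little's law upper bound: IOPS = commands in flight / per-command latency.
    `latency_us` is the time for one command to complete, in microseconds."""
    if latency_us <= 0:
        raise ValueError("latency must be positive")
    return outstanding * 1_000_000 / latency_us

LATENCY_US = 100.0  # hypothetical device latency, held constant for both cases

# AHCI caps a SATA drive at 32 commands in flight; NVMe can keep far more queued.
print(f"AHCI, 32 in flight:  {iops_ceiling(32, LATENCY_US):,.0f} IOPS")
print(f"NVMe, 256 in flight: {iops_ceiling(256, LATENCY_US):,.0f} IOPS")
```

Holding latency fixed isolates the effect of queue depth; in practice NVMe also trims per-command software overhead, which lowers the latency term itself.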

In recent announcements, NVMe support is being extended to high-performance compute servers used for transactional and database applications. NVM Express Inc., a consortium of vendors in the storage market, is initiating an effort to standardize NVMe over fabrics. While still at the prototype stage, this approach to solid-state storage targets flash appliances that could use fabrics (e.g., InfiniBand or Ethernet with RDMA), which may be more appropriate than PCIe for such deployments.

For broadcast systems such as asset managers, these additions should meld nicely into the SQL database engines or search engine modules used in the MAM or PAM. The result should be faster seek times for clips, as well as reduced size, power and heat when using SSDs versus spinning HDDs. Metadata storage and metadata controllers may also benefit from NVMe on PCIe or from PCIe RAID implementations.

When building systems to support MAM, streaming engines or similar functional systems, check whether the COTS servers can deploy NVMe and SSD capabilities. Also check whether the MAM vendor or search engine developer has taken advantage of these newer technologies to improve efficiency, reduce latency and increase throughput.
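On a Linux-based COTS server, one quick way to see whether NVMe devices are present is to look under sysfs. The Python sketch below assumes the kernel exposes controllers under `/sys/class/nvme/` with `model`, `serial` and `firmware_rev` attribute files, as common Linux kernels do; verify the paths on your own distribution. The function name `list_nvme_controllers` is hypothetical, and the check confirms only that the server sees the hardware, not that the MAM or search software makes use of it.

```python
from pathlib import Path

def list_nvme_controllers(sysfs_root: str = "/sys/class/nvme") -> list:
    """Return model/serial/firmware for each NVMe controller found under
    `sysfs_root`. An empty list means none was found (or not Linux)."""
    root = Path(sysfs_root)
    if not root.is_dir():
        return []
    controllers = []
    for ctrl in sorted(root.iterdir()):
        if not ctrl.is_dir():          # entries are normally symlinks to dirs
            continue
        info = {"name": ctrl.name}
        for attr in ("model", "serial", "firmware_rev"):
            f = ctrl / attr
            info[attr] = f.read_text().strip() if f.is_file() else "unknown"
        controllers.append(info)
    return controllers

if __name__ == "__main__":
    found = list_nvme_controllers()
    if not found:
        print("No NVMe controllers visible in sysfs")
    for c in found:
        print(f"{c['name']}: {c['model']} (fw {c['firmware_rev']})")
```

Making the sysfs root a parameter keeps the function testable against a mock directory tree on machines without NVMe hardware.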

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.


Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.