The beginning of a new year is an excellent time to reflect on life and ponder mysteries—such as why we make a big deal out of one year starting as another ends, and whether time is just an imaginary construct in the first place. Of course, it’s also a good time to look back at the tumultuous year that just passed and consider what the new year will bring.


NEXT GEN AUDIO

Next Generation Audio (NGA) topped the list of audio revolutions that made headlines in 2017 while making almost no difference to the end user. That will change in 2018 as ATSC 3.0 begins rolling out to consumers and the age of immersive and personalized television audio begins.

The greatest challenge for the companies tasked with delivering NGA technology will be making the interface user-friendly and as brain-dead easy as television is supposed to be. If they deliver real usability to the masses, we could see a serious resurgence of interest in broadcast television; if they don’t, people will simply turn back to their streaming boxes.

As for the technical side of NGA, it would be wonderful to see immersive audio transform multitudes of living rooms into surround environments, but its greater impact will almost certainly be realized through earbuds and headphones. People already love their individual listening devices, and immersive audio will deliver a very personal and important television audio experience.

STREAMING TV AUDIO

It will be interesting to see how streaming television audio develops this year, especially since streaming has amazing potential as a delivery platform for personal immersive audio. The AES group behind AGOTTVS (Audio Guidelines for Over the Top Television and Video Streaming) has done a fantastic job of setting guidelines for content creators and streaming providers to follow, but whether those guidelines will be followed across the board remains to be seen.

When Congress mandated loudness management through the CALM Act, it had little impact in Silicon Valley, because the law applies to broadcasters and pay-TV distributors rather than streaming services. If streaming audio loudness problems aren’t solved voluntarily, however, it is entirely conceivable that similar legislation could be put in place for streaming.


AOIP

Audio and video-over-IP will continue to grow this year as television facilities add more IP- and IT-based equipment in place of traditional equipment. As this happens, television facilities will begin to look a lot more like IT facilities.

As the amount of IP-based equipment increases, so does the need for engineers with IT skills, while the need for traditional television engineers decreases. That is something everyone working in television engineering needs to keep in mind.

At the same time, SMPTE, AES and other professional organizations continue to develop and refine standards, such as SMPTE ST 2110 and AES67, so that the myriad IP-based television systems can work together and interoperate.

IMPACT OF FCC CHANGES

The FCC was certainly busy last year, and at times its decisions didn’t seem terribly pro-television. On Dec. 14, 2017, the FCC reversed its 2015 Title II Order, which had classified internet service as a public utility, ending the rules widely known as “Net Neutrality.” Prior to the 2015 order, some internet providers were actively throttling traffic of various types.

Now that the order has been reversed, we’ll have to wait and see what impact, if any, this decision will have on our ability to deliver streaming television content to customers.

Of course, 2017 also brought the reality of the spectrum auction and the broadcast frequency repack that follows it, which is moving nearly 1,000 stations to new channels and has caused chaos and confusion throughout the industry.

In the world of wireless microphones, belt packs and IFBs, the 600 MHz spectrum is already gone, and T-Mobile has announced plans to use those frequencies, which it could begin doing at any time.

Between the loss and shuffling of spectrum and the possibility of throttled streaming, it feels like a very bad time to be in the business of delivering television content.

AUGMENTED REALITY

Virtual reality was quite a fixation in 2015 and 2016, and it hasn’t really gone anywhere. But a far more interesting technology, and one that will make big waves this year, is augmented reality. AR doesn’t replace the world around us with something different as VR does; it adds dimension to the world we’re in.

This technology has actually been around for years: there were AR apps for older smartphones, and Pokémon Go is an extreme example of the potential of AR technology.

Augmented reality may prove to be an enormous benefit to healthcare since doctors can have medical information immediately at hand using goggles or glasses, but the potential for augmented television content is there as well.

With consumers already watching content on smart devices, AR is a technology just begging to be utilized. For audio professionals, this area is especially intriguing because AR and VR experiences are hollow without an audio soundscape to accompany the visuals.

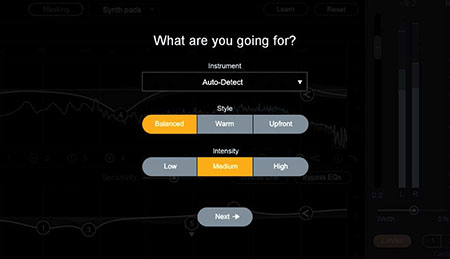

iZotope’s Neutron track assistant

MACHINE LEARNING

Artificial intelligence was the subject of many, many discussions in 2017, and it will continue to be in 2018 as people worry that robots will become sentient beings and take over. Machine learning, a branch of AI, sounds far less ominous, so we’ll use that term instead.

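Machine learning can best be described as building a system that learns from data, rather than following explicitly programmed rules, and then letting it do so. Google’s neural translation system is a striking example: it appears to have developed its own internal representation of language, a kind of shared “interlingua,” that lets it translate between language pairs it was never explicitly trained on.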

The fruits of machine learning have already started to appear in some commercial audio products, but a far broader effort is applying it to all kinds of audio work. Acoustics, signal processing, language and signal analysis, music classification, and signal and source separation are just some of the areas where machine learning is already being applied.

My first inkling that machine learning was in use in audio software came when iZotope released Neutron, its mix analysis and processing tool. Experiments with Neutron’s track assistant have caused me to rethink some final mixes. Machine learning has made its way into several iZotope products, and iZotope is not the only audio company using it. No matter how you feel about it, machine learning is here to stay, so expect to see more of it in 2018.

Jay Yeary is a television engineer and consultant who specializes in audio. He is an AES Fellow and a member of SBE, SMPTE, and TAB. He can be contacted through TV Technology magazine or at transientaudiolabs.com.