The Art of Load Balancing

KARL PAULSEN

Those who design, build or operate video facilities have grown accustomed to employing multiple PCs, workstations and IT servers as part of their systems. In the infant years of digital video, PCs were often used as interfaces much like an A-to-D converter was used to bridge analog video signals into the digital domain.

Computing capabilities and the relatively low quality of video on these early machines prevented them from delivering high-quality, broadcast video for several years. Instead, the PC (or Mac) was utilized as an offline editor that generated EDLs for conforming online video in production suites. This functionality continued until better video compression technologies could be developed.

Enter the 1990s when purpose-built professional video servers arrived. Used first for ingesting and playing back broadcast-quality video, these video servers have now all but replaced videotape transports. Video servers essentially became the first true interface between linear video and nonlinear video at a professional level. The fundamental technologies for video servers were initially used in clip players, offline nonlinear editors, and in sophisticated video compositing production tools like the original Quantel Harry and Abekas A62/A64 products.

In those early digital years, we didn’t worry about overloading the systems; the major concerns related primarily to storage capacity and reliability. At that time, each video server channel handled essentially a single stream fed from a single input port. A BNC connector carrying a single audio/video signal was on the input, with a single video signal exiting on the output side. This model continued until streaming technologies emerged and computer capabilities reached the levels that allowed IT-type products to handle the tasks of delivering compressed video formats such as we have today.

CONVERGING SYSTEMS

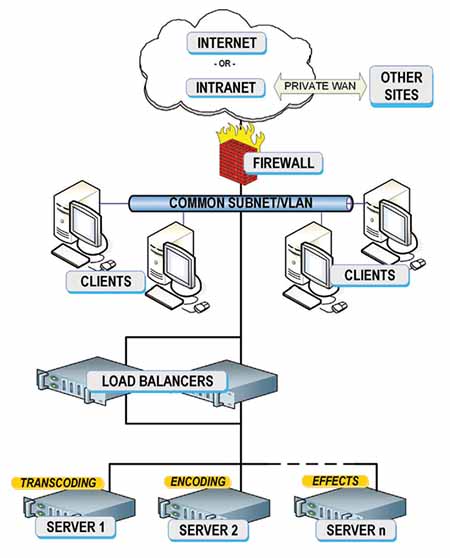

Fig. 1: High-level block diagram of a dual redundant load balancer system for VLANs, public Internet or corporate Intranet networks

The convergence of computers with video technologies was a steady, but not particularly easy, progression. A corollary: when computers were first used to interface between devices, they were essentially deployed for a single dedicated purpose and did not share tasks efficiently. Computers were then used as human interface converters and as data signal processors, which modified serial data streams connecting hosts to clients.

The Media Object Server (MOS) protocol, initially employed for newsroom computer systems (NRCS), is a good example of such a data interface. MOS was intended to be a standard protocol that allowed communications between the NRCS and video servers, audio servers, still stores and character generators. The MOS interface was typically a dedicated PC before it became embedded in the host/client devices or became part of the services residing on an IT server.

The concepts of MOS have grown considerably since the founding meetings of the MOS Protocol development group held at the 1998 Associated Press ENPS developer’s conference in Orlando, Fla. MOS is now one of many methods used for exchanging data between devices and subsystems, which leads to the central topic of this month’s article.

Managing the flow of media content and other data (including metadata) among the server systems found in datacenters, streaming media cores or broadcast technical video infrastructures is far more complicated than what MOS dealt with initially. With multiple signal formats being carried on the same network or the same server, we’ve realized that operational stresses can and will overload (unbalance) these systems. Computers, which once handled only a few discrete services, transitioned to servers that address dozens to hundreds of data streams continuously, often with little or no prioritization as to which service is more important or more time-critical than another.

EXCEEDING THE LIMITS

When the number of streams expected to arrive at a server’s port either reaches the limits of the network interface’s bandwidth or exceeds what the server I/O system can address in terms of input/output operations per second (IOPS), designers add servers to split up those signals or tasks accordingly. Sometimes these servers will handle differing processes, such as database management, transcoding, encoding or stream buffering. Other times the servers are grouped to handle multiple streams performing the same tasks across those servers in parallel.
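The sizing decision described above can be sketched as a small calculation. This is only an illustration: the function name `servers_needed` and all of the figures (stream count, bitrates, IOPS limits) are assumptions chosen for the example, not measurements from any real system.

```python
import math

def servers_needed(streams, mbps_per_stream, nic_mbps, iops_per_stream, server_iops):
    """Return the smallest server count that keeps each server within both limits."""
    # Streams one server can carry before its network interface saturates.
    by_bandwidth = nic_mbps // mbps_per_stream
    # Streams one server can carry before its I/O subsystem saturates.
    by_iops = server_iops // iops_per_stream
    # The tighter of the two limits governs how many streams fit on one server.
    per_server = int(min(by_bandwidth, by_iops))
    if per_server < 1:
        raise ValueError("a single stream exceeds one server's capacity")
    return math.ceil(streams / per_server)

# Hypothetical figures: 120 streams at 50 Mb/s each, 1 Gb/s interfaces,
# 200 IOPS per stream, 10,000 IOPS per server.
print(servers_needed(120, 50, 1000, 200, 10_000))  # prints 6
```

In this example the network interface is the limiting factor (20 streams per server), so the I/O budget never comes into play. In practice a designer would also leave headroom for peak periods rather than size to the exact limit.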

Whenever these tasks must be delegated among a server pool, one or more of the servers tends to become overburdened. Media asset management (MAM) systems, like MOS interfaces for news production systems, typically touch nearly every component in a media system’s architecture. Thus, MAMs are designed to balance loads among their servers so that processing and signal flow bottlenecks won’t occur.

Load-balancing processes also occur in the facility’s command and control systems, which communicate the health and status of the entire system to a centralized monitoring platform (e.g., Grass Valley’s iControl or Evertz’s VistaLINK). Load balancing may also be advisable for systems that manage Web-based distribution, streaming servers or other hosting processes.

A load balancer essentially divides work processes between two or more computers or servers (Fig. 1). The principal concept is that the work gets done in the same amount of time, but without any single server or workstation becoming overloaded. Load balancers also help improve overall system resiliency and fault tolerance.
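One simple way to picture this division of work is a "least-loaded" policy, where each new job goes to whichever server currently has the least work assigned. The sketch below is a minimal illustration of that one policy, not a description of how any particular product works; the job names, costs and server names are hypothetical.

```python
def dispatch(jobs, servers):
    """Assign each (job, cost) pair to the server with the least accumulated work."""
    load = {name: 0 for name in servers}
    assignment = {}
    for job, cost in jobs:
        # Pick the least-loaded server; ties go to the first server listed.
        target = min(load, key=load.get)
        load[target] += cost
        assignment[job] = target
    return assignment, load

# Hypothetical jobs with relative costs in arbitrary units.
jobs = [("transcode-1", 5), ("transcode-2", 3), ("ingest-1", 4), ("ingest-2", 2)]
assignment, load = dispatch(jobs, ["server-a", "server-b"])
print(assignment)
print(load)  # both servers end up with 7 units of work
```

Other common policies, such as round-robin or weighting by server capacity, follow the same pattern with a different rule for choosing `target`.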

CORE NETWORKING SOLUTION

Load balancing is a core networking solution that can be achieved by hardware, software or a combination of both. For load balancing to be effective, it must be agent-less and platform/protocol independent. When network-based, the load balancing function should require no special configurations or clustering. This is because load balancers are positioned ahead of the servers, essentially becoming “traffic cops” that divide the application requests (i.e., loading) appropriately among the servers.

Load balancing also achieves another function: it mitigates single points of failure. Such failures may be as simple as a process slowdown or as severe as a catastrophic failure of server hardware, a network segment collapse or a server shutdown.

In routine operational modes, should an application server become unavailable or too busy, the load balancer directs the traffic to another server in the resource pool. The concepts are not unlike what occurs in the cloud, where users don’t know which server is performing the task. They are only concerned that the assigned tasks complete within the required timeframe and that all requested services continue to be delivered.

Load balancing is a straightforward method of scaling out an application server infrastructure. As demands increase, new servers can be added to the resource pool, and the load balancer can begin sending traffic to them.
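The failover and scale-out behavior described in the last two paragraphs can be sketched with a small resource pool. This is a toy model under stated assumptions: the `Server` and `Pool` classes, the `max_active` threshold for "too busy" and the server names are all hypothetical, and a real load balancer would discover health through periodic probes rather than a flag set by hand.

```python
from dataclasses import dataclass

@dataclass
class Server:
    name: str
    healthy: bool = True
    active: int = 0        # requests currently in flight
    max_active: int = 10   # hypothetical threshold for "too busy"

class Pool:
    def __init__(self, servers):
        self.servers = list(servers)

    def add(self, server):
        """Scale out: a new server becomes eligible for traffic immediately."""
        self.servers.append(server)

    def route(self):
        """Send a request to the least-busy server that is healthy and not saturated."""
        candidates = [s for s in self.servers
                      if s.healthy and s.active < s.max_active]
        if not candidates:
            raise RuntimeError("no server available in the pool")
        chosen = min(candidates, key=lambda s: s.active)
        chosen.active += 1
        return chosen.name

pool = Pool([Server("app-1"), Server("app-2")])
pool.servers[0].healthy = False   # simulate app-1 becoming unavailable
print(pool.route())               # traffic is directed to app-2
pool.add(Server("app-3"))         # demand grows, so a server is added
print(pool.route())               # the idle newcomer, app-3, takes the next request
```

The caller only ever sees the name returned by `route()`, which mirrors the point above that the user does not need to know which server in the pool performs the task.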

In the cloud-based concept, the servers can address the needs or services, yet the user doesn’t know whether a single server is providing a service or the maximum number in the pool. Remember, while the general perception of the cloud is that of an off-premises implementation, the cloud may indeed be a group of servers in the asset management resource pool that is physically located in your data center or central equipment room.

Load balancers can also provide application acceleration. In that role the device is sometimes referred to as an application delivery controller (ADC), and its purpose becomes the optimization of resources alongside the minimization of response times. An ADC can be locally deployed or geographically dispersed. The ADC can be a replacement for traditional load balancers, especially when viewed from the perspective of website (content) availability and scalability.

Regardless of which terminology is applied, the bottom line is that peak performance is achieved when there is a higher fault tolerance for the services that need to be provided. This is just one of the many reasons that, when designing a media management system, you need to provide sufficient resources (i.e., enough servers) and give extra consideration to peak usage periods in order to keep the gears turning smoothly, while limiting the degree of human intervention or reconfiguration.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about this and other storage topics in his book “Moving Media Storage Technologies.” Contact him at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.