HDR: Standards, Standards, Everywhere

To date, we’ve discussed many of the technical aspects of HDR, including brightness and color gamut. Now it’s time to discuss how we get the signal to viewers so that they can enjoy the step-change improvement in the viewing experience. For that, we need delivery standards, and luckily for us, there are a number of them (that’s the great thing about standards: there are so many to choose from!).

INCREASED BIT DEPTH

The first thing to note about all of the delivery standards is that, without exception, they use more bits to carry each component, with 10-bit as the minimum. A subtle point here is that we have dramatically increased the range of colors (and brightness levels) that the system has to reproduce. If the same number of code values is spread over that wider range, the steps between adjacent values become large enough to show up as visible banding, so we need more bits to represent the wider color space smoothly.
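To put rough numbers on this, the sketch below counts the code values available per component, and the resulting number of RGB combinations, at the bit depths that come up in this article. It assumes full-range coding (every code value usable); broadcast “narrow range” signals reserve some codes, so real figures are slightly lower.

```python
def code_values(bits: int) -> int:
    """Number of distinct code values per component at a given bit depth."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Distinct RGB combinations, assuming full-range coding of all three components."""
    return code_values(bits) ** 3


for bits in (8, 10, 12):
    print(f"{bits:>2}-bit: {code_values(bits):>5} levels per component, "
          f"{total_colors(bits):,} colors")
```

Going from 8 to 10 bits takes each component from 256 to 1,024 levels, so the number of combinations grows from 16,777,216 to 1,073,741,824.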

EOTF

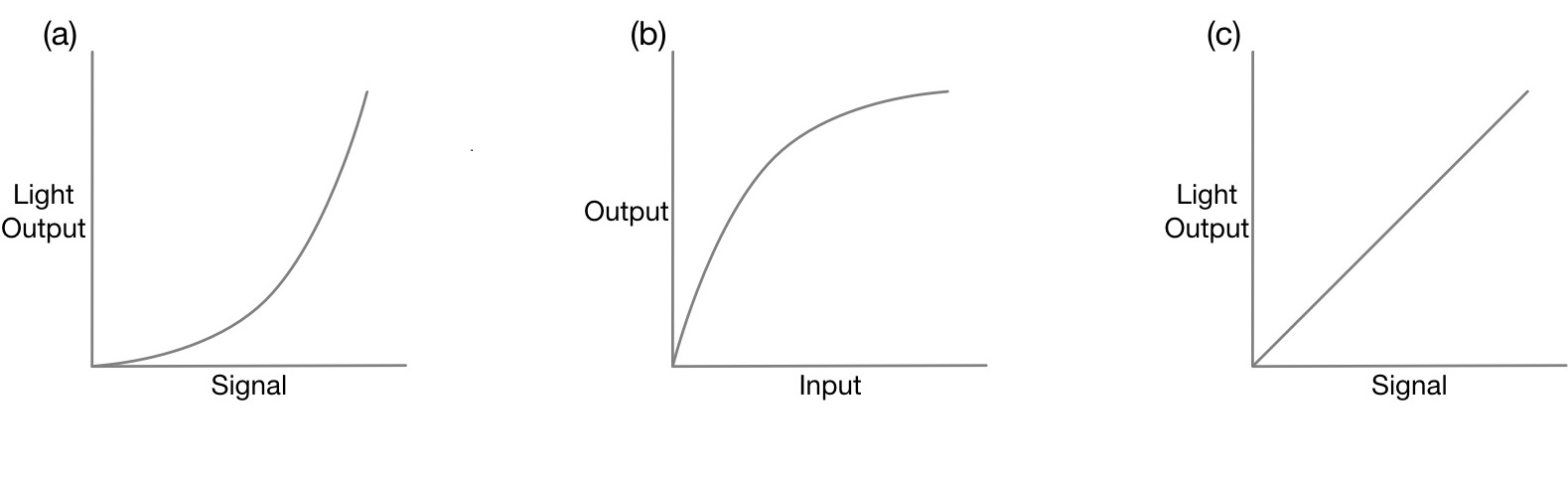

Many of us are well versed in the idea of gamma. This was the term for the nonlinear curve applied to the linear light output of video sources to compensate for nonlinearities in the delivery and display systems. The idea is simple, and is illustrated in Fig. 1: if the display output is nonlinear, then applying the inverse nonlinearity to the signal makes the light reaching the viewer linear (there is far more to it than this, but this explanation will do for now).
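The idea in Fig. 1 can be shown in a few lines. This sketch assumes an idealized display that follows a pure power law with an exponent of 2.4 (a simplification; real display standards such as ITU-R BT.1886 add a black-level term) and applies the inverse power at the source, so the light reaching the viewer tracks the original scene light.

```python
DISPLAY_GAMMA = 2.4  # assumed pure power-law display exponent (simplified)


def source_correction(linear_light: float) -> float:
    """Applied at the source: the inverse of the display's nonlinearity."""
    return linear_light ** (1.0 / DISPLAY_GAMMA)


def display_response(signal: float) -> float:
    """What the idealized display does to the incoming signal."""
    return signal ** DISPLAY_GAMMA


for light in (0.0, 0.1, 0.5, 1.0):
    signal = source_correction(light)
    shown = display_response(signal)
    print(f"scene {light:.2f} -> signal {signal:.3f} -> displayed {shown:.3f}")
```

The two curves cancel, so “displayed” matches “scene” at every level, while the transmitted signal itself is strongly nonlinear.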

This assumes, of course, that all display devices have exactly the same nonlinearity: a generalization to be sure, but one that has stood the test of time pretty well. The correction could have been built into the TV set itself, but that would have increased the cost of every TV set ever made, so it was more economical to apply it once, at the source. In the HDR world, the curve is specified from the display’s point of view as the “Electro-Optical Transfer Function,” or EOTF for short: it defines how the incoming signal is turned into light, and the source applies its inverse. SMPTE has published a standard for this transfer function as ST 2084 (commonly referred to as “Perceptual Quantization,” or “PQ” for short), and it has been adopted by three of the four delivery formats described below (HDR10, HDR10+ and Dolby Vision).
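For reference, the PQ curve in SMPTE ST 2084 is compact enough to write out. The sketch below uses the constants published in the standard; the function names are our own, and it works on normalized signal values (0 to 1) rather than integer code values.

```python
# SMPTE ST 2084 (PQ) constants, as published in the standard
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375 (equals C3 - C2 + 1)
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10_000.0       # PQ is defined up to 10,000 nits


def pq_eotf(signal: float) -> float:
    """Display side: normalized PQ signal (0..1) -> luminance in nits."""
    p = signal ** (1.0 / M2)
    y = (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)
    return PEAK_NITS * y


def pq_inverse_eotf(nits: float) -> float:
    """Source side: luminance in nits -> normalized PQ signal (0..1)."""
    y = (nits / PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


for nits in (100, 1000, 10_000):
    print(f"{nits:>6} nits -> signal {pq_inverse_eotf(nits):.4f}")
```

A 100-nit level (the nominal SDR reference white) lands at roughly 0.51 of the signal range, leaving the upper half of the PQ signal for brighter highlights.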

Other transfer functions have also been proposed and adopted, most notably HLG, or “Hybrid Log-Gamma.” The idea behind HLG is to offer some level of backward compatibility with SDR displays (PQ is not backward compatible with SDR displays). It offers somewhat reduced luminance capabilities but retains all of the color detail; of course, the SDR set needs to understand the Rec. 2020 color space in order to reproduce all of those colors.
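The “hybrid” in the name refers to the shape of the curve. As defined in ITU-R BT.2100, the HLG camera-side curve (its OETF) is a square-root, gamma-like segment over the lower part of the range, which is what lets an SDR set show a sensible picture, joined to a logarithmic segment over the upper part, which carries the extra highlight range. A minimal sketch using the constants from that recommendation, with scene light normalized to 0..1:

```python
import math

# HLG constants from ITU-R BT.2100
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # 0.55991073 (natural log)


def hlg_oetf(scene_light: float) -> float:
    """Normalized scene light E (0..1) -> HLG signal E' (0..1)."""
    if scene_light <= 1 / 12:
        return math.sqrt(3 * scene_light)          # gamma-like lower segment
    return A * math.log(12 * scene_light - B) + C  # logarithmic upper segment


for e in (0.0, 1 / 12, 0.5, 1.0):
    print(f"scene {e:.3f} -> signal {hlg_oetf(e):.3f}")
```

Scene light of 1/12 maps to a signal of 0.5, so the bottom half of the signal range is the square-root segment and the top half is logarithmic.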


METADATA

Modern displays have different properties and capabilities, depending on their underlying technologies. It is therefore crucial to give the display information about the image, so that it can make the most of its capabilities and produce the best possible picture. The minimum metadata set that the display needs is: (1) the RGB values of the black point, (2) the RGB values of the white point, (3) “MaxCLL” (Maximum Content Light Level), the light level in nits of the brightest pixel in the entire clip, and (4) “MaxFALL” (Maximum Frame-Average Light Level), the highest average light level in nits of any single frame. Because an average can never exceed the brightest pixel, MaxFALL is always less than or equal to MaxCLL, and usually much smaller. The display uses MaxFALL to avoid damage when a frame exceeds its safe handling capability: OLED pixels, for example, can burn out if run at maximum intensity for a period of time.
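As a rough illustration of how MaxCLL and MaxFALL relate, the sketch below computes both from a clip given as frames of linear RGB pixel values in nits. It follows the approach commonly attributed to CTA-861.3 of taking the largest of a pixel’s R, G and B values as its light level; the tiny two-frame clip is made-up test data, and the helper names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # linear R, G, B in nits
Frame = List[Pixel]


def pixel_light_level(pixel: Pixel) -> float:
    """Light level of one pixel, taken as its largest component."""
    return max(pixel)


def max_cll(frames: List[Frame]) -> float:
    """Brightest single pixel anywhere in the clip."""
    return max(pixel_light_level(p) for frame in frames for p in frame)


def max_fall(frames: List[Frame]) -> float:
    """Highest frame-average light level of any single frame."""
    return max(sum(pixel_light_level(p) for p in frame) / len(frame)
               for frame in frames)


# Hypothetical two-frame, four-pixel clip (made-up values)
clip = [
    [(50, 40, 30), (60, 60, 60), (20, 10, 5), (90, 80, 70)],
    [(1000, 900, 800), (10, 10, 10), (5, 5, 5), (25, 20, 15)],
]
print("MaxCLL:", max_cll(clip), "MaxFALL:", max_fall(clip))
```

Here MaxFALL (260 nits) is far below MaxCLL (1,000 nits): one bright highlight sets MaxCLL, while MaxFALL reflects how bright a whole frame is on average.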

There are two versions of this metadata: static (one set of values for the entire clip) and dynamic (values that can change scene by scene or even frame by frame). Static metadata was adopted first but is, of course, “set and forget.” Dynamic metadata allows the colorist to adjust settings on a scene-by-scene basis and therefore gives greater creative freedom.
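Why per-scene values help can be pictured with a deliberately crude example. This sketch assumes a hypothetical 600-nit display and made-up scene peaks, and it uses simple linear scaling, which is not how real displays tone-map; real dynamic-metadata schemes also carry many more parameters than a single peak value.

```python
from typing import Dict

DISPLAY_PEAK = 600.0  # hypothetical display capability, nits

# Hypothetical peak light level (nits) of each scene in a clip
scene_peaks: Dict[str, float] = {
    "night exterior": 120.0,
    "interior": 400.0,
    "sunlit beach": 1500.0,
}


def scale_for(content_peak: float, display_peak: float = DISPLAY_PEAK) -> float:
    """Crude linear gain that fits content_peak within display_peak."""
    return min(1.0, display_peak / content_peak)


# Static: one peak for the whole clip, so every scene gets the same gain
clip_peak = max(scene_peaks.values())
static_result = {s: p * scale_for(clip_peak) for s, p in scene_peaks.items()}

# Dynamic: each scene is adjusted using its own peak
dynamic_result = {s: p * scale_for(p) for s, p in scene_peaks.items()}

for scene in scene_peaks:
    print(f"{scene:15} static {static_result[scene]:6.1f}  "
          f"dynamic {dynamic_result[scene]:6.1f}")
```

With a single clip-wide value, the bright beach scene sets the gain and the night scene is dimmed along with it; with per-scene values, only the scene that actually exceeds the display’s capability is compressed.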

We now have the information we need in order to be able to compare the individual formats.

HDR10

This is the base-level standard. It uses 10-bit resolution (hence the name), the PQ transfer function and static metadata, and is targeted at broadcast, Blu-ray and VOD applications. It is not compatible with SDR displays, so any media company that wants its content to be available in both HDR and SDR must process the media through two separate paths (or at least a final conversion stage).

HDR10+

This is HDR10 with the addition of dynamic metadata. The clip still contains the static metadata, though, so HDR10 displays that lack dynamic capabilities can still reproduce the image.
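The fallback described above amounts to a simple decision on the display side. The sketch below is hypothetical: the class and field names are ours, not from the HDR10+ specification, and it only captures the logic of using dynamic metadata when both the clip and the display support it, and otherwise falling back to the static values the clip always carries.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple, Union


@dataclass
class StaticMetadata:
    max_cll: float   # nits
    max_fall: float  # nits


@dataclass
class Clip:
    static: StaticMetadata                 # always present (HDR10 baseline)
    dynamic: Optional[List[float]] = None  # hypothetical per-scene values, if any


def metadata_to_use(clip: Clip, display_supports_dynamic: bool
                    ) -> Tuple[str, Union[List[float], StaticMetadata]]:
    """Pick the richest metadata the display can actually use."""
    if display_supports_dynamic and clip.dynamic is not None:
        return "dynamic", clip.dynamic
    return "static", clip.static


hdr10_plus_clip = Clip(StaticMetadata(1000.0, 260.0), dynamic=[120.0, 400.0, 1500.0])
print(metadata_to_use(hdr10_plus_clip, display_supports_dynamic=True)[0])   # dynamic
print(metadata_to_use(hdr10_plus_clip, display_supports_dynamic=False)[0])  # static
```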

HLG

This format is being championed by the BBC and Japan’s NHK. It does not use metadata at all, relying on the HLG transfer function to manage the image. It is particularly targeted at live broadcast, and its main advantage is that it is backward compatible with SDR. However, the HLG transfer function can result in color shifts or desaturation.
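One way HLG manages without metadata is that the display adapts the picture to its own capability. ITU-R BT.2100 specifies a system gamma for HLG displays that depends on the display’s nominal peak luminance, equal to 1.2 at 1,000 nits; as we read the recommendation, the formula is intended for displays in roughly the 400 to 2,000 nit range. A minimal sketch (the function name is our own):

```python
import math


def hlg_system_gamma(display_peak_nits: float) -> float:
    """BT.2100 HLG system gamma as a function of display nominal peak luminance."""
    return 1.2 + 0.42 * math.log10(display_peak_nits / 1000.0)


for peak in (400, 1000, 2000):
    print(f"{peak:>5} nits -> system gamma {hlg_system_gamma(peak):.3f}")
```

A brighter display applies a slightly higher gamma and a dimmer one a lower gamma, so the same HLG signal can drive different displays without any per-clip metadata.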

DOLBY VISION

This format uses 12-bit quantization, so it has quite a bit more resolution than the 10-bit formats: four times as many code values across the same brightness range. It uses the PQ transfer function, which (as in every PQ-based format) can in theory describe brightness up to 10,000 nits, and provides both static and dynamic metadata. This is, however, a proprietary, licensed system.
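To see what the extra two bits buy, the sketch below restates the PQ curve from SMPTE ST 2084 (so the block stands alone) and measures the luminance jump between adjacent code values near 100 nits at 10 and 12 bits. It assumes full-range coding, where code value n maps to signal n / (2^bits - 1); real signals usually use narrow range, which shifts the numbers slightly. The helper `step_near` is hypothetical.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Normalized PQ signal (0..1) -> luminance in nits."""
    p = signal ** (1.0 / M2)
    return 10_000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1.0 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Luminance in nits -> normalized PQ signal (0..1)."""
    y = (nits / 10_000.0) ** M1
    return ((C1 + C2 * y) / (1.0 + C3 * y)) ** M2


def step_near(nits: float, bits: int) -> float:
    """Luminance gap between the code value nearest `nits` and the next one up."""
    max_code = 2 ** bits - 1
    code = round(pq_inverse_eotf(nits) * max_code)
    return pq_eotf((code + 1) / max_code) - pq_eotf(code / max_code)


for bits in (10, 12):
    print(f"{bits}-bit step near 100 nits: {step_near(100.0, bits):.3f} nits")
```

The 12-bit step comes out at roughly a quarter of the 10-bit one, since four times as many code values are spread over the same PQ curve.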

Paul Turner is founder of Turner Media Consulting and can be reached at pault@turnerconsulting.tv.

Other articles in this series:

Candelas and Lumens and Nits – Oh, My!!

How We See: The Human Visual System