SMPTE ST 2110-30: A Fair Hearing for Audio

This is the fourth installment in a series of articles about the newly-published SMPTE standard covering elementary media flows over managed IP networks. This month, the focus is on audio transport, specifically on uncompressed, studio quality audio for broadcast applications.

UNCOMPRESSED AUDIO

The full title of SMPTE ST 2110-30 is “Professional Media Over Managed IP Networks: PCM Digital Audio.” This standard is closely related to, and heavily based on, AES67, which is titled “AES standard for audio applications of networks - High-performance streaming audio-over-IP interoperability.” Although the document titles may not be totally self-explanatory, both standards are all about transmitting raw, uncompressed samples of audio signals directly within RTP/UDP datagrams over an IP network.

To understand how audio signals are packed into these datagrams, it helps to remember that each channel of an uncompressed digital audio signal is created using a fixed sampling frequency and a fixed number of bits per sample. In the case of ST 2110-30, all senders and receivers are required to support 48 kHz sampling, at a minimum. In broadcast applications, 24 bits (3 bytes) are generally used for every sample. So, for a 48 kHz, 24-bit stereo audio pair, the raw audio data would consume 48,000 x 3 x 2 = 288,000 bytes/sec, which equals 2.304 Mbps before any packet headers are added.
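The arithmetic above is easy to reproduce for other channel counts and sample depths. The short Python sketch below is only an illustration; the function name `raw_audio_bitrate_bps` is made up for this example and does not come from the standard.

```python
def raw_audio_bitrate_bps(sample_rate_hz: int, bytes_per_sample: int, channels: int) -> int:
    """Raw PCM bit rate in bits per second, with no packet headers included."""
    bytes_per_second = sample_rate_hz * bytes_per_sample * channels
    return bytes_per_second * 8


# 48 kHz, 24-bit (3-byte) stereo pair, as in the text
stereo_bps = raw_audio_bitrate_bps(48_000, 3, 2)
print(stereo_bps / 1_000_000, "Mbps")

# The same calculation for eight channels of 7.1 surround
surround_bps = raw_audio_bitrate_bps(48_000, 3, 8)
print(surround_bps / 1_000_000, "Mbps")
```

The stereo case reproduces the 2.304 Mbps figure; eight channels at the same rate and depth come to four times that.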

PACKETIZATION

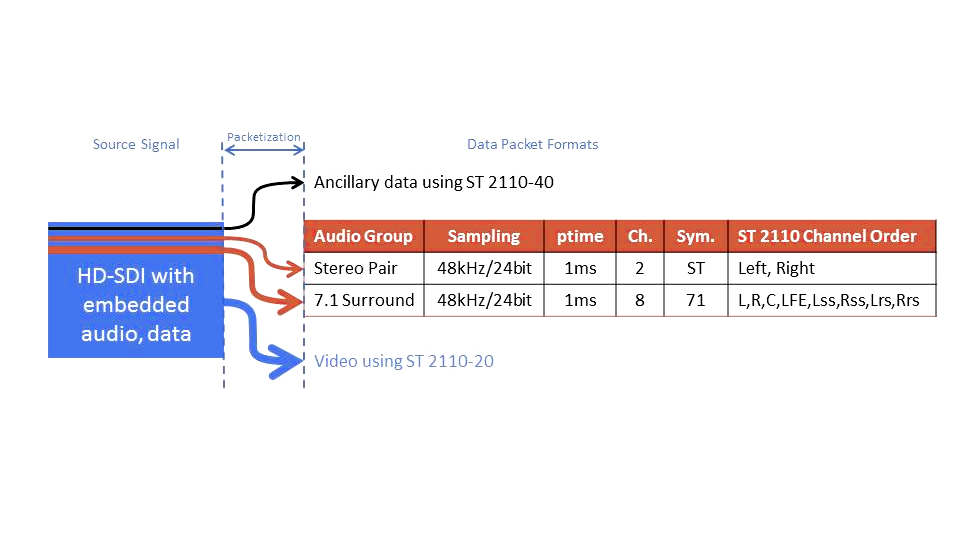

An example of how audio samples are placed into packets is shown in Figure 1, where an HD-SDI signal is separated into individual IP packet streams for each media type. Video is encapsulated using ST 2110-20 and ancillary data is carried using ST 2110-40. Two audio groups are shown: one stereo pair and one set of 7.1 surround sound (which is eight uncompressed channels). Each of these signals is packetized into a separate stream, as shown in the two rows that make up the table. Keeping each of the two packet streams at eight audio channels or fewer gives maximum flexibility in choosing receivers, since the minimum (Level A) conformance level for an ST 2110-30 receiver covers streams of up to eight channels.

Four factors control the way that audio samples are packed into the RTP packets that make up a stream, and are listed in the table within Figure 1 for each audio stream. The four factors are:

· Audio sampling rate. Most broadcast applications today use 48 kHz sampling, so all ST 2110-30 senders and receivers are required to support it. Some applications use 96 kHz sampling, and 44.1 kHz can also be found in practice, so the standard recommends that both additional rates be supported. Other sampling rates are out of scope for the standard.

· Audio sampling depth. Because IP packets are formatted in bytes, the audio data payload must be an integer number of bytes. Therefore, AES67 and ST 2110-30 only allow 16-bit and 24-bit audio sampling.

· Packet time. This parameter indicates the timespan covered by the audio samples contained in each packet. For example, when 48 kHz sampling is used with a packet time of 1 millisecond, there will be 48 audio samples from each audio channel in each packet. Note that longer packet times increase the end-to-end latency of the audio stream (because it takes longer to fill each packet) and shorter times increase the number of packets in a stream.

· Number of channels. Normally, all of the parts of a multichannel audio signal, such as stereo or surround sound, are transported in the same IP packet stream. Thus, a 5.1 surround sound signal would have samples from six different audio channels interleaved within each packet.
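The way these four factors combine can be sketched in a few lines of Python. This is a rough illustration rather than anything defined by the standard: the class `AudioStream`, the function `pack_payload` and the 40-byte header figure (IPv4 with no options, UDP, and RTP with no extensions) are assumptions made for this example. It also assumes the packet time holds a whole number of samples, which is true for 48 kHz and 96 kHz at 1 ms and 125 µs.

```python
from dataclasses import dataclass

# Assumed per-packet header bytes: IPv4 (20) + UDP (8) + RTP (12),
# with no IP options and no RTP header extensions.
HEADER_BYTES = 20 + 8 + 12


@dataclass(frozen=True)
class AudioStream:
    sample_rate_hz: int    # e.g. 48000
    bytes_per_sample: int  # 2 for 16-bit, 3 for 24-bit
    packet_time_us: int    # e.g. 1000 for 1 ms, 125 for 125 microseconds
    channels: int          # e.g. 2 for stereo, 8 for 7.1

    def samples_per_channel(self) -> int:
        """Samples from each channel carried in one packet."""
        return self.sample_rate_hz * self.packet_time_us // 1_000_000

    def payload_bytes(self) -> int:
        """Audio payload size of one packet, excluding headers."""
        return self.samples_per_channel() * self.bytes_per_sample * self.channels

    def packets_per_second(self) -> int:
        return 1_000_000 // self.packet_time_us

    def ip_bitrate_bps(self) -> int:
        """Bit rate including the assumed IP/UDP/RTP headers."""
        return (self.payload_bytes() + HEADER_BYTES) * 8 * self.packets_per_second()


def pack_payload(frames, bytes_per_sample=3):
    """Interleave frames into a payload.

    Each frame is a tuple holding one signed integer sample per channel.
    Samples are written channel by channel, big-endian (network byte order).
    """
    out = bytearray()
    for frame in frames:
        for sample in frame:
            out += sample.to_bytes(bytes_per_sample, "big", signed=True)
    return bytes(out)


stereo = AudioStream(48_000, 3, 1000, 2)
surround = AudioStream(48_000, 3, 1000, 8)
print(stereo.samples_per_channel(), stereo.payload_bytes(), stereo.packets_per_second())
print(surround.payload_bytes())
```

For a 48 kHz, 24-bit stereo pair with 1 ms packets, this gives 48 samples per channel, a 288-byte payload and 1,000 packets per second. Switching the same stream to a 125 µs packet time drops the payload to 36 bytes but raises the packet rate to 8,000 per second, which is the latency-versus-packet-count trade-off described under packet time.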

A receiver relies on information contained in the SDP (Session Description Protocol from RFC 4566) in order to properly interpret the packet contents. The SDP data, which typically consists of a few lines of text, can be transported in multiple ways from a sender to a receiver. SMPTE ST 2110-30 does not define a specific way to do this. Instead, methods are being developed by the AMWA (Advanced Media Workflow Association) for use by media production facilities.

Along with the four parameters described in the preceding paragraphs, the SDP values defined in ST 2110-30 provide a standard order for the individual channels within the IP packets. Two examples of this are shown in the table in Figure 1, including both the symbols that are used in the SDP file (“ST” and “71”) and their associated channel order in the last column of the table. Symbols and channel orders are also defined within ST 2110-30 for other audio systems such as matrix stereo, 5.1 surround and 22.2 surround (symbols “LtRt,” “51,” and “222,” respectively).
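To make the role of the SDP more concrete, the sketch below shows what the text for a stereo stream might look like and how a receiver could pull the packing parameters out of it. The addresses, port, payload type, session name and PTP clock identity in `EXAMPLE_SDP` are invented for illustration, and `parse_audio_sdp` is a hypothetical helper that handles only the few attributes discussed here; it is not a complete SDP parser.

```python
import re

# Illustrative SDP for a 24-bit, 48 kHz stereo stream with 1 ms packets.
# All addresses and identifiers are made up for this example.
EXAMPLE_SDP = """
v=0
o=- 1 1 IN IP4 192.0.2.10
s=Example stereo stream
c=IN IP4 239.1.1.1/32
t=0 0
m=audio 5004 RTP/AVP 97
a=rtpmap:97 L24/48000/2
a=fmtp:97 channel-order=SMPTE2110.(ST)
a=ptime:1
a=ts-refclk:ptp=IEEE1588-2008:00-11-22-FF-FE-33-44-55:0
a=mediaclk:direct=0
"""


def parse_audio_sdp(sdp: str) -> dict:
    """Extract sample depth, rate, channel count, packet time and channel order."""
    info = {}
    for raw_line in sdp.splitlines():
        line = raw_line.strip()

        # a=rtpmap:<payload type> L<bits>/<rate>[/<channels>]
        m = re.match(r"a=rtpmap:\d+ L(\d+)/(\d+)(?:/(\d+))?$", line)
        if m:
            info["bit_depth"] = int(m.group(1))
            info["sample_rate_hz"] = int(m.group(2))
            info["channels"] = int(m.group(3) or 1)
            continue

        # a=ptime:<packet time in milliseconds>
        m = re.match(r"a=ptime:([\d.]+)$", line)
        if m:
            info["packet_time_ms"] = float(m.group(1))
            continue

        # channel-order=SMPTE2110.(<symbol>[,<symbol>...])
        m = re.search(r"channel-order=SMPTE2110\.\(([^)]*)\)", line)
        if m:
            info["channel_groups"] = m.group(1).split(",")
    return info


print(parse_audio_sdp(EXAMPLE_SDP))
```

Here the `rtpmap` line carries the sample depth, sampling rate and channel count, `ptime` carries the packet time, and the `channel-order` parameter carries the grouping symbol such as “ST” or “71.”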

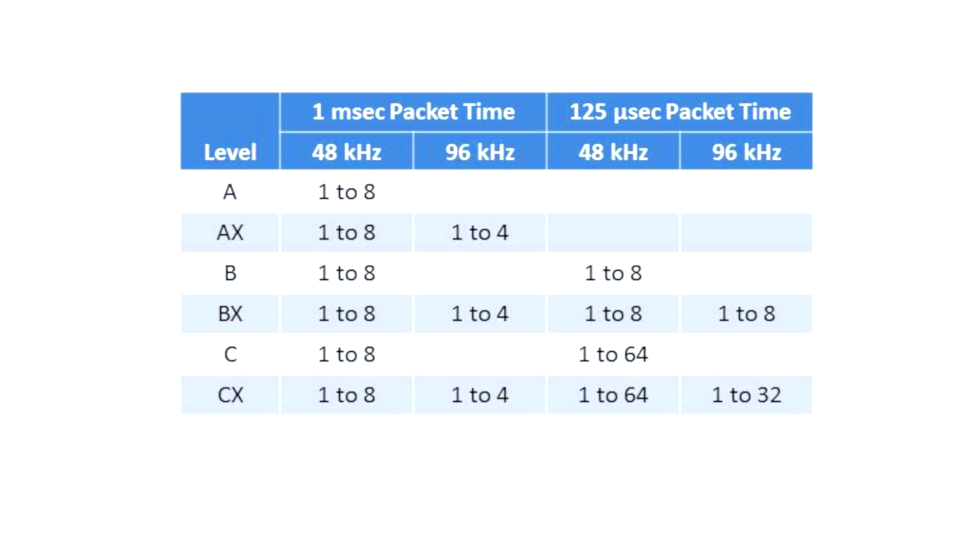

SMPTE ST 2110-30 also defines a set of six compliance levels for audio receivers, as shown in Figure 2. To achieve compliance at a particular level, a receiver must be able to accept any quantity of audio channels in a single stream within the range shown in the table for each combination of packet time and sampling rate. Note that only the “–X” receivers are required to support 96 kHz sampling.
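A level check of this kind amounts to a table lookup. The Python sketch below shows the idea; the channel limits in `LEVELS` are assumptions entered for illustration and should be verified against Figure 2 and the standard itself before being relied on. The function name `receiver_accepts` is likewise hypothetical.

```python
# Maximum channel count per (sample rate in Hz, packet time in microseconds).
# ASSUMED values for illustration only; verify against the standard's level table.
LEVELS = {
    "A":  {(48_000, 1000): 8},
    "B":  {(48_000, 1000): 8, (48_000, 125): 8},
    "C":  {(48_000, 1000): 8, (48_000, 125): 64},
    "AX": {(48_000, 1000): 8, (96_000, 1000): 4},
    "BX": {(48_000, 1000): 8, (48_000, 125): 8,
           (96_000, 1000): 4, (96_000, 125): 8},
    "CX": {(48_000, 1000): 8, (48_000, 125): 64,
           (96_000, 1000): 4, (96_000, 125): 32},
}


def receiver_accepts(level: str, sample_rate_hz: int, packet_time_us: int, channels: int) -> bool:
    """True if a receiver of the given level is required to accept the stream."""
    max_channels = LEVELS[level].get((sample_rate_hz, packet_time_us))
    if max_channels is None:
        # This rate/packet-time combination is not required at this level.
        return False
    return 1 <= channels <= max_channels


print(receiver_accepts("A", 48_000, 1000, 8))   # eight-channel 7.1 stream, 1 ms packets
print(receiver_accepts("A", 96_000, 1000, 2))   # 96 kHz is only required of "-X" levels
```

A receiver may of course accept more than its level requires; the lookup only describes the guaranteed minimum.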

COMPATIBILITY & DIFFERENCES WITH AES67

One question that might be asked about AES67 and ST 2110-30 is: “Can they be made to work together?” The answer is: “Absolutely.” Because ST 2110-30 is based on AES67 and includes multiple “normative” (i.e. required) references, it is very easy to achieve interoperability. That being said, there are a few areas of difference.

First of all, ST 2110-30 receivers are not required to support SIP connection management for unicast audio signals. This is likely not an issue, since large audio networks frequently use IP multicasting to allow signals to be sent to multiple destinations simultaneously. This does mean, however, that ST 2110-30 receivers won’t be able to send or receive VoIP (Voice over IP) calls that use SIP for connection setup. Also note that RTCP (RTP Control Protocol) is recommended for use in AES67 but only needs to be “tolerated” in ST 2110-30 devices.

There are some differences between the two standards in how PTP (Precision Time Protocol, as defined in IEEE 1588) is implemented. One important difference is that RTP clock offsets are not permitted in ST 2110-30. This means that AES67 receivers can work fine with ST 2110-30 senders, but that AES67 senders must not use an RTP clock offset when sending signals to ST 2110-30 receivers. There are also some differences in the specific PTP profiles used by the two standards; however, the permitted parameter ranges overlap, so the two systems can be set up to work together.
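The effect of an RTP clock offset can be shown with a small calculation. In the Python sketch below, the RTP timestamp is derived from PTP time by counting sample periods since the PTP epoch and wrapping at 32 bits. The function name `rtp_timestamp` and its `offset` parameter are assumptions made for this example; with `offset=0` it models the behavior ST 2110-30 requires, while a non-zero offset models what an AES67 sender is allowed to do.

```python
RTP_TS_MODULUS = 2**32          # RTP timestamps are 32-bit and wrap around
NS_PER_SECOND = 1_000_000_000


def rtp_timestamp(ptp_time_ns: int, sample_rate_hz: int, offset: int = 0) -> int:
    """RTP timestamp for a sample captured at the given PTP time.

    ptp_time_ns is nanoseconds since the PTP epoch. The media clock counts
    sample periods, so it advances by sample_rate_hz every second.
    """
    media_clock = ptp_time_ns * sample_rate_hz // NS_PER_SECOND
    return (media_clock + offset) % RTP_TS_MODULUS


one_second = NS_PER_SECOND
print(rtp_timestamp(one_second, 48_000))              # zero offset
print(rtp_timestamp(one_second, 48_000, offset=100))  # sender-chosen offset
```

With a zero offset, any receiver locked to the same PTP time can work out when a sample was captured from the timestamp alone. A sender that adds its own offset has to signal it in the SDP, and a receiver that assumes zero would misplace the audio in time.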

One other slight difference: ST 2110 requires that every device have an option that allows it to be set to a PTP slave-only mode. When this mode is enabled, the device will never attempt to become a PTP master. This is important in large networks, where it prevents the chaos that would result if every device offered to take over as PTP master whenever the PTP distribution system was interrupted. This option is not required in AES67, but should be a useful feature in many products.

HARMONIOUS SOUND

SMPTE ST 2110-30 was developed specifically to make audio as compatible as possible with video. By using the widely accepted AES67 standard as a base, this new standard allows a wide range of existing audio equipment to harmonize with the rest of the 2110 suite.

Other entries in this series:

SMPTE ST 2110-21: Taming the Torrents

SMPTE ST 2110-20: Pass the Pixels, Please

Wes Simpson is President of Telecom Product Consulting, an independent consulting firm that focuses on video and telecommunications products. He has 30 years of experience in the design, development and marketing of products for telecommunication applications. He is a frequent speaker at industry events such as IBC, NAB and VidTrans, the author of the book Video Over IP, and a frequent contributor to TV Tech. Wes is a founding member of the Video Services Forum.