Kubernetes Automates Open-Source Deployment

Whether the work is television broadcast, video content creation, or the delivery and transport of streamed media, these workflows share a common element: the technology supporting this industry is moving rapidly, consistently and definitively toward software and networking. The movement isn’t new by any means. What now seems like ages ago, every implementation required customized software on a customized hardware platform; that model has given way to open platforms running open-source solution sets, often developed for open architectures and collectively created using cloud-based services.

These trends, concepts and methodologies are well into adoption, applicable to all media ecosystems, and extend to developments such as software-defined networking, storage virtualization, software-defined data centers (SDDC) and the like. The open concepts have even reached the storage domain in the form of software-defined storage (SDS).

TECHNOLOGY OR MARKETING?

Sometimes the buzzwords associated with these emerging trends are driven more by marketing than by technical fact or capability, and once new terms show up in the industry, they tend to stick as firmly as the “technology” they are attached to. Take SDS, for example: it originated as a marketing term for computer data storage software that provides policy-based provisioning and management of data storage, independent of the underlying hardware itself.

SDS products may or may not also include abstraction, pooling or automation software of their own. Whether for storage, compute or applications (apps), software-based products are driving techno-suppliers toward open-source development. With this come new capabilities built on commodity servers in a virtualized architecture that provides “hands-free” routine operations, self-healing and automated load balancing, to name just a few.

Open-source development and implementation are happening across all segments of the manufacturing and media landscape and continue to take a front seat in many compute and storage environments, especially in the cloud. One of the more recent open-source automation platforms gaining momentum is called “Kubernetes.” Originally designed by Google, Kubernetes is an open-source framework for automating the deployment, scaling and management of applications in containerized, clustered environments. Kubernetes is now maintained by the Cloud Native Computing Foundation (CNCF), founded in 2015 to promote containers.

Kubernetes, while closely associated with cloud services, is not exclusive to the “public” cloud. Its principles and perspectives apply to cloud technologies irrespective of where that “cloud” is physically located.

To understand what this is about, we need to review some related terms: virtual machines, containers and clusters. These terms reach beyond applications alone; the general principles are being applied to multiple operating environments, including cloud, storage and other platforms, frameworks or architectures.

VMS, CONTAINERS & CLUSTERS

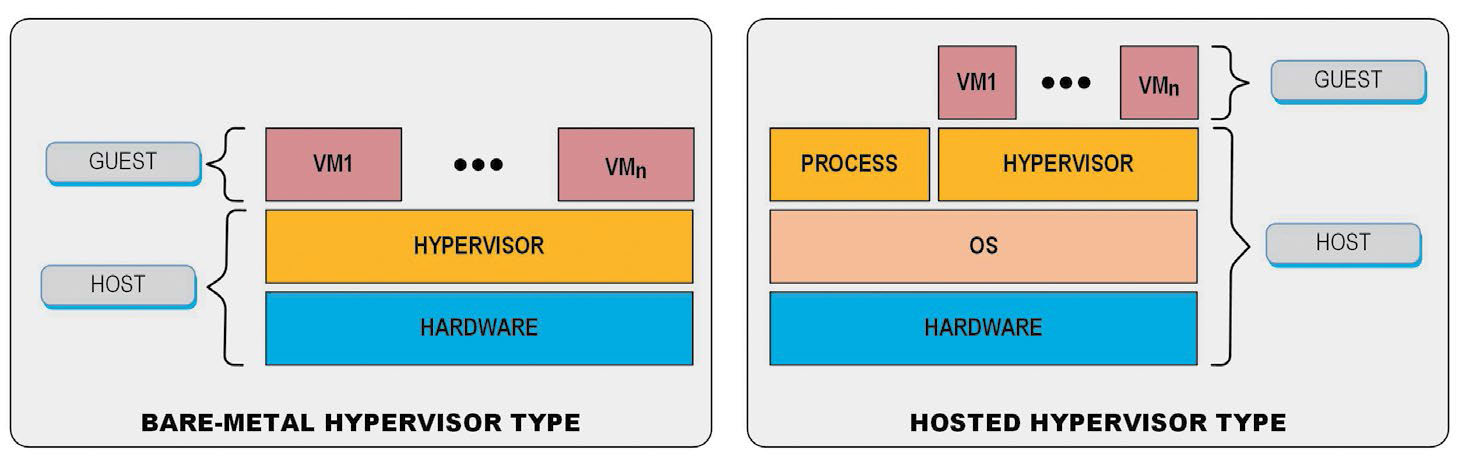

Virtual machines are nothing new, but they are becoming more prevalent as developers and users deploy applications (software) that run on multiple operating systems from the same hardware, especially servers. The compute power of modern servers allows several sets of tasks to run simultaneously. These tasks must be managed, which is done by software called a “hypervisor,” such as VMware’s products or Oracle’s VirtualBox. The hypervisor separates the OS and the applications from the physical hardware, in effect insulating the tasks required of hardware systems (keyboards, displays, storage devices) from the core needs of the OS and apps (Fig. 1).

Characterized as “lightweight virtual machines,” containers are standardized units of software that package code together with its dependencies so that applications run efficiently (quickly and reliably) when moved from one computing environment to another. Designers who build applications as containers can move them between environments, e.g., from a developer’s workstation to an on-premises server or a cloud host. Unlike a VM, a container shares the host machine’s OS kernel, so it does not require a full OS per application. This promotes greater efficiency, reduces server counts and decreases licensing costs.
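To make the efficiency argument concrete, here is a back-of-the-envelope sketch in Python. Every figure in it (2 GiB per guest OS, 0.5 GiB per application, 64 GiB per server) is a hypothetical placeholder, not a measurement; the point is only that a VM pays for a full guest OS once per application, while containers share the host kernel and pay for the OS once per server.

```python
from __future__ import annotations

import math

# Hypothetical sizing figures in GiB; replace with real measurements.
HOST_OS_GIB = 2.0       # host OS / hypervisor overhead, paid once per server
GUEST_OS_GIB = 2.0      # a full guest OS, paid once per VM
APP_GIB = 0.5           # the application workload itself
SERVER_RAM_GIB = 64.0   # usable RAM in one physical server


def apps_per_server(per_app_gib: float) -> int:
    """How many workloads fit in one server after the host overhead."""
    return math.floor((SERVER_RAM_GIB - HOST_OS_GIB) / per_app_gib)


def servers_needed(apps: int, use_containers: bool) -> int:
    """Servers required to host `apps` workloads under each model."""
    # A VM carries its own guest OS; a container shares the host kernel.
    per_app = APP_GIB if use_containers else GUEST_OS_GIB + APP_GIB
    return math.ceil(apps / apps_per_server(per_app))


if __name__ == "__main__":
    print("VMs:", servers_needed(100, use_containers=False))
    print("containers:", servers_needed(100, use_containers=True))
```

With these placeholder numbers, 100 workloads need five servers as VMs but only one as containers. The ratio, not the absolute figures, is what the sketch is meant to show.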

Clusters are groups of (similar) “things” that are positioned, figuratively, close to each other. In computing, the members may be loosely or tightly coupled so that other resources view them as a single system. Clusters generally refer to servers, but for storage the term can relate to file system types, network-attached storage (NAS) groupings or to allocation units on a disk, which relate to sector sizes (512 bytes vs. 4 kibibytes, or 4 KiB).
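Because disk storage is allocated in whole units, the 512-byte vs. 4 KiB distinction affects how much space a file actually consumes. The Python sketch below rounds a hypothetical 10,000-byte file up to whole units and reports the unused “slack” left in the last one. It simplifies by treating the unit size as the allocation unit; real file systems may group several sectors into one cluster.

```python
KIB = 1024  # 1 kibibyte (KiB) = 1,024 bytes


def units_used(file_bytes: int, unit_bytes: int) -> int:
    """Whole allocation units a file occupies (integer ceiling division)."""
    return (file_bytes + unit_bytes - 1) // unit_bytes


def slack_bytes(file_bytes: int, unit_bytes: int) -> int:
    """Bytes allocated to the file but left unused in its last unit."""
    return units_used(file_bytes, unit_bytes) * unit_bytes - file_bytes


if __name__ == "__main__":
    size = 10_000  # a hypothetical 10,000-byte file
    for unit in (512, 4 * KIB):
        print(unit, "byte units:", units_used(size, unit),
              "used,", slack_bytes(size, unit), "bytes of slack")
```

Larger units mean fewer entries to track per file, at the cost of more slack for small files.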

KUBERNETES FRAMEWORK

At its core, Kubernetes is a framework, i.e., a means to automate deployment with the ability to scale easily. It’s also about monitoring, a necessary function that supports maintenance and notifications and that triggers the self-healing techniques and adjustments that facilitate updates, failovers and manageability during scaling.
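Kubernetes controllers are commonly described as control loops: they repeatedly compare the desired state with the observed state and act to close the gap, which is what makes self-healing possible. The toy Python class below illustrates that idea for a simple replica count. The class, its fields and the pod names are hypothetical; real controllers watch the Kubernetes API server rather than a local list.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ReplicaController:
    """Toy control loop that keeps `desired` pods running (hypothetical names)."""

    desired: int
    running: list[str] = field(default_factory=list)
    next_id: int = 0

    def reconcile(self) -> list[str]:
        """Compare desired vs. observed state; return the actions taken."""
        actions: list[str] = []
        while len(self.running) < self.desired:   # too few: start pods
            name = f"pod-{self.next_id}"
            self.next_id += 1
            self.running.append(name)
            actions.append(f"start {name}")
        while len(self.running) > self.desired:   # too many: stop pods
            actions.append(f"stop {self.running.pop()}")
        return actions


if __name__ == "__main__":
    ctl = ReplicaController(desired=3)
    print(ctl.reconcile())          # initial deployment
    ctl.running.remove("pod-1")     # simulate a crashed pod
    print(ctl.reconcile())          # self-healing: a replacement starts
```

The same loop handles scaling: change `desired` and the next reconcile pass starts or stops pods to match.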

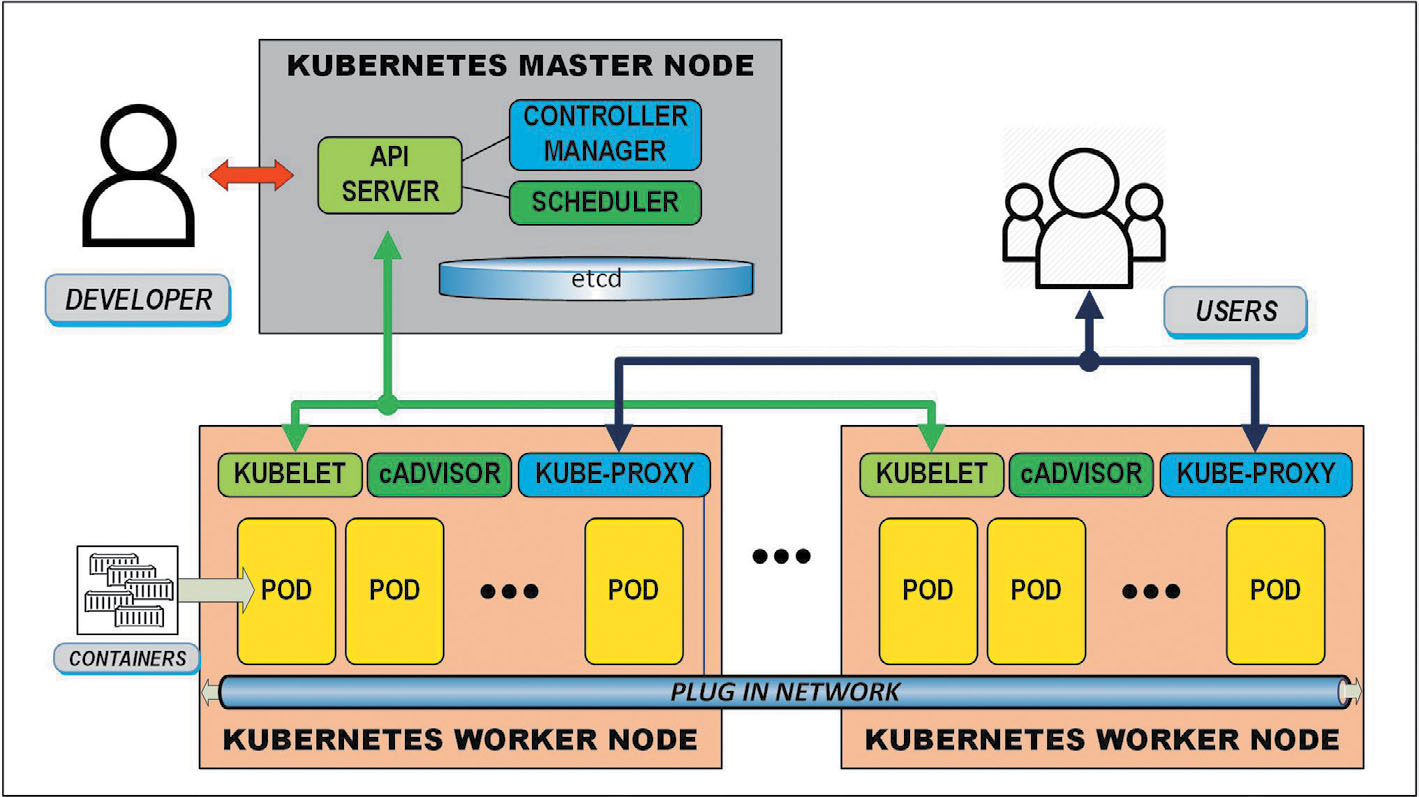

Architecturally, a Kubernetes cluster consists of a master node (called the control plane in current Kubernetes documentation) and multiple “worker” nodes (Fig. 2). Kubernetes knows about these other servers, to which containers can be deployed. Each worker node can run multiple “pods,” and each pod holds one or more containers grouped together as a working unit.
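Kubernetes accepts object definitions as YAML or JSON, so a pod can be described with a plain Python dictionary and serialized with the standard `json` module. The sketch below builds a Pod manifest that groups two containers into one unit. The pod name, container names and image references are hypothetical; in practice pods are usually created through higher-level objects such as Deployments rather than directly.

```python
from __future__ import annotations

import json


def pod_manifest(name: str, containers: dict[str, str]) -> dict:
    """Build a Pod manifest grouping several containers into one unit.

    `containers` maps container name -> image reference.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {"name": c_name, "image": image}
                for c_name, image in containers.items()
            ]
        },
    }


if __name__ == "__main__":
    manifest = pod_manifest(
        "media-pipeline",  # hypothetical pod name
        {
            # hypothetical images: a main app plus a helper "sidecar"
            "transcoder": "registry.example.com/transcoder:1.0",
            "log-shipper": "registry.example.com/log-shipper:1.0",
        },
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and submitted with `kubectl apply -f`, which reads JSON as well as YAML.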

Designers start by building applications from multiple pods; once that work is complete, they tell the master node the pod definitions and the number of pods to be deployed. Kubernetes then takes over and deploys the pods to the worker nodes unassisted. Once the system is operational, should any worker node fail (go down), Kubernetes automatically redeploys its pods to other functioning worker nodes.
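The deploy-then-fail-over behavior can be sketched as a placement function in Python. This is a deliberately simple stand-in, not the Kubernetes scheduler, which filters and scores nodes on resources, affinity rules and more: pods already on a healthy node stay where they are, and any others go to the least-loaded healthy node. The function and node names are hypothetical.

```python
from __future__ import annotations


def place_pods(pods: list[str], healthy_nodes: list[str],
               current: dict[str, str] | None = None) -> dict[str, str]:
    """Return a pod -> node placement over the healthy nodes only."""
    current = current or {}
    # Keep pods that are already running on a node that is still healthy.
    placement = {p: n for p, n in current.items()
                 if p in pods and n in healthy_nodes}
    load = {n: 0 for n in healthy_nodes}
    for node in placement.values():
        load[node] += 1
    # Place new pods, and pods orphaned by a failed node.
    for pod in pods:
        if pod not in placement:
            # min() keeps the first node on ties, so results are deterministic
            target = min(healthy_nodes, key=lambda n: load[n])
            placement[pod] = target
            load[target] += 1
    return placement


if __name__ == "__main__":
    pods = ["web-0", "web-1", "web-2", "web-3"]   # hypothetical replicas
    before = place_pods(pods, ["node-a", "node-b", "node-c"])
    after = place_pods(pods, ["node-a", "node-c"], before)  # node-b fails
    print(before)
    print(after)
```

In this run, only the pod that was on node-b moves. In a real cluster the replacement is not instantaneous: the control plane must first detect that the node is unhealthy.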

Load balancing and much of the complex management of pods, nodes, etc., are handled by Kubernetes, letting designers focus on improvements and efficiencies at whatever scale is required.
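In Kubernetes, a Service gives a group of pods a single stable address and spreads traffic across them. The actual selection policy depends on how kube-proxy is configured; the Python sketch below simply uses round-robin to show the idea, with hypothetical pod addresses.

```python
from __future__ import annotations

from itertools import cycle


class RoundRobinBalancer:
    """Hand out pod endpoints in turn (illustrative policy only)."""

    def __init__(self, endpoints: list[str]) -> None:
        if not endpoints:
            raise ValueError("need at least one endpoint")
        self._ring = cycle(endpoints)  # endless iterator over the list

    def pick(self) -> str:
        """Return the endpoint that should receive the next request."""
        return next(self._ring)


if __name__ == "__main__":
    # Hypothetical pod addresses behind one Service.
    lb = RoundRobinBalancer(["10.1.0.11:8080", "10.1.0.12:8080", "10.1.0.13:8080"])
    for request in range(5):
        print(request, "->", lb.pick())
```

Clients only ever see the stable front address; which pod answers a given request is the balancer’s concern.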

Originally deployed for cloud applications, Kubernetes is now being applied to other valuable operations whether as “cloud native” or “hybrid on-prem/in cloud” solution sets.

SCALING UP AND OUT

Kubernetes allows systems to scale, with the scaling functions assigned and managed automatically. Generally, scalability (or scaling) refers to strategies for adding more services or devices to meet a target need, use or application. There are two types: scaling up and scaling out. Scaling “up” means adding more resources to the same server or device, whereas scaling “out” means linking lower-performance machines so that they collectively do the work of a single, more advanced machine. The latter is often thought of as synergy, where the whole is worth more than the sum of its parts.
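One way Kubernetes scales an application out is the Horizontal Pod Autoscaler (HPA). Its documentation gives the core rule as desiredReplicas = ceil[currentReplicas × (currentMetricValue / desiredMetricValue)], with no change made when that ratio is within a tolerance of 1.0 (0.1 by default). The Python function below is a minimal sketch of that arithmetic only. The min/max bounds are illustrative defaults, and the real controller also applies stabilization windows and other behavior not modeled here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     tolerance: float = 0.1,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count suggested by the HPA-style scaling ratio."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no change
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (values here are illustrative).
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 3 pods averaging 90% CPU against a 50% target: scale out.
    print(desired_replicas(3, current_metric=90, target_metric=50))
```

Because the rule multiplies the current replica count, load spikes add several pods at once rather than one at a time.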

Scaling up can be expensive, and some argue there is a point at which the value becomes less than the effort, due in part to the performance limits of the individual hardware (or storage) itself. One example of such a limit occurs when small-capacity storage (disks) continues to be added to a cluster beyond the system’s ability to control those devices, or beyond their lowest common denominator, such as disk throughput or volume capacity.
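The controller-limit example can be put into numbers. The Python sketch below assumes hypothetical figures of 200 MB/s per disk and a 2,000 MB/s ceiling for the single controller in a scale-up array, then contrasts that with scaling out, where each node brings its own controller. The scale-out figure ignores network and coordination overhead, so treat it as an upper bound.

```python
def scale_up_throughput(disks: int, per_disk_mbps: float = 200.0,
                        controller_cap_mbps: float = 2000.0) -> float:
    """Aggregate MB/s of one array behind a single controller.

    Adding disks raises throughput linearly until the controller saturates;
    past that point extra disks add capacity but no speed.
    """
    return min(disks * per_disk_mbps, controller_cap_mbps)


def scale_out_throughput(nodes: int, disks_per_node: int) -> float:
    """Aggregate MB/s when each node has its own controller (upper bound)."""
    return nodes * scale_up_throughput(disks_per_node)


if __name__ == "__main__":
    for disks in (5, 10, 20, 40):
        print(disks, "disks, one controller:", scale_up_throughput(disks))
    print("4 nodes x 10 disks:", scale_out_throughput(4, 10))
```

Past ten disks the single array stops getting faster, while adding nodes keeps raising the aggregate, which is the trade-off between the two scaling strategies.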

Scaling out is often what’s behind big data initiatives. In the scale-out model, central data-management software administers enormous clusters of components (hardware), yielding flexibility and versatility.

STORAGE CLASSES

In Kubernetes, a “StorageClass” provides a way for administrators to describe the “classes” of storage they offer. Different classes may map to quality-of-service levels, to backup policies or to other arbitrary policies determined by the cluster administrators.
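A StorageClass is itself a small Kubernetes object. The Python sketch below builds one as a dictionary ready to serialize to JSON, using the `storage.k8s.io/v1` fields `provisioner`, `parameters`, `reclaimPolicy` and `allowVolumeExpansion`. The class names, the provisioner `csi.example.com` and the `tier` parameter are hypothetical; valid parameters are defined by whichever provisioner a cluster actually uses.

```python
from __future__ import annotations

import json


def storage_class(name: str, provisioner: str, parameters: dict[str, str],
                  reclaim_policy: str = "Delete") -> dict:
    """Build a StorageClass manifest as a Python dict."""
    # A StorageClass accepts "Delete" (the default) or "Retain".
    if reclaim_policy not in ("Delete", "Retain"):
        raise ValueError("reclaim_policy must be 'Delete' or 'Retain'")
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": parameters,   # meaning is defined by the provisioner
        "reclaimPolicy": reclaim_policy,
        "allowVolumeExpansion": True,
    }


if __name__ == "__main__":
    # Hypothetical classes: a fast tier for live media, a cheaper archive tier.
    classes = [
        storage_class("fast-media", "csi.example.com", {"tier": "ssd"}),
        storage_class("archive", "csi.example.com", {"tier": "hdd"},
                      reclaim_policy="Retain"),
    ]
    for sc in classes:
        print(json.dumps(sc, indent=2))
```

Choosing `Retain` for the archive class keeps the underlying volume after its claim is deleted, a typical policy difference between tiers.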

This concept may be called “profiles” in other storage systems, but Kubernetes itself is unopinionated about what the classes represent; its concepts and principles are insulated from the products it manages.
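That neutrality shows up when an application asks for storage. A PersistentVolumeClaim names a class but says nothing about what the class means; the administrator’s StorageClass and its provisioner supply that. The Python sketch below builds such a claim. The claim name, the class name “fast-media” and the 100Gi size are hypothetical.

```python
import json


def volume_claim(name: str, storage_class_name: str, size: str) -> dict:
    """Build a PersistentVolumeClaim that requests storage by class name."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            # Only the class name appears here; what the class provides is
            # left entirely to the administrator's StorageClass definition.
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},  # e.g. "100Gi"
        },
    }


if __name__ == "__main__":
    claim = volume_claim("ingest-cache", "fast-media", "100Gi")  # hypothetical
    print(json.dumps(claim, indent=2))
```

Swapping the storage product behind “fast-media” requires no change to this claim, which is the insulation the text describes.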

The Kubernetes website presents the concepts, example code and more, which further describe how to use these resources.

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on emerging technologies and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.