IP Contribution and Distribution for Broadcast

There is much talk about IP infrastructures for broadcasting at the moment. Typically, the talk centers on live production and interoperability between systems. This is, of course, extremely important. But there is another area in which IP has the potential to deliver transformative change: contribution and distribution.

To get content from a remote location back to the broadcast center, traditionally you booked a contribution circuit (aka “backhaul”). This was usually just that: a single circuit. So if you were covering, say, a major football match, you had a single feed from the outside broadcast truck back to the studio.

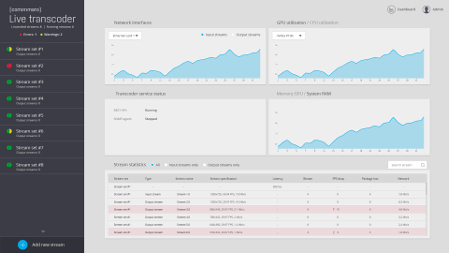

Comprimato Live

Major venues would have broadcast circuits permanently installed. These were video cables, and thus of no use for any other purpose, which meant that the provider—usually the local telco—had to charge a significant sum to cover the costs of installation and provision.

Where there were no video circuits available, productions were forced to use line-of-sight microwave links (which were limited in range and location) or satellite uplinks. Like fixed links, both radio solutions were expensive. They also offered limited resilience, and satellite links added significant latency.

With the coming of realtime IP connectivity for professional audio and video, all this changed. By converting the feed to IP, it no longer needed a specialized link, and could be carried as data over any carrier that had sufficient bandwidth. In particular, as telcos installed high-capacity dark fiber across their territories—and particularly in the sort of metropolitan areas home to major sports stadiums—the stream could be carried as data alongside other traffic.

This saved money, as telcos tended to charge for the amount of data carried, so broadcasters paid only for what they used. It also increased resilience, as geographically diverse redundant paths could be used, with the receiving device switching seamlessly to whichever path was delivering the stronger signal.
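The article does not say how the receiving device chooses between paths. One common approach, sketched here purely as an assumption, is to send identical packets down both routes and have the receiver fill each sequence number from whichever path delivered it. The function name, the dictionary-per-path representation and the sequence numbering are all hypothetical.

```python
from typing import Dict, List, Tuple


def merge_redundant_paths(
    path_a: Dict[int, bytes],
    path_b: Dict[int, bytes],
    first_seq: int,
    last_seq: int,
) -> Tuple[List[bytes], List[int]]:
    """Rebuild one stream from two redundant copies (illustrative only).

    Each path is modelled as a mapping from sequence number to payload,
    containing only the packets that actually arrived on that path.
    Returns the recovered payloads in order, plus the sequence numbers
    that were lost on both paths.
    """
    merged: List[bytes] = []
    missing: List[int] = []
    for seq in range(first_seq, last_seq + 1):
        if seq in path_a:
            merged.append(path_a[seq])   # path A delivered this packet
        elif seq in path_b:
            merged.append(path_b[seq])   # fall back to path B
        else:
            missing.append(seq)          # lost on both paths
    return merged, missing


# Example: path A dropped packet 2, path B dropped packets 1 and 4.
path_a = {0: b"p0", 1: b"p1", 3: b"p3", 4: b"p4"}
path_b = {0: b"p0", 2: b"p2", 3: b"p3"}
recovered, lost = merge_redundant_paths(path_a, path_b, 0, 4)
```

In this example every packet is recovered even though neither path delivered the full sequence, which is the point of using diverse routes. A real receiver would also need buffering to absorb the delay difference between the two paths.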

MULTIPLE FEEDS

Successfully delivering contribution over IP depended on good signal routing and on high-quality codecs to achieve broadcast quality at the optimum bitrate. While H.264 was widely used (with H.265 just over the horizon), this was seen as an ideal application for JPEG2000. This codec provides high video quality at a compression ratio of around 10:1 for contribution applications, and its wavelet algorithms are generally considered to degrade more gracefully than the discrete cosine transforms used in MPEG-type compression.

JPEG2000 also offers a mathematically lossless mode for packetized delivery when no quality compromise can be tolerated.

Once broadcasters accepted the concept of IP carriage for contribution circuits, and the potential use of mild compression, then the obvious question became “can we use the available bandwidth to carry more than one stream from the venue to the studio?” It is this which is transformative for production.
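A rough worked example shows why the answer is yes. It assumes an uncompressed HD-SDI source at its nominal 1.485 Gb/s, the 10:1 ratio quoted above, and that only 80% of a link is used for video, with the rest left for packet overhead and other traffic. The 80% figure and the link sizes are illustrative assumptions, not figures from any particular network.

```python
import math

HD_SDI_MBPS = 1485.0  # nominal HD-SDI rate in Mb/s


def streams_per_link(
    link_mbps: float,
    compression_ratio: float = 10.0,
    usable_fraction: float = 0.8,  # assumed headroom; tune for a real link
) -> int:
    """Estimate how many compressed HD feeds fit on one IP link."""
    per_stream_mbps = HD_SDI_MBPS / compression_ratio
    usable_mbps = link_mbps * usable_fraction
    return math.floor(usable_mbps / per_stream_mbps)


feeds_on_1g = streams_per_link(1_000)    # 1 Gb/s link
feeds_on_10g = streams_per_link(10_000)  # 10 Gb/s link
```

Under these assumptions each feed needs about 148.5 Mb/s, so a 1 Gb/s link carries 5 feeds and a 10 Gb/s link carries 53, where a traditional video circuit carried one.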

It makes it possible to deliver multiple parallel feeds from an event. For rugby or football, you could have different cuts for each team. For an athletics event, you could have separate track and field-oriented feeds, or you could provide an international feed alongside a domestic production that includes an on-site studio for discussions and presentation.

It also means that you can deliver alternative content alongside the main feed, allowing the rights-holder to package an event in different ways for different platforms. All these provide new ways to engage with the audience, and to monetize the coverage of the event.

LOCAL AND REMOTE DISTRIBUTION

Another important requirement is to deliver multiple feeds at the location. An obvious use case is for a video referee, who will want to look at multiple camera angles. Rigging a large number of video feeds is challenging and time-consuming: running in a single fiber is much simpler.

While video referees are usually located at the event, in time it may be that major sports will follow the lead of the NBA in America, which has a centralized video referee center collecting feeds from all simultaneous games across the country.

Broadcasters frequently provide courtesy feeds to stadium screens and to other areas in the venue such as the press box and radio commentary. Again, distribution of multiple feeds over networked fiber is much easier to provide.

This concept can then be carried on to distribution, the delivery of streams from the broadcaster. Again, this is traditionally a single channel output over a video circuit, which is then modified for the platform at each individual headend. So the broadcast signal will be compressed by hardware at the headends for terrestrial, cable and satellite before multiplexing; and it will be transcoded for storage for video on demand and for live streaming across multiple platforms.

This architecture is inherently expensive, because it requires dedicated devices for each stream at each headend. It is also a quality risk, because the remote transcoders are outside the physical control of the broadcaster.

The same architecture of presenting multiple video streams over one or more strands of dark fiber can be applied to distribution. This allows the broadcaster or content provider to keep quality control over all the encoding in house, distributing every required format ready packaged.

SOFTWARE ENCODING

Central to moving these ideas from a theoretical discussion to a practical solution is the ability to encode and stream potentially large numbers of high-quality streams in a cost-effective manner.

The power of standard IT platforms today, and particularly GPU power, allows high-quality codecs to be implemented in software. In turn, high-power platforms, whether virtualized or physical devices, allow multiple instances of codec algorithms to run simultaneously.

In other words, one appliance could have a single video feed in and, on a fiber output, multiple feeds at all the resolutions, formats and codecs required. This provides a secure and controlled solution to multiple distribution formats, perhaps even carrying the different streams over the public internet to the headends.
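As a sketch of what "one feed in, many formats out" might look like as configuration, the snippet below describes a set of output profiles and totals the bandwidth they would need on the fiber output. Every profile name, codec choice, resolution and bitrate here is a hypothetical placeholder, not a recommendation or a description of any real product.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class OutputProfile:
    """One encoded rendition of the single input feed (illustrative)."""
    name: str
    codec: str
    width: int
    height: int
    bitrate_mbps: float


def total_output_mbps(profiles: List[OutputProfile]) -> float:
    """Sum the bitrates of all renditions sharing the output link."""
    return sum(p.bitrate_mbps for p in profiles)


# Hypothetical ladder: values chosen only to make the arithmetic visible.
PROFILES = [
    OutputProfile("terrestrial", "H.264", 1920, 1080, 8.0),
    OutputProfile("cable", "H.264", 1920, 1080, 10.0),
    OutputProfile("ott_high", "H.265", 1920, 1080, 5.0),
    OutputProfile("ott_mobile", "H.264", 1280, 720, 2.5),
]

required_mbps = total_output_mbps(PROFILES)
```

With these placeholder figures the four renditions together need 25.5 Mb/s, which illustrates why a single fiber or IP path can replace a separate encoder at each headend.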

Alternatively, the same hardware could take in multiple video feeds and put them on a single fiber to another similar device, which acts as a break-out box. So all the cameras or server outputs could be concentrated and delivered over dark fiber to the television match official, or over the stadium network to the scoreboard controller.

It also provides a way to deliver multiple feeds from a stadium to the studio, using open fiber connectivity. The same fabric could carry live realtime feeds and also transfers from the remote server network. Because IP is inherently bidirectional, the studio could use the same path to send archive material to the site.

Any such device would need to support a range of codecs to meet the production’s needs. It should provide transparent quality for contribution, particularly as viewers are becoming ever more demanding now that delivering Ultra HD is becoming a reality.

It should also achieve this with minimal latency, because consumers expect live events, and particularly sports, to actually be live. Finally, it should acknowledge the realities of remote production, where truck space is severely limited. Solutions such as Comprimato’s Live transcoder offer multiple codecs and the ability to deliver as many as 70 full HD streams from a single 1U server, with an end-to-end latency of less than 700 ms.

IP connectivity provides new flexibility in both contribution and distribution circuits, allowing producers to create more targeted, engaging content while keeping quality carefully controlled by bringing all the processing in house. Software systems that run on standardized hardware benefit from the IT hardware industry's continuous improvements in performance, and regular software updates allow new functionality to be added quickly, securely and cost-effectively.

Jiří Matela is CEO of Comprimato.