Elements of Enterprise COTS Servers

Karl Paulsen

STARBUCKS, WASHINGTON: Performance, capacity and reliability. These are not exactly the “three Rs” of the past, but they do name three of the prime metrics used in selecting many of the new IT-based media server and storage components.

It’s no longer a surprise that commercial off-the-shelf (COTS) servers continue to replace purpose-built black-box hardware for many media servicing applications. While the choices seem to be many, in actuality there is only a modest set of mainstream COTS IT servers that will fulfill the enterprise-class requirements expected by broadcast operations and production services organizations.

As a result of a paradigm shift to COTS over the past decade, end users must now make choices not only about the manufacturer and type of server but also about the specific components that go inside the server itself. This takes the concept of “off-the-shelf ‘stock IT servers’” and moves it into the realm of “customized to order” (sometimes termed CTO), whereby performance, capacity and reliability are governed by several technology contributors.

ENTERPRISE CLASS SERVERS

To meet these three objectives, CTO enterprise-class servers must be engineered to provide higher availability and a level of serviceability that outpaces more conventional, standard business-class servers. More succinctly, by definition, an enterprise server is a class of computer containing programs that collectively serve the needs of an enterprise as opposed to a single department, user or specialized application.

Others use the term enterprise server to describe a super-program that runs under the operating system (OS) and services application programs or specialized services that, in turn, orchestrate (or run) other computer activities.

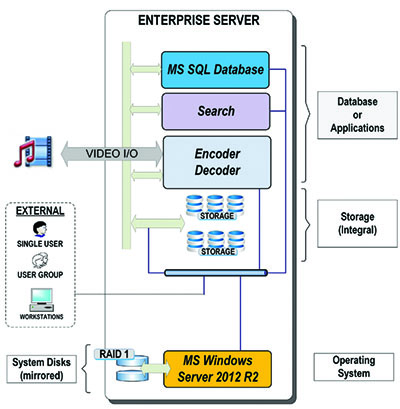

Fig. 1: Example components that typically might be installed in servers used for media services applications.

When this class of server is configured, users will be tasked with determining the specific component sets, including the processor, the memory, the I/O and the mass storage medium. The selection process is no longer limited to simply which processor; users must decide how many processors, running at what speed and with how many cores, are needed to meet specific operating environments.

Selecting the main memory type and amount (16 GB, 24 GB, 48 GB, etc.) is probably the most easily understood metric. Nonetheless, it becomes more confusing when a server uses both flash (solid-state drives) and dynamic random access memory (DRAM) to increase operational throughput. DRAM variations include video RAM (VRAM), window RAM (WRAM), fast page mode (FPM or FPRAM), extended data out (EDO), burst EDO (BEDO), synchronous graphics RAM (SGRAM) and synchronous DRAM (SDRAM).


However, when it comes to the mass-storage components (hard drives and arrays), users face a plethora of choices that can make or break the functionality (read that as “performance”) of the server, depending on its designated applications. Storage systems may be integral to a specific server, shared among a collection of servers (as in a cluster), or may be the centralized storage component that provides data to most, if not all, of the servers and users in the system. Thus, we’re finding that storage systems are now available in an ever-increasing set of drive types, drive capacities, array formats (RAID levels) and in various access and/or protection configurations.
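To make the capacity-versus-protection trade-off among RAID levels concrete, here is a minimal Python sketch. It assumes equal-size drives and ignores hot spares, controller overhead and file-system formatting, so its numbers are upper bounds rather than vendor figures; the function and class names are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaidProfile:
    level: str
    usable_tb: float
    failures_tolerated: int  # drive failures the array is guaranteed to survive


def raid_profile(level: str, drives: int, drive_tb: float) -> RaidProfile:
    """Approximate usable capacity and guaranteed fault tolerance.

    Simplified model: equal-size drives, no hot spares, no formatting overhead.
    RAID 10 is modeled as striped mirror pairs, so only one failure is
    guaranteed survivable (more only if failures land in different pairs).
    """
    if level == "0":
        min_drives, data_drives, tolerated = 1, drives, 0
    elif level == "1":
        min_drives, data_drives, tolerated = 2, 1, drives - 1
    elif level == "5":
        min_drives, data_drives, tolerated = 3, drives - 1, 1
    elif level == "6":
        min_drives, data_drives, tolerated = 4, drives - 2, 2
    elif level == "10":
        if drives % 2:
            raise ValueError("RAID 10 needs an even number of drives")
        min_drives, data_drives, tolerated = 4, drives // 2, 1
    else:
        raise ValueError(f"unsupported RAID level: {level}")
    if drives < min_drives:
        raise ValueError(f"RAID {level} needs at least {min_drives} drives")
    return RaidProfile(level, data_drives * drive_tb, tolerated)


# Same eight 4 TB drives, four different array formats.
for lvl in ("0", "5", "6", "10"):
    p = raid_profile(lvl, drives=8, drive_tb=4.0)
    print(f"RAID {p.level}: {p.usable_tb:.0f} TB usable, "
          f"survives {p.failures_tolerated} drive failure(s)")
```

With eight 4 TB drives this reports 32, 28, 24 and 16 TB usable for RAID 0, 5, 6 and 10 respectively: more protection costs capacity. The sketch deliberately says nothing about rebuild time or write performance, which also differ by level.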

Costs will often take precedence over one or more of the server’s metrics. Any approach that pares back the server’s capabilities should be taken with caution, as a “cost-effective” server will deliver only what it offers. Cheaper obviously means less of something: less performance, less capacity or less reliability. Demanding applications, such as those in media playout and media management, require high-availability (HA) servers. Achieving HA means you need a suitable quantity and type of onboard processors. You also must have sufficient I/O capability, which in turn calls for maximizing memory (both DRAM and mass storage such as spinning disks or solid-state arrays), a high-performance internal backbone (i.e., internal networking) and external data interchange capabilities. External interchange may be addressed by moving to 10 GbE (or above) for the LAN and to Fibre Channel interconnections for storage; the internal backbone is a matter of the design and class of the server itself.
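A quick way to reason about whether a 10 GbE LAN link is sufficient for a media server is to divide the usable payload rate by the per-stream bitrate. The sketch below assumes a flat headroom percentage (70 percent by default, an arbitrary planning margin for protocol overhead and bursts, not a measured figure) and example stream bitrates; substitute measured numbers for real planning.

```python
def max_streams(link_gbps: float, stream_mbps: float, headroom_pct: int = 70) -> int:
    """Whole streams of a given bitrate that fit on one network link.

    headroom_pct is an assumed share of line rate treated as usable payload
    after protocol overhead and burst margin; it is a planning knob, not a spec.
    """
    if not 0 < headroom_pct <= 100:
        raise ValueError("headroom_pct must be in (0, 100]")
    if stream_mbps <= 0:
        raise ValueError("stream_mbps must be positive")
    usable_mbps = link_gbps * 1000 * headroom_pct / 100
    return int(usable_mbps // stream_mbps)


print(max_streams(10, 50))    # -> 140 (10 GbE, 50 Mb/s streams)
print(max_streams(10, 100))   # -> 70  (same link, 100 Mb/s streams)
```

Doubling the per-stream bitrate halves the count, which is why codec and bitrate choices feed directly into the LAN sizing decision.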

DATABASE SERVERS

Most media management systems require a database server platform. Database servers used in media management applications (e.g., SQL clusters or equivalents) have fairly consistent requirements and are, therefore, familiar to most IT-savvy administrators and product providers.

However, handling the demands of multiple streams of isochronous file-based content delivery to software-based encoders or decoders is truly understood by only a few, as it is a specialized class of constraints that bears little relationship to conventional database or transactional computing.
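One way to see why isochronous delivery differs from transactional work is a simplified round-robin model often used for continuous-media servers: in each fixed-length round, every stream must receive a full round’s worth of data, and each read costs a positioning overhead plus transfer time. If the total service time exceeds the round length, some decoder buffer will eventually underrun, no matter how fast individual requests are. The sketch assumes a constant per-request overhead and a single aggregate storage rate; real arrays vary, so the parameters are illustrative.

```python
from typing import Sequence


def round_is_feasible(stream_bps: Sequence[float], round_s: float,
                      storage_bps: float, overhead_s: float) -> bool:
    """Simplified round-based admission test for isochronous streams.

    Each round of round_s seconds must deliver round_s seconds of data to every
    stream. Serving stream i costs overhead_s (seek / request setup, assumed
    constant) plus the time to transfer bps_i * round_s bits at storage_bps.
    """
    service_s = sum(overhead_s + bps * round_s / storage_bps for bps in stream_bps)
    return service_s <= round_s


# 50 Mb/s streams, 1 s rounds, 2 Gb/s storage path, 10 ms per-request overhead.
print(round_is_feasible([50e6] * 20, 1.0, 2e9, 0.010))  # True: about 0.70 s of work per round
print(round_is_feasible([50e6] * 30, 1.0, 2e9, 0.010))  # False: about 1.05 s of work per round
```

The 30-stream case fails even though its aggregate demand (30 × 50 Mb/s = 1.5 Gb/s) is below the 2 Gb/s storage rate, because per-request overhead consumes the remaining time. Transactional benchmarks rarely expose this kind of timing constraint.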

You need to be absolutely certain the core application(s) deployed on the servers are qualified as to the make, year and version (e.g., MS SQL Server 2012) and the same goes for the operating system (e.g., MS Windows Server 2012 R2).

Servers used for search purposes (sometimes called “indexers” or “search engines”) may require a specific operating system and even specific hardware components. Unlike activities confined strictly to a database server, indexer activities are seldom transaction-based. Indexers are constantly sifting through metadata to find specific content based on Boolean search terms.
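The Boolean matching that indexers perform is typically built on an inverted index, which maps each metadata term to the set of assets containing it, so that AND, OR and NOT become set intersection, union and difference. The toy class below is a minimal sketch of that idea under simple assumptions (whitespace tokenization, lower-casing, everything held in memory); commercial search engines add stemming, ranking and on-disk index structures, and the class and asset names here are hypothetical.

```python
from collections import defaultdict


class MetadataIndex:
    """Toy inverted index mapping metadata terms to asset IDs."""

    def __init__(self):
        self._postings = defaultdict(set)  # term -> {asset_id, ...}
        self._assets = set()

    def add(self, asset_id, metadata_text):
        self._assets.add(asset_id)
        for term in metadata_text.lower().split():
            self._postings[term].add(asset_id)

    def _ids(self, term):
        # .get() avoids inserting empty entries into the defaultdict.
        return self._postings.get(term.lower(), set())

    def search(self, all_of=(), any_of=(), none_of=()):
        """AND over all_of, OR over any_of, NOT over none_of."""
        result = set(self._assets)
        for term in all_of:
            result &= self._ids(term)
        if any_of:
            result &= set().union(*(self._ids(t) for t in any_of))
        for term in none_of:
            result -= self._ids(term)
        return result


idx = MetadataIndex()
idx.add("clip-001", "mayor press conference city hall")
idx.add("clip-002", "mayor parade downtown")
idx.add("clip-003", "city council budget vote")
print(sorted(idx.search(all_of=["mayor"], none_of=["parade"])))  # ['clip-001']
print(sorted(idx.search(any_of=["parade", "budget"])))           # ['clip-002', 'clip-003']
```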

Indexers may run parallel computation threads that query databases and storage metadata at very high speeds. Once a set of matches is found, the indexer itself might also be the unit that retrieves the media (content) matching the search criteria, serving it up to the content distribution platform for other actions such as editing, transcoding, etc.
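Parallel querying of this kind can be sketched as a fan-out: send the same term to several metadata sources at once and merge the hits. The sketch assumes each source is a hypothetical in-memory shard (a dict of asset ID to metadata terms) standing in for a real database or storage-metadata query. Python threads suit this pattern when the real queries are I/O-bound network calls; CPU-bound matching would call for processes or a compiled engine.

```python
from concurrent.futures import ThreadPoolExecutor


def query_shard(shard, term):
    """Hypothetical shard query: shard maps asset ID -> set of metadata terms."""
    return {asset_id for asset_id, terms in shard.items() if term in terms}


def parallel_search(shards, term, max_workers=4):
    """Query every shard concurrently and merge the matching asset IDs."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        hits = list(pool.map(lambda shard: query_shard(shard, term), shards))
    return set().union(*hits)


database_shard = {"clip-001": {"mayor", "city"}, "clip-002": {"parade"}}
storage_shard = {"clip-104": {"mayor", "vote"}}
print(sorted(parallel_search([database_shard, storage_shard], "mayor")))
# ['clip-001', 'clip-104']
```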

An alternative operational model may relegate that serving task to a media migration server, which parses that information, connects to central storage, retrieves the files (or proxies) and then directs the content to a playout decoder, to an editor or to another function in the system such as archive or externally to VoD or content delivery.

Search engine applications used in a media asset management (MAM) system’s index server may be provided by third-party developers. In such cases, the MAM vendor integrates the search engine into its own media manager application and runs it on one or more dedicated indexing servers.

As with the SQL server, the indexer server’s operating system must be selected based on the search engine’s software requirements. Search activities typically involve high volumes of processes, especially during peak demand, when editors or journalists are looking for clips that will be used in creating one or more versions of a compilation.

Thus, the selection criteria (i.e., the OS’s make, series and revision numbers) and the central computer’s core makeup are usually set by the search engine developer and specified for a minimum CPU type, often with dual CPUs, and a specific minimum number of cores per CPU.
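Checking a proposed server against a search engine developer’s minimums is simple enough to automate. The sketch below compares OS version, CPU count and cores per CPU; the dual-CPU, eight-core minimum and the single qualified OS string are hypothetical placeholders to be replaced with the developer’s published requirements. The same pattern applies to qualifying database and OS versions on SQL servers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MinimumSpec:
    qualified_os: frozenset  # exact make/series/revision strings the vendor qualified
    cpus: int
    cores_per_cpu: int


@dataclass(frozen=True)
class ServerConfig:
    os_version: str
    cpus: int
    cores_per_cpu: int


def spec_gaps(server: ServerConfig, spec: MinimumSpec) -> list:
    """Return a human-readable list of shortfalls; an empty list means compliant."""
    gaps = []
    if server.os_version not in spec.qualified_os:
        gaps.append(f"OS {server.os_version!r} is not on the qualified list")
    if server.cpus < spec.cpus:
        gaps.append(f"needs {spec.cpus} CPUs, has {server.cpus}")
    if server.cores_per_cpu < spec.cores_per_cpu:
        gaps.append(f"needs {spec.cores_per_cpu} cores per CPU, has {server.cores_per_cpu}")
    return gaps


# Hypothetical minimums: dual CPUs, 8 cores each, one qualified OS build.
spec = MinimumSpec(frozenset({"MS Windows Server 2012 R2"}), cpus=2, cores_per_cpu=8)
print(spec_gaps(ServerConfig("MS Windows Server 2012 R2", cpus=2, cores_per_cpu=6), spec))
# ['needs 8 cores per CPU, has 6']
```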

There are numerous other subsystems that make up the enterprise server. Cloud services often build out massive sets of servers, each with high availability and multiple CPUs with 8, 12, 16 or more cores each. The cloud services allocate individual servers’ performance according to the demands set by specific work-task requirements.

Cloud services throttle performance up or down based on the needs of the applications being serviced at that particular moment. This is why the cloud makes better sense for some, especially when usage and demand are unpredictable and would otherwise be constrained by the finite set of servers’ CPUs and cores available in the user’s closed facility environment.
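Throttling of this kind is commonly implemented as a proportional rule: scale the server count by the ratio of observed to target utilization, round up, then clamp to configured limits. This has the same shape as the documented Kubernetes Horizontal Pod Autoscaler formula; the function below is a minimal sketch with hypothetical limits, and production autoscalers add cooldowns and smoothing so that capacity does not oscillate.

```python
def desired_servers(current: int, utilization_pct: int, target_pct: int,
                    min_servers: int = 1, max_servers: int = 64) -> int:
    """Proportional scaling: ceil(current * utilization / target), clamped.

    Integer percentages keep the ceiling exact (no floating-point rounding).
    """
    if current < 1 or not 0 < target_pct <= 100 or utilization_pct < 0:
        raise ValueError("invalid scaling inputs")
    desired = -(-current * utilization_pct // target_pct)  # integer ceiling division
    return max(min_servers, min(max_servers, desired))


print(desired_servers(4, utilization_pct=90, target_pct=60))  # 6: scale out
print(desired_servers(4, utilization_pct=20, target_pct=60))  # 2: scale in
```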

Other forms of servers are finding their way into the world of media asset management solutions, including blade systems, which are growing in popularity for media processing applications and for the control and management of entire systems. Blades are smaller slot-based servers that can be dynamically allocated or added without having to buy an entire new server in a dedicated single “pizza-box” chassis configuration.

Blades consist essentially of CPUs, memory and I/O. Blades share a common chassis, common data highways and a common (larger) power supply, making them more cost-effective and space-effective to expand as the user’s service needs grow.

Not to be left out are the server’s storage requirements, but those must wait for a later installment.

Karl Paulsen, CPBE and SMPTE Fellow, is the CTO at Diversified Systems. Read more about other storage topics in his book “Moving Media Storage Technologies.” Contact Karl at kpaulsen@divsystems.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.