Designing the IP-Based Media Network

ALEXANDRIA, VA.—Broadcast facilities are now commencing what many believe will be a global transition from current digital (SDI) infrastructures to an all “IP-based (network)” facility. Not since the migration from analog to digital in the 1990s, has the industry experienced such a change.

On the surface, the transition seems logical, expected, and maybe even straightforward, given the level of IP/IT-integration already present at many facilities. Yet under the hood, both IT and broadcast technical professionals are in for a paradigm shift in concept, facility design and support practices.

This two-part article discusses the issues associated with next-generation IP-based facility design. It does not detail the transport of media over long distances, nor the practices involved with file-based workflows, storage transfers or even OTT, all of which use components of IP in an IT domain. Instead, this article explores what broadcast and IT professionals will need to know about their future commitments to next-gen network-centric infrastructures.

CURRENT IP MEDIA PRACTICES

Professional media environments provide multiple means for moving video (i.e., compressed files or streaming media) from point A to point B. Those points might be across the campus, between cities, at arbitrary distribution points, or anywhere in between. In most applications, the audio/video is transported in a compressed format, of which there are dozens available. Some formats are highly compressed for Internet delivery; others are mildly compressed at contribution quality, e.g., from sporting venues to studios or for production integration prior to broadcast.

Manufacturers’ products for compressed media transport prepare the data for the subsequent stages in the content production chain. Few, if any, provide a means to transport uncompressed, high-bit-rate content end-to-end over the network. This article doesn’t address the reasons for the absence of this form of transport.

With that preface, we’ll focus on the latest applications for high bandwidth, real-time, live broadcast production using IP over a media-centric network.

HIGH BIT RATE–UNCOMPRESSED VIDEO TRANSPORT

Broadcast production is beginning to use new capabilities for the transport and manipulation of high bit rate (HBR), uncompressed (UC) signals over an IP-network topology. These applications are specifically for live/real-time production activities. Recent SMPTE standards (ST 2022-6 and -7, and ST 2110, which was published at year-end 2017), alongside industry forums and initiatives, are driving new technological efforts that will reshape the broadcast facility.
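To give a sense of what “high bit rate” means here, the short calculation below estimates the active-picture data rate of one uncompressed HD signal. It is only a sketch: the function name `active_video_bit_rate` is made up for illustration, the 1080p/59.94, 10-bit 4:2:2 format is an assumed example, and the figure ignores blanking, audio, ancillary data and any RTP/UDP/IP packet overhead.

```python
from fractions import Fraction


def active_video_bit_rate(width, height, bits_per_pixel, frame_rate):
    """Return the active-picture bit rate in bits per second (no packet overhead)."""
    return width * height * bits_per_pixel * frame_rate


# Assumed example format: 1920x1080 at 60000/1001 frames per second.
# 10-bit 4:2:2 sampling averages 20 bits per pixel
# (10 bits of luma plus 10 bits of alternating chroma).
rate = active_video_bit_rate(1920, 1080, 20, Fraction(60000, 1001))
print(f"{float(rate) / 1e9:.3f} Gb/s")  # prints "2.486 Gb/s"
```

A single signal of this kind already consumes roughly a quarter of a 10 Gb/s link, which is why uncompressed transport places very different demands on the network than compressed streams do.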

“Internet Protocol” (IP) network technologies are already in use at many media facilities. The approaches are applicable to file-based workflows, data migration, storage and archive, automation and facility command-and-control. Previously, these weren’t necessarily called “IP.” Once the capabilities for UC/HBR video transport came about, that nomenclature evolved. Now, it seems, “everything” is IP, irrespective of how the term is applied or to which application.

With that said, we’ll set the stage for what is happening in the future, and that ‘future’ is now.

RULES OF ENGAGEMENT—IT CHANGES EVERYTHING

IP is a “set of rules (‘protocols’) which govern the format of the data sent over the internet.” For broadcast or studio facilities, “internet” is more appropriately called the “network.” Essentially, the application of certain constrained IP technologies will fundamentally address the facility infrastructure changes associated with studio/live media production and its content-chain processes going forward.

Designing and building an IP facility will require a renewed technological approach to IT networking, accompanied by a new mindset compared to that for traditional SDI facilities. To comprehend what it takes to design, build and operate the IP-based professional media facility, it is necessary to understand what “real-time” (RT) IP is and how it differs from conventional SDI implementations and from file-based workflows or data storage.

One key target in this will be to keep “audio and video (over IP networks) acting precisely the way it does in an SDI-world” without the burdens or constraints of traditional SDI infrastructures. Fundamentally, facilities will leverage the advantages of network-based IP/IT structures for agility, flexibility, cost, and extensibility/expandability.

WHERE ARE THE DIFFERENCES?

SDI, born out of standards from the 1980s, was intended to transport and synchronously switch audio/video from source to destination without disturbances and to mitigate the generational quality issues associated with analog video and audio. The isochronous nature of SDI is straightforward for live, continuous video inside the studio and for long distance transport. However, these capabilities are not as easily accomplished in a file-based, non-real-time, or streaming media environment.

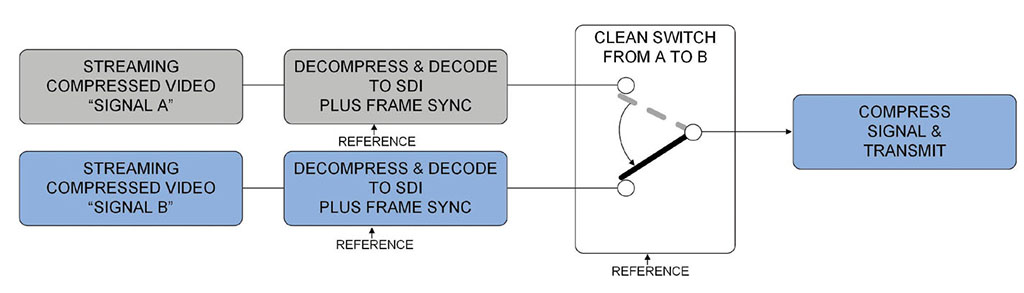

Frame-accurate (undisturbed) transitions with compressed video, while somewhat possible in streaming media, are generally accomplished using peripheral equipment that receives compressed video and decompresses it to a “baseband” (SDI) form, where seamless transitions from A-source to B-source are then completed (Fig. 1). The resulting signals may be compressed again to another format, depending upon the application.

These processes each take time, adding latency to the non-real-time chain. With this added delay, it is impractical for most live applications to cleanly switch sources and maintain timing and synchronization.

Program videos on YouTube or Netflix leverage sophisticated receiver buffering techniques or make use of adaptive bit-rate (ABR) streaming functions to keep their “linear delivery” as seamless as possible to viewers. However, the ability to provide live, glitch-free switching from source to source is curtailed by GOP (group of pictures) issues and compression/decompression latency.
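The GOP constraint can be illustrated with a small sketch. It assumes closed GOPs, in which a decoder can only begin cleanly at an I-frame, and it uses a hypothetical 12-frame “IBBPBBPBBPBB” pattern; the function name `next_clean_switch` is invented for this example and does not come from any product or standard.

```python
def next_clean_switch(frame_types, requested):
    """Return the index of the first I-frame at or after `requested`, or None."""
    for index in range(requested, len(frame_types)):
        if frame_types[index] == "I":
            return index
    return None


# Two hypothetical closed 12-frame GOPs; I-frames sit at indexes 0 and 12.
gop = "IBBPBBPBBPBB" * 2

requested = 5  # the operator asks to cut at frame 5
switch_at = next_clean_switch(gop, requested)
print(switch_at, switch_at - requested)  # prints "12 7"
```

In this simplified model the cut lands seven frames late, before any decode or re-encode delay is counted. Uncompressed video has no such dependency between frames, so a switch can occur on any frame boundary.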

For professional media IP systems, real-time/live signals on a network are transported over isolated, secondary or virtual networks (VLANs). For live and real-time HBR signal transport, new network topology and timing rules must be adhered to. These “rules” (protocols) are defined in SMPTE ST 2110 and/or ST 2022, which include applications of IETF RFCs as defined in the new standards.

DIFFERING DATA TRAFFIC STRUCTURES

Another key point in understanding next-gen facility design is that differing data traffic types are not (generally) mixed on the same VLAN/network. Packet structure and formatting differ according to each data type’s intended uses.

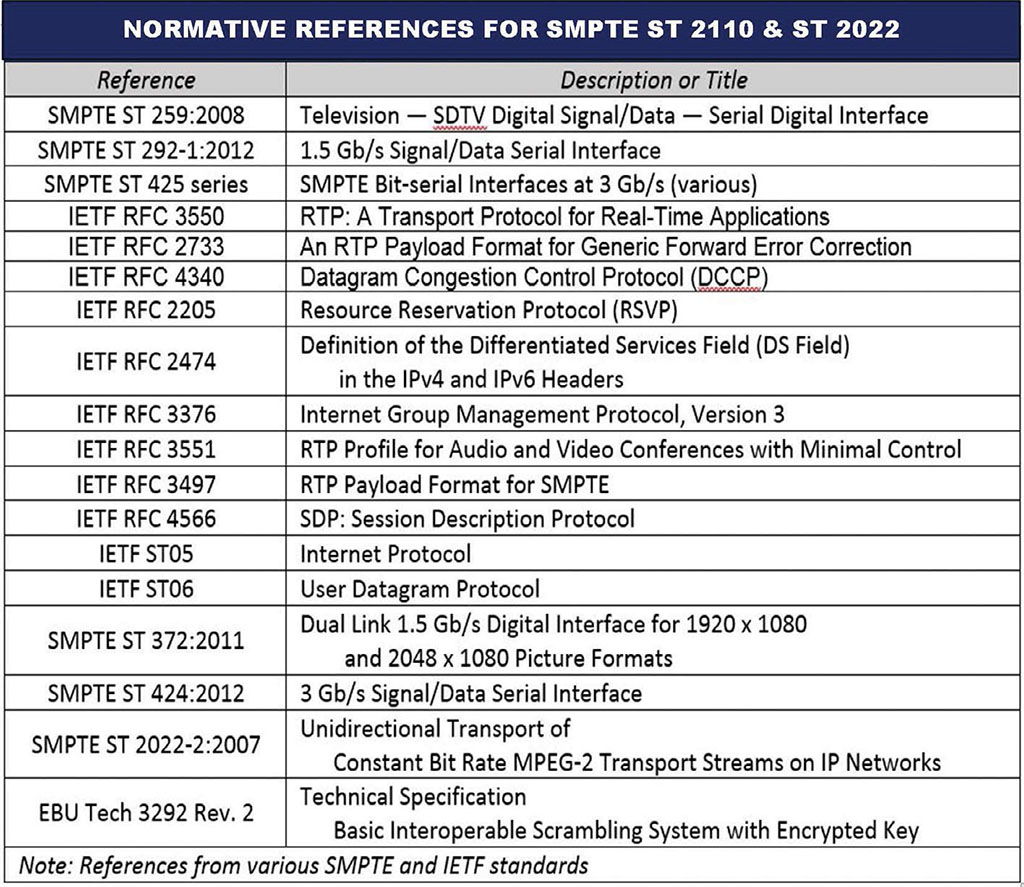

Real-time transport networks are conditioned to carry HBR traffic. Packets from senders (transmitters) are constructed based on IETF RFCs (Fig. 2), such as the Real-time Transport Protocol (RTP) and the Session Description Protocol (SDP), and on supporting IEEE and SMPTE standards. Coupled with conditions identified in the SMPTE ST 2110 or ST 2022 standards, timing, synchronization, latency and flow control are managed so that the transport of media packets over professional media networks is possible.
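As a rough illustration of what an RTP packet carries, the sketch below unpacks the 12-byte fixed header described in IETF RFC 3550. The function name `parse_rtp_header` and the sample values are hypothetical, and the code ignores CSRC lists, header extensions and the media payload formats that the SMPTE standards layer on top.

```python
import struct


def parse_rtp_header(packet: bytes) -> dict:
    """Decode the 12-byte fixed RTP header (RFC 3550) into a dictionary."""
    if len(packet) < 12:
        raise ValueError("an RTP fixed header is 12 bytes long")
    # Network byte order: two single bytes, a 16-bit and two 32-bit fields.
    first, second, sequence, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": first >> 6,           # top two bits, always 2 for RTP
        "padding": bool(first & 0x20),
        "extension": bool(first & 0x10),
        "csrc_count": first & 0x0F,
        "marker": bool(second & 0x80),
        "payload_type": second & 0x7F,
        "sequence_number": sequence,     # lets a receiver detect loss or reordering
        "timestamp": timestamp,          # media clock sample for this packet
        "ssrc": ssrc,                    # identifies the sending source
    }


# Hypothetical packet: version 2, marker bit set, dynamic payload type 96.
sample = struct.pack("!BBHII", 0x80, 0x80 | 96, 1000, 90000, 0x1234ABCD)
header = parse_rtp_header(sample)
print(header["version"], header["payload_type"], header["sequence_number"])
```

The sequence number and timestamp fields are what give receivers the information they need to reorder packets and realign media in time.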

One differentiator from previous IT-like network designs is that HBR traffic must run continuously at non-wavering data bit rates. File-based and streaming media are intended to run, or can run, at variable data rates. The data is likely to be randomly delivered and is often “bursty” in nature. In file-based transport, data from senders need not “arrive” at receiver input(s) in an isochronous (time-bounded) manner. Streaming media acts in a similar fashion, with fluctuating rates that are stabilized at the receiver end. Variations in buffer sizes, connectivity bandwidth and file-data rates are accepted in these applications but cannot be tolerated in real-time HBR applications.
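One way to put a number on “bursty” versus steady delivery is the interarrival jitter estimate from RFC 3550, which smooths the difference between packet spacing at the sender and at the receiver. The sketch below is illustrative only: the function name `interarrival_jitter`, the time units and the two arrival patterns are assumptions made up for this example, not measurements.

```python
def interarrival_jitter(send_times, arrival_times):
    """Running jitter estimate per RFC 3550: J = J + (|D| - J) / 16."""
    jitter = 0.0
    for i in range(1, len(send_times)):
        # D compares the spacing seen at the receiver with the spacing at the sender.
        receiver_gap = arrival_times[i] - arrival_times[i - 1]
        sender_gap = send_times[i] - send_times[i - 1]
        jitter += (abs(receiver_gap - sender_gap) - jitter) / 16.0
    return jitter


# Hypothetical sender emitting one packet every 10 time units.
send = [i * 10 for i in range(6)]

steady = [t + 3 for t in send]    # fixed network delay, spacing preserved
bursty = [3, 13, 40, 41, 42, 53]  # packets held back, then released together

print(interarrival_jitter(send, steady))      # prints 0.0
print(interarrival_jitter(send, bursty) > 0)  # prints True
```

A fixed delay leaves the estimate at zero because packet spacing is preserved, while the held-and-released pattern drives it upward. Real-time HBR networks aim to keep delivery close to the first case.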

In streaming media delivery, occasional interruptions or “buffering” are expected. That is a non-starter for live real-time video, which must be synchronously time-aligned to allow for real-time seamless switching.

Thus, a major difference in facility design is in how the various “network” segments are thought of.

System designs now include distinct considerations for real-time and non-real-time signal flows. Real-time management and flow control associated with the endpoint peripheral devices must be “orchestrated” and will differ from non-real-time delivery. File-transfer, storage and/or file-based workflows will likely reside on a different, less constrained network (segment).

Part one has now introduced broadcast and IT professionals to the differences and conditions associated with IP-centric professional media transport for studio and live operations. In part two, we’ll discuss how next-gen design for IP facilities differs and what engineers will need to know about their future in a multicast IP world.

Karl Paulsen is CTO at Diversified and a SMPTE Fellow. He is a frequent contributor to TV Technology, focusing on emerging technologies and workflows for the industry. Contact Karl at kpaulsen@diversifiedus.com.

Karl Paulsen recently retired as a CTO and has regularly contributed to TV Tech on topics related to media, networking, workflow, cloud and systemization for the media and entertainment industry. He is a SMPTE Fellow with more than 50 years of engineering and managerial experience in commercial TV and radio broadcasting. For over 25 years he has written on featured topics in TV Tech magazine—penning the magazine’s “Storage and Media Technologies” and “Cloudspotter’s Journal” columns.