Netting quality in the video stream


The streaming video era is upon us. Streaming video technology is not only changing how traditional TV is delivered but also transforming business models, the subscriber experience and content availability. It is creating a “video renaissance” for the broadcasting industry.

Why is streaming video technology transformative? What challenges does it solve? What problems may it create? What can I do about it? There are many questions, but one thing is certain: We must find ways to enable content to be consumed not just on the TV, but on other devices and in other locations. Streaming video technologies make that possible and cost-effective.

A streaming video service involves many variables, but at the root of the monetization equation is video quality.

Industry transformer

Streaming video technology has not only enabled almost anyone to provide any type of content directly to the subscriber, it has also changed how we can view the subscriber.

Historically, the video industry developed business models around a “television household.” This lumped all individuals in the home into a single group, even though each had distinct viewing preferences, habits and purchasing power. Also, it was hard to break down the TV household because individuals shared televisions. Similarly, early in the streaming video era, content was primarily delivered to a PC. It, too, was a shared device, although typically used by one individual at a time.

As broadband speeds increased, new streaming technologies were invented. Quality increased, and new devices like tablets and smartphones became commonplace in the home. The TV household was finally broken down into individuals, each with their own device. This evolution enabled the industry to provide content targeted to each individual in the home, in parallel with communal viewing on the TV and PC. Furthermore, advances in game consoles, new set-top boxes and Smart TVs have brought streaming video content to the television. As an industry, we can now technically provide content to any device in most homes, and we can provide it to individuals or to the household.


With these new capabilities, broadcasters, video service providers and content owners can tailor content and business models to each individual alongside traditional video business models. Building these individualized models assumes that we can gather information about how video is being consumed, the quality of the viewing experience and how that quality affects subscriber behavior.

Intentional quality reduction

One of the biggest challenges streaming video solves is providing content to any device over nearly any network. One of the biggest problems it creates is the intentional and unintentional reduction of quality due to limited network or device capabilities. In many cases, the effect on the subscriber is unknown. This unknown must be measured in order to better understand what drives user behavior and how service can improve.

Not only do we need to measure and understand the streaming service quality provided to the end user, we also need to ensure that the source video is high quality. If the source’s quality isn’t measured, then it is junk in and junk out, no matter how the video is encoded and sent over the streaming video network.

Solutions

Various operators, suppliers and standards bodies are working on different aspects of these issues. The ATIS IPTV Interoperability Forum (IIF) is working on a standard to correlate end-user quality and source quality in a streaming video network. Complementary to that, the SCTE is working on video artifact definitions and measurement guidelines. The two resolutions below are based on these developing standards and can also be accomplished using various vendor implementations.

Resolution 1: Junk in, junk out — In any video network, it is critical to measure video quality at the origination. For a cable TV, satellite TV or IPTV operator, the source would be the incoming feed from a programmer before it is re-encoded for transmission over the operator’s network. For a broadcaster, this might be the national feed being sent out from post production, or it could be the incoming feed from a remote. For a local affiliate, this might be the national feed as received and the retransmitted feed with local ads and content. The “junk in, junk out” issue arises anywhere video content is handed off to another party or re-encoded.

For instance, you could be monitoring video at a headend that has already been encoded and re-encoded several times elsewhere in the distribution chain. The network and MPEG layers are typically error-free, yet impairments from that prior encoding remain in the content itself. Only perceptual quality monitoring (PQM) can identify visual artifacts within the content itself and determine video quality. PQM can identify video artifacts such as blockiness, blurriness, jerkiness, black screen, frozen screen, ringing and several other visual artifacts, regardless of cause, and can also characterize content attributes such as colorfulness, scene complexity and motion complexity. Definitions of these artifacts can be found in the SCTE HMS177 draft standard. Most tools that detect these artifacts can also use them to compute a mean opinion score (MOS). A MOS is a score from 1 (bad) to 5 (excellent) of overall video quality, representing how an average viewer would rate the video. Using the artifacts and the MOS, you will know at each point in the video transmission path whether junk in results in junk out.
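How a PQM tool maps detected artifacts to an estimated MOS is vendor-specific and is not described here. What that estimate approximates, though, is a subjective definition: the arithmetic mean of individual viewer ratings on a five-point absolute category rating (ACR) scale. The minimal Python sketch below shows only that definition. The intermediate labels (poor, fair, good) come from the ITU-T ACR scale rather than from this article, and the function names are hypothetical.

```python
# Five-point absolute category rating (ACR) scale; intermediate labels
# follow the ITU-T ACR convention (an assumption, not from the article).
ACR_LABELS = {1: "bad", 2: "poor", 3: "fair", 4: "good", 5: "excellent"}


def mean_opinion_score(ratings: list[int]) -> float:
    """Return the MOS: the arithmetic mean of individual 1-5 viewer ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    for r in ratings:
        if r not in ACR_LABELS:
            raise ValueError(f"rating {r} is outside the 1-5 scale")
    return sum(ratings) / len(ratings)


def label_for(mos: float) -> str:
    """Map a MOS to the nearest ACR label (Python's round() sends ties to even)."""
    return ACR_LABELS[min(5, max(1, round(mos)))]


if __name__ == "__main__":
    panel = [4, 5, 3, 4, 4]  # illustrative ratings from five viewers
    mos = mean_opinion_score(panel)
    print(mos, label_for(mos))
```

A PQM tool estimates this number from the artifacts it detects, so no viewing panel is needed. The subjective definition is simply what the estimate is trying to predict.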

Figure 1: One of the most critical places to apply PQM is prior to encoding and packaging of content (point A).

One of the most critical places to apply PQM is just before content is encoded and packaged for a streaming video service. Monitoring at point “A” provides a quality baseline for the service. (See Figure 1.) The same monitoring is then applied to each bit rate at point “B,” and the results are compared with their counterparts from point “A.” From that comparison, the degradation introduced by encoding can be measured. If the degradation is significant, or the quality was poor at the source, the content should be halted or at least an alarm sent to operations. The quality of each bit rate and segment can then be cataloged in a database for correlation with each view. (Measurement at point “B” in Figure 1 can be before or after the origin server, but before the CDN.)
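The point A to point B comparison can be sketched in a few lines. The sketch assumes a PQM tool has already produced a MOS for each source segment (point A) and for each encoded rendition of that segment (point B). The two thresholds and all names are hypothetical placeholders for whatever policy operations would actually set.

```python
from dataclasses import dataclass

# Hypothetical policy thresholds; real values are an operations decision.
MIN_SOURCE_MOS = 3.5   # source (point A) quality below this is "junk in"
MAX_DEGRADATION = 1.0  # largest acceptable MOS drop from point A to point B


@dataclass
class SegmentQuality:
    """One catalog row: the quality of one segment at one bit rate."""
    bitrate_kbps: int
    segment: int
    source_mos: float   # measured at point A, before encoding and packaging
    encoded_mos: float  # measured at point B, per bit rate
    degradation: float  # source_mos - encoded_mos
    alarm: bool


def compare_points(source_mos: dict[int, float],
                   encoded_mos: dict[tuple[int, int], float]) -> list[SegmentQuality]:
    """Compare each encoded rendition (point B) with its source segment (point A).

    encoded_mos is keyed by (bitrate_kbps, segment). The returned rows are
    what would be stored for later correlation with each view.
    """
    catalog = []
    for (bitrate, segment), b_mos in sorted(encoded_mos.items()):
        a_mos = source_mos[segment]
        drop = a_mos - b_mos
        # Alarm if the source was already poor or encoding degraded it too far.
        alarm = a_mos < MIN_SOURCE_MOS or drop > MAX_DEGRADATION
        catalog.append(SegmentQuality(bitrate, segment, a_mos, b_mos,
                                      round(drop, 2), alarm))
    return catalog


if __name__ == "__main__":
    source = {0: 4.6, 1: 4.5}
    encoded = {(3000, 0): 4.4, (3000, 1): 4.3, (800, 0): 3.9, (800, 1): 3.2}
    for row in compare_points(source, encoded):
        print(row)
```

With these illustrative numbers, only the 800 kbps rendition of segment 1 (a drop of 1.3) raises an alarm. A real deployment would write the rows to the quality database rather than print them.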

Resolution 2: End-user quality of experience correlation and potential impacts — From the first resolution, we know the baseline quality of video prior to encoding and packaging for a streaming video service. We also know the quality of each bit rate by segment, so we know how much degradation is imposed when the content is encoded to a lower bit rate. Using this information and telemetry from the streaming video client, the quality for each subscriber can be calculated, per service, and then correlated with user behavior.

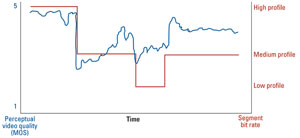

The bandwidth and network quality for each subscriber may change over time, and the client adapts to these conditions by requesting different bit rates and using various other techniques. Calculating each subscriber’s quality is straightforward when the client uses the new MPEG DASH standard. (If the client uses another protocol, such as HLS or Smooth Streaming, client metrics reporting may not exist, or it may be proprietary.) The MPEG DASH standard includes an option for the client to report telemetry and metrics, including which segment was requested, when it played and, if it did not play, the reason. Using this information from point “C” in Figure 1, we can look up the quality measured at point “B” for each segment that was played. The user’s quality can then be calculated over the entire viewing period. (See Figure 2.)
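The lookup described above amounts to a join between client playback reports (point C) and the per-segment catalog from point B, followed by a pooling step. The record layout below is a hypothetical simplification, not the DASH metrics schema. The time-weighted mean is one assumed pooling choice among several.

```python
from dataclasses import dataclass


@dataclass
class PlayedSegment:
    """One played segment as reported by the client (hypothetical layout)."""
    bitrate_kbps: int
    segment: int
    seconds_played: float  # may be less than the segment length if playback stopped


def session_quality(played: list[PlayedSegment],
                    catalog: dict[tuple[int, int], float]) -> float:
    """Time-weighted mean MOS over one viewing session.

    catalog maps (bitrate_kbps, segment) to the MOS measured at point B.
    """
    total_time = sum(p.seconds_played for p in played)
    if total_time <= 0:
        raise ValueError("no playback time reported")
    weighted = sum(catalog[(p.bitrate_kbps, p.segment)] * p.seconds_played
                   for p in played)
    return weighted / total_time


if __name__ == "__main__":
    catalog = {(3000, 0): 4.4, (3000, 1): 4.3, (800, 2): 3.2}
    session = [PlayedSegment(3000, 0, 4.0),
               PlayedSegment(3000, 1, 4.0),
               PlayedSegment(800, 2, 2.0)]  # client dropped to 800 kbps, then stopped
    print(round(session_quality(session, catalog), 2))
```

Weighting by playback time keeps a partially played segment from counting as much as a fully played one. Other pooling strategies, such as giving extra weight to the worst moments, are equally plausible and are not implied by the article.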

Figure 2. The MPEG DASH standard provides an option for the client to report telemetry and metrics (point C). This information is used to calculate a user’s experience over an entire viewing period.

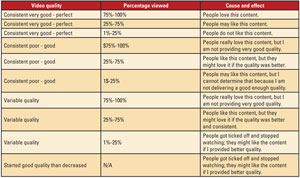

In addition to each subscriber’s video quality, the average viewing quality across all subscribers can be calculated as this data is gathered from all the clients. This data shows how each subscriber is receiving the streaming video service, not just from a bandwidth perspective, as many companies currently look at it, but from a true perceived video quality perspective. This matters most when looking not at a single viewing session, movie or clip, but over a longer period. Users who consistently receive high video quality are more likely to use the service. Users who consistently receive low quality are likely to behave differently, and users whose quality is inconsistent may behave differently again. The quality information can also be correlated with the percentage of a fixed video asset (such as a TV episode, user-generated clip or movie) that was completed, to understand the potential cause and effect on users. (See Table 1.) Similar conclusions can be drawn from how long someone watches a live broadcast or streaming channel.
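The aggregation steps above can be sketched as follows, assuming each subscriber already has a list of per-session MOS values from a calculation like the one for point C. The sketch averages quality across all subscribers, groups each subscriber as consistently high, consistently low or inconsistent, and computes a Pearson correlation between per-view MOS and completion percentage. The grouping thresholds are hypothetical. A strong correlation only flags a relationship worth investigating. It does not by itself prove cause and effect.

```python
from math import sqrt
from statistics import mean, pstdev

# Hypothetical grouping thresholds.
HIGH_MOS = 4.0   # mean session MOS at or above this counts as "high"
MAX_STDEV = 0.5  # spread above this counts as "inconsistent"


def classify(session_mos: list[float]) -> str:
    """Group a subscriber by the level and consistency of session quality."""
    if pstdev(session_mos) > MAX_STDEV:
        return "inconsistent"
    return "consistently high" if mean(session_mos) >= HIGH_MOS else "consistently low"


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length series with at least two points")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / (sx * sy)


if __name__ == "__main__":
    subscribers = {
        "a": [4.3, 4.5, 4.2],
        "b": [2.8, 3.0, 2.9],
        "c": [4.5, 2.5, 4.0],
    }
    all_sessions = [m for sessions in subscribers.values() for m in sessions]
    print("average across subscribers:", round(mean(all_sessions), 2))
    for name, sessions in subscribers.items():
        print(name, classify(sessions))

    # One point per view of a fixed asset: session MOS vs. fraction completed.
    view_mos = [4.4, 3.1, 2.5, 4.0]
    completion = [0.95, 0.60, 0.30, 0.85]
    print("correlation:", round(pearson(view_mos, completion), 3))
```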

Table 1: This shows the potential cause-and-effect range based on quality and percentage viewed.

Without correlating video quality with the percentage viewed, it is difficult to understand what drives user behavior. Keep in mind that quality is not always correlated with bit rate, since some content is harder to encode than other content, and encoding difficulty may change throughout a program, asset or movie. For instance, suppose the video quality on a streamed channel decreased because the content became more complex (e.g., going from a newsroom to a sports event), even though the bit rates remained the same. Knowing that subscribers stopped watching at that point would be an important data point. Why did they stop? Perhaps not because of the type of content; they may have wanted to watch the sporting event, but the degraded quality convinced them otherwise.
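The newsroom-to-sports scenario can be expressed as a simple rule over a channel timeline. The rule flags an interval when the bit rate stayed the same, the measured MOS fell sharply, and the audience shrank noticeably. The sketch assumes per-interval viewer counts can be aggregated from client telemetry. The thresholds and names are hypothetical, and a flagged interval is a lead for investigation, not proof that quality caused the drop-off.

```python
from dataclasses import dataclass

# Hypothetical thresholds.
MOS_DROP = 0.5      # MOS fall between consecutive intervals that counts as a drop
VIEWER_DROP = 0.10  # fraction of the audience lost that counts as a drop-off


@dataclass
class Sample:
    """One interval of a streamed channel (hypothetical aggregation)."""
    minute: int
    bitrate_kbps: int
    mos: float    # quality measured at point B for this interval
    viewers: int  # concurrent viewers reported by clients (point C)


def quality_driven_dropoffs(samples: list[Sample]) -> list[int]:
    """Return minutes where quality fell at an unchanged bit rate and viewers left."""
    flagged = []
    for prev, cur in zip(samples, samples[1:]):
        same_rate = cur.bitrate_kbps == prev.bitrate_kbps
        quality_fell = prev.mos - cur.mos > MOS_DROP
        lost = (prev.viewers - cur.viewers) / prev.viewers if prev.viewers else 0.0
        if same_rate and quality_fell and lost > VIEWER_DROP:
            flagged.append(cur.minute)
    return flagged


if __name__ == "__main__":
    timeline = [
        Sample(0, 3000, 4.5, 1000),  # newsroom
        Sample(1, 3000, 4.4, 990),
        Sample(2, 3000, 3.4, 820),   # cut to sports: harder to encode, same bit rate
        Sample(3, 1500, 3.0, 700),   # bit rate changed, so not flagged by this rule
    ]
    print(quality_driven_dropoffs(timeline))
```

Requiring an unchanged bit rate is what separates this case from ordinary adaptive switching. A bandwidth-only view would see nothing unusual at minute 2.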

Conclusion

Streaming video is enabling a video renaissance in which we, as an industry, can provide endless types of content to almost any device, anytime, anywhere, and do so economically. However, with this flexibility, video quality becomes much more variable than in the traditional model of delivering HD/SD to a TV. Understanding the quality of delivered video is critical to any video service provider, traditional or streaming. In a streaming video service, it becomes critical to understand how quality affects subscriber behavior. From that, we can understand which content is liked more than other content, and why. More emphasis can then be placed on those video assets for monetization.

—Jeremy Bennington is senior vice president of strategic development, Cheetah Technologies.