Storage and workflow

Content creation and distribution have moved from a tape-only infrastructure to one that must now support multiple hard media types, including portable and fixed disks, as well as solid-state memory. All of this requires increasing amounts of mass storage, and with that, more sophisticated and complex workflows. With more content being handled in file-based form, emphasis must now be placed on file storage in the broadcast plant.

Integrated network storage

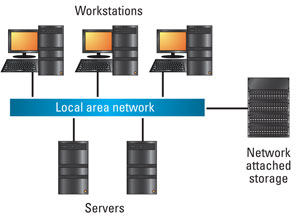

File-based data and digital content today can be stored and accessed using essentially three types of architectures, all currently based on the hard-disk drive (HDD): direct attached storage (DAS), network attached storage (NAS) and storage area networks (SAN). In a DAS system, storage devices are directly connected to, and often physically integrated within, a workstation or other client system; one form of DAS is a set of hard drives within a workstation, not necessarily connected to a network.

With a DAS system, the storage devices cannot be directly shared with other clients, making efficient workflows hard to implement: Management systems are complex, and many copies of the content will exist in different places, making version control extremely difficult. However, when combined with a fast interface, DAS systems can provide fast data access, because each storage device specifically supports the system in which it is integrated. For this reason, DAS has long been the preferred solution in post-production.

But the use of DAS in broadcast workflows is rapidly declining, because higher-bandwidth data networks have now made shared network storage practical, resulting in improved efficiency and productivity. Because content stored on DAS cannot be shared for collaborative work and is difficult to coordinate and manage, content managers have been switching to NAS, which allows stored content to be shared simultaneously among multiple clients. NAS also has the advantage of using cost-effective, high-speed Ethernet interconnectivity, further improving workflows.

A storage area network (SAN) is a dedicated network that connects disk storage devices directly to managing servers, using block-storage protocols; file management is left up to the controlling server. SANs also provide a way to readily access shared content, using specialized high-speed optical fiber or GigE networking technology. With SANs, access to data is at the block level, similar to the physical-layer storage on a hard-disk drive. SANs offer the benefit of fast, real-time content systems, but that performance comes at a financial cost. To provide wide access to the content, SANs have often been combined with NAS, with the latter filling the need for near-online storage and content archived in a lower-cost, slower-access storage tier. A SAN also requires each client system that accesses data on the storage network to have specialized network adaptors and a third-party file system installed. This makes interoperability with other, more common file systems a challenge.

Figure 1. NAS storage consolidates workflow assets.

In contrast, network attached storage, as shown in Figure 1, operates at the file level, providing both storage and a file system. Because of the rapid drop in the cost of building and maintaining GigE networks, as well as the increased performance of NAS, many content producers and distributors are now moving toward NAS technology for content storage. In the past, to assure 100-percent reliability, users would rely on dual-redundant DAS or SAN servers with redundant network interfaces, so there would be no single point of failure. But one of the problems with DAS/SAN storage is scalability: when content managers need more storage, higher-speed access or more supported users, these legacy systems most often require redundant capacity, increased complexity or completely new build-outs.

A NAS system, by comparison, can often scale easily, keeping up with performance and storage needs and allowing for growth, while combining the convenience of a single expandable file system with ease of use and uncomplicated management. NAS servers ease system administration, improve file sharing and reliability, and provide high-speed performance. Because they support multiple protocols, Unix and Windows NT users can share stored files without requiring any specialized software on the clients. NAS file network protocols include FTP, HTTP and NFS, providing compatibility with data management and content editing systems, and allowing versatile operating software to manage files, users and permissions easily.
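The block-level versus file-level distinction drawn in this section can be illustrated with a toy model. This is only a sketch under stated assumptions: the `BlockDevice` and `FileServer` classes, the 4-byte block size and the file name `promo.mxf` are all hypothetical and do not correspond to any real SAN or NAS product or protocol.

```python
BLOCK_SIZE = 4  # bytes per block; unrealistically small so results are easy to read


class BlockDevice:
    """Toy stand-in for SAN-style storage: it only knows numbered blocks."""

    def __init__(self, num_blocks):
        self.blocks = [bytes(BLOCK_SIZE)] * num_blocks

    def write_block(self, index, data):
        # Pad short writes so every block has the same fixed size.
        self.blocks[index] = data.ljust(BLOCK_SIZE, b"\x00")

    def read_block(self, index):
        return self.blocks[index]


class FileServer:
    """Toy stand-in for NAS-style storage: block storage plus a file system."""

    def __init__(self, device):
        self.device = device
        self.table = {}      # file name -> (list of block numbers, length in bytes)
        self.next_free = 0   # next unused block number

    def write_file(self, name, data):
        indices = []
        for offset in range(0, len(data), BLOCK_SIZE):
            self.device.write_block(self.next_free, data[offset:offset + BLOCK_SIZE])
            indices.append(self.next_free)
            self.next_free += 1
        self.table[name] = (indices, len(data))

    def read_file(self, name):
        indices, length = self.table[name]
        raw = b"".join(self.device.read_block(i) for i in indices)
        return raw[:length]  # drop the padding added to the last block


device = BlockDevice(num_blocks=8)
nas = FileServer(device)
nas.write_file("promo.mxf", b"VIDEODATA")

file_level = nas.read_file("promo.mxf")  # file level: the client asks by name
block_level = device.read_block(1)       # block level: the client must know the block number
```

In this model the file-level client never sees block numbers, while the block-level client gets raw blocks and needs its own file system to make sense of them, which mirrors the interoperability point made above.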

Reliability and QC

For reliability, file storage systems will often be grouped into redundant clusters, with fail-safe prioritization. One common way of doing this is a Redundant Array of Independent Disks (RAID) storage cluster, which uses techniques such as mirroring (full redundancy), or mirroring combined with striping (distributed data), to keep all data accessible even if one or more drives fail, depending on the configuration. Mission-critical drive systems can also be equipped with redundant power supplies (or battery backup) and fans, with nearly every component hot swappable, including the drives. In addition to providing drive diagnostics, health monitoring modules are available that can anticipate and head off failures; capacity expansion can be as simple as adding more drive modules to an existing system.
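As a rough illustration of mirroring combined with striping, the sketch below stripes chunks of a clip across mirrored drive pairs and then simulates drive failures. The `MirroredStripeSet` class and the chunk names are hypothetical; real RAID controllers work on disk blocks and handle rebuilds, which this toy model ignores.

```python
class MirroredStripeSet:
    """Toy mirroring-plus-striping layout: data is striped across mirrored pairs."""

    def __init__(self, num_pairs):
        # Each pair holds two drives; each drive maps chunk number -> chunk data.
        self.pairs = [[{}, {}] for _ in range(num_pairs)]

    def write(self, chunks):
        for n, chunk in enumerate(chunks):
            pair = self.pairs[n % len(self.pairs)]  # striping: rotate across pairs
            for drive in pair:                      # mirroring: copy to both drives
                if drive is not None:
                    drive[n] = chunk

    def fail_drive(self, pair_index, drive_index):
        self.pairs[pair_index][drive_index] = None  # simulate a dead drive

    def read(self, count):
        result = []
        for n in range(count):
            pair = self.pairs[n % len(self.pairs)]
            survivors = [d for d in pair if d is not None]
            if not survivors:
                raise IOError(f"chunk {n} lost: both mirrors failed")
            result.append(survivors[0][n])
        return result


array = MirroredStripeSet(num_pairs=2)
clip = [b"GOP0", b"GOP1", b"GOP2", b"GOP3"]
array.write(clip)

array.fail_drive(0, 0)             # one drive in pair 0 fails
array.fail_drive(1, 1)             # one drive in pair 1 fails
recovered = array.read(len(clip))  # still complete: each pair has a survivor

array.fail_drive(0, 1)             # the second drive of pair 0 fails
try:
    array.read(len(clip))
    data_lost = False
except IOError:
    data_lost = True               # both copies of pair 0's chunks are gone
```

The point of the exercise is that how many drive failures an array survives depends on which drives fail: two failures in different pairs are tolerated here, but two failures in the same pair are not.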

Solid-state drives (SSD), which have gained popularity in small laptops and netbooks, are based on rapid-access Flash memory, and would seem to be the next phase of mass-storage technology. SSD memory, which offers higher speed and reliability than the Flash memory used in USB thumb drives, could offer performance gains compared with disk-based storage.

However, SSD is probably several years from practicality in the production environment, because of high cost, capacity limitations and unproven long-term reliability. Although a consumer-grade HDD currently runs about $100 for a 1TB (terabyte = 1000GB) 2.5in drive, an SSD of the same capacity and form factor would cost about $600. That’s about $0.10/GB for the HDD and $0.60/GB for the SSD. (One hour of uncompressed 10-bit 1080i requires 585GB.)
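The arithmetic behind these figures can be checked with a few lines of Python. This is a back-of-envelope sketch: the function names are illustrative, and the video calculation assumes 4:2:2 sampling (two 10-bit samples per pixel), a 1920x1080 active picture and 29.97 frames per second, none of which the text specifies. Under those assumptions the active picture alone comes to roughly 559GB per hour, somewhat below the 585GB quoted above, which presumably rests on different assumptions (for example, audio or ancillary data).

```python
def cost_per_gb(price_usd, capacity_gb):
    """Price per gigabyte for a drive of the given capacity."""
    return price_usd / capacity_gb


def uncompressed_gb_per_hour(width, height, bits_per_sample,
                             samples_per_pixel, frames_per_second):
    """Storage for one hour of uncompressed active picture, in GB (1GB = 10**9 bytes)."""
    bits_per_second = (width * height * samples_per_pixel
                       * bits_per_sample * frames_per_second)
    return bits_per_second / 8 * 3600 / 1e9


hdd_per_gb = cost_per_gb(100, 1000)  # $100 for 1TB, as quoted in the text
ssd_per_gb = cost_per_gb(600, 1000)  # $600 for 1TB, as quoted in the text

# Assumed parameters: 4:2:2 sampling, active picture only, 30000/1001 frames per second.
active_picture_gb = uncompressed_gb_per_hour(1920, 1080, 10, 2, 30000 / 1001)

# Cost of holding one hour of material, using the article's 585GB figure.
hdd_hour_cost = 585 * hdd_per_gb
ssd_hour_cost = 585 * ssd_per_gb
```

At the quoted prices, one hour of uncompressed material at 585GB works out to about $58.50 of HDD capacity versus about $351 of SSD capacity.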

Today’s broadcast/production workflow includes many component processes. On the content ingest side are satellite, Internet and mobile asset sources and interfaces. On the playout side are various destinations, configured for real-time and file-based I/O, that include wired and wireless interfaces. In between these sit the asset management, browsing, editing and archiving functions, which invariably rely on multiple storage solutions.

Transcoding to multiple platforms is already of growing importance, and with the ever-growing amount of content that must now be processed (much of it from amateur sources), quality control has become a critical issue. Although human operators served this function in the past, the sheer volume of production material now requires automated QC to keep up, much of it available offline, at faster-than-real-time speed. In addition to the high-level function of detecting (and correcting when possible) file errors, sophisticated software can now detect and re-process incorrect video formats (resolutions and frame rates) and levels (skin tones, black levels); digital artifacts like blockiness and contouring can be flagged for re-coding if possible. Software can now monitor audio characteristics as well, and provide a safeguard against dropouts and even check the now-important loudness requirements of ATSC A/85, EBU R 128 and EBU Tech 3341.
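A minimal sketch of the kind of automated check described above might compare each clip's metadata against a house specification and flag anything that needs re-processing. Everything here is hypothetical: the `ClipInfo` fields, the clip names and the target values are illustrative, and the loudness value is assumed to have been measured elsewhere by a proper meter. The -24 LKFS target with a 2dB window is used as an example house value in the spirit of ATSC A/85; the standard itself should be consulted before relying on it.

```python
from dataclasses import dataclass


@dataclass
class ClipInfo:
    name: str
    width: int
    height: int
    frame_rate: float
    loudness_lkfs: float  # assumed to be measured by a separate loudness meter


# Hypothetical house specification (assumed values, not taken from the article).
TARGET_RESOLUTION = (1920, 1080)
TARGET_FRAME_RATE = 29.97
TARGET_LOUDNESS_LKFS = -24.0
LOUDNESS_TOLERANCE_DB = 2.0


def qc_check(clip):
    """Return the list of checks this clip fails; an empty list means it passes."""
    issues = []
    if (clip.width, clip.height) != TARGET_RESOLUTION:
        issues.append("resolution")
    if abs(clip.frame_rate - TARGET_FRAME_RATE) > 0.01:
        issues.append("frame_rate")
    if abs(clip.loudness_lkfs - TARGET_LOUDNESS_LKFS) > LOUDNESS_TOLERANCE_DB:
        issues.append("loudness")
    return issues


clips = [
    ClipInfo("studio_feed", 1920, 1080, 29.97, -24.5),
    ClipInfo("viewer_upload", 1280, 720, 25.0, -14.0),
]

# Map each clip name to the checks it failed, ready to drive a transcode queue.
report = {clip.name: qc_check(clip) for clip in clips}
```

In this example the studio feed passes every check, while the amateur upload is flagged on all three and would be routed to transcoding and loudness correction.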

Future storage requirements

Content handlers and producers that have not yet upgraded to state-of-the-art file storage will soon hit a critical situation that will weigh heavily on their CAPEX plans. Although cloud solutions may offer a temporary fix, concerns over data security and ease of manipulation mean that a more permanent and integrated solution must be addressed now.

—Aldo Cugnini is a consultant in the digital television industry.