Why NextGen Video Monitoring Is Essential in the ATSC 3.0 Environment

Delivering a high quality of experience and quality of service for NextGen TV is critical

ATSC 3.0 is currently being deployed as the next-generation standard for digital television. The NextGen TV standard will enable the delivery of more interactive content, as well as improved video (e.g., UHD, HDR, WCG and HFR) and audio quality (e.g., Dolby AC-4, with support for Dolby Atmos). These improvements, however, do not come without issues. ATSC 3.0 imposes multi-delivery, multi-user requirements, which create inherent challenges for video quality monitoring, both when processing native UHD content and when handling content downconverted to a lower resolution.

As NextGen TV is more widely deployed, advanced monitoring solutions will be needed to detect and resolve video quality issues related to color gamut, block errors, upconversion to 4K resolution, motion tracking, the use of heavier compression and video coding scalability.

COLOR GAMUT

Color gamut issues will arise and must be addressed when transitioning to ATSC 3.0. Traditional color spaces typically use 8 bits per color component, based on ITU-R Recommendation BT.709 for HD and ITU-R Recommendation BT.601 for SD. However, ATSC 3.0 relies on ITU-R Recommendation BT.2020, which calls for 10 to 12 bits per component (and possibly even up to 16 bits) for UHD HDR with Wide Color Gamut content delivery.

Broadcasters need to be able to identify color gamut issues in Wide Color Gamut (WCG) content, either by correctly interpreting the content metadata or by using other inference methods. They also must be able to convert from a higher to a lower bit depth without visible distortion to ensure interoperability with legacy ITU-R Rec. BT.709 content. The color space plot in Fig. 1 shows the color space occupied by legacy Rec. 709 (HDTV, inner triangle) relative to the Rec. 2020 (UHDTV) color space (outer triangle).

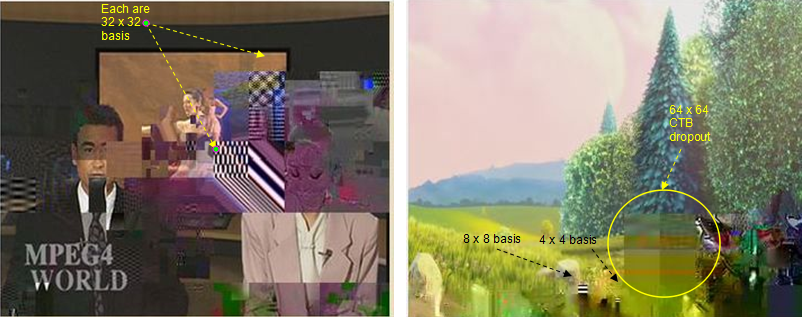
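One way a monitoring tool might flag WCG pixels that legacy Rec. 709 cannot represent is to convert linear-light BT.2020 RGB to BT.709 primaries and check whether any component leaves the 0 to 1 range. The sketch below is illustrative only: it assumes pixels are already linear-light and normalized, uses the commonly published BT.2020-to-BT.709 matrix rounded to four decimals, and the function names and tolerance are hypothetical rather than taken from any particular product.

```python
# Linear-light BT.2020 RGB -> BT.709 RGB matrix (commonly published values,
# rounded to four decimals). Input is assumed to be linear, normalized 0..1.
BT2020_TO_BT709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)


def bt2020_to_bt709(rgb):
    """Convert one linear BT.2020 RGB triplet to linear BT.709 RGB."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in BT2020_TO_BT709)


def is_out_of_709_gamut(rgb, tolerance=0.001):
    """True when the converted pixel falls outside the 0..1 BT.709 range.

    The tolerance (an assumption) absorbs rounding in the matrix coefficients.
    """
    return any(c < -tolerance or c > 1.0 + tolerance for c in bt2020_to_bt709(rgb))


def out_of_gamut_ratio(pixels, tolerance=0.001):
    """Fraction of pixels that BT.709 cannot represent without clipping."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    flagged = sum(is_out_of_709_gamut(p, tolerance) for p in pixels)
    return flagged / len(pixels)
```

Under these assumptions, reference white stays in gamut while a fully saturated BT.2020 green is flagged, since its BT.709 red and blue components become negative. A real system would also need to undo the transfer function and handle narrow-range coding before this step.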

BLOCK ERRORS

ATSC 3.0 also requires monitoring for a wider set of block and tile error geometries. That’s because ATSC 3.0 supports more modern codecs with better compression, such as HEVC, Google VP9 and H.264, which have more varied block geometries than legacy standards like MPEG-2 or MPEG-4. Fig. 2 shows a dropout capture with basis function geometries from 32x32 all the way down to 4x4.
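To illustrate why variable block sizes matter for monitoring, the sketch below scores a luma frame against several candidate grid sizes by comparing the average horizontal step on grid boundaries with the average step elsewhere. It is a deliberately simplified assumption-laden example (horizontal edges only, grid aligned to the frame origin, hypothetical function names), not a description of how any commercial detector works.

```python
def boundary_step_score(frame, block_size):
    """Mean horizontal luma step on the block grid minus the mean step elsewhere.

    `frame` is a list of rows of luma values. Only vertical block edges are
    examined, and the grid is assumed to start at column 0.
    """
    on_grid, off_grid = [], []
    for row in frame:
        for x in range(1, len(row)):
            step = abs(row[x] - row[x - 1])
            (on_grid if x % block_size == 0 else off_grid).append(step)
    if not on_grid or not off_grid:
        return 0.0
    return sum(on_grid) / len(on_grid) - sum(off_grid) / len(off_grid)


def dominant_block_size(frame, candidates=(4, 8, 16, 32)):
    """Return the candidate grid size with the strongest boundary steps."""
    scores = {n: boundary_step_score(frame, n) for n in candidates}
    return max(scores, key=scores.get), scores


def synthetic_blocky_frame(width=64, height=16, block_size=8):
    """Test pattern: flat blocks alternating between two luma levels."""
    return [
        [50 if (x // block_size) % 2 == 0 else 150 for x in range(width)]
        for _ in range(height)
    ]


size, scores = dominant_block_size(synthetic_blocky_frame(block_size=8))
print(size, scores)
```

A checker tuned only to the fixed 8x8 or 16x16 grids of older codecs would miss or misjudge errors on 4x4 or 32x32 boundaries, which is why the candidate list spans the full range of sizes mentioned above.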

UPCONVERTED RESOLUTIONS

Currently, native UHD content is largely limited to OTT streaming services like Netflix, Amazon Prime Video and Disney+, and platforms like YouTube. In the near to mid-term, a significant amount of content delivered as 4K will continue to be upconverted from native HD or even SD. The upconversion process causes inherent video quality issues, such as loss of detail and blur, as well as scaling of the block grid, burnt-in text and icon edges, digital noise and ghosting profiles.

MOTION TRACKING AND BLURRING

Given the larger canvas of 4K and 8K, the efficacy of existing tools that evaluate and assess motion will also need to be reconsidered.

Delivering UHD high frame-rate content, as proposed within ATSC 3.0, makes the viewer experience much more immersive because the user watches UHD content at a viewing distance close enough to discern all of the finer spatial detail of the content. The drawback of such an immersive experience is that motion blur, especially in high-motion sports events, becomes more noticeable. This can adversely impact viewers’ quality of experience.

All LCDs suffer from motion blur. In many cases, it can reduce visible resolution by up to 40% in regions with significant spatio-temporal activity. Without motion interpolation, even higher refresh rates do little to fix this motion blur, which can be accentuated on a big-screen display (especially since the true UHD experience is best achieved at an optimally close viewing distance from the panel).

HEAVIER COMPRESSION

The higher compression associated with 4K streaming makes the perceptual video quality for the end user more susceptible to packet loss, an issue that is made worse when bandwidth is constrained.

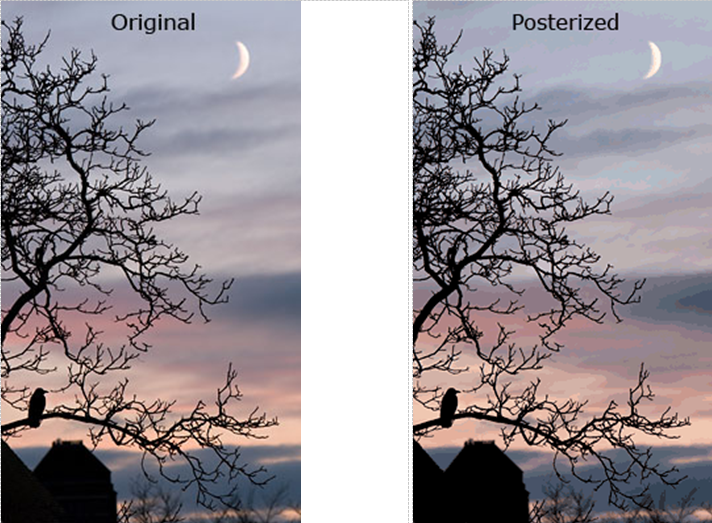

Heavier compression may also result in issues such as blocking, as well as banding artifacts (i.e., posterization effects) in gently varying backgrounds when a lower bit depth is used. Fig. 3 shows the effects of posterization.
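The link between bit depth and banding can be sketched numerically: quantize a gentle luminance ramp at 8 and 10 bits, then count how many distinct code values survive and how wide the widest flat band is. The ramp range, frame width and full-range code mapping below are assumptions chosen for illustration, and the helper names are hypothetical.

```python
def quantize(value, bit_depth):
    """Map a normalized 0..1 sample to the nearest full-range integer code."""
    max_code = (1 << bit_depth) - 1
    return min(max_code, max(0, round(value * max_code)))


def gradient(start, end, width):
    """Linear ramp of `width` normalized samples from `start` to `end`."""
    return [start + (end - start) * x / (width - 1) for x in range(width)]


def band_stats(samples, bit_depth):
    """Return (distinct code values, widest run of identical codes in pixels)."""
    codes = [quantize(v, bit_depth) for v in samples]
    longest = run = 1
    for prev, cur in zip(codes, codes[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return len(set(codes)), longest


# A gently varying background: 5% of the signal range across a 1920-pixel row.
ramp = gradient(0.40, 0.45, 1920)
for depth in (8, 10):
    levels, widest = band_stats(ramp, depth)
    print(f"{depth}-bit: {levels} levels, widest band {widest} px")
```

On this ramp the 10-bit version keeps roughly four times as many levels as the 8-bit version, so its bands are correspondingly narrower and less visible. Compression that effectively discards low-order bits pushes a 10-bit signal back toward the 8-bit behavior.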

At high brightness levels, compression artifacts on Dolby PQ or HDR10-type panels can be accentuated if bitrates are not sufficiently high. This can be explained by the steepness of the ST 2084 EOTF curve (luminance L vs. 10-bit code value) in brighter areas. Performing compression-related checks for blockiness and tiling, ringing and mosquito noise is essential.
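The steepness of the curve can be made concrete with a short calculation of the ST 2084 (PQ) EOTF and the luminance change produced by a single code-value step. This is a sketch under a simplifying assumption: 10-bit codes are mapped full range (code / 1023), whereas broadcast video normally uses narrow-range coding. The function names are hypothetical.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal):
    """ST 2084 EOTF: normalized non-linear signal (0..1) -> luminance in cd/m^2."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def nits_per_code(code, bit_depth=10):
    """Luminance change for a one-code step, assuming full-range code mapping."""
    max_code = (1 << bit_depth) - 1
    return pq_eotf((code + 1) / max_code) - pq_eotf(code / max_code)


for code in (300, 600, 900):
    level = pq_eotf(code / 1023)
    print(f"code {code}: {level:.2f} cd/m^2, step {nits_per_code(code):.4f} cd/m^2")
```

Because one code value spans far more absolute luminance near the top of the range than in the shadows, a coding error of a few code values in a highlight corresponds to a much larger luminance error, which is consistent with artifacts becoming more visible in bright regions at low bitrates.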

SCALABILITY

By leveraging scalable video coding, the industry can address receiver compatibility, that is, whether existing BT.709/BT.601 SDR displays can present HDR content. This is important because broadcasters delivering UHD HDR content want to ensure that legacy viewers don’t miss out. The content needs to be backwards compatible with legacy or traditional HD and SD display technology.

Two basic approaches have been discussed: single layer and multilayer. With the single-layer approach, the compressed content is created with HDR, and the associated metadata is used to convert or map the HDR content to a standard SDR panel for backwards compatibility. With a multilayer approach, an SDR version of the HDR content is decompressed for legacy viewing, wherein the associated metadata containing HDR-related information is ignored. For HDR viewing, the associated metadata is used in a subsequent post-processing stage to achieve the tone rendering for targeted HDR performance.

Both approaches use extended metadata for image reconstruction: the metadata reconstructs the SDR rendition in the single-layer case and the HDR rendition in the multilayer case. With each approach, the adequate and appropriate usage of metadata for image reconstruction within the allocated dynamic range is crucial. Moreover, issues with out-of-gamut color mapping or localized contouring due to tone mapping may need to be factored into video quality control when scalable approaches are implemented.

WHY NEXTGEN MONITORING IS ESSENTIAL

Delivering a high quality of experience and quality of service for NextGen TV is critical. While ATSC 3.0 has the capacity to deliver better video and audio quality, the complexity of the ecosystem can also intensify issues, which must be addressed if broadcasters want to maintain viable UHD video quality.

By deploying a real-time content monitoring system for IP-based infrastructures, broadcasters can confidently deliver error-free, superior-quality video. The monitoring system will examine all aspects of video streams and perform important video and audio checks to detect issues that may be more prevalent in the ATSC 3.0 environment, such as those related to color gamut, block errors, upconverted resolutions, and motion tracking and blurring.

With a high degree of visibility into video and audio quality issues, broadcasters can successfully move forward with NextGen TV and offer the exceptional quality that ATSC 3.0 is designed to enable.

Advait Mogre is principal scientist at Interra Systems.