Sinclair Taps ‘Cloud Power’ for Daily Program Origination

HUNT VALLEY, Md.—Is the public cloud really ready for primetime?

After more than a year of satisfactory experience using the public cloud for daily distribution of content to some 200 stations, at least one broadcaster thinks so. Sinclair Broadcast Group, one of the largest owners and operators of U.S. TV stations, has been using the public cloud for automated content storage, playout, and ad insertion in connection with a three-hour daily programming block. Operation in the cloud began in September 2017, and according to Don Roberts, Sinclair’s senior director of television systems, the results have been very satisfactory.

“We’d been discussing how we could enter the ‘cloud’ playout environment for some time, and this was the perfect opportunity to try it out,” he said, adding that while it would have been relatively easy for Sinclair to construct its own playout facility, or utilize existing equipment and personnel to accommodate the new program block, the group felt that movement to the cloud by broadcasters is inevitable, and this was a good opportunity to test the technology.

“This was to be the great experiment,” said Roberts, noting, however, that as this was territory that had never been explored, it seemed prudent to have a fallback plan ready, just in case.

“We had a NOC [network operations center] located at our West Palm Beach, Fla. station, which in addition to operating multiple TV stations, is also providing operational support for two of our emerging networks, ‘Comet’ and ‘Charge,’” said Roberts. “We had previously run a sports network from that facility, so there was premium hardware already installed with sufficient capacity to run the programming if the cloud system didn’t work out. This was designated as our ‘Plan B.’”

And there was even more backup waiting in the wings.

“We also had an additional playout facility installed at our Las Vegas station which could handle things if necessary. This was our ‘Plan C.’”

(And as things would work out, Roberts admits that he was glad he had these backup scenarios fully developed and equipment ready to go.)

DEVELOPING THE PLAN

In creating the new programming block, there was obviously a lot more to be done than contracting for cloud services, as these would only be used in connection with content storage and playout.

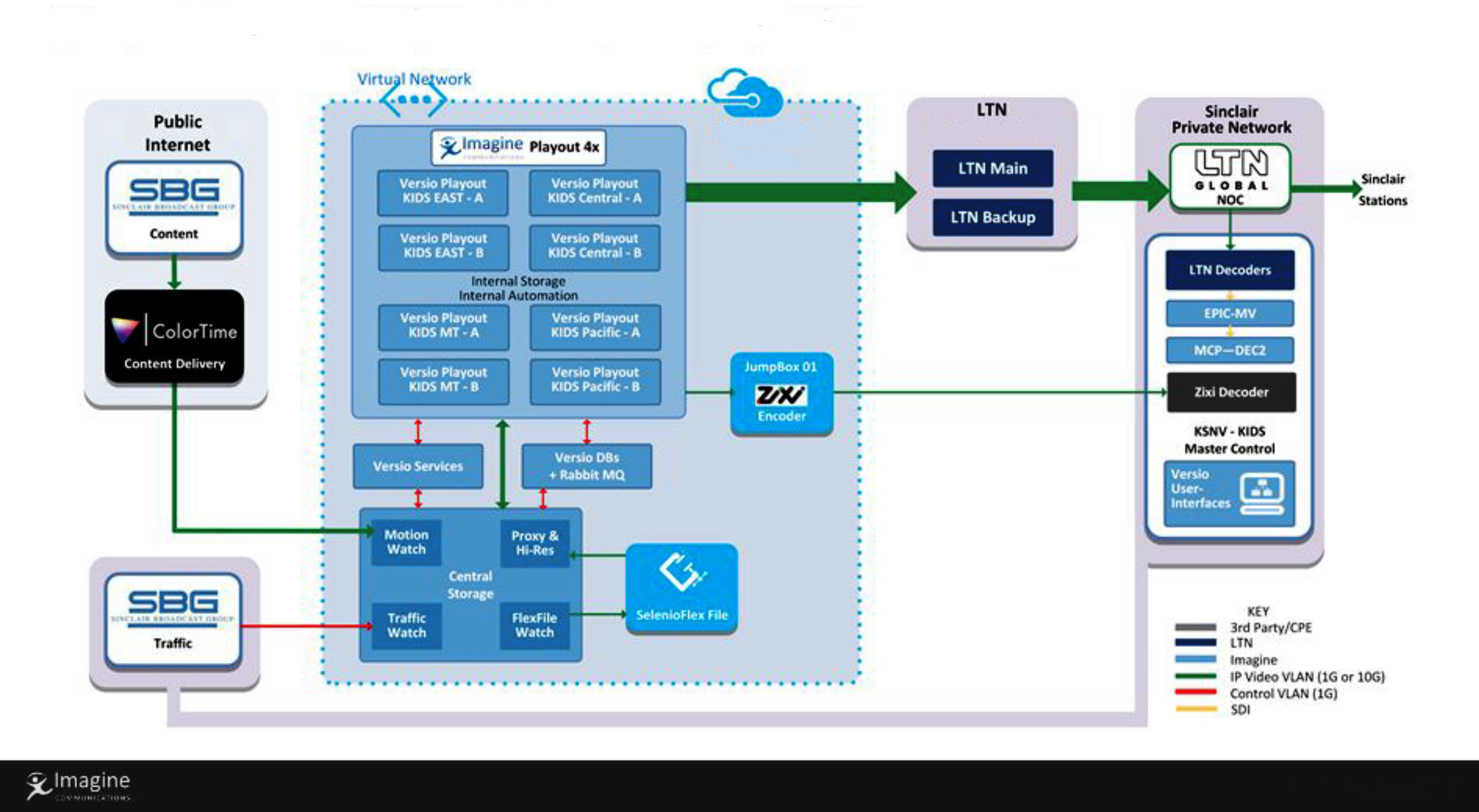

“We have content coming in from all over the world in many formats,” said Roberts. “We brought in a post house in Burbank—Colortime—to handle format conversion, color correction and other requirements in getting this content ready to air. They proved to be an instrumental part of the content post work, as well as a partner in developing an XML workflow for us.”

Imagine Communications was tasked with providing the necessary automation via their software-based Versio Platform, which is tailored for virtualized environments. In addition to automatically handling ingest of fresh content and four daily scheduled playouts of the program package to accommodate time zone requirements, the system handles branding, insertion of ads and ancillary material, and communicates with Sinclair’s traffic operations to accommodate schedule updates and last-minute ad changes.

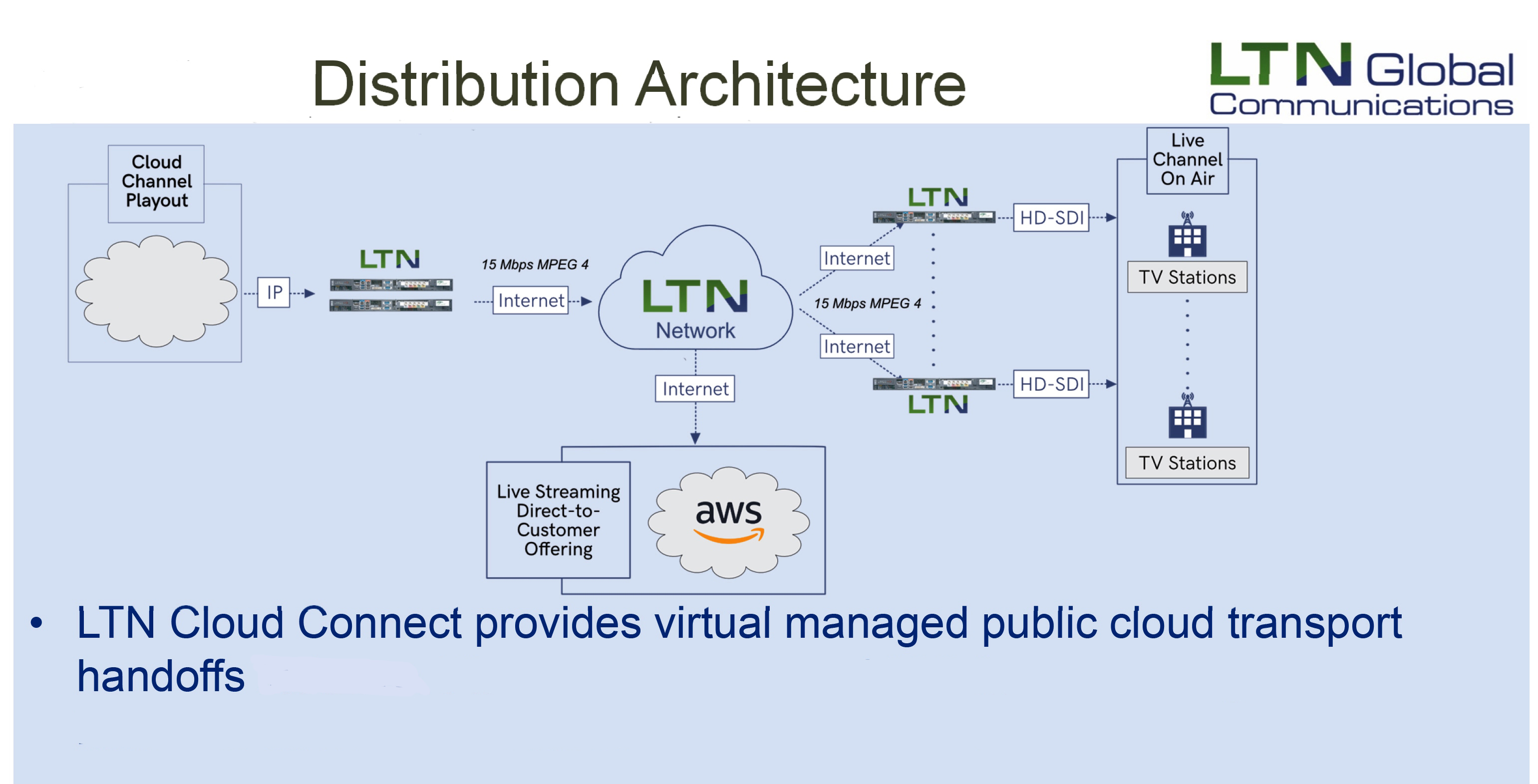

One additional element—the real-time delivery of the program stream handed off from the cloud to stations airing the feeds—is handled by Savage, Md.-based LTN Global Communications. LTN receives the IP packet stream from the public cloud, performs monitoring and distribution operations, and is responsible for delivery to individual TV stations and conversion of the IP data to SDI video.

“We had planned on doing about three weeks of run-throughs before the target launch date,” said Roberts. “We started during that three-week window, but never ran through an entire playlist with commercials and bugs and everything else until about a week before the launch. This didn’t go perfectly; we identified some problems and we were anticipating that the problems we’d identified would be fixed by the time we launched, but they weren’t.”

Roberts said that in general, the major difficulties involved the embedded automation system’s operation in the cloud environment, connectivity between the Versio platform and the LTN video transport platform, and monitoring at the West Palm Beach facility.

BACK TO THE DRAWING BOARD

The July 1, 2017 startup date finally rolled around, and everything appeared to be ready. Soon after the launch, however, a number of things didn’t go quite as planned, and no champagne corks were popped.

“It didn’t quite live up to our expectations,” said Roberts. “Things didn’t always run in the order we wanted; the system would go to a break, then either skip around or play the same segment over—things like that.”

After trying unsuccessfully for a couple of days to make the cloud approach work, the decision was made to go with “Plan B,” the West Palm Beach playout arrangement, so that the kinks could be worked out; however, as everyone involved soon found out, this wasn’t going to happen overnight.

“‘Plan B’ was not intended to be a long-term solution,” said Roberts. “But we stayed with it for about three weeks, and then switched again to ‘Plan C,’ moving the operation to Las Vegas on July 24, as the setup there was better suited for playout of multiple channels.”

Roberts noted that all vendors involved really wanted to see this cloud “first” succeed, and everyone was very proactive in the troubleshooting and resolution, not letting up until the system performed as expected.

“When we experienced these problems, Imagine and LTN didn’t just throw up their hands; they burned the midnight oil,” he said. “They bent over backwards and put every asset they had into fixing this,” he added, noting that this cloud playout scenario was really uncharted territory for all players. “We were all making it up as we went along.”

After more than two months of almost non-stop diagnostic work, head scratching, and “cut-and-try,” the cloud system was finally stable. On Sept. 18, programming origination moved back to the cloud and has been there ever since.

LESSONS LEARNED

Roberts said that much useful information about operating on someone else’s hardware was gleaned during the shakedown of the new program service. Perhaps the biggest “unanticipated consequence” arose because the new service does not operate on a 24/7 basis. (The programming block only runs three hours each day, with four time zone feeds.) Ordinarily this would not be an issue. In cloud environments, however, storage is handled on a multitude of platforms and the servers assigned are apt to change from day to day, with no guarantee that VMs (virtual machines) and their MAC addresses will stay the same. As was quickly discovered, such changes can really upset an automation system that wants to see the same hardware all the time.
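A minimal Python sketch can illustrate why a changing MAC address upsets software that expects fixed hardware. It is not Imagine's licensing code: the function names, the MAC addresses, and the deployment ID are all hypothetical, and binding a license to an operator-assigned ID is only one possible alternative, assumed here for contrast.

```python
import hashlib


def fingerprint(identifier: str) -> str:
    """Return a short digest of whatever identifier a license is bound to."""
    return hashlib.sha256(identifier.encode("utf-8")).hexdigest()[:16]


def license_valid(bound_fingerprint: str, current_identifier: str) -> bool:
    """A license check passes only if the current identifier matches the bound one."""
    return fingerprint(current_identifier) == bound_fingerprint


# Hypothetical values, made up for illustration only.
monday_mac = "02:00:00:aa:bb:01"      # VM assigned on the first day
tuesday_mac = "02:00:00:cc:dd:02"     # a different VM assigned the next day
deployment_id = "playout-channel-1"   # assigned by the operator, not by the hardware

mac_bound = fingerprint(monday_mac)
id_bound = fingerprint(deployment_id)

# The MAC-bound license stops matching once the cloud hands out a new VM.
mac_check_after_restart = license_valid(mac_bound, tuesday_mac)
# The ID-bound license is unaffected by the change of VM.
id_check_after_restart = license_valid(id_bound, deployment_id)

print("MAC-bound license valid after restart:", mac_check_after_restart)
print("ID-bound license valid after restart:", id_check_after_restart)
```

A service that runs around the clock rarely meets this case because its VM is seldom released. A service that runs only a few hours a day may be started on a different VM each time.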

Philip Grossman, vice president of solutions architecture and engineering at Imagine Communications, acknowledged that the ever-changing server MAC addresses were a big problem in trying to develop a stable system, but there was another issue that was equally troublesome.

“We’d resolved the MAC address issue by changing our licensing model so that things weren’t tied directly to underlying MAC addresses,” said Grossman. “Then we found that there were network bandwidth differences between different racks of servers. We’d be sending out a 20 Mbps stream and their initial network interfaces were for 10 Mbps. Actually, it became clear that the public cloud was not necessarily ready for broadcast television and there were a number of issues discovered as we tried to start up the service. We had to step through each of these one-at-a-time.”
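The bandwidth mismatch Grossman describes comes down to simple arithmetic, sketched below in Python. The 20 Mbps stream and 10 Mbps interface figures come from his account; the function name, the 25 percent headroom margin, and the other interface speeds are assumptions added for illustration.

```python
def has_headroom(stream_mbps: float, interface_mbps: float, headroom: float = 0.25) -> bool:
    """Return True if the interface can carry the stream plus a safety margin.

    headroom is an assumed fractional margin (0.25 means 25 percent extra).
    """
    if stream_mbps <= 0 or interface_mbps <= 0:
        raise ValueError("bit rates must be positive")
    return stream_mbps * (1.0 + headroom) <= interface_mbps


stream_mbps = 20.0  # stream rate cited in the article

# 10 Mbps is the interface speed cited in the article; 100 and 1000 are assumed examples.
for interface_mbps in (10.0, 100.0, 1000.0):
    verdict = "ok" if has_headroom(stream_mbps, interface_mbps) else "too small"
    print(f"{stream_mbps:.0f} Mbps stream on {interface_mbps:.0f} Mbps interface: {verdict}")
```

Running a check of this kind against each assigned VM before going to air is one way such a mismatch might be caught early, though the article does not say how the problem was actually detected.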

However, Grossman said the cloud operator was very proactive regarding the difficulties, and in the end, resolution involved fixes on both sides.

“They changed the way it was doing some things and we adapted our Versio architecture on top of that. Everyone worked together to try and find an optimal solution.”

He noted that other shortcomings which surfaced, such as instances of jitter and packet loss, were resolved by increasing VM resources in the gateway between Imagine and LTN.

Jonathan Stanton, vice president of technology at LTN Global, noted too that one of the lessons learned from debugging this initial cloud tryout was the importance of multiple vendors working together. The new cloud environment also needs new standards to help govern the way in which various entities involved interface with each other.

“I think standards for interconnections in this broadcast cloud environment are very important,” said Stanton. “The broadcast world has a big diverse ecosystem of providers and we need to interoperate with each other.”

WILL THERE BE AN ENCORE PERFORMANCE?

Would Roberts do it over again, given the chance?

“Definitely,” he said. “Even though things got off to a bumpy start, it was a great experiment, and we learned a lot. We certainly attained the goal that we wanted to attain and proved to ourselves that this technology can work.”

With the new programming service now a success, does Sinclair have plans for more cloud utilization?

“We’ll ultimately roll out all of our stations in some sort of cloud-based technology,” said Roberts. “This will probably be a private cloud. It’s still a work in progress.”

And what about advice and suggestions for other broadcasters considering tapping the cloud?

“Allow plenty of time,” said Roberts. “We soon found that 75 days wasn’t realistic. Also, it’s hard to know what you want to do when it’s an unproven technology. You learn as you go and you adjust as you go and you have to be flexible enough to roll with the punches. We learned that there were some aspects of this that were nothing like we expected. The other vendors also learned a lot. In addition, we planned alternatives if the cloud didn’t work as expected. This was very important.

“You have to have a set of goals and a very clear sense of how you want it to operate. It’s not like a standard broadcast facility; it’s not like that at all.”

James E. O’Neal has more than 50 years of experience in the broadcast arena, serving for nearly 37 years as a television broadcast engineer and, following his retirement from that field in 2005, moving into journalism as technology editor for TV Technology for almost the next decade. He continues to provide content for this publication, as well as sister publication Radio World, and others. He authored the chapter on HF shortwave radio for the 11th Edition of the NAB Engineering Handbook, and serves as contributing editor of the IEEE’s Broadcast Technology publication, and as associate editor of the SMPTE Motion Imaging Journal. He is a SMPTE Life Fellow, and a member of the SBE and Life Senior Member of the IEEE.